To test Ceph tiering functionality, two RADOS pools are required. If you are running these examples on laptop or desktop hardware, spinning disk-based OSDs can be used to create the pools, but SSDs are highly recommended if you intend to read and write any data. If you have multiple disk types available in your testing hardware, the base tier can be placed on spinning disks and the top tier on SSDs.
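On Ceph Luminous or later, where each OSD carries a device class, one possible way to pin each tier to a disk type is a pair of CRUSH rules. The following is only a minimal sketch under several assumptions: the rule names base-hdd and top-ssd are hypothetical, the CRUSH root is assumed to be default, and the failure domain is set to osd on the assumption of a single-host test machine. The script prints the commands rather than running them unless CEPH=ceph is set.

```shell
#!/bin/sh
# Dry run by default: each command is printed, not executed.
# Set CEPH=ceph in the environment to run against a real cluster.
CEPH=${CEPH:-echo ceph}

create_class_rules() {
    # Hypothetical rule names; root "default" and failure domain "osd"
    # are assumptions suited to a single-host test setup.
    $CEPH osd crush rule create-replicated base-hdd default osd hdd
    $CEPH osd crush rule create-replicated top-ssd default osd ssd
}

create_class_rules
```

Once a pool exists, it can be pointed at one of these rules with ceph osd pool set <pool> crush_rule <rule>.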

Let's create the tiers using the following commands. The pools are created with the ceph osd pool create command, and the tiering steps make use of the ceph osd tier command:

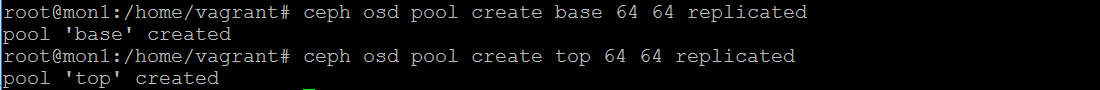

- Create two RADOS pools:

ceph osd pool create base 64 64 replicated

ceph osd pool create top 64 64 replicated

The preceding commands give the following output:

- Create a tier consisting of the two pools:

ceph osd tier add base top

The preceding command gives the following output:

- Configure the cache mode:

ceph osd tier cache-mode...
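The pool-creation and tier-add steps above can be collected into a small script. This is a sketch, not part of the original procedure: the create_tier function and the CEPH variable are hypothetical helpers introduced here, and the cache-mode step is deliberately left out. By default the script only prints the commands; set CEPH=ceph to run them against a test cluster.

```shell
#!/bin/sh
# Dry run by default: each command is printed, not executed.
# Set CEPH=ceph in the environment to run against a real cluster.
CEPH=${CEPH:-echo ceph}
set -e  # stop at the first failing command

# create_tier BASE TOP PGS: hypothetical helper that creates two
# replicated pools with PGS placement groups each, then layers TOP
# over BASE as a tier.
create_tier() {
    base=$1
    top=$2
    pgs=$3
    $CEPH osd pool create "$base" "$pgs" "$pgs" replicated
    $CEPH osd pool create "$top" "$pgs" "$pgs" replicated
    $CEPH osd tier add "$base" "$top"
}

# Same pool names and PG counts as the commands in the text.
create_tier base top 64
```

Defaulting to a dry run is a deliberate choice here, since these commands change cluster state; reviewing the printed commands first is cheap.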