Data Pump export (expdp) is the database utility used to export data, it generates a file in a proprietary format. The generated file format is not compatible with the one generated by the old export (exp) utility.

Data Pump export modes define the different operations that are performed with Data Pump. The mode is specified on the command line using the appropriate parameters. Data Pump has the following modes:

Full export mode: This mode exports the whole database; this requires the user to have the

exp_full_databaserole granted.Schema mode: This mode selects only specific schemas, all objects belonging to the listed schemas are exported. When using this mode you should be careful, if you direct a table to be exported and there are objects such as triggers which were defined using a different schema, and this schema is not explicitly selected then the objects belonging to this schema are not exported.

Table mode: The tables listed here are exported, the list of tables, partitions, and dependent objects are exported. You may export tables belonging to different schemas, but if this is the case then you must have the

exp_full_databaserole explicitly granted to be able to export tables belonging to different schemas.Tablespace mode: This mode allows you to export all tables belonging to the defined tablespace set. The tables along with the dependent objects are dumped. You must have the

exp_full_databaserole granted to be able to use this mode.Transportable tablespace mode: This mode is used to transport a tablespace to another database, this mode exports only the metadata of the objects belonging to the target set of listed tablespaces. Unlike tablespace mode, transportable tablespace mode requires that the specified tables be completely self-contained.

Data Pump Export provides different working interfaces such as:

Command line interface: The command line interface is the default and the most commonly used interface. Here the user must provide all required parameters from the OS command line.

Parameter file interface: In this mode the parameters are written in a plain text file. The user must specify the parameter file to be used for the session.

Interactive command line interface. This is not the same interactive command line most users know from the regular exp command. This interactive command line is used to manage and configure the running jobs.

Now let's start with our first simple Data Pump export session. This will show us some initial and important features of this tool.

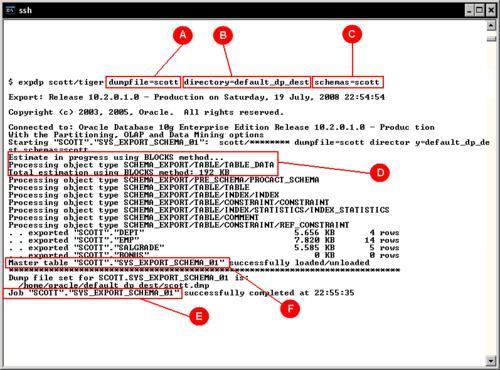

Here we will start with a basic Data Pump session to perform a simple logical backup. The command expdp has the following arguments:

Initially we define a Dumpfile(A) . As it will be managed by means of a database directory object(B) it is not necessary to define the path where the dump file will be stored. Remember, the directory objects were previously defined at the database level. This session will export a user's Schema(C) . No other parameters are defined at the command prompt and the session begins.

It can be seen from the command output that an estimation(D) takes place; this estimates the size of the file at the file system, and as no other option for the estimation was defined at the command line it is assumed the BLOCKS method will be used. The estimation by means of the BLOCKS method isn't always accurate, as it depends on the blocks sampled. Block density is a meaningful error factor for this estimation, it is better to use STATISTICS as the estimation method.

At the output log, the Master table(F) where the job running information is temporarily stored can be seen. The job name takes a default name(E) . It is a good practice to define the job name and not let Oracle define it at execution time, if a DBA names the Job, it will be easier to reference it at a later time.

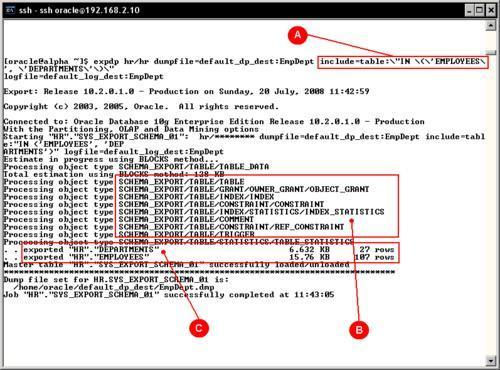

At the command line, filtering options can be specified. In this example, it is used to define the tables to export, but we can also specify whether the dump file will (or will not) include all other dependent objects.

The include (A) and exclude options are mutually exclusive, and in this case as include was declared at the command line and it requires special characters, those must be escaped so the OS doesn't try to interpret them. When a longer include or exclude option is required, it is better to use a parameter file, where the escape characters are not required.

All the filtered objects (C) to be exported were saved in the dump file along with their dependent objects (B). If you change the command line with the following, it will prevent all the indexes being exported:

$ expdp hr/hr dumpfile=default_dp_dest:EmpDeptNoIndexes tables=EMPLOYEES,DEPARTMENTS exclude=INDEX:\"LIKE \'\%\'\" logfile=default_log_dest:EmpDeptNoIndexes

As can be seen, the exclude or include clause is actually a where predicate.

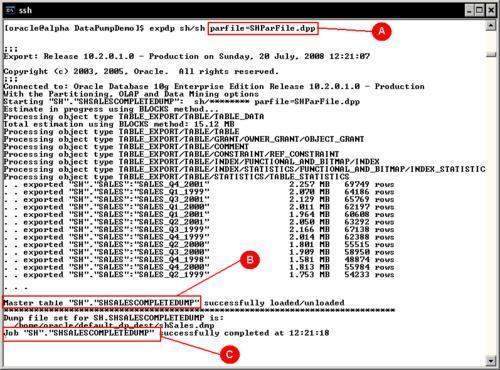

Using a parameter file simplifies an otherwise complex to write command line, it also allows the user to define a library of repeatable operations, even for simple exports. As previously seen, if a filtering (object or row) clause is used—some extra operating system escape characters are required. By writing the filtering clauses inside a parameter file, the command line can be greatly simplified.

Comparing this command line (A) against the previously exposed command lines, it can be seen that it is more readable and manageable. The SHParFile.dpp file from the example contains these command options:

USERID=sh/sh DUMPFILE=shSales DIRECTORY=default_dp_dest JOB_NAME=shSalesCompleteDump TABLES=SALES LOGFILE=default_log_dest:shSales

The parameter file is a plain text format file. You may use your own naming conventions. Oracle regularly uses .par for the parameter files, in this case it used .dpp to denote a Data Pump parameter file. The file name can be dynamically defined using environment variables, but this file name formatting is beyond the scope of Oracle and it exclusively depends on the OS variable management.

JOBNAME (C) is the option to specify a non-default job name, otherwise oracle will use a name for it. It is good practice to have the job name explicitly defined so the user can ATTACH to it at a later time, and related objects such as the Master table (B) can be more easily identified.

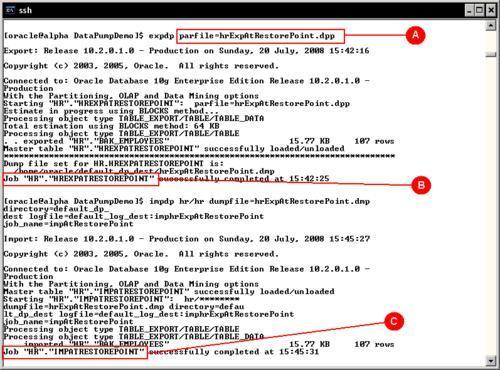

In some circumstances, it may be useful to export the image of a table the way it existed before a change was committed. If the database is properly configured, the database flashback query facility—also integrated with dpexp—may be used. It is useful for obtaining a consistent exported table image.

In this example a copy of the original HR.EMPLOYEES table is made (HR.BAK_EMPLOYEES), and all the tasks will update the BAK_EMPLOYEES table contents. A Restore Point is created so that you can easily find out the exact time stamp when this change took place:

SQL> CREATE RESTORE POINT ORIGINAL_EMPLOYEES;

Restore point created.

SQL> SELECT SCN, NAME FROM V$RESTORE_POINT;

SCN NAME

---------- --------------------------------

621254 ORIGINAL_EMPLOYEES

SQL> SELECT SUM(SALARY) FROM EMPLOYEES;

SUM(SALARY)

-----------

691400

This is the way data was, at the referred SCN. This number will be used later, to perform the expdp operation and retrieve data as it was, at this point in time.

Next a non-reversible update on the data takes place.

SQL> UPDATE BAK_EMPLOYEES SET SALARY=SALARY*1.1;

107 rows updated.

SQL> COMMIT;

Commit complete.

SQL> SELECT SUM(SALARY) FROM BAK_EMPLOYEES

SUM(SALARY)

-----------

760540

Here we have a time reference and the goal is to restore data as it was.

Below are the contents of the data pump parameter file used to retrieve data.

USERID=hr/hr DIRECTORY=default_dp_dest DUMPFILE=hrExpAtRestorePoint JOB_NAME=hrExpAtRestorePoint TABLES=BAK_EMPLOYEES LOGFILE=default_log_dest:hrExpAtRestorePoint FLASHBACK_SCN=621254

The parameter FLASHBACK_SCN states the point in time from when the table is to be retrieved.

Once the backup is taken, the current table is dropped. When the import takes place it rebuilds the table with the data, as it was before. The import parameter file has been temporarily modified so it defines the log file name, and it includes only the minimum required parameters for the impdp task (C).

USERID=hr/hr DIRECTORY=default_dp_dest DUMPFILE=hrExpAtRestorePoint JOB_NAME=ImpAtRestorePoint TABLES=BAK_EMPLOYEES LOGFILE=default_log_dest:hrImpAtRestorePoint

Once the import job is finished, a query to the current table shows the data 'as it was', prior to the update command.

SQL> select sum(salary) from bak_employees;

SUM(SALARY)

-----------

691400

Proactively estimating the amount of space required by an export file prevents physical disk space shortages. Data Pump has two methods to estimate the space requirements: Estimation by block sampling (BLOCKS) or estimation by object statistics (STATISTICS).

ESTIMATE={BLOCKS | STATISTICS}

BLOCKS—The estimate is calculated by multiplying the number of database blocks used by the target objects times the appropriate block sizes.

STATISTICS—The estimate is calculated using statistics for each table. For this method to be as accurate as possible, all tables should have been analyzed recently.

The second method leads to more accurate results and can be performed in a more efficient way than the BLOCKS method; this method requires reliable table statistics.

It can be seen from an export execution, that space estimation is always carried out, and the default estimation method is BLOCKS. The BLOCKS method is used by default as data blocks will always be present at the table, while the presence of reliable statistics cannot be taken for granted. From performance and accuracy perspectives it is not the best choice. It takes longer to read through the whole table, scanning the data block to estimate the space required by the dump file. This method may not be accurate as it depends on the block data distribution. This means that it assumes all block data is evenly distributed throughout all the blocks, which may not be true in every case, leading to inaccurate results. If the STATISTICS keyword is used, it is faster; it only has to estimate the file size from the information already gathered by the statistics analysis processes.

Taking the export of the SH schema with the ESTIMATE_ONLY option and the option BLOCKS, the estimation may not be as accurate as the STATISTICS method. As these test results shows:

|

ESTIMATE_ONLY |

Reported Estimated Dump File Size |

|---|---|

|

BLOCKS |

15.12 MB |

|

STATISTICS |

25.52 MB |

|

ACTUAL FILE SIZE |

29.98 MB |

From the above results, it can be seen how important it is to have reliable statistics at the database tables, so any estimation performed by data pump can be as accurate as possible.

Data Pump export is an exporting method that is faster than the old exp utility. Export speed can between 15 and 45 times faster than the conventional export utility. This is because the original export utility uses only conventional mode inserts, whereas Data Pump export uses the direct path method of loading, but in order for it to reach the maximum possible speed it is important to perform the parallel operations on spindles other than those where the database is located. There should be enough I/O bandwidth for the export operation to take advantage of the dump file multiplexing feature.

The options used to generate an export dump in parallel with multiplexed dump files are:

USERID=sh/sh DUMPFILE=shParallelExp01%u,shParallelExp02%u DIRECTORY=default_dp_dest JOB_NAME=shParallelExp TABLES=SALES LOGFILE=default_log_dest:shParallelExp ESTIMATE=statistics PARALLEL=4

Notice the %u flag, which will append a two digit suffix to the Data Pump file. These options will direct export data pump to generate four dump files which will be accessed in a round robin fashion, so they get uniformly filled.

The resulting export dump files are:

shParallelExp0101.dmp shParallelExp0102.dmp shParallelExp0201.dmp shParallelExp0202.dmp

Data Pump allows data transfers among different Oracle versions that support the feature. (Note the feature was introduced in Oracle Database 10g Release. 1). The database must be configured for compatibility of 9.2.0 or higher. This feature simplifies data transfer tasks. In order for this to work it is important to consider the source version versus the destination version. It works in an ascending compatible mode, so a Data Pump export taken from a lower release can always be read by the higher release, but an export taken from a higher release must be taken with the VERSION parameter declaring the compatibility mode. This parameter can either take the value of COMPATIBLE (default) which equals the compatible instance parameter value, LATEST, which equals the metadata version or any valid database version greater than 9.2.0. This last statement doesn't mean Data Pump can be imported on a 9.2.0 database. Rather, it stands for the recently migrated 10g databases which still hold the compatible instance parameter value set to 9.2.0.

If the COMPATIBLE parameter is not declared an export taken from a higher release won't be read by a lower release and a run time error will be displayed.

When performing data transfers among different database versions, you should be aware of the Data Pump compatibility matrix:

Data Pump client and server compatibility:

|

|

10.1.0.X |

10.2.0.X |

11.1.0.X |

|---|---|---|---|

|

10.1.0.X |

Supported |

Supported |

Supported |

|

10.2.0.X |

NO |

Supported |

Supported |

|

11.1.0.X |

NO |

NO |

Supported |

Each Oracle version produces a different Data Pump file version, when performing expdp/impdp operations using different Data Pump file versions you should be aware of the file version compatibility.

|

Version Data Pump Dumpfile Set |

Written by database with compatibility |

Can be imported into Target | ||

|---|---|---|---|---|

|

10.1.0.X |

10.2.0.X |

11.1.0.X | ||

|

0.1 |

10.1.X |

Supported |

Supported |

Supported |

|

1.1 |

10.2.X |

No |

Supported |

Supported |

|

2.1 |

11.1.X |

No |

No |

Supported |

Data Pump is meant to work as a batch utility, but it also has a prompt mode, which is known as the interactive mode. It should be emphasized that the data pump interactive mode is conceptually different from the old interactive export/import mode. In this release, the interactive mode doesn't interfere with the currently running job, it is used to control some parameter of the running job, such as the degree of parallelism, kill the running job, or resume job execution in case of a temporary stop due to lack of disk space.

In order for the user to ATTACH to a running job in interactive mode, the user must issue the Ctrl-C keystroke sequence from an attached client. If the user is running on a terminal different from the one where the job is running, it is still possible to attach to the running job by means of the explicit ATTACH parameter. It is because of this feature that it is useful to not let Oracle define the job name.

Once attached there are several commands that can be issued from the open Data Pump prompt:

|

Command |

Description (Default) |

|---|---|

|

|

Return to logging mode. Job will be re-started if idle |

|

|

Quit client session and leave the job running |

|

|

Summarize interactive commands |

|

|

Detach and delete job |

|

|

Change the number of active workers for current job |

|

|

Start/resume current job. |

|

|

Frequency (seconds) job status is to be monitored where the default (0) will show new status when available |

|

|

Orderly shutdown of job execution and exits the client. |

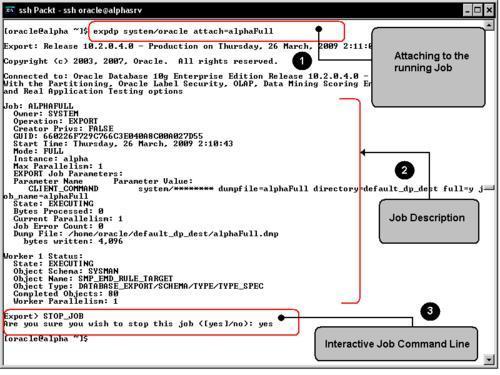

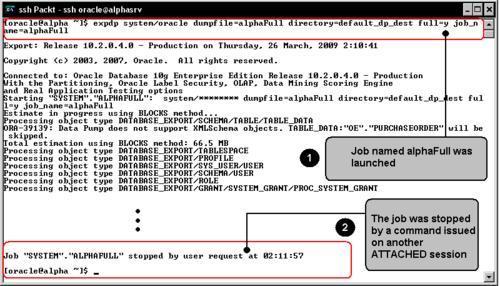

In this scenario the expdp

Command Line Interface (CLI) is accessed to manage a running job. First a simple session is started using the command:

expdp system/oracle dumpfile=alphaFull directory=default_dp_dest full=y job_name=alphaFull

The JOB_NAME parameter provides a means to quickly identify the running job.

Once the job is running on a second OS session a new expdp command instance is started, this time using the ATTACH command. This will open a prompt that will allow the user to manage the running job.

expdp system/oracle attach=alphaFull

After showing the job status it enters the prompt mode where the user can issue the previously listed commands.

In this case a STOP_JOB command has been issued. This notifies the running session that the command execution has been stopped, the job output is stopped and the OS prompt is displayed. After a while the user reattaches to the running job, this time the START_JOB command is issued, this resumes the job activity, but as the expdp session was exited no more command output is displayed. The only way the user can realize the job is running is by querying the DBA_DATAPUMP_JOBS view or by browsing the log file contents.