MapReduce is a programming model used to process and generate large data sets in parallel across multiple computers in a cluster using a distributed algorithm. In the case of Hadoop, the MapReduce programs are executed on a cluster of commodity servers, which offers a high degree of fault tolerance and linear scalability.

MapReduce libraries are available in several programming languages for various database systems. The open source implementation of MapReduce in Hadoop delivers fast performance, not just because of MapReduce itself, but also because Hadoop minimizes the expensive movement of data over the network by processing data close to where it is stored.

Until the launch of Apache YARN, MapReduce was the dominant programming model on Hadoop. Though MapReduce is simple to understand at a conceptual level, implementing MapReduce programs is not easy. As a result, several higher-level tools, such as Hive and Pig, have been developed that let users take advantage of Hadoop's large data set processing capabilities without knowing the inner workings of MapReduce. Hive and Pig are open source tools that internally use MapReduce to run jobs on a Hadoop cluster.

The introduction of Apache YARN (Yet Another Resource Negotiator) gave Hadoop the capability to run jobs on a Hadoop cluster without using the MapReduce paradigm. YARN does not alter or enhance the capability of Hadoop to run MapReduce jobs; rather, MapReduce becomes one of several application frameworks in the Hadoop ecosystem that use YARN to run jobs on a Hadoop cluster.

From Apache Hadoop version 2.0 onward, MapReduce has been completely redesigned: it now runs as an application on YARN and is called MapReduce version 2 (MRv2). This book covers MapReduce version 2. The only exception is the next section, which discusses MapReduce version 1 (MRv1) as background for understanding YARN.

In this section, we will discuss the execution model of MapReduce Version 1 so that we can better understand how Apache YARN has improved it.

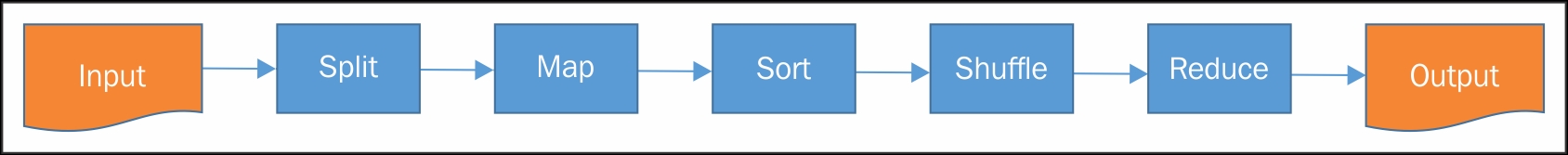

MapReduce programs in Hadoop essentially take in data as their input and then generate an output. In MapReduce terminology, the unit of work is a job which a client program submits to a Hadoop cluster. A job is broken down into tasks. These tasks perform map and reduce functions.

Hadoop controls the execution of jobs with the help of a JobTracker and a number of TaskTrackers. The JobTracker manages resources and all the jobs scheduled on a Hadoop cluster. The TaskTrackers run tasks and periodically report their progress to the JobTracker, which keeps track of the overall progress of each job. The JobTracker is also responsible for rescheduling a task if it fails.
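The division of labor described above can be illustrated with a toy simulation. This is a minimal sketch, not Hadoop code: the function `run_job`, the round-robin assignment, and the `fails_once` and `max_attempts` parameters are hypothetical names chosen for illustration. It only shows the idea that a central coordinator hands tasks to workers and puts a failed task back in the queue.

```python
from collections import deque


def run_job(tasks, trackers, fails_once=frozenset(), max_attempts=4):
    """Toy JobTracker loop: assign tasks round-robin and retry failed ones.

    tasks        -- list of task ids (hypothetical, e.g. "m0")
    trackers     -- list of TaskTracker names (hypothetical, e.g. "tt1")
    fails_once   -- task ids whose first attempt is simulated as a failure
    max_attempts -- assumed retry limit for this sketch
    Returns {task_id: (tracker_name, attempts_used)}.
    """
    pending = deque((task, 1) for task in tasks)
    done = {}
    slot = 0
    while pending:
        task, attempt = pending.popleft()
        tracker = trackers[slot % len(trackers)]
        slot += 1
        failed = task in fails_once and attempt == 1
        if failed:
            if attempt >= max_attempts:
                raise RuntimeError(f"task {task} failed {attempt} times")
            # The coordinator reschedules the failed task.
            pending.append((task, attempt + 1))
        else:
            done[task] = (tracker, attempt)
    return done
```

Calling `run_job(["m0", "m1", "m2"], ["tt1", "tt2"], fails_once={"m1"})` completes all three tasks, with `m1` needing a second attempt.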

In Hadoop, data locality optimization is an important consideration when scheduling map tasks on nodes. Whenever possible, a map task is scheduled on a node where its input data resides in HDFS. This minimizes data transfer over the network.
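The locality preference can be sketched in a few lines. This is an illustrative simplification, not Hadoop's scheduler: `pick_node` and its arguments are hypothetical, and the sketch ignores intermediate options such as choosing a node on the same rack.

```python
def pick_node(split_replicas, free_nodes):
    """Choose a node for a map task, preferring one that stores the input.

    split_replicas -- set of node names holding a replica of the input split
    free_nodes     -- list of node names that currently have a free slot
    Returns (node_name, is_data_local); node_name is None if nothing is free.
    """
    for node in free_nodes:
        if node in split_replicas:
            return node, True  # data-local: no network transfer needed
    if free_nodes:
        return free_nodes[0], False  # fallback: input is read over the network
    return None, False
```

For example, `pick_node({"n2", "n3"}, ["n1", "n2"])` selects `n2` as a data-local choice, whereas `pick_node({"n4"}, ["n1", "n2"])` falls back to `n1`.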

Hadoop splits the input to a MapReduce job into fixed-size chunks. For each chunk, Hadoop creates a separate map task that runs the user-defined map function on each record in the chunk. The records in each chunk are presented as key-value pairs.
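To make the flow concrete, here is a minimal in-memory word count written in plain Python. It is a sketch of the programming model only and does not use the Hadoop API: the names `split_input`, `map_fn`, `reduce_fn`, and `run_word_count` are hypothetical, chunks are measured in records rather than bytes, and the key of each input record is simply its line index.

```python
from collections import defaultdict


def split_input(records, chunk_size):
    """Cut the record list into fixed-size chunks (the last may be shorter)."""
    return [records[i:i + chunk_size] for i in range(0, len(records), chunk_size)]


def map_fn(key, value):
    """User-defined map: emit (word, 1) for every word in the line."""
    for word in value.split():
        yield word.lower(), 1


def reduce_fn(key, values):
    """User-defined reduce: sum the counts collected for one word."""
    return key, sum(values)


def run_word_count(lines, chunk_size=2):
    records = list(enumerate(lines))  # (line index, line text) pairs
    grouped = defaultdict(list)
    for chunk in split_input(records, chunk_size):  # one map task per chunk
        for key, value in chunk:
            for out_key, out_value in map_fn(key, value):
                grouped[out_key].append(out_value)  # group values by key
    return dict(reduce_fn(k, vs) for k, vs in sorted(grouped.items()))
```

With the input `["the cat", "the dog", "a cat"]`, the three records are cut into two chunks, and the result maps each word to its count: `{"a": 1, "cat": 2, "dog": 1, "the": 2}`.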

An overview of a MapReduce processing stage is shown in Figure 8:

Apache YARN provides a more scalable and isolated execution model for MRv2. In MRv1, a single JobTracker handled resource management, scheduling, and task monitoring. To maintain backward compatibility, the MapReduce framework has been rewritten so that MapReduce jobs can run on top of YARN.

In YARN, the responsibilities of the JobTracker have been split into two separate components. These components are as follows:

ResourceManager

ApplicationMaster

The ResourceManager allocates computing resources to the various applications running on top of Apache YARN. For each application running on YARN, an ApplicationMaster manages the lifecycle of that application. The ResourceManager runs as a cluster-wide daemon, whereas a separate ApplicationMaster is started for each application.

The YARN architecture also introduces the concept of the NodeManager, a per-node agent that manages the Hadoop processes running on its machine.

The ResourceManager runs two main services. The first service is a pluggable Scheduler service. The Scheduler service manages the resource scheduling policy. The second service is the ApplicationsManager, which manages the ApplicationMasters by starting, monitoring, and restarting them in case they fail.

A container is an abstract notion on the YARN platform representing a collection of physical resources such as CPU cores, memory, and disk. When an application is submitted to YARN, the client requests a container from the ResourceManager, in which the application's ApplicationMaster will run.
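The idea of a container as a bundle of resources can be sketched as follows. This is a simplified illustration under stated assumptions, not YARN's allocation logic: the `Node` class, its field names, and the first-fit policy in `allocate_container` are all hypothetical, and only memory and virtual cores are modeled.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_mem_mb: int
    free_vcores: int


def allocate_container(nodes, mem_mb, vcores):
    """First-fit allocation: reserve resources on the first node that has room.

    Returns the chosen node's name, or None if no node can host the container.
    """
    for node in nodes:
        if node.free_mem_mb >= mem_mb and node.free_vcores >= vcores:
            node.free_mem_mb -= mem_mb
            node.free_vcores -= vcores
            return node.name
    return None
```

Given nodes `n1` (1024 MB, 1 core) and `n2` (4096 MB, 4 cores), a request for 2048 MB and 2 cores lands on `n2` and reduces its free capacity accordingly. A real scheduler applies a configurable policy rather than first fit.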

Figure 9 (The Apache Software Foundation, 2015) illustrates the execution model of Hadoop with YARN.

Readers who are interested in learning about YARN in detail can find more information on the Cloudera blog (Radwan, 2012).