We cannot begin talking about neural networks without first understanding their origins, including the origin of the term itself. In this book, the terms neural network (NN) and artificial neural network (ANN) are used as synonyms, even though NN is the more general term and also covers natural neural networks. So, what actually is an ANN? Let's explore a little of the history of this term.

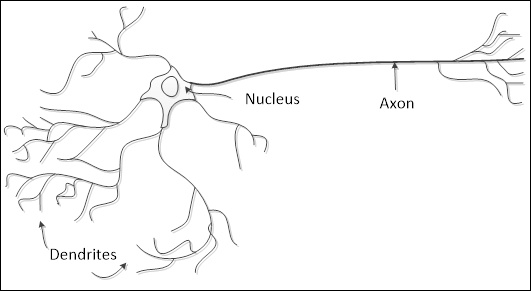

In the 1940s, the neurophysiologist Warren McCulloch and the mathematician Walter Pitts designed the first mathematical implementation of an artificial neuron, combining the foundations of neuroscience with mathematical operations. At that time, the human brain was being studied largely to understand its hidden and mysterious behaviors, but only within the field of neuroscience. The natural neuron was known to have a nucleus, dendrites that receive incoming signals from other neurons, and an axon that transmits a signal to other neurons, as shown in the following figure:

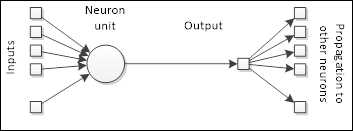

The novelty introduced by McCulloch and Pitts was the mathematical component of their neuron model: they treated a neuron as a simple processor that sums all incoming signals and fires a new signal to other neurons.
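A minimal Python sketch of this idea, assuming the classic simplified formulation in which inputs are binary (0 or 1) and the neuron fires when the sum of its inputs reaches a fixed threshold. The function name `mcculloch_pitts` and the threshold values are illustrative choices, and the inhibitory inputs of the original model are ignored here.

```python
def mcculloch_pitts(inputs, threshold):
    """Fire (return 1) if the sum of binary inputs reaches the threshold, else 0.

    Hypothetical helper: a simplified McCulloch-Pitts unit without inhibitory inputs.
    """
    total = sum(inputs)  # the neuron simply sums its incoming signals
    return 1 if total >= threshold else 0


# With two inputs, a threshold of 2 behaves like logical AND,
# and a threshold of 1 behaves like logical OR.
for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2,
              "AND:", mcculloch_pitts([x1, x2], 2),
              "OR:", mcculloch_pitts([x1, x2], 1))
```

Choosing the threshold is the only "setting" of this unit; there are no adjustable weights yet, which is exactly what later neuron models add.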

Furthermore, the brain is composed of billions of neurons, each connected to tens of thousands of others, resulting in trillions of connections: a giant network structure. On the basis of this fact, McCulloch and Pitts designed a simple model for a single neuron, initially to simulate human vision. The calculators and computers available at that time were very rare, but they handled mathematical operations quite well. Even today, tasks such as vision and sound recognition are not easily programmed without special frameworks, unlike mathematical operations and functions. The human brain, by contrast, performs sound and image recognition more efficiently than complex mathematical calculations, and this fact has long intrigued scientists and researchers.

One known fact, however, is that all the complex activities the human brain performs are based on learned knowledge. To overcome the difficulty that conventional algorithmic approaches have with tasks that humans solve easily, an ANN is designed to learn how to solve a task by itself, based on its stimuli (data):

| Tasks quickly solvable by humans | Tasks quickly solvable by computers |
|---|---|
| Classification of images | Complex calculation |
| Voice recognition | Grammatical error correction |
| Face identification | Signal processing |
| Forecasting events on the basis of experience | Operating system management |

Taking the characteristics of the human brain into account, the ANN can be described as a nature-inspired approach, and so can its structure. Each neuron connects to a number of other neurons, which in turn connect to many more, forming a highly interconnected structure. Later in this book, we will show that this connectivity between neurons accounts for the capability of learning, since every connection can be configured according to the stimuli and the desired goal.

Let's explore the most basic artificial neural element: the artificial neuron. Natural neurons behave as signal processors: they receive small signals in the dendrites, which can trigger a signal in the axon depending on their strength or magnitude. We can therefore think of a neuron as having a signal collector at the inputs and an activation unit at the output that can trigger a signal to be forwarded to other neurons, as shown in the following figure:

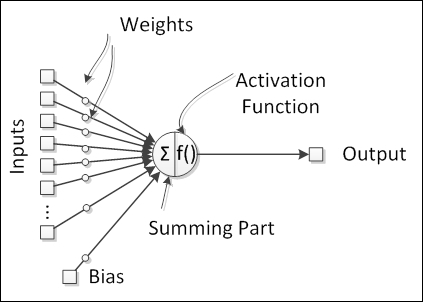

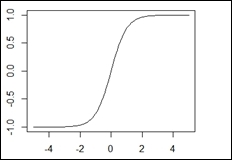

This activation function is what fires the neuron's output, based on the sum of all incoming signals. Mathematically, it adds nonlinearity to neural network processing, giving the artificial neuron the nonlinear behavior needed to emulate the nonlinear nature of natural neurons. An activation function is usually bounded between two values at the output, which makes it a nonlinear function, but in some special cases it can be linear.

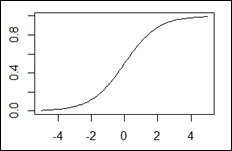

Although any function can be used as an activation function, let's concentrate on the most commonly used ones:

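| Function | Equation |
|---|---|
| Sigmoid | $f(x) = \dfrac{1}{1 + e^{-ax}}$ |
| Hyperbolic tangent | $f(x) = \tanh(ax) = \dfrac{e^{ax} - e^{-ax}}{e^{ax} + e^{-ax}}$ |
| Hard limiting threshold | $f(x) = 1$ if $x \ge 0$, otherwise $f(x) = 0$ |
| Linear | $f(x) = ax$ |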

In these equations, the coefficient a can be chosen as a setting for the activation function.
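The four functions can be written in Python as below. The exact parameterizations are assumptions, since the original equations are not reproduced here: sigmoid as 1/(1 + e^(-ax)), hyperbolic tangent as tanh(ax), the hard-limiting threshold as a step at zero, and linear as ax, with `a` standing for the coefficient mentioned above.

```python
import math

# Assumed parameterizations; `a` is the tunable coefficient mentioned in the text.

def sigmoid(x, a=1.0):
    """Logistic sigmoid, bounded in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-a * x))


def hyperbolic_tangent(x, a=1.0):
    """Hyperbolic tangent, bounded in (-1, 1)."""
    return math.tanh(a * x)


def hard_limiting_threshold(x):
    """Step function: 1 when x >= 0, otherwise 0."""
    return 1.0 if x >= 0 else 0.0


def linear(x, a=1.0):
    """Unbounded linear activation."""
    return a * x


for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), hyperbolic_tangent(x),
          hard_limiting_threshold(x), linear(x, a=0.5))
```

Increasing `a` makes the sigmoid and the hyperbolic tangent steeper around zero, so they approach a hard step (between 0 and 1 for the sigmoid, between -1 and 1 for the hyperbolic tangent).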

While the neural network structure can be fixed, the weights represent the connections between neurons; they can amplify or attenuate incoming neural signals, thus modifying them and influencing a neuron's output. Hence, a neuron's activation depends not only on its inputs but also on its weights. Given that the inputs come from other neurons or from the external world (stimuli), the weights can be considered the neural network's established connections between its neurons. Since the weights are an internal component of the neural network and influence its outputs, they can be regarded as the neural network's knowledge: changing the weights changes the network's outputs, that is, its answers to external stimuli.
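A minimal sketch of how weights shape a neuron's output: the same stimulus produces different answers under two different weight vectors. The function name `neuron_output`, the use of tanh as the activation, and the weight values are illustrative assumptions.

```python
import math


def neuron_output(inputs, weights, activation=math.tanh):
    """Weighted sum of the inputs passed through an activation function.

    Each weight amplifies (|w| > 1) or attenuates (|w| < 1) its input signal.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return activation(weighted_sum)


stimulus = [0.5, -1.0, 2.0]
print(neuron_output(stimulus, [0.2, 0.4, 0.1]))   # one set of "knowledge"
print(neuron_output(stimulus, [1.5, -0.3, 0.8]))  # same stimulus, different weights
```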

It is useful for the artificial neuron to have an independent component that adds an extra signal to the activation function: the bias. This parameter acts like an input, except that it is stimulated by one fixed value (usually 1), which is multiplied by an associated weight. This feature makes the neural network's knowledge representation more flexible: when all inputs are zero, the neuron won't necessarily produce a zero at the output; instead, it can fire a different value according to the weight associated with the bias.
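The bias can be sketched as one extra input fixed at 1.0 and scaled by its own weight. Here tanh is an assumed activation, chosen because it maps a zero sum to zero, which makes the bias's effect easy to see when all other inputs are zero; the function name and values are hypothetical.

```python
import math


def neuron_with_bias(inputs, weights, bias_weight, activation=math.tanh):
    """Neuron whose bias is an extra input fixed at 1, scaled by bias_weight."""
    bias_input = 1.0  # the bias "input" is always stimulated with the same value
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    weighted_sum += bias_input * bias_weight
    return activation(weighted_sum)


zeros = [0.0, 0.0, 0.0]
weights = [0.3, -0.7, 0.5]
print(neuron_with_bias(zeros, weights, bias_weight=0.0))  # no bias: tanh(0) is 0
print(neuron_with_bias(zeros, weights, bias_weight=0.8))  # bias alone sets the output
```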

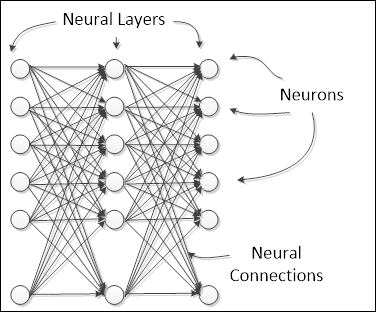

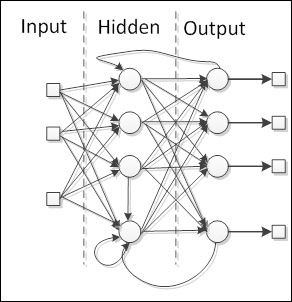

To create levels of abstraction in processing, as our mind does, neurons are organized in layers. The input layer receives direct stimuli from the outside world, and the output layer fires actions that directly influence the outside world. Between these layers there are a number of hidden layers, so called because they are invisible (hidden) from the outside world. In artificial neural networks, all the neurons in a layer share the same inputs and the same activation function, as shown in the following figure:

Neural networks can be composed of several linked layers, forming the so-called multilayer networks. Neural layers can then be classified as Input, Hidden, or Output.

In practice, an additional neural layer enhances the neural network's capacity to represent more complex knowledge.
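The layered organization described above can be sketched as follows. Assumptions: each layer stores its weights as a list of rows (`weight_matrix[j][i]` connects input i to neuron j), each layer carries its own bias weights, and the 2-3-1 layer sizes and all weight values are hypothetical.

```python
import math


def layer_forward(inputs, weight_matrix, biases, activation):
    """One layer: every neuron sees the same inputs and uses the same activation.

    weight_matrix[j][i] is the weight from input i to neuron j.
    """
    outputs = []
    for neuron_weights, bias in zip(weight_matrix, biases):
        s = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(s))
    return outputs


def network_forward(inputs, layers):
    """Feed the signal through each (weights, biases, activation) layer in turn."""
    signal = inputs
    for weight_matrix, biases, activation in layers:
        signal = layer_forward(signal, weight_matrix, biases, activation)
    return signal


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical 2-3-1 network: 2 inputs, a hidden layer of 3 neurons, 1 output neuron.
hidden = ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1], math.tanh)
output = ([[0.7, -0.5, 0.2]], [0.05], sigmoid)
print(network_forward([1.0, 0.5], [hidden, output]))
```

Adding a layer amounts to appending another `(weights, biases, activation)` tuple whose row length matches the previous layer's neuron count.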

A neural network can have different layouts, depending on how the neurons or layers are connected to each other. Each neural network architecture is designed for a specific goal. Neural networks can be applied to a number of problems, and depending on the nature of the problem, the neural network should be designed in order to address this problem more efficiently.

Neural network architectures can be classified in two ways:

- Neuron connections
  - Monolayer networks
  - Multilayer networks
- Signal flow
  - Feedforward networks
  - Feedback networks

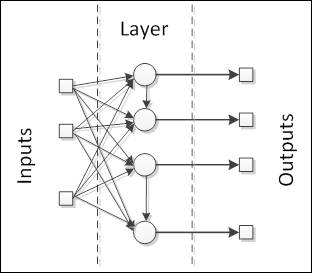

In the monolayer architecture, all neurons are laid out at the same level, forming a single layer, as shown in the following figure:

The neural network receives the input signals and feeds them into the neurons, which in turn produce the output signals. The neurons can be highly connected to each other with or without recurrence. Examples of these architectures are the single-layer perceptron, Adaline, self-organizing map, Elman, and Hopfield neural networks.

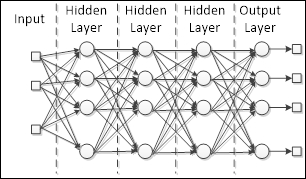

In the multilayer category, neurons are divided into multiple layers, each layer corresponding to a parallel arrangement of neurons that share the same input data, as shown in the following figure:

Radial basis function networks and multilayer perceptrons are good examples of this architecture. Such networks are very useful for approximating real data with a function fitted to represent that data. Moreover, because they have multiple layers of processing, these networks are well suited to learning from nonlinear data: they can separate it, or more easily determine the knowledge that reproduces or recognizes it.
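A classic illustration of why an extra layer helps with nonlinear data is XOR, whose two classes cannot be separated by a single threshold neuron. The sketch below uses hand-picked weights (nothing is learned) and a hard-limiting threshold: one hidden neuron computes OR, another computes AND, and the output neuron fires for "OR but not AND".

```python
def step(x):
    """Hard-limiting threshold activation."""
    return 1 if x >= 0 else 0


def xor_network(x1, x2):
    """Two-layer network with hand-picked (not learned) weights computing XOR."""
    h_or = step(x1 + x2 - 0.5)       # hidden neuron 1 fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)      # hidden neuron 2 fires only if both inputs are 1
    return step(h_or - h_and - 0.5)  # output neuron: OR but not AND


for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, xor_network(x1, x2))
```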

The signals in a neural network can flow either in only one direction or recurrently. In the first case, we call the architecture feedforward: the input signals are fed into the input layer and, after being processed, forwarded to the next layer, just as shown in the figure in the multilayer section. Multilayer perceptrons and radial basis function networks are also good examples of feedforward networks.

When the neural network has some kind of internal recurrence, meaning that signals are fed back into a neuron or layer that has already received and processed them, the network is of the feedback type. See the following figure of feedback networks:

The main reason to add recurrence to a network is to produce dynamic behavior, particularly when the network addresses problems involving time series or pattern recognition, which require an internal memory to reinforce the learning process. However, such networks are particularly difficult to train, because of the recursive behavior that appears during training (for example, a neuron whose outputs are fed back into its inputs), as well as the way the training data must be arranged. Most feedback networks are single layer, such as Elman and Hopfield networks, but it is possible to build a recurrent multilayer network, such as echo state networks and recurrent multilayer perceptron networks.
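As a minimal illustration of feedback, the sketch below runs a single neuron whose previous output is fed back as an additional weighted input at the next time step. It is similar in spirit to the recurrence in Elman networks but is not a faithful implementation of Elman or Hopfield networks; the function name, the tanh activation, and the weight values are assumptions, and training is not shown.

```python
import math


def run_recurrent_neuron(sequence, w_in, w_back, bias=0.0):
    """Single neuron whose previous output is fed back as an extra input.

    state_t = tanh(w_in * x_t + w_back * state_{t-1} + bias)
    The fed-back state acts as a simple internal memory of past inputs.
    """
    state = 0.0  # no memory before the first time step
    outputs = []
    for x in sequence:
        state = math.tanh(w_in * x + w_back * state + bias)
        outputs.append(state)
    return outputs


# A single pulse followed by zeros: the feedback keeps a decaying trace of it.
print(run_recurrent_neuron([1.0, 0.0, 0.0, 0.0], w_in=1.0, w_back=0.5))
# No feedback: the neuron forgets the pulse immediately.
print(run_recurrent_neuron([1.0, 0.0, 0.0, 0.0], w_in=1.0, w_back=0.0))
```

The output at each step depends on the whole input history, not just the current input, which is the dynamic behavior that makes feedback networks suitable for time series and also makes them harder to train.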