The primary virtual machine resources

Virtualization decouples physical hardware from an operating system. Each virtual machine contains a set of its own virtual hardware and there are four primary resources that a virtual machine needs in order to correctly function. These are CPU, memory, network, and hard disk. These four resources look like physical hardware to the guest operating systems and applications. The virtual machine is granted access to a portion of the resources at creation and can be reconfigured at any time thereafter. If a virtual machine experiences constraint, one of the four primary resources is generally where a bottleneck will occur.

In a traditional architecture, the operating system interacts directly with the server's physical hardware without virtualization. It is the operating system that allocates memory to applications, schedules processes to run, reads from and writes to attached storage, and sends and receives data on the network. This is not the case with a virtualized architecture. The virtual machine guest operating system still does the aforementioned tasks, but also interacts with virtual hardware presented by the hypervisor.

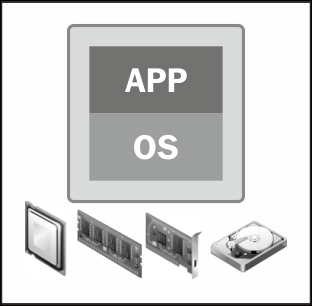

In a virtualized environment, a virtual machine interacts with the physical hardware through a thin layer of software known as the virtualization layer or the hypervisor; in this case the hypervisor is ESXi. This hypervisor allows the VM to function with a degree of independence from underlying physical hardware. This independence is what allows vMotion and Storage vMotion functionality. The following diagram demonstrates a virtual machine and its four primary resources:

This section will provide an overview of each of the "primary four" resources. Configurations for these resources will be discussed in Chapter 2, Creating a Virtual Machine Using the Wizard and Chapter 4, Advanced Virtual Machine Settings.

The virtualization layer runs CPU instructions to make sure that the virtual machines run as though accessing the physical processor on the ESXi host. Performance is paramount for CPU virtualization, and therefore will use the ESXi host physical resources whenever possible. The following image displays a representation of a virtual machine's CPU:

A virtual machine can be configured with up to 64 virtual CPUs (vCPUs) as of vSphere 5.5. The maximum vCPUs able to be allocated depends on the underlying logical cores that the physical hardware has. Another factor in the maximum vCPUs is the tier of vSphere licensing; only Enterprise Plus licensing allows for 64 vCPUs. The VMkernel includes a CPU scheduler that dynamically schedules vCPUs on the ESXi host's physical processors.

The VMkernel scheduler, when making scheduling decisions, considers socket-core-thread topology. A socket is a single, integrated circuit package that has one or more physical processor cores. Each core has one or more logical processors, also known as threads. If hyperthreading is enabled on the host, then ESXi is capable of executing two threads, or sets of instruction, simultaneously. Effectively, hyperthreading provides more logical CPUs to ESXi on which vCPUs can be scheduled, providing more scheduler throughput. However, keep in mind that hyperthreading does not double the core's power. During times of CPU contention, when VMs are competing for resources, the VMkernel timeslices the physical processor across all virtual machines to ensure that the VMs run as if having a specified number of vCPUs.

VMware vSphere Virtual Symmetric Multiprocessing (SMP) is what allows the virtual machines to be configured with up to 64 virtual CPUs, which allows a larger CPU workload to run on an ESXi host. Though most supported guest operating systems are multiprocessor aware, many guest OSes and applications do not need and are not enhanced by having multiple vCPUs. Check vendor documentation for operating system and application requirements before configuring SMP virtual machines.

In a physical architecture, an operating system assumes that it owns all physical memory in the server, which is a correct assumption. A guest operating system in a virtual architecture also makes this assumption but it does not, in fact, own all of the physical memory. A guest operating system in a virtual machine uses a contiguous virtual address space that is created by ESXi as its configured memory. The following image displays a representation of a virtual machine's memory:

Virtual memory is a well-known technique that creates this contiguous virtual address space, allowing the hardware and operating system to handle the address translation between the physical and virtual address spaces. Since each virtual machine has its own contiguous virtual address space, this allows ESXi to run more than one virtual machine at the same time. The virtual machine's memory is protected against access from other virtual machines.

This effectively results in three layers of virtual memory in ESXi: physical memory, guest operating system physical memory, and guest operating system virtual memory. The VMkernel presents a portion of physical host memory to the virtual machine as its guest operating system physical memory. The guest operating system presents the virtual memory to the applications.

The virtual machine is configured with a set of memory; this is the sum that the guest OS is told it has available to it. A virtual machine will not necessarily use the entire memory size; it only uses what is needed at the time by the guest OS and applications. However, a VM cannot access more memory than the configured memory size. A default memory size is provided by vSphere when creating the virtual machine. It is important to know the memory needs of the application and guest operating system being virtualized so that the virtual machine's memory can be sized accordingly.

There are two key components with virtual networking: the virtual switch and virtual Ethernet adapters. A virtual machine can be configured with up to ten virtual Ethernet adapters, called vNICs. The following image displays a representation of a virtual machine's vNIC:

Virtual network switching is software interfacing between virtual machines at the vSwitch level until the frames hit an uplink or a physical adapter, exiting the ESXi host and entering the physical network. Virtual networks exist for virtual devices; all communication between the virtual machines and the external world (physical network) goes through vNetwork standard switches or vNetwork distributed switches.

Virtual networks operate on layer 2, data link, of the OSI model. A virtual switch is similar to a physical Ethernet switch in many ways. For example, virtual switches support the standard VLAN (802.1Q) implementation and have a forwarding table, like a physical switch. An ESXi host may contain more than one virtual switch. Each virtual switch is capable of binding multiple vmnics together in a network interface card (NIC) team, which offers greater availability to the virtual machines using the virtual switch.

There are two connection types available on a virtual switch: a port group and a VMkernel port. Virtual machines are connected to port groups on a virtual switch, allowing access to network resources. VMkernel ports provide a network service to the ESXi host to include IP storage, management, vMotion, and so on. Each VMkernel port must be configured with its own IP address and network mask. The port groups and VMkernel ports reside on a virtual switch and connect to the physical network through the physical Ethernet adapters known as vmnics. If uplinks (vmnics) are associated with a virtual switch, then the virtual machines connected to a port group on this virtual switch will be able to access the physical network.

In a non-virtualized environment, physical servers connect directly to storage, either to an external storage array or to their internal hard disk arrays to the server chassis. The issue with this configuration is that a single server expects total ownership of the physical device, tying an entire disk drive to one server. Sharing storage resources in non-virtualized environments can require complex filesystems and migration to file-based Network Attached Storage (NAS) or Storage Area Networks (SAN). The following image displays a representation of a virtual disk:

Shared storage is a foundational technology that allows many things to happen in a virtual environment (High Availability, Distributed Resource Scheduler, and so on). Virtual machines are encapsulated in a set of discrete files stored on a datastore. This encapsulation makes the VMs portable and easy to be cloned or backed up. For each virtual machine, there is a directory on the datastore that contains all of the VM's files. A datastore is a generic term for a container that holds files as well as .iso images and floppy images. It can be formatted with VMware's Virtual Machine File System (VMFS) or can use NFS. Both datastore types can be accessed across multiple ESXi hosts.

VMFS is a high-performance, clustered filesystem devised for virtual machines that allows a virtualization-based architecture of multiple physical servers to read and write to the same storage simultaneously. VMFS is designed, constructed, and optimized for virtualization. The newest version, VMFS-5, exclusively uses 1 MB block size, which is good for large files, while also having an 8 KB subblock allocation for writing small files such as logs. VMFS-5 can have datastores as large as 64 TB. The ESXi hosts use a locking mechanism to prevent the other ESXi hosts accessing the same storage from writing to the VMs' files. This helps prevent corruption.

Several storage protocols can be used to access and interface with VMFS datastores; these include Fibre Channel, Fibre Channel over Ethernet, iSCSI, and direct attached storage. NFS can also be used to create a datastore. VMFS datastore can be dynamically expanded, allowing the growth of the shared storage pool with no downtime.

vSphere significantly simplifies accessing storage from the guest OS of the VM. The virtual hardware presented to the guest operating system includes a set of familiar SCSI and IDE controllers; this way the guest OS sees a simple physical disk attached via a common controller. Presenting a virtualized storage view to the virtual machine's guest OS has advantages such as expanded support and access, improved efficiency, and easier storage management.

Free Chapter

Free Chapter