The technical rationale for moving to the cloud can often be summed up in two words—autoscaling and autohealing.

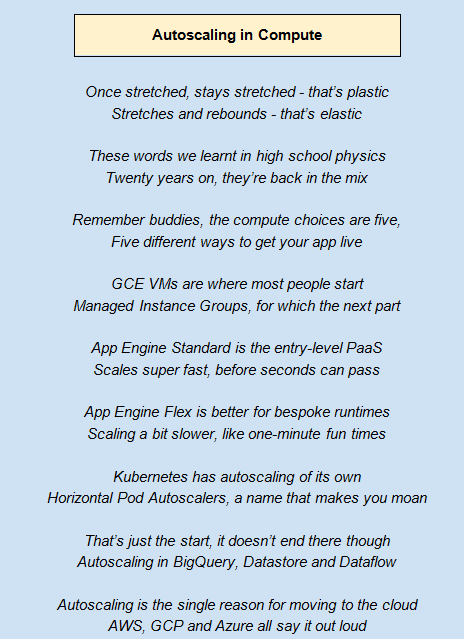

- Autoscaling: The idea of autoscaling is simple enough although the implementations can get quite involved—apps are deployed on compute, the amount of compute capacity increases or decreases depending on the level of incoming client requests. In a nutshell, all the public cloud providers have services that make autoscaling and autohealing easily available. Autoscaling, in particular, is a huge deal. Imagine a large Hadoop cluster, with say 1,000 nodes. Try scaling that; it probably is a matter of weeks or even months. You'd need to get and configure the machines, reshard the data and jump through a trillion hoops. With a cloud provider, you'd simply use an elastic version of Hadoop such as Dataproc on the GCP or Elastic MapReduce (EMR) on AWS and you'd be in business in minutes. This is not some marketing or sales spiel; the speed of scaling up and down on the cloud is just insane.

Here’s a little rhyme to help you remember the main point of our conversation here—we’ll keep using them throughout the remainder of the book just to mix things up a bit. Oh, and they might sometimes introduce a few new terms or ideas that will be covered at length in the following sections, so don’t let any forward references bother you just yet!

- Autohealing: The idea of autohealing is just as important as that of autoscaling, but it is less explicitly understood. Let's say that we deploy an app that could be a Java JAR, Python package, or Docker container to a set of compute resources, which again could be cloud VMs, App Engine backends, or pods in a Kubernetes cluster. Those compute resources will have problems from time to time; they will crash, hang, run out of memory, throw exceptions, and misbehave in all kinds of unpredictable ways. If we did nothing about these problems, those compute resources would effectively be out of action, and our total compute capacity would fall and, sooner or later, become insufficient to meet client requests. So, clearly, we need to somehow detect whether our compute resources got sick, and then heal them. In the pre-cloud days, this would have been pretty manual, some poor sap of an engineer would have to nurse a bare metal or VM back to health. Now, with cloud-based abstractions, individual compute units are much more expendable. We can just take them down and replace them with new ones. Because these units of compute capacity are interchangeable (or fungible—a fancier word that means the same thing), autohealing is now possible: