When running benchmarks to test the performance of a Ceph cluster, you are ultimately measuring latency. Every other benchmark metric, including IOPS, MBps, and even higher-level application metrics, is derived from the latency of the individual requests.

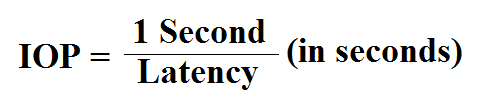

IOPS is the number of I/O requests completed per second. The latency of each request directly affects the achievable IOPS. When each request is submitted synchronously (one at a time), IOPS is the reciprocal of the average latency in seconds:

IOPS = 1 / latency

For example, an average latency of 2 milliseconds per request results in roughly 500 IOPS:

1 / 0.002 = 500
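The reciprocal relationship can be checked with a few lines of Python. This is a minimal sketch, assuming synchronous submission (one request in flight at a time); the function names `iops_from_latency` and `sync_iops_from_samples` are hypothetical and do not belong to Ceph or any benchmarking tool.

```python
from statistics import mean


def iops_from_latency(avg_latency_s: float) -> float:
    """Achievable IOPS when only one request is in flight at a time."""
    if avg_latency_s <= 0:
        raise ValueError("latency must be positive")
    # Each request must finish before the next is submitted,
    # so one second holds 1 / latency requests.
    return 1.0 / avg_latency_s


def sync_iops_from_samples(latencies_s: list[float]) -> float:
    """IOPS derived from measured per-request latencies (in seconds)."""
    # With synchronous submission the requests run back to back, so the
    # elapsed time is the sum of the latencies; n / sum equals 1 / mean.
    return iops_from_latency(mean(latencies_s))


print(round(iops_from_latency(0.002)))  # 500
print(round(sync_iops_from_samples([0.0015, 0.002, 0.0025])))  # 500
```

The second function shows why the average latency is what matters: individual requests may be faster or slower, but the achievable IOPS follows from their mean.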

MBps is simply the number of IOPS multiplied by the I/O size:

500 IOPS * 64 KB = 32,000 KBps (roughly 32 MBps)
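Chaining the two formulas makes it explicit that throughput also comes back to latency. The sketch below is illustrative only: the helper names are hypothetical, it assumes synchronous I/O, and it treats 1 MB as 1,000 KB (with binary units, 32,000 KiB/s is 31.25 MiB/s).

```python
def iops_from_latency(avg_latency_s: float) -> float:
    """IOPS for synchronous I/O (one request in flight at a time)."""
    return 1.0 / avg_latency_s


def throughput_kbps(iops: float, io_size_kb: float) -> float:
    """Throughput in KB per second: IOPS multiplied by the I/O size."""
    return iops * io_size_kb


# 2 ms average latency with 64 KB requests
iops = iops_from_latency(0.002)
kbps = throughput_kbps(iops, 64)
print(kbps)         # 32000.0
print(kbps / 1000)  # 32.0 (MBps, decimal units)
```

Halving the latency in this model doubles both the IOPS and the throughput, since the I/O size is held constant.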

When you run benchmarks, you are really measuring the end result of latency. Therefore, any tuning you carry out should aim to reduce the end-to-end latency of each I/O request...