Network security does not just end when we implement security products or processes. A network is like a living and breathing organism that evolves with time, but sometimes breaks down and needs maintenance. Apart from security issues, there are many common issues that can occur, including network connectivity issues, power outages, network crashes, and black holes in routing.

Typically, a Security Operations Center (SOC) sits at the center of security monitoring and operations, but a Network Operations Center (NOC) can play an equally important role in network resilience and optimal performance. In this section, we'll take a look at some of the key attributes of the NOC.

Network Operations Center overview

An NOC is a central entity for an organization's network monitoring endeavor. This encompasses technology and processes essential to actively managing and responding to networking-related issues. A typical NOC consists of engineers and analysts monitoring the network, ensuring smooth operations, and ensuring network/infrastructure uptime. This includes, but is not limited to, the following:

- Network device, server, application, and endpoint monitoring

- Hardware and software installation concerning network devices

- Network analysis (discovery and assessments) and troubleshooting

- Monitoring common threats, viruses, and DoS attacks

- Alarm handling and performance improvement (including QoS)

- Monitoring power failures and system backups

- Application, email, policy, backup and storage, and patch management

- Service optimization and performance reporting

- Threat analysis

NOCs often encounter complex networking issues that might need troubleshooting and collaboration between different IT teams to investigate and resolve the issue. To increase the overall effectiveness of an NOC, organizations focus on a few areas, as discussed in the following subsections.

Proper incident management

Incident management includes identifying an incident, investigating its root cause, resolving it, and preventing its recurrence to avoid business disruption. To gauge how mature its practices are, the organization should review and analyze its adherence to the ITIL incident management framework. This includes the following:

- Prioritizing incidents based on their impact.

- Accurately reflecting the current status of each incident and documenting all artifacts.

- Implementing a streamlined process to ensure the effective handling of incidents that's in line with the organization's policy.

- Automating simple, repetitive manual tasks and escalations.

- Implementing an effective communication mechanism for sharing real-time updates with the required stakeholders.

- Integrating third-party applications such as ticketing systems, monitoring dashboards, a knowledge base, threat intelligence, and so on to empower the analyst.

- Establishing key performance indicators and driving continuous improvement by reporting on them. This helps the organization continuously improve and innovate on its performance metrics and key deliverables, such as higher performance quality, a lower cost to serve, and a shorter mean time to resolve.
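Two of the practices above, prioritizing incidents by impact and reporting on mean time to resolve, can be sketched in a few lines. This is only an illustration: the `Incident` class, the impact/urgency lookup values, and the sample data are assumptions made for the example, and each organization would define its own matrix.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical impact/urgency -> priority lookup (1 = highest priority).
# The values are an assumption; every organization defines its own matrix.
PRIORITY_MATRIX = {
    ("high", "high"): 1,
    ("high", "medium"): 2,
    ("medium", "high"): 2,
    ("high", "low"): 3,
    ("medium", "medium"): 3,
    ("low", "high"): 3,
    ("medium", "low"): 4,
    ("low", "medium"): 4,
    ("low", "low"): 5,
}


@dataclass
class Incident:
    number: str
    impact: str
    urgency: str
    opened: datetime
    resolved: Optional[datetime] = None  # None while the incident is open

    @property
    def priority(self) -> int:
        return PRIORITY_MATRIX[(self.impact, self.urgency)]


def mean_time_to_resolve(incidents):
    """Average resolution time over resolved incidents, or None if none are resolved."""
    durations = [i.resolved - i.opened for i in incidents if i.resolved is not None]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)


start = datetime(2024, 1, 1, 9, 0)
incidents = [
    Incident("INC001", "high", "high", start, start + timedelta(hours=2)),
    Incident("INC002", "low", "medium", start, start + timedelta(hours=6)),
    Incident("INC003", "medium", "high", start),  # still open
]

# Work queue ordered by priority; sorted() is stable, so ties keep arrival order.
queue = sorted(incidents, key=lambda i: i.priority)
mttr = mean_time_to_resolve(incidents)
```

Open incidents are excluded from the average here; whether to count them (for example, up to the reporting date) is a reporting decision that should be stated alongside the KPI.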

An incident response team should consist of a hierarchical team structure, where each level is accountable and responsible for certain activities, as shown here:

Let's take a quick look at each layer:

- Tier 1 Analyst: Acts as the first point of contact in the incident response process. They are responsible for recording, classification, and first-line investigation.

- Tier 2 Analyst: Acts as an escalation point for Tier 1. They also act as a subject matter expert (SME) for deeper investigation and the creation of knowledge articles, and are required to escalate major incidents to Tier 3.

- Tier 3 Analyst: Acts as an escalation point for Tier 2 and is responsible for restoring an impacted service. They escalate unresolved incidents to the relevant vendor or team for resolution. They also act as a liaison between internal and vendor teams.

- Incident Coordinator: Acts as the administrative authority ensuring that the process is being followed and that quality is maintained. They are responsible for assigning an incident within a group, maintaining communication with the incident manager, and providing trend analysis for recurring incidents.

- Incident Manager: Manages the entire process until normal service is restored. They are primarily responsible for planning and coordinating activities such as monitoring, resolution, and reporting. They act as a point for major escalations, monitor the workload and SLA adherence, conduct incident reviews, provide guidance to the team, and ensure continuous improvement and process excellence.

In some organizations, there are other roles such as incident assignment group manager and incident process owner (who is accountable for designing, maintaining, and improving the process) who ensure the efficiency and effectiveness of the service's delivery.

Functional ticketing system and knowledge base

A ticketing system encompasses all technical and related details of an incident. This includes the incident number, status, priority, affected user base, assigned group and resource name, time of incident response, resolution, and the type of incident, among other details. It's also important to maintain a centralized knowledge base that encompasses various process documents, structured and unstructured information, learnings, and process practices.

The preceding screenshot is from ServiceNow, which is a leading ITSM platform, often used by SOC/NOC as a ticketing system.
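To make the ticket fields listed above more concrete, here is a minimal sketch of a ticket record and a knowledge base lookup. It does not reflect the schema of ServiceNow or any other product; the class names, field names, status values, and the tag-based lookup are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime
from enum import Enum
from typing import List, Optional


class Status(Enum):
    # Hypothetical lifecycle states; real platforms define their own.
    NEW = "new"
    IN_PROGRESS = "in_progress"
    RESOLVED = "resolved"
    CLOSED = "closed"


@dataclass
class Ticket:
    number: str
    incident_type: str
    priority: int
    affected_users: int
    assigned_group: str
    assignee: Optional[str] = None
    status: Status = Status.NEW
    opened_at: datetime = field(default_factory=datetime.now)
    responded_at: Optional[datetime] = None
    resolved_at: Optional[datetime] = None
    resolution: str = ""


@dataclass
class KnowledgeArticle:
    title: str
    body: str
    tags: List[str] = field(default_factory=list)


def find_articles(knowledge_base, ticket):
    """Return the articles tagged with the ticket's incident type."""
    return [a for a in knowledge_base if ticket.incident_type in a.tags]


knowledge_base = [
    KnowledgeArticle("Resetting a flapping switch port",
                     "Check cabling, then bounce the port.", ["connectivity"]),
    KnowledgeArticle("Restoring a file share from backup",
                     "Locate the latest verified backup set.", ["storage"]),
]
ticket = Ticket("INC0001", "connectivity", priority=2,
                affected_users=40, assigned_group="NOC Tier 1")
matches = find_articles(knowledge_base, ticket)
```

Linking tickets to knowledge articles by a shared incident type is the simplest possible association; a production system would more likely use full-text search or explicit links.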

Monitoring policy

The NOC establishes and implements standard monitoring policies and procedures for performance benchmarking and capacity monitoring for organization infrastructure. To rule out false positives at the beginning of an implementation, it is imperative to set a baseline of normal activities, traffic patterns, network spikes, and other behaviors by studying the network for an initial period. It is also important to have visibility into the network at all levels and be able to detect the root cause in a short amount of time.
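The baselining idea can be sketched as follows. This is a deliberately simple illustration, assuming that "normal" is summarized by the mean and standard deviation of samples collected during the initial observation period and that anything beyond three standard deviations is worth an alert. The function names, the threshold, and the sample values are assumptions; real traffic usually needs per-hour or per-day baselines.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Summarize the observation period as (mean, sample standard deviation)."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a reading that deviates from the baseline by more than `threshold` sigmas."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical link utilization (Mbps) sampled during the initial study period.
observed_mbps = [100, 104, 98, 101, 97, 103, 99, 102]
baseline = build_baseline(observed_mbps)

normal_spike = is_anomalous(105, baseline)   # small fluctuation
real_problem = is_anomalous(180, baseline)   # far outside the usual range
```

With this sample data, a reading of 105 Mbps stays within the threshold while 180 Mbps is flagged, which is exactly the distinction that keeps ordinary spikes from generating false positives.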

A well-defined investigation process

To have a robust and dependable investigation process, it is important to have a well-defined and documented process flow that utilizes the best practices and standards. A good Root Cause Analysis (RCA) is the key to uncovering the root problems and enhancing performance and connectivity.

Reporting and dashboards

For regulatory and compliance requirements, as well as to adhere to business goals and performance metrics, it is important to have explicit reporting and dashboards for real-time visibility and situational awareness. The following screenshot shows the dashboard of ServiceNow, a leading platform that I mentioned earlier:

These reporting dashboards allow us to be aware of the current situation of the SOC/NOC and help in identifying and responding to any issues that might arise.

Escalation

A streamlined, time-sensitive escalation process with an accurate reflection of artifacts is one of the most important factors for smoothly running operations and timely responses. Analysts are told to escalate issues if they do not have the relevant reaction plan or playbook in place for the said incident, so as to get insights from the next level for the appropriate resolution.
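The escalation rule described here (hand the incident to the next level when no playbook exists at the current one) can be sketched with the tier structure introduced earlier. The playbook names and the per-tier coverage are hypothetical, and time-based escalation (for example, on an SLA breach) is left out to keep the example short.

```python
# Escalation order taken from the tier descriptions earlier in this section.
ESCALATION_PATH = ["Tier 1", "Tier 2", "Tier 3", "Vendor"]

# Hypothetical playbook coverage per tier; incident types are made up.
PLAYBOOKS = {
    "Tier 1": {"password_reset", "link_down"},
    "Tier 2": {"routing_loop", "link_down"},
    "Tier 3": {"core_outage"},
}


def route_incident(incident_type):
    """Walk the escalation path until a tier has a playbook for the incident.

    Returns (handler, trail), where trail records every level the incident
    passed through, so the escalation history stays with the ticket.
    """
    trail = []
    for tier in ESCALATION_PATH:
        trail.append(tier)
        if incident_type in PLAYBOOKS.get(tier, set()):
            return tier, trail
    # No internal playbook exists: the last level (the vendor) owns it.
    return ESCALATION_PATH[-1], trail


handler, trail = route_incident("routing_loop")
vendor_handler, vendor_trail = route_incident("firmware_bug")
```

Keeping the trail alongside the result reflects the point made above about an accurate reflection of artifacts: each level can see who has already looked at the incident.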

High availability and failover

Depending on how it is deployed, the monitoring system itself might be affected by a network disruption. In this case, the network and security teams will be flying blind. Hence, steps should be taken to ensure that the monitoring system is up at all times and has a documented and tested BCP/DR process in place.

Apart from the ones discussed in the preceding subsections, there are several other important procedures that play a role in the overall service delivery process and incident management best practices, such as change management, problem management, and capacity and vendor management. These can be studied in detail in ITIL as part of larger ITSM practices.

Many NOCs today are innovating for better performance: they use analytics for deriving insights and correlation, AI for predicting issues and recommending the best fixes, and automation and orchestration for reducing the time to respond and human error. Many Managed Service Providers (MSPs) are also taking over the management of NOCs, because a lot of organizations are leaning toward outsourcing their NOC operations due to perceived benefits such as improved efficiency, better reliability, less downtime, enhanced security and compliance, improved ROI, cost savings, and risk transfer. The other major benefit of outsourcing is the skilled resources and industry expertise that come with an MSP.

Now that we know how an NOC operates and the different segments that make it operational for effective network security monitoring, let's discuss how to assess the effectiveness and efficiency of an organization's network security.

Assessing network security effectiveness

Network security assessment addresses the broader aspects of the security functionality of a network. This should involve exploring the network infrastructure to identify the different components present in the environment and cataloging them. Ideally, this should be followed by assessing the technical architecture, technological configurations, and the vulnerabilities and threats that might have been identified as part of the first step. Following this, we should attempt to exploit the identified vulnerabilities to validate their impact and risk factor, which may be assessed using a qualitative or quantitative approach. This helps us drive accurate prioritization, business context, and the corresponding remediation plan.

Key attributes to be considered

Some of the key concepts that we will be covering in this book as part of network security will form the foundational capabilities that enable us to check the right boxes and derive an appropriate value for each. In this section, we will investigate the major domains that can help us assess the maturity of the network:

- Static analysis: This focuses on auditing application code, network and server configuration, and providing an architecture review of the network. This is exhaustive and both labor- and time-intensive, but it yields a lot of valuable insight into the inner workings of the various components and the configuration errors and vulnerabilities that may persist in the environment, because it examines them directly rather than observing the system at runtime. Therefore, we need to break this into small, actionable steps such as design review, configuration review, and static code analysis.

- Dynamic analysis: This focuses on the threat actor's perspective, who aims to exploit services and threat vectors that can result in the loss of Confidentiality, Integrity, and Availability (CIA). This can be inclusive of network infrastructure testing, web application and services testing, and dynamic code analysis.

- Configuration review: This focuses on auditing network components at a low level, such as firewalls, routers, switches, storage, and virtualization infrastructure, as well as server and appliance operating system configurations and application configurations. You can leverage configuration reviews to perform gap analysis, document possible flaws in the configuration, and harden or mitigate the identified ones. You can also prioritize the recommended actions based on their severity and impact. The low-hanging ones should be addressed quickly.

- Design review: This concentrates on reviewing the security controls in the design and evaluating their effectiveness and applicability. ISO/IEC 15408-1:2009 (part of the Common Criteria) is an industry-recognized standard that sets out the general concepts and principles of IT security evaluation. It captures a variety of operations. The functional and assurance components are given in ISO/IEC 15408-2 and ISO/IEC 15408-3, and can be tailored for the relevant operations.

- Network infrastructure testing: This splits network testing into vulnerability assessment and penetration testing. A wide range of tools and platforms can be used to scan the network infrastructure for potential vulnerabilities and provide a basis for manual or automated testing of the discovered vulnerabilities or flaws.

- Web application testing: This involves assessing an application from various angles to test for weaknesses in the source code, application and business logic, authenticated and unauthenticated application processing, and configuration and implementation flaws. OWASP is an excellent resource for digging deeper into application security practice.

So far, we have explored the various major aspects that should form the basic building blocks of a good network security architecture. Next, we will take a look at some techno-management aspects.

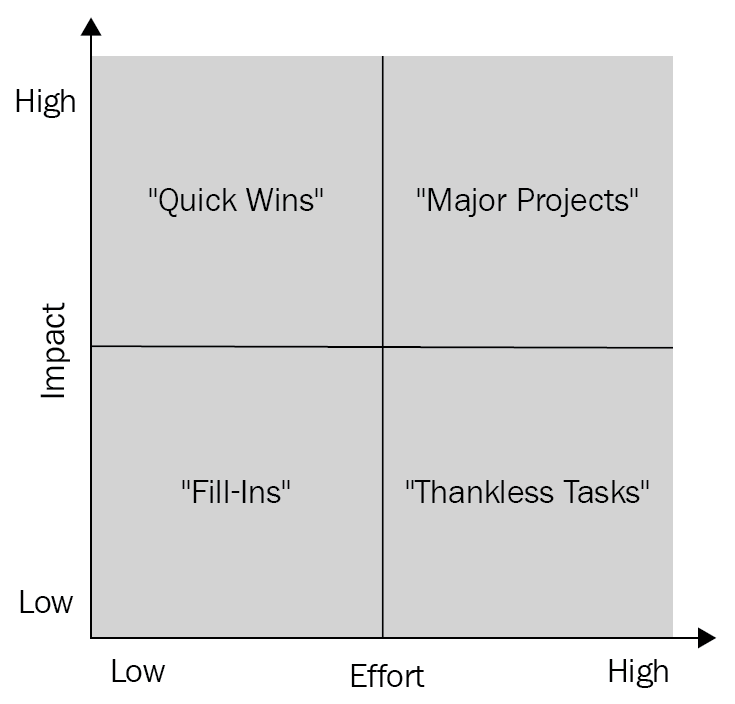

The action priority matrix

The action priority matrix is an approach that helps us prioritize the findings from each of these phases and processes. This helps us identify the most important activities, along with their complexity and required time and effort. Many organizations use it in one form or another to showcase and plan for execution.

The overall consensus reflects the following path once all the activities have been mapped:

- Quick Wins: These are activities that take less time/effort yet have a high impact.

- Major Projects: These are activities that require more time and effort and have a high impact.

- Fill-Ins: These are activities that take less time but have less impact.

- Thankless Tasks: These are activities that take a huge amount of time/effort yet don't have a sizable impact.

An example of this matrix can be seen in the following graph:

This approach provides a strategic outline to the team and helps them decide how and what to prioritize with respect to the timelines. Generally, it's recommended to aim for Quick Wins first as they provide the momentum for achieving goals in a short span of time. Then, you should focus on the major projects.
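As a rough sketch of how findings could be sorted into the four quadrants, assume each finding has been scored for impact and effort on a 1 to 10 scale, with 5 as the cut-off between low and high. The scale, the cut-off, the sample findings, and the ordering of the last two quadrants are assumptions; only "Quick Wins first, then Major Projects" comes from the guidance above.

```python
# Execution order: the first two entries follow the recommendation in the text;
# placing Fill-Ins before Thankless Tasks is an assumption.
ORDER = ["Quick Win", "Major Project", "Fill-In", "Thankless Task"]


def classify(impact, effort, threshold=5):
    """Place an activity in a quadrant of the action priority matrix."""
    high_impact = impact >= threshold
    high_effort = effort >= threshold
    if high_impact and not high_effort:
        return "Quick Win"
    if high_impact and high_effort:
        return "Major Project"
    if not high_impact and not high_effort:
        return "Fill-In"
    return "Thankless Task"


# Hypothetical findings as (description, impact, effort).
findings = [
    ("Re-rack legacy lab equipment", 2, 8),
    ("Segment the flat office network", 9, 9),
    ("Update switch port descriptions", 2, 1),
    ("Disable Telnet on edge routers", 8, 2),
]

plan = sorted(findings, key=lambda f: ORDER.index(classify(f[1], f[2])))
labels = [classify(impact, effort) for _, impact, effort in plan]
```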

Threat modeling

This is a structured approach toward (network) security that assesses the potential threat landscape from the point of view of an attacker. This takes into consideration the attacker's motives, threat profile (their capability and skill), key assets of interest, and the most likely attack vector to be used, among other attributes, to understand which threats are most likely to materialize and how they will unfold in the environment. The idea behind this is to understand the environment better by reviewing all the components and processes.

Today, most threat modeling methodologies focus on one of the following approaches: asset-centric, attacker-centric, or software-centric. The following diagram shows what risk inherently means. Risk arises when we have an asset that is vulnerable to a certain flaw or loophole, and a threat vector that can exploit the vulnerability. Ultimately, this impacts the asset and its Confidentiality, Integrity, and Availability (CIA).

A + T + V = R

Here A is Asset, T is Threat, V is Vulnerability, and R is Risk:

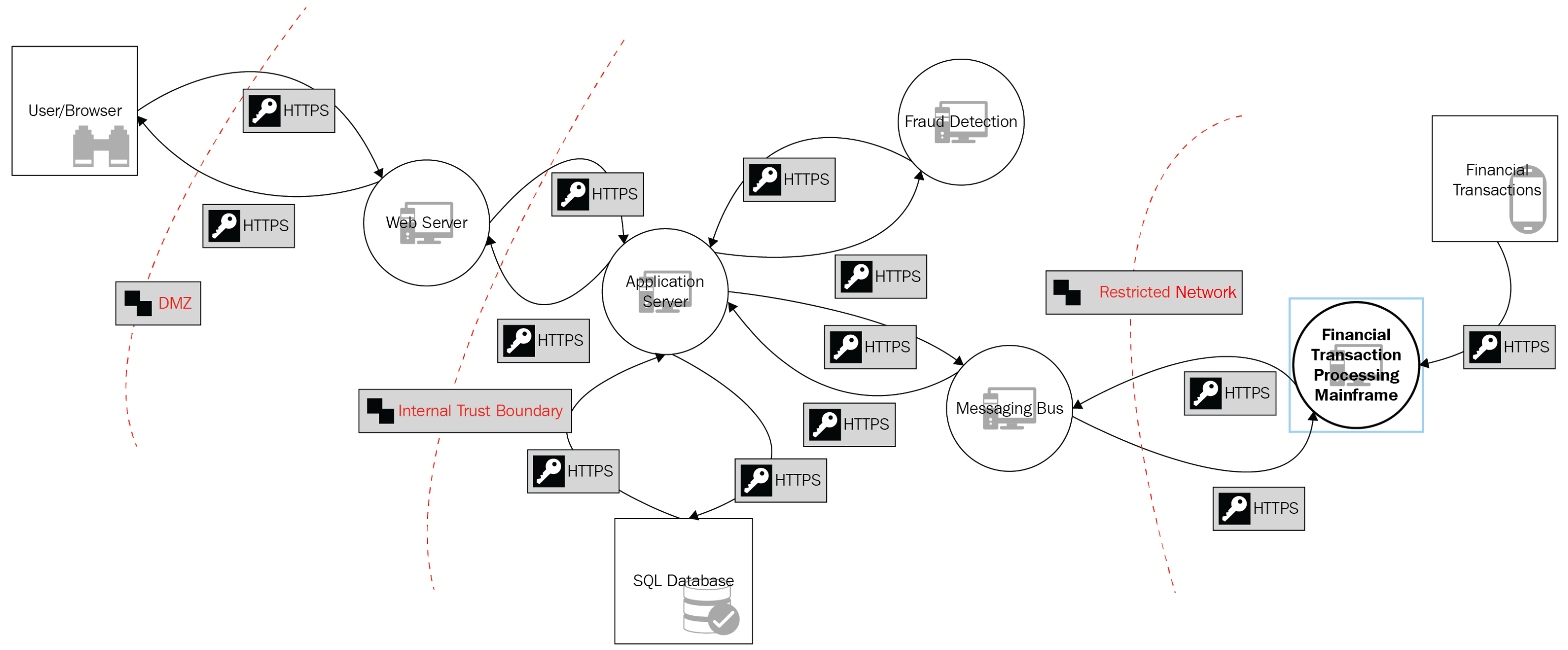
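Read literally, the relationship says that risk exists only where all three ingredients meet: an asset, a vulnerability on that asset, and a threat able to exploit that vulnerability. The sketch below expresses this as a set intersection. The asset names, weakness labels, and threat names are invented for illustration.

```python
# Hypothetical inventory: asset -> weaknesses present on it.
asset_vulnerabilities = {
    "vpn_gateway": {"default_credentials"},
    "file_server": {"unpatched_smb", "open_share"},
    "print_server": set(),
}

# Hypothetical threat catalog: threat -> weaknesses it is able to exploit.
threat_capabilities = {
    "credential_stuffing_bot": {"default_credentials", "weak_passwords"},
    "ransomware_operator": {"unpatched_smb"},
}


def find_risks(asset_vulns, threat_caps):
    """Return (asset, threat, vulnerability) triples where all three coincide."""
    risks = []
    for asset, vulns in sorted(asset_vulns.items()):
        for threat, exploitable in sorted(threat_caps.items()):
            # A risk needs a weakness that is both present and exploitable.
            for vuln in sorted(vulns & exploitable):
                risks.append((asset, threat, vuln))
    return risks


risks = find_risks(asset_vulnerabilities, threat_capabilities)
```

Note that `open_share` on the file server produces no entry: it is a vulnerability, but no threat in this catalog exploits it, so under this definition it is a weakness to track rather than a current risk.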

The following steps explain the threat modeling process:

- First, the scope of the analysis is defined and each component of the application and its infrastructure is documented.

- This is followed by developing a data flow diagram that shows how each of these components interacts. This helps us assess the control mechanism. Privileges are verified for data movement.

- Then, potential threats are mapped to these components and their risk impact is quantified.

- Finally, various security mitigation steps are evaluated that might already be in place to mitigate such threats. Here, we document the requirements for additional security controls (if applicable).
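The four steps above can be walked through with a very small model. Everything in this sketch is an assumption made for illustration: the component names, the data flows, the threats, and the choice to quantify risk as likelihood multiplied by impact on 1 to 5 scales.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    source: str
    target: str
    data: str


@dataclass
class Threat:
    component: str
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain), assumed scale
    impact: int      # 1 (minor) to 5 (severe), assumed scale
    mitigated: bool = False

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


# Step 1: define the scope and document each component.
components = {"browser", "web_app", "database"}

# Step 2: describe how the components interact (a data flow diagram as data).
flows = [
    Flow("browser", "web_app", "credentials"),
    Flow("web_app", "database", "SQL queries"),
]
# Any endpoint that is not a documented component points to a gap in the scope.
out_of_scope = {end for f in flows for end in (f.source, f.target)} - components

# Step 3: map threats to components and quantify them.
threats = [
    Threat("browser", "Session cookie theft", likelihood=3, impact=3),
    Threat("database", "Unencrypted backups", likelihood=2, impact=4, mitigated=True),
    Threat("web_app", "SQL injection via login form", likelihood=4, impact=5),
]

# Step 4: set aside what existing controls already cover; the rest needs new controls.
open_threats = sorted(
    (t for t in threats if not t.mitigated), key=lambda t: t.risk, reverse=True
)
```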

On the flip side, an attacker might conduct a similar exercise of their own:

- They would start by evaluating all possible entry points into the network/application/infrastructure.

- The next step would be to focus on the dataset or assets that would be accessible to them via these access points and then evaluate the value or possibility of using these as a pivot point.

- After this, the attacker crafts the exploit and executes it.

Now that we have a basic understanding of the threats that we may face, it is important to have standardized frameworks that can be referred to by professionals to assess the nature of these threats and the impact they may have.

Assessing the nature of threats

You, as a security professional, can use various industry-standard risk frameworks and methodologies to assess and quantify the nature of threats. Some of the prominent ones are discussed in the following subsections.

STRIDE

STRIDE is a security framework that classifies security threats into six categories, as follows:

- Spoofing of user identity

- Tampering

- Repudiation

- Information disclosure (privacy breach or data leak)

- Denial-of-service (DoS)

- Elevation of privilege

This was developed by Microsoft to verify security properties such as authenticity, integrity, non-repudiation, confidentiality, availability, and authorization.
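Each STRIDE category corresponds to the security property it violates (spoofing violates authenticity, tampering violates integrity, and so on, with repudiation set against non-repudiation). A minimal sketch of using that mapping to tag findings follows; the findings themselves are invented examples.

```python
from enum import Enum


class Stride(Enum):
    """STRIDE categories, each valued with the security property it violates."""
    SPOOFING = "authenticity"
    TAMPERING = "integrity"
    REPUDIATION = "non-repudiation"
    INFORMATION_DISCLOSURE = "confidentiality"
    DENIAL_OF_SERVICE = "availability"
    ELEVATION_OF_PRIVILEGE = "authorization"


# Hypothetical findings from a network review, tagged by category.
findings = [
    ("Login form accepts forged session tokens", Stride.SPOOFING),
    ("Router configuration can be changed without an audit log", Stride.REPUDIATION),
    ("Syslog traffic is sent in clear text", Stride.INFORMATION_DISCLOSURE),
    ("Forged ARP replies are accepted on the user VLAN", Stride.SPOOFING),
]


def group_by_property(tagged_findings):
    """Group finding descriptions under the security property they violate."""
    grouped = {}
    for description, category in tagged_findings:
        grouped.setdefault(category.value, []).append(description)
    return grouped


violated = group_by_property(findings)
```

Grouping by property rather than by category makes it easier to see which security guarantee is weakest in the environment under review.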

PASTA

Process for Attack Simulation and Threat Analysis (PASTA) is a risk-centric approach focused on identifying potential threat patterns. This is an integrated application threat analysis that focuses on an attacker-centric view that security analysts can leverage to develop an asset-centric defense strategy.

It has seven stages that build up to the impact of a threat. These stages are as follows:

- Definition of the Objectives for the Treatment of Risks

- Definition of the Technical Scope

- Application Decomposition and Assertion

- Threat Analysis

- Weakness and Vulnerability Analysis

- Attack Modeling and Simulation

- Risk Analysis and Management

Next, we will take a look at the Trike framework and see how it's used for security auditing for risk management.

Trike

Trike is a framework for security auditing from a risk management perspective. The process starts with defining the requirement model that the threat models are based on. The requirement model outlines the acceptable level of risk associated with each asset class (the actor-asset-action matrix):

This matrix is further broken down into actions such as creating, reading, updating, and deleting, along with associated privileges such as allowed, restricted, and conditional. Following this, possible threats are mapped alongside a risk value for each action, rated on a five-point scale based on its probability.
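A small sketch of such an actor-asset-action matrix follows. It is not taken from the Trike tooling; the actors, the asset, the privilege assignments, the risk values, and the choice to treat unlisted combinations as restricted are all assumptions made for the example.

```python
from enum import Enum


class Privilege(Enum):
    ALLOWED = "allowed"
    RESTRICTED = "restricted"
    CONDITIONAL = "conditional"


ACTIONS = ("create", "read", "update", "delete")

# Hypothetical actor-asset-action matrix.
MATRIX = {
    ("admin", "firewall_rules"): {
        "create": Privilege.ALLOWED,
        "read": Privilege.ALLOWED,
        "update": Privilege.ALLOWED,
        "delete": Privilege.CONDITIONAL,
    },
    ("analyst", "firewall_rules"): {
        "create": Privilege.RESTRICTED,
        "read": Privilege.ALLOWED,
        "update": Privilege.CONDITIONAL,
        "delete": Privilege.RESTRICTED,
    },
}

# Hypothetical risk values per action on a five-point scale (5 = most probable).
RISK = {
    ("analyst", "firewall_rules", "update"): 4,
    ("analyst", "firewall_rules", "delete"): 2,
    ("admin", "firewall_rules", "delete"): 3,
}


def privilege(actor, asset, action):
    """Look up a privilege; anything not listed is treated as restricted (an assumption)."""
    if action not in ACTIONS:
        raise ValueError(f"unknown action: {action}")
    return MATRIX.get((actor, asset), {}).get(action, Privilege.RESTRICTED)


def threats_at_or_above(min_risk):
    """Return (actor, asset, action) entries rated at least `min_risk`, highest first."""
    hits = [key for key, value in RISK.items() if value >= min_risk]
    return sorted(hits, key=lambda key: RISK[key], reverse=True)


top = threats_at_or_above(3)
```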

VAST

Visual, Agile, and Simple Threat modeling (VAST) is a threat modeling methodology designed for agile software development, with a focus on scaling the process across infrastructure and the SDLC. VAST aims to provide actionable outputs for various stakeholders, and its scalability and usability are key factors in its adoption in larger organizations. The following diagram illustrates a VAST model:

VAST utilizes two threat models – the application threat model and the operational threat model. The application threat model uses process flow diagrams to represent the architectural viewpoint, whereas the operational threat model uses data flow diagrams to represent the attacker's viewpoint.

OCTAVE

Operationally Critical Threat, Asset, and Vulnerability Evaluation (OCTAVE) is a security framework that's utilized for assessing risk levels and planning countermeasures against them. The focus is to reduce risk exposure to potential threats and determine the likelihood of an attack and its impact. It has three broad stages, as follows:

- Building asset-based threat profiles

- Classification of infrastructure vulnerabilities

- Creation of an overall security strategy and an activity plan for the successive exercises

Besides the original method, which targets large organizations, it has two well-known variants: OCTAVE-S, which is a simplified format suitable for smaller organizations, and OCTAVE Allegro, which is a streamlined format centered on information assets.