Containers and modern DevOps practices

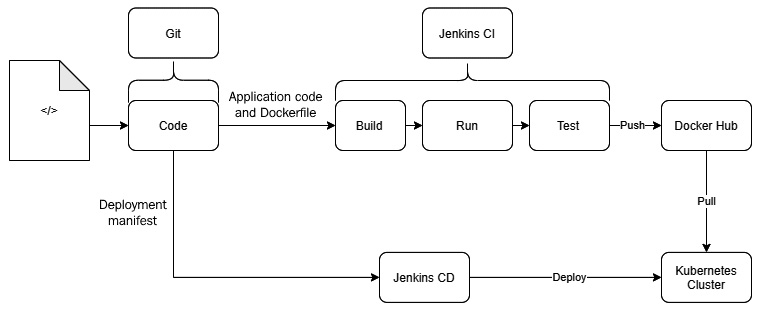

Containers follow DevOps practices right from the start. If you look at a typical container build and deployment workflow, this is what you get:

- First, code your app in whatever language you wish.

- Then, create a Dockerfile that contains a series of steps to install the application dependencies and the environment configuration needed to run your app.

- Next, use the Dockerfile to create container images by doing the following:

  a) Build the container image

  b) Run a container from the image

  c) Unit test the app running in the container

- Then, push the image to a container registry such as Docker Hub.

- Finally, create containers from container images and run them in a cluster.
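To make the workflow above concrete, here is a minimal Python sketch that assembles the docker CLI commands for the build, run-and-test, and push stages without executing them. The registry, image name, tag, and test command are hypothetical placeholders, and running the tests with python -m pytest inside the image assumes the image ships pytest.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PipelineConfig:
    registry: str   # hypothetical registry/namespace, e.g. "docker.io/example"
    image: str      # hypothetical image name
    tag: str        # typically a version or commit SHA
    # Assumption: the unit tests can be run inside the image with this command
    test_command: str = "python -m pytest"


def image_ref(cfg: PipelineConfig) -> str:
    """Fully qualified image reference: <registry>/<image>:<tag>."""
    return f"{cfg.registry}/{cfg.image}:{cfg.tag}"


def pipeline_commands(cfg: PipelineConfig) -> List[List[str]]:
    """Return the docker CLI invocations for each stage, in order (not executed)."""
    ref = image_ref(cfg)
    return [
        # a) Build the image from the Dockerfile in the current directory
        ["docker", "build", "-t", ref, "."],
        # b) + c) Run a throwaway container from the image and run the unit tests in it
        ["docker", "run", "--rm", ref, *cfg.test_command.split()],
        # Push the tested image to the registry
        ["docker", "push", ref],
    ]
```

In a CI job, you could pass each list to subprocess.run(cmd, check=True) so that a failing build or test stops the pipeline before anything is pushed.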

You can embed these steps beautifully in the CI/CD pipeline example shown here:

Figure 1.6 – Container CI/CD pipeline example

This means your application and its runtime dependencies are all defined in code. You are following configuration management from the very beginning, which allows developers to treat containers as ephemeral workloads: temporary, dispensable workloads where, if one disappears, you can spin up another without any functional impact. You can simply replace containers that misbehave, something that was never very elegant with virtual machines.
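The idea of ephemeral workloads can be illustrated with a tiny in-memory model. This is only a sketch: the Container class and the replace_unhealthy function are hypothetical stand-ins, not Docker API calls. Because everything a container needs is baked into its image, a misbehaving instance can be thrown away and replaced by a fresh one started from the same image.

```python
import itertools
from dataclasses import dataclass, field
from typing import List

# Hypothetical ID generator standing in for the runtime's container IDs
_next_id = itertools.count(1)


@dataclass
class Container:
    """In-memory stand-in for a running container (not a real Docker object)."""
    image: str
    container_id: int = field(default_factory=lambda: next(_next_id))
    healthy: bool = True


def replace_unhealthy(pool: List[Container]) -> List[Container]:
    """Keep healthy containers; swap each unhealthy one for a new container from the same image."""
    return [c if c.healthy else Container(image=c.image) for c in pool]
```

Nothing about the failed instance is repaired or preserved; the replacement is interchangeable with it because both come from the same image.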

Containers fit very well within modern CI/CD practices because you now have a standard way of building and deploying applications across environments, irrespective of the language you code in. You don't have to manage expensive build and deployment software, as much of what you need comes out of the box with containers.

Containers rarely run on their own; it is standard industry practice to plug them into a container orchestrator such as Kubernetes or to use a Container as a Service (CaaS) platform such as AWS ECS and EKS, Google Cloud Run and Kubernetes Engine, Azure Container Instances (ACI) and AKS, Oracle OCI and OKE, and others. Popular Function as a Service (FaaS) platforms such as AWS Lambda, Google Cloud Functions, Azure Functions, and Oracle Functions also run containers in the background. So, although these platforms abstract the underlying mechanism away from you, you may already be using containers without knowing it.

As containers are lightweight, you can build smaller parts of applications into containers so that you can manage them independently. Combine that with a container orchestrator such as Kubernetes, and you get a distributed microservices architecture running with ease. These smaller parts can then scale, auto-heal, and get released independently of others, which means you can release them into production quicker than before and much more reliably.
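An orchestrator such as Kubernetes works by repeatedly comparing the desired state you declare with what is actually running. The sketch below is a simplified, hypothetical reconcile step, not Kubernetes code: it computes, per service, how many replicas to start or stop. Each service is handled independently, so scaling one microservice never touches the others, and a crashed replica (which lowers the running count) is replaced on the next pass, which is the essence of auto-healing.

```python
from typing import Dict


def reconcile(desired: Dict[str, int], running: Dict[str, int]) -> Dict[str, int]:
    """Return replicas to start (positive) or stop (negative) for each service.

    Services whose running count already matches the desired count are omitted.
    """
    actions: Dict[str, int] = {}
    # Union of keys: services that should exist plus services still running
    for service in desired.keys() | running.keys():
        delta = desired.get(service, 0) - running.get(service, 0)
        if delta != 0:
            actions[service] = delta
    return actions
```

For example, with desired = {"cart": 3, "payments": 2} and running = {"cart": 1, "payments": 2, "legacy": 1}, the result is {"cart": 2, "legacy": -1}: cart scales up, payments is left alone, and the retired legacy service is stopped.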

You can also plug in a service mesh such as Istio on top. A service mesh is a layer of infrastructure components that lets the services of your microservices application discover one another, be listed and managed, and communicate with each other. With it, you get advanced Ops features such as traffic management, security, and observability with ease. You can then do cool stuff such as Blue/Green deployments, A/B testing, operational tests in production with traffic mirroring, geolocation-based routing, and much more.
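Traffic management features such as A/B testing and Blue/Green deployments come down to deciding which version of a service receives each request. Istio configures this declaratively through its own routing resources; the Python sketch below only illustrates the underlying idea and is not Istio code. It hashes a hypothetical user ID into one of 100 buckets and maps buckets to versions by percentage weight, so a given user consistently lands on the same version, a property A/B tests usually need.

```python
import hashlib
from typing import Dict


def pick_version(user_id: str, weights: Dict[str, int]) -> str:
    """Deterministically map a user to a version according to percentage weights."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100")
    # Stable bucket in [0, 100) derived from the (hypothetical) user ID
    bucket = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 100
    cumulative = 0
    # Sort versions so the bucket-to-version mapping does not depend on dict order
    for version, weight in sorted(weights.items()):
        cumulative += weight
        if bucket < cumulative:
            return version
    raise AssertionError("unreachable: weights sum to 100")
```

Switching the weights from {"blue": 100, "green": 0} to {"blue": 0, "green": 100} models a Blue/Green cutover, while {"v1": 90, "v2": 10} sends roughly a tenth of users to the new version.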

As a result, large and small enterprises are embracing containers faster than ever before, and the field is growing exponentially. According to businesswire.com, the application container market is growing at a compound annual rate of 31% and will reach US$6.9 billion by 2025. The cloud's own exponential growth of 30.3% per annum, with the market expected to reach over US$2.4 billion by 2025, has also contributed to this.
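To put the quoted growth rates in perspective, here is a small Python helper for compound growth. A 31% compound annual rate multiplies a value by (1 + 0.31)^n after n years; the five-year span used in the note below is chosen purely for illustration and is not taken from the report.

```python
import math


def growth_multiplier(annual_rate: float, years: float) -> float:
    """Factor by which a value grows after compounding at annual_rate for the given years."""
    return (1 + annual_rate) ** years


def doubling_time(annual_rate: float) -> float:
    """Years needed for a value to double at a constant compound annual rate."""
    return math.log(2) / math.log(1 + annual_rate)
```

At 31% per annum, growth_multiplier(0.31, 5) is about 3.86 and doubling_time(0.31) is about 2.57 years, so such a market roughly doubles every two and a half years; at 30.3%, the five-year multiplier is about 3.76.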

Therefore, modern DevOps engineers must understand containers and the relevant technologies to ship and deliver containerized applications effectively. This does not mean that virtual machines are no longer necessary, and we cannot ignore the role of Infrastructure as a Service (IaaS)-based solutions in the market, so we will also cover some configuration management in later chapters. With the advent of the cloud, Infrastructure as Code (IaC) has been gaining a lot of momentum, so we will also cover Terraform as an IaC tool.