Understanding monoliths and microservices

Let's put Kubernetes and Docker to one side for the moment and talk a little about how the internet and software development have evolved together over the past 20 years. This will help you gain a better understanding of where Kubernetes sits and what problem it solves.

Understanding the growth of the internet since the late 1990s

Since the late 1990s, the popularity of the internet has grown rapidly. Back in the 1990s, and even in the early 2000s, only a small fraction of the world's population used the internet. Today, billions of people use it, whether for email, web browsing, video games, or more.

There are now a lot of people on the internet, and we use it to meet many different needs. These needs are addressed by a huge number of applications running on many kinds of devices.

Additionally, the number of connected devices has increased, as each person can now have several devices of different kinds connected to the internet: laptops, desktop computers, smartphones, TVs, tablets, and more.

Today, we can use the internet to shop, to work, to be entertained, to read, or to do almost anything else. It has entered almost every part of our society and has led to a profound paradigm shift over the last 20 years. All of this has made software development critically important.

Understanding the need for more frequent software releases

To cope with this ever-increasing number of users who are always demanding more in terms of features, the software development industry had to evolve in order to make new software releases faster and more frequent.

Indeed, back in the 1990s, you could build an application, deploy it to production, and simply update it once or twice a year. Today, companies must be able to update their software in production, sometimes several times a day, whether to deploy a new feature, to integrate with a social media platform, to support the screen resolution of the latest fashionable smartphone, or even to release a patch for a security vulnerability identified the day before. Everything is far more complex today, and you must go faster than before.

We constantly need to update our software, and in the end, the survival of many companies directly depends on how often they are able to offer releases to their users. But how do we accelerate software development life cycles so that we can deliver new versions of our software to our users more frequently?

IT departments had to evolve, both organizationally and technically. Organizationally, they changed the way they managed projects and teams in order to shift to agile methodologies. Technically, technologies such as cloud computing platforms, containers, and virtualization were widely adopted and helped a lot to align technical agility with organizational agility. All of this was done to ensure more frequent software releases! So, let's focus on this evolution next.

Understanding the organizational shift to agile methodologies

From a purely organizational point of view, agile methodologies such as Scrum and Kanban, together with the DevOps culture, became the standard way to organize IT teams.

Typical IT departments that do not apply agile methodologies are often made up of three different teams, each with a single responsibility in the development and release life cycle.

Before the adoption of agile methodologies, there was a very strong separation between them:

- The business team: These teams are in charge of explaining the need for a new feature to other teams, especially the developers. Their job is hard because they need to translate business needs into concrete technical features that can be understood by the developers.

- The development team: These teams are in charge of writing the code. First, they take the specs from the business team, and then they implement the software and features. If they do not understand the need, the development of new features can go back and forth between them and the business team, which can lead to a massive loss of time. Even worse, back in the old days, developers had no clear vision of the type of environment their code would ultimately run on because it was kept at the sole discretion of the operations team.

- The operations team: These teams are in charge of deploying the software to the production servers and operating it. Often, they are not happy when they hear that a new version of a piece of software, which includes new features, has to be deployed, because management judges them on their ability to keep the app stable. In general, they are here to deploy something that was developed by another team without having a clear vision of what it contains and how it is configured, since they did not participate in its development.

These are what we call silos. The roles are clearly defined, people do not work together that much, and when something goes wrong, everyone loses time in an attempt to find the right information from the proper person.

This kind of siloed organization has led to major issues:

- A significantly longer development time

- Greater risk in the deployment of a release that might not work at all in production

These silos are essentially what agile methodologies and DevOps broke down. The main change they brought was to make people work together by creating multidisciplinary teams.

An agile team consists of a product owner describing concrete features by writing them as user stories that are readable by the developers who are working in the same team as them. Developers should have visibility over the production environment and the ability to deploy on top of it, preferably using a continuous integration and continuous deployment (CI/CD) approach. Testers should also be part of agile teams in order to write tests.

Simply put, by adopting agile methodologies and DevOps, these silos were broken and multidisciplinary teams capable of formalizing a need, implementing it, testing it, releasing it, and maintaining it in the production environment were created.

Important Note

Rest assured, even though we are currently discussing agile methodologies and the whole internet in a lot of detail, this book is really about Kubernetes! We just need to explain some of the problems that we have faced before introducing Kubernetes for real!

Agile development teams are complete operational units that are capable of handling all development steps on their own. An agile team should understand the business value brought by a new feature. They should have a minimal view of the software architecture, understand how to build it, how to test it, and the production environment it will run on.

That's the purpose of the expression You Build It, You Run It that you'll see everywhere when reading about this subject: an agile team should be able to cover all aspects of an app's development, release, and maintenance life cycles.

You just have to bear in mind that before this, teams were siloed and each had its own scope and working process. Now that we've covered the organizational transition brought by the adoption of agile methodologies, let's discuss the technical evolution that we've gone through over the past several years.

Understanding the shift from on-premises to the cloud

Having agile teams is very nice. But agility must also be applied to how the software is built and hosted.

With the aim of achieving faster and more frequent releases, agile software development teams had to revise two important aspects of software development and release:

- Hosting

- Software architecture

Today, apps are not just for a few hundred users but potentially for millions of users concurrently. Having more users on the internet also means needing more computing power to handle them. As a result, hosting an application became a very big challenge.

Back in the old days, there were two ways to get machines to host your apps. We call this on-premises hosting:

- Renting servers from established hosting providers

- Building your own data center, only for companies willing to invest a large amount of money in data centers

When your user base grows, you need more powerful machines to handle the load. The solution is either to purchase a more powerful server and install your app on it from scratch, or to order and rack new hardware if you manage your own data center. Today, a lot of companies are still using an on-premises solution, and often, it is not very flexible.

The game-changer was the adoption of the public cloud, which is the opposite of on-premises. The whole idea behind cloud computing is that big companies such as Amazon, Google, and Microsoft, which own a lot of data centers, decided to build virtualization on top of their massive infrastructure so that virtual machines could be created and managed through APIs. In other words, you can get virtual machines with just a few clicks or just a few commands.

Understanding why the cloud is well suited for scalability

Today, virtually anyone can get hundreds or thousands of servers, in just a few clicks, in the form of virtual machines or instances created on physical infrastructure maintained by cloud providers such as Amazon Web Services, Google Cloud Platform, and Microsoft Azure. A lot of companies have decided to migrate their workloads from on-premises to a cloud provider, and cloud adoption has been massive over the last few years.

Thanks to that, now, computing power is one of the simplest things you can get.

Cloud computing providers are now typical hosting solutions that agile teams possess in their arsenal. The main reason for this is that the cloud is extremely well suited to modern development.

Virtual machine configurations, CPUs, OSes, network rules, and more are publicly displayed and fully configurable, so there are no secrets for your team in terms of what the production environment is made of. Because of the programmable nature of cloud providers, it is very easy to replicate a production environment in a development or testing environment, providing more flexibility to teams and helping them face their challenges when developing software.

That's a useful advantage for an agile development team built around the DevOps philosophy that needs to manage development, release, and application maintenance in production.

Cloud providers have brought many benefits, as follows:

- Offering elasticity and scalability

- Helping to break up silos and enforcing agile methodologies

- Fitting well with agile methodologies and DevOps

- Offering low costs and flexible billing models

- Ensuring there is no need to manage physical servers

- Allowing virtual machines to be destroyed and recreated at will

- Providing more flexibility than renting a bare-metal machine monthly

Due to these benefits, the cloud is a wonderful asset in the arsenal of an agile development team. Essentially, you can build and replicate a production environment over and over without the hassle of managing the physical machine by yourself. The cloud enables you to scale your app based on the number of users using it or the computing resources they are consuming. You'll make your app highly available and fault-tolerant. The result is a better user experience for your end users.
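To make the idea of scaling with the number of users more concrete, here is a minimal sketch of the arithmetic involved. The function name `instances_needed` and the capacity of 500 users per instance are hypothetical values chosen for illustration; they do not come from any cloud provider, each of which offers its own autoscaling mechanisms.

```python
import math


def instances_needed(concurrent_users: int, users_per_instance: int, minimum: int = 1) -> int:
    """Return how many virtual machine instances are required for a given load.

    users_per_instance is an assumed capacity figure, not a real benchmark.
    """
    if users_per_instance <= 0:
        raise ValueError("users_per_instance must be positive")
    if concurrent_users < 0:
        raise ValueError("concurrent_users cannot be negative")
    # Round up: a partially used instance still has to exist.
    required = math.ceil(concurrent_users / users_per_instance)
    # Always keep at least `minimum` instances running.
    return max(minimum, required)


if __name__ == "__main__":
    for users in (50, 5_000, 1_000_000):
        count = instances_needed(users, users_per_instance=500)
        print(f"{users} users -> {count} instance(s)")
```

On the cloud, growing from 1 to 2,000 instances in this sketch is a matter of API calls, whereas on-premises it would mean ordering and installing hardware.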

Important Note

Please note that Kubernetes can run both on the cloud and on-premises. Kubernetes is very versatile, and you can even run it on a Raspberry Pi. However, you'll discover that it is often more convenient to run it on the cloud because of the benefits the cloud provides. Kubernetes and the public cloud are a good match, but you are not required to run it on the cloud.

Now that we have explained what the cloud brought, let's move on to software architecture, as over the years, a few things have also changed there.

Essentially, software architecture consists of design paradigms that you can choose from when developing software. In the 2020s, we can name two main architectures:

- Monolithic architecture

- Microservices architecture

Exploring the monolithic architecture

In the past, applications were mostly built as monoliths. A typical monolithic application consists of a single process, a single binary, or a single package.

This single component is responsible for implementing the entire business logic of the software. Monoliths are a good choice if you want to develop fairly simple applications that might not necessarily be updated frequently in production. Why? Well, because monoliths have one major drawback. If your monolith becomes unstable or crashes for some reason, your entire application will become unavailable:

Figure 1.1 – A monolith application consists of one big component that contains all your software

The monolithic architecture can save you a lot of time during development, and that's perhaps the main benefit you'll find by choosing this architecture. However, it also has many disadvantages. Here are a few of them:

- A failed deployment to production can break your whole application.

- Scaling activities become difficult to achieve; if you fail to scale, your whole application might become unavailable.

- A failure of any kind on a monolith can lead to the complete outage of your app.

In the 2010s, these drawbacks started to cause real problems. With the increase in the frequency of deployments, it became necessary to think of a new architecture that would be capable of supporting frequent deployments and shorter update cycles, while reducing the risk of general unavailability of the application. This is why the microservices architecture was designed.

Exploring the microservices architecture

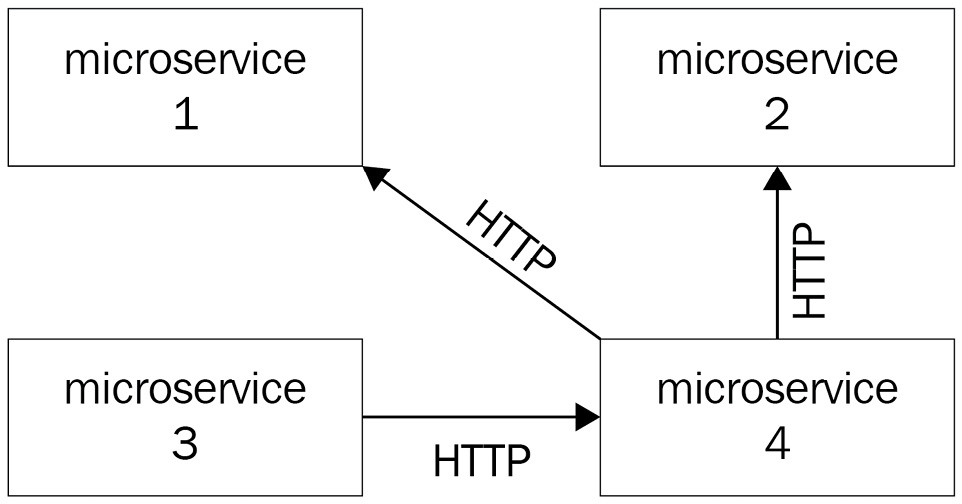

The microservices architecture consists of developing your software application as a suite of independent micro-applications. Each of these applications, which is called a microservice, has its own versioning, life cycle, environment, and dependencies. Additionally, it can have its own deployment life cycle. Each of your microservices must only be responsible for a limited number of business rules, and all of your microservices, when used together, make up the application. Think of a microservice as real full-featured software on its own, with its own life cycle and versioning process.

Since each microservice is only supposed to hold a subset of the features of the entire application, it has to be reachable by others in order to expose its functions. You have to be able to get data from a microservice, and you might also want to push data into it. You can make your microservices accessible through widely supported protocols such as HTTP or AMQP, and they need to be able to communicate with each other if needed.

That's why microservices are generally built as web services that are accessible through HTTP REST APIs. This is something that greatly differs from the monolithic architecture:

Figure 1.2 – A microservice architecture where different microservices communicate with the HTTP protocol
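As an illustration of a microservice exposed through an HTTP REST API, here is a minimal sketch that uses only the Python standard library. The service itself, the `/prices/<item>` route, and the `PRICES` data are hypothetical and exist only for this example; a real microservice would typically use a web framework and persistent storage.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import ProxyHandler, build_opener

# Hypothetical in-memory data owned by this single microservice.
PRICES = {"book": 12.5, "pen": 1.2}

# Opener that ignores any system proxy, since we only call localhost here.
_opener = build_opener(ProxyHandler({}))


class PriceHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Expect paths such as /prices/book
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "prices" and parts[1] in PRICES:
            status, payload = 200, {"item": parts[1], "price": PRICES[parts[1]]}
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example output quiet


def start_price_service() -> HTTPServer:
    """Start the service on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), PriceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_price(base_url: str, item: str) -> float:
    """Act as another microservice calling the price service over HTTP."""
    with _opener.open(f"{base_url}/prices/{item}", timeout=5) as response:
        return json.loads(response.read().decode("utf-8"))["price"]


if __name__ == "__main__":
    server = start_price_service()
    base_url = f"http://127.0.0.1:{server.server_port}"
    print("Price of a book:", fetch_price(base_url, "book"))
    server.shutdown()
    server.server_close()
```

The caller only depends on the URL and the JSON format, not on how the price service is implemented, which is what allows each microservice to evolve on its own.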

Another key aspect of the microservice architecture is that microservices need to be decoupled: if a microservice becomes unavailable or unstable, it must not affect the other microservices or the stability of the entire application. You must be able to provision, scale, start, update, or stop each microservice independently without affecting anything else. If your microservices need to work with a database engine, bear in mind that even the database must be decoupled. Each microservice should have its own SQL database, and the same goes for any other backing service. So, if the database of microservice A crashes, it won't affect microservice B:

Figure 1.3 – A microservice architecture where different microservices communicate with the HTTP protocol and also with a dedicated SQL server; this way, the microservices are isolated and have no common dependencies
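The database isolation described above can be sketched as follows. This is a toy model under stated assumptions: the `Microservice` class and its methods are hypothetical, and an in-memory SQLite database stands in for the dedicated SQL server that each microservice would have in practice.

```python
import sqlite3


class Microservice:
    """A toy service that owns a private database (hypothetical design)."""

    def __init__(self, name: str):
        self.name = name
        # Each instance gets its own in-memory database; nothing is shared.
        self.db = sqlite3.connect(":memory:")
        self.db.execute("CREATE TABLE items (value TEXT)")

    def store(self, value: str) -> None:
        self.db.execute("INSERT INTO items (value) VALUES (?)", (value,))
        self.db.commit()

    def count(self) -> int:
        return self.db.execute("SELECT COUNT(*) FROM items").fetchone()[0]

    def crash_database(self) -> None:
        # Simulate a database outage by closing the connection.
        self.db.close()

    def is_healthy(self) -> bool:
        try:
            self.count()
            return True
        except sqlite3.Error:
            # Querying a closed connection raises a sqlite3.Error subclass.
            return False


if __name__ == "__main__":
    service_a = Microservice("A")
    service_b = Microservice("B")
    service_a.store("order-1")
    service_b.store("invoice-1")

    service_a.crash_database()
    print("A healthy:", service_a.is_healthy())
    print("B healthy:", service_b.is_healthy())
```

Because service B never touches the database of service A, the simulated outage leaves B fully operational. With a shared database, both services would fail together.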

The key rule is to decouple as much as possible so that your microservices are fully independent. Because they are meant to be independent, microservices can also have completely different technical environments and be implemented in different languages. You can have one microservice implemented in Go, another one in Java, and another one in PHP, and all together they form one application. In the context of a microservice architecture, this is not a problem. Because HTTP is a standard, they will be able to communicate with each other even if their underlying technologies are different.

Microservices must be decoupled from other microservices, but they must also be decoupled from the operating system running them. Microservices should not operate at the host system level but at a level above it. You should be able to provision them, at will, on different machines without relying on a strong dependency on the host system; that's why microservice architectures and containers are a good combination.

If you need to release a new feature in production, you simply deploy the microservices that are impacted by the new feature version. The others can remain the same.
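A minimal sketch of this selective deployment is to compare the versions currently running with the versions you want and deploy only the differences. The function name `services_to_deploy`, the service names, and the version numbers below are all hypothetical.

```python
from __future__ import annotations


def services_to_deploy(running: dict[str, str], desired: dict[str, str]) -> list[str]:
    """Return the names of the microservices that must be (re)deployed.

    A service is selected when it is not running yet or when its running
    version differs from the desired one. Unchanged services are left alone.
    """
    return sorted(
        name for name, version in desired.items() if running.get(name) != version
    )


if __name__ == "__main__":
    # Versions currently in production (illustrative values).
    running = {"cart": "1.4.0", "catalog": "2.1.0", "payment": "3.0.2"}
    # The new feature only touches the cart service.
    desired = {"cart": "1.5.0", "catalog": "2.1.0", "payment": "3.0.2"}

    print("To deploy:", services_to_deploy(running, desired))
```

In this example, only the cart service is redeployed; the catalog and payment services keep running untouched, which limits the risk of each release.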

As you can imagine, the microservice architecture has tremendous advantages in the context of modern application development:

- It is easier to achieve frequent production deliveries with minimal impact on the stability of the whole application.

- You can upgrade only a specific microservice each time, not the whole application.

- Scaling activities are smoother since you might only need to scale specific services.

However, on the other hand, the microservice architecture has a few disadvantages, too:

- The architecture requires more planning and is considered to be hard to develop.

- There are problems in managing each microservice's dependencies.

Indeed, microservice applications are considered hard to develop, and it is easy to get them wrong. This approach might be hard to understand, especially for junior developers. In addition, dependency management becomes complex since all microservices can potentially have different dependencies.

Choosing between monolithic and microservices architectures

Presented in this way, you might think that microservices are the better of the two architectures. However, this is not always the case.

Although the monolithic architecture is older than the microservices architecture, monolithic applications are not dead yet, and they can still be a good choice in certain situations. Microservices are not necessarily the ideal answer for every project. If your application is simple, if there are only a few developers on your team working on your project, or if you can tolerate outages when you deploy a new version in production, then you can still opt for a monolithic architecture.

On the other hand, if your application is more complex, if there are many developers with different skills on your team, or if you have a high level of requirements in terms of operational quality in production, scalability, and availability, then you should opt for a microservice architecture.

The problem is that microservices are more complex to develop and manage in production, since managing microservices essentially consists of managing multiple applications that each have their own dependencies and life cycles. Thankfully, the rise of Docker has enabled a lot of developers to adopt the microservice architecture.