Eventually you'll need to start splitting your application to accommodate growth. This recipe discusses some components and strategies you might consider, including Ultra Monkey, a methodology that helps you navigate the different clustering components you can use in your application.

Chances are your setup will grow more complex than a single web server plus a database server. When that happens, it's time to start thinking about how to scale your architecture. You can start with a simple separation of components (as outlined in the Optimizing your solution performance recipe), such as moving the databases or the backup components to different servers, or you can split the application entirely.

In this section, you will set up Nginx as a frontend server. Nginx looks up each requested URI in Memcache (memcached) and falls back to Apache when the object is not cached. This gives you in-memory caching of objects and an event-based frontend. Apart from moving Apache to another port, it does not require any change to your Apache configuration.

Change the port Apache listens on so that it doesn't conflict with Nginx:

Run `sudo editor /etc/apache2/ports.conf` and change 80 to 8080 in the Listen and NameVirtualHost directives.

Run `sudo editor /etc/apache2/sites-enabled/*` and change 80 to 8080 in the VirtualHost directive.

Run `sudo service apache2 restart`.
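The edits above can be scripted if you have several sites or servers to convert. The following Python sketch is only an illustration. The names `move_to_port` and `rewrite_file` are hypothetical and are not part of any Debian tool. The sketch assumes the directives use the default Debian form (`Listen 80`, `NameVirtualHost *:80`, `<VirtualHost *:80>`). It leaves other ports, such as 443, untouched.

```python
import re

# Hypothetical helper: matches the port-80 forms of the Listen, NameVirtualHost
# and <VirtualHost> directives, optionally prefixed by an address such as "*:".
# Assumes the default Debian layout; commented-out lines are not matched.
PORT_80_DIRECTIVE = re.compile(
    r"^(\s*(?:Listen|NameVirtualHost|<VirtualHost)\s+(?:\S+:)?)80\b",
    re.MULTILINE,
)


def move_to_port(config_text: str, new_port: int = 8080) -> str:
    """Return config_text with port 80 replaced by new_port in those directives."""
    return PORT_80_DIRECTIVE.sub(lambda m: f"{m.group(1)}{new_port}", config_text)


def rewrite_file(path: str, new_port: int = 8080) -> bool:
    """Rewrite one Apache file in place; return True if anything changed."""
    with open(path, encoding="utf-8") as fh:
        original = fh.read()
    updated = move_to_port(original, new_port)
    if updated != original:
        with open(path, "w", encoding="utf-8") as fh:
            fh.write(updated)
    return updated != original
```

For example, running `rewrite_file("/etc/apache2/ports.conf")` as root would turn `Listen 80` into `Listen 8080`. Running it twice does no harm, because `8080` no longer matches the pattern. Keep any backup copies outside /etc/apache2/sites-enabled, because Apache may read every file in that directory.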

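Because port 8080 will only be accessed locally by Nginx, you can use Netfilter to block connections to it from other hosts: `sudo iptables -A INPUT -p tcp --dport 8080 ! -i lo -j DROP`. The `! -i lo` match exempts the loopback interface. Without it, the rule would also drop Nginx's own connections to localhost:8080, because loopback traffic also passes through the INPUT chain.

Install Nginx (if it isn't installed already) using the following steps:

Run `sudo apt-get install nginx`.

Run `sudo editor /etc/nginx/sites-enabled/default`. Inside its server block, find the `location /` section and replace it with the following two locations (shown here within their enclosing server block):

```nginx
server {
    location / {
        # The cache key is the request URI, e.g. /icon.jpg
        set $memcached_key $uri;
        memcached_pass localhost:11211;
        default_type text/html;
        # On a cache miss (404) or if memcached is unreachable (502/504),
        # hand the request to Apache and return Apache's status code
        error_page 404 502 504 = @fallback;
    }

    location @fallback {
        # Keep the original Host header for Apache's name-based virtual hosts
        proxy_set_header Host $host;
        proxy_pass http://localhost:8080;
    }
}
```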
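The following toy Python model illustrates the request flow of this configuration. It is not Nginx code. `serve`, the `fallback` callable standing in for Apache, and the dictionary standing in for memcached are all hypothetical. The model shows two details:

- The cache key is Nginx's `$uri`, which excludes the query string, so `/icon.jpg?v=2` is looked up as `/icon.jpg`.
- A cache miss is handed to the fallback location, which proxies the full request to Apache.

The model ignores the other normalization Nginx applies to `$uri`, such as decoding percent-escapes.

```python
from typing import Callable, Dict

Backend = Callable[[str], bytes]


def serve(request_target: str, cache: Dict[str, bytes], fallback: Backend) -> bytes:
    """Toy model of 'location /' plus 'location @fallback' (not real Nginx code)."""
    # $uri is the path without the query string, so drop everything after '?'
    uri = request_target.split("?", 1)[0]
    # set $memcached_key $uri; memcached_pass ...: look the key up in the cache
    cached = cache.get(uri)
    if cached is not None:
        return cached  # hit: memcached answers and Apache is never contacted
    # miss: memcached_pass yields a 404, error_page sends it to @fallback,
    # and proxy_pass forwards the original request to Apache on port 8080
    return fallback(request_target)
```

For example, with `cache = {"/icon.jpg": b"..."}`, a request for `/icon.jpg?v=2` is answered from the cache, while `/index.php?page=1` reaches the fallback unchanged.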

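Restart Nginx with `sudo service nginx restart`.

Install memcached with `sudo apt-get install memcached`.

Now you need to load objects into memcached. The key for each object is its URI. If a client requests http://example.com/icon.jpg and you want icon.jpg served from Memcache, you must first store it under the key /icon.jpg. Here's a simple PHP script that does this (it requires PHP's memcached extension):

```php
<?php
$mc = new Memcached();
$mc->addServer("localhost", 11211);
// Store raw bytes: compressed values would reach clients as garbage,
// because Nginx serves whatever is stored under the key unchanged
$mc->setOption(Memcached::OPT_COMPRESSION, false);

// Read the file from Apache's document root and store it under its URI
$value = file_get_contents('/var/www/icon.jpg');
$mc->set("/icon.jpg", $value);
?>
```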

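To load many objects into Memcache at once, you can also use command-line tools such as memccp (from the libmemcached-tools package) or a small script. Make sure the stored keys match the URIs that Nginx will look up. If a key is not found in Memcache, Nginx falls back to Apache.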
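If you prefer a script, the following Python sketch loads a whole document root in one go. It stores each file under its URI, which is the key Nginx looks up. The script talks to memcached directly over its plain-text protocol (`set <key> <flags> <exptime> <bytes>`), so it needs no extra modules. It is only an illustration. `iter_uri_files`, `set_command`, and `load_docroot` are hypothetical names. The sketch makes three assumptions:

- Files map one-to-one to URIs under a single document root such as /var/www.
- File names are plain ASCII without spaces.
- Each file fits within memcached's default 1 MB item size limit.

```python
import os
import socket
from typing import Iterator, Tuple


def iter_uri_files(docroot: str) -> Iterator[Tuple[str, str]]:
    """Yield (uri, path) pairs, e.g. /var/www/icon.jpg -> ("/icon.jpg", path)."""
    for dirpath, _dirnames, filenames in os.walk(docroot):
        for name in filenames:
            path = os.path.join(dirpath, name)
            relative = os.path.relpath(path, docroot).replace(os.sep, "/")
            yield "/" + relative, path


def set_command(key: str, value: bytes, exptime: int = 0) -> bytes:
    """Build a text-protocol 'set' request; flags=0 stores the raw bytes."""
    # memcached keys: at most 250 bytes, no whitespace or control characters
    if (not key.isascii() or len(key) > 250
            or any(ch.isspace() or ord(ch) < 32 or ord(ch) == 127 for ch in key)):
        raise ValueError(f"not a valid memcached key: {key!r}")
    header = b"set %s 0 %d %d\r\n" % (key.encode("ascii"), exptime, len(value))
    return header + value + b"\r\n"


def load_docroot(docroot: str, host: str = "localhost", port: int = 11211) -> int:
    """Store every file under docroot in memcached, keyed by URI; return the count."""
    stored = 0
    with socket.create_connection((host, port)) as sock, sock.makefile("rb") as replies:
        for uri, path in iter_uri_files(docroot):
            with open(path, "rb") as fh:
                sock.sendall(set_command(uri, fh.read()))
            reply = replies.readline()
            if reply != b"STORED\r\n":
                raise RuntimeError(f"{uri}: memcached replied {reply!r}")
            stored += 1
    return stored
```

For example, `load_docroot("/var/www")` would store /var/www/icon.jpg under /icon.jpg, the same key the PHP script above uses. An exptime of 0 means "no expiry". Memcached may still evict items under memory pressure, in which case Nginx simply falls back to Apache.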

As mentioned before, Ultra Monkey is not a product but a methodology for setting up service clusters. It leverages existing open source technologies such as Heartbeat and Linux Virtual Server (LVS). Heartbeat provides high availability, and LVS provides load balancing. Ultra Monkey supports different architectures, or topologies (http://www.ultramonkey.org/3/topologies/ha-lb-overview.html). Here we will cover the load-balancing one.

You need two or more web servers for this. It's assumed that all of them run the same application, connected to a single database.

Note

This particular scenario (only two servers) actually requires more setup (ARP replies, packet forwarding), which is described in full on the Ultra Monkey site. It has been abbreviated here for space. The procedure works well when you have several backends and one "gateway" balancer running ldirectord. You could add Heartbeat to a second gateway to get a highly available director for a large number of nodes in your cluster.

Install LVS' ldirectord via

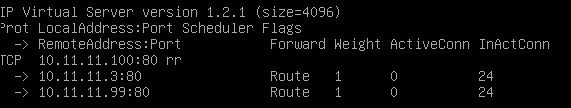

sudo apt-get install ldirectord.Now, let's assume web01 has the IP address

10.11.11.3and web02 has10.11.11.99. You need to decide in which one you will run the ldirectord software or, the virtual IP address of your cluster. In our case, we'll use10.11.11.100as a virtual address, and the director will be10.11.11.99.In both nodes, enable the virtual address using an alias or by adding a new address to the existing interface,

sudo ip addr add 10.11.11.100/24 dev eth0(this change will be temporary until you declare a new virtual interface on/etc/network/interfaces).In your cluster director, run

sudo editor /etc/ldirectord.cfand type in:checktimeout=3 checkinterval=1 autoreload=yes quiescent=yes virtual=10.11.11.100:80 real=10.11.11.3:80 gate real=10.11.11.99:80 gate service=http request="index.html" receive="It works" scheduler=rr protocol=tcp checktype=negotiate checkport=80
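The checktype=negotiate, quiescent=yes, and scheduler=rr settings in this file work together. The following toy Python model sketches that interplay. It is not ldirectord's implementation; the real scheduling is done by the kernel's IPVS code. `RealServer`, `negotiate_check`, `apply_check_results`, and `RoundRobin` are hypothetical names used only for illustration. The model works as follows:

- The check fetches http://<real server>/index.html and looks for "It works".
- A server that fails the check keeps its entry, but its weight is set to 0.
- The round-robin picker skips weight-0 servers.

```python
import http.client
import urllib.request
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class RealServer:
    address: str     # "ip:port", as in the real= lines
    weight: int = 1  # quiescent=yes: a failed server stays listed with weight 0


def negotiate_check(address: str, request: str = "index.html",
                    receive: str = "It works", timeout: float = 3.0) -> bool:
    """Fetch http://<address>/<request> and look for <receive> (timeout ~ checktimeout)."""
    try:
        with urllib.request.urlopen(f"http://{address}/{request}", timeout=timeout) as resp:
            return receive in resp.read().decode("utf-8", errors="replace")
    except (OSError, http.client.HTTPException):  # refused, timeout, HTTP error, bad reply
        return False


def apply_check_results(servers: List[RealServer], results: Dict[str, bool]) -> None:
    """Quiesce failed servers (weight 0) instead of removing them."""
    for server in servers:
        server.weight = 1 if results[server.address] else 0


class RoundRobin:
    """scheduler=rr: hand out servers in turn, skipping weight-0 ones."""

    def __init__(self, servers: List[RealServer]) -> None:
        self.servers = servers
        self._next = 0

    def pick(self) -> Optional[str]:
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server.weight > 0:
                return server.address
        return None  # every real server is quiesced
```

On a real director, `sudo ipvsadm -L -n` shows the same effect: a quiesced server is still listed, with a weight of 0.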

Notice that ldirectord does more than round-robin load balancing (scheduler=rr). It also checks each real server by requesting index.html and looking for the words "It works" in the response (this is the default Apache index file). This helps detect a badly configured web server that should no longer be part of the cluster. Because quiescent=yes is set, such a server is not removed from the LVS table. Instead, its weight is set to 0, so it receives no new connections until its check passes again.

Make sure ldirectord is enabled by checking its settings with `sudo editor /etc/default/ldirectord`.

Now start ldirectord using `sudo service ldirectord start`.

Check the configuration with `sudo ipvsadm -L -n`.

Your cluster should look as follows:

There are many other approaches to proxying, clustering, and caching on Linux and Debian, both open source and proprietary. Examples include Red Hat Cluster Suite for application clustering and OpenStack for large-scale, infrastructure-level clustering. Evaluate all the options to find an architecture that matches not only your current solution but also your potential future needs.