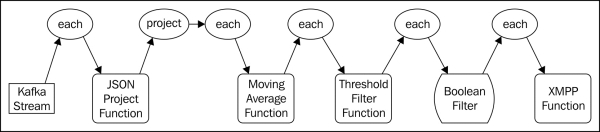

With the means to write our log data to Kafka, we're ready to turn our attention to the implementation of a Trident topology to perform the analytical computation. The topology will perform the following operations:

1. Receive and parse the raw JSON log event data.
2. Extract and emit the necessary fields.
3. Update an exponentially weighted moving average function.
4. Determine whether the moving average has crossed a specified threshold.
5. Filter out events that do not represent a state change, that is, keep only events where the rate moves above or below the threshold.
6. Send an instant message (XMPP) notification.
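
The moving average, threshold check, and state-change filter in the operations above can be sketched in plain Java without any Storm dependencies. This is a minimal illustration, not the implementation used by the topology: it smooths raw sample values instead of event timestamps, and the class names (`Ewma`, `ThresholdChangeDetector`, `MovingAverageSketch`), the smoothing factor, the threshold, and the assumption that the detector starts in the `BELOW` state are all hypothetical choices made for the example.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified exponentially weighted moving average over numeric samples (hypothetical helper). */
class Ewma {
    private final double alpha;        // smoothing factor in (0, 1]; larger values favor recent samples
    private double average;
    private boolean initialized = false;

    Ewma(double alpha) {
        if (alpha <= 0.0 || alpha > 1.0) {
            throw new IllegalArgumentException("alpha must be in (0, 1]");
        }
        this.alpha = alpha;
    }

    /** Folds one sample into the average and returns the updated value. */
    double update(double sample) {
        if (!initialized) {
            average = sample;          // the first sample seeds the average
            initialized = true;
        } else {
            average = alpha * sample + (1.0 - alpha) * average;
        }
        return average;
    }
}

/** Reports only transitions across a threshold, suppressing repeated states (hypothetical helper). */
class ThresholdChangeDetector {
    enum State { BELOW, ABOVE }

    private final double threshold;
    private State last = State.BELOW;  // assumption: the stream starts below the threshold

    ThresholdChangeDetector(double threshold) {
        this.threshold = threshold;
    }

    /** Returns the new state if it differs from the previous one, otherwise null. */
    State check(double value) {
        State current = value > threshold ? State.ABOVE : State.BELOW;
        if (current == last) {
            return null;               // no state change, so the event is filtered out
        }
        last = current;
        return current;
    }
}

class MovingAverageSketch {
    /** Runs the samples through the average and the detector, collecting one entry per state change. */
    static List<String> run(double[] samples, double alpha, double threshold) {
        Ewma ewma = new Ewma(alpha);
        ThresholdChangeDetector detector = new ThresholdChangeDetector(threshold);
        List<String> notifications = new ArrayList<>();
        for (double sample : samples) {
            ThresholdChangeDetector.State change = detector.check(ewma.update(sample));
            if (change != null) {
                notifications.add(change.name());  // stands in for sending a notification
            }
        }
        return notifications;
    }

    public static void main(String[] args) {
        double[] samples = {1, 1, 10, 10, 1, 1, 1};
        System.out.println(run(samples, 0.5, 5.0));  // prints [ABOVE, BELOW]
    }
}
```

Seven samples produce only two notifications here, because the detector emits a value only when the smoothed average crosses the threshold. Without that filter, every sample that leaves the average above the threshold would trigger its own message.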

The topology is depicted in the following diagram with the Trident stream operations at the top and stream processing components at the bottom: