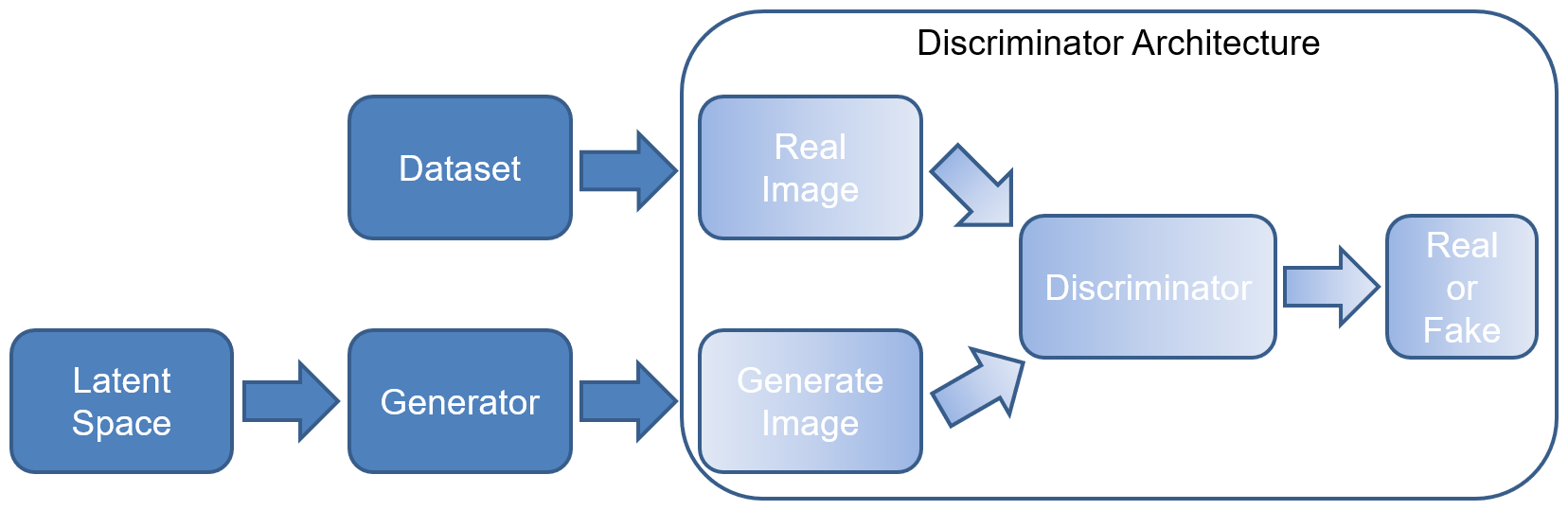

In the GAN architecture, the generator produces the data; we now introduce the discriminator architecture. The discriminator takes an image, either a real one or one produced by the generator, and determines whether it is real or fake.

In this case, we are focused solely on the neural network that we are going to create. This doesn't involve the training step, which we'll cover in the training recipe in this chapter:

The basic components of the discriminator architecture

In simple architectures, the discriminator is typically a plain Convolutional Neural Network (CNN). This is the type of neural network we'll be using in our first few examples.

Here are a few steps to illustrate how we would build a discriminator:

- First, we'll create a convolutional neural network that classifies its input as real or fake (binary classification)

- We'll create a dataset of real data and use our generator to create a dataset of fake data

- We'll train the discriminator model on the real and fake data

- We'll learn to balance the training of the discriminator with the training of the generator: if the discriminator becomes too good, the generator gets little useful feedback and its training can diverge
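The first three steps can be sketched without any deep learning framework. The example below is a minimal illustration under stated assumptions, not the recipe's implementation: a one-weight logistic classifier stands in for the CNN, real and fake "images" are single numbers drawn from two made-up Gaussian distributions, and every name (`real`, `fake`, `bce`, `w`, `b`, `lr`) is hypothetical.

```python
import math
import random

random.seed(0)  # fixed seed so the run is repeatable

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Step 2: a "real" dataset and a "fake" one. The fake samples are a stand-in
# for generator output (assumption: both are simple 1-D Gaussians).
real = [random.gauss(2.0, 0.5) for _ in range(200)]
fake = [random.gauss(-2.0, 0.5) for _ in range(200)]

# Label real samples 1 and fake samples 0, then shuffle them together.
data = [(x, 1.0) for x in real] + [(x, 0.0) for x in fake]
random.shuffle(data)

# Step 1 (stand-in): a logistic classifier instead of a CNN.
w, b, lr = 0.0, 0.0, 0.1

def bce(samples, w, b):
    """Mean binary cross-entropy of the classifier over labeled samples."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for x, y in samples:
        p = sigmoid(w * x + b)
        total -= y * math.log(p + eps) + (1.0 - y) * math.log(1.0 - p + eps)
    return total / len(samples)

loss_before = bce(data, w, b)

# Step 3: train on the mixed real/fake data with plain stochastic gradient descent.
for epoch in range(20):
    for x, y in data:
        p = sigmoid(w * x + b)
        grad = p - y  # derivative of the cross-entropy w.r.t. the pre-activation
        w -= lr * grad * x
        b -= lr * grad

loss_after = bce(data, w, b)
accuracy = sum((sigmoid(w * x + b) > 0.5) == (y == 1.0) for x, y in data) / len(data)

print(f"loss before: {loss_before:.3f}, loss after: {loss_after:.3f}")
print(f"accuracy on real vs. fake: {accuracy:.2f}")
```

In a real recipe the classifier would be a CNN and the fake samples would come from the generator, but the labeling scheme (1 for real, 0 for fake) and the binary cross-entropy objective carry over unchanged.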

So, why even use the discriminator in this case? The discriminator brings the strengths of discriminative models to the GAN and acts as an adaptive loss function for the GAN as a whole: rather than being fixed in advance, it adapts to the underlying distribution of the data. This is one of the reasons that deep learning discriminative models are so successful today. In the past, techniques relied too heavily on directly computing some heuristic on the underlying data distribution. Deep neural networks are able to adapt and learn based on the distribution of the data, and the GAN technique takes advantage of that.
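To make the idea of an adaptive loss function more concrete, the sketch below writes out the standard GAN losses in terms of the discriminator's scores. It assumes the discriminator outputs a probability in (0, 1) that its input is real, and it uses the common non-saturating form of the generator loss; the function names are hypothetical and the recipes in this chapter may set up their losses differently.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for one real score and one fake score.

    d_real: discriminator output for a real image, in (0, 1)
    d_fake: discriminator output for a generated image, in (0, 1)
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return -math.log(d_fake)

# Early in training the discriminator confidently flags the generated image.
early = generator_loss(0.1)
# Later it can no longer tell, and scores the generated image at 0.5.
late = generator_loss(0.5)

print(f"generator loss early: {early:.3f}, late: {late:.3f}")
print(f"discriminator loss at chance level: {discriminator_loss(0.5, 0.5):.3f}")
```

The generator never sees the real data directly. Its loss depends only on the score the discriminator assigns to its output, so as the discriminator learns, the signal the generator is trained against changes with it.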

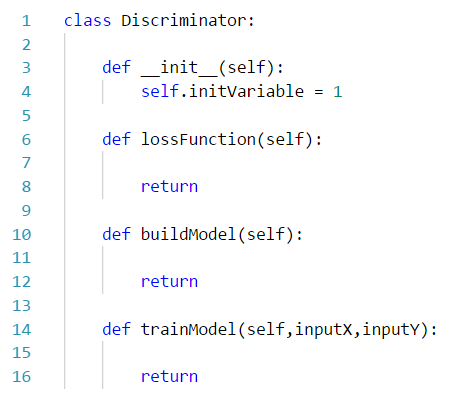

Ultimately, the discriminator is going to evaluate both real and generated images for authenticity. Initially, the real images will score high on the scale, while the generated images will score lower. Eventually, as the generator improves, the discriminator will have trouble distinguishing between the generated and real images. The discriminator class will rely on building a model and, potentially, an initial loss function. The following class template will be used throughout this book to represent the discriminator:

Class template for developing the discriminator—these represent the basic components we need to implement for each of our discriminator classes

In the end, the discriminator will be trained along with the generator in a sequential model, so we'll only use the trainModel method in this class for specific architectures. For the sake of simplicity and uniformity, the method will go unimplemented in most recipes.
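As a rough illustration of what such a template might look like, here is a minimal skeleton in plain Python. Only the `trainModel` name comes from the text; `input_shape`, `build_model`, and the default shape are assumptions made for this sketch, and a concrete recipe would replace the placeholder with its own CNN.

```python
class Discriminator:
    """Hypothetical skeleton of a discriminator class (illustrative only)."""

    def __init__(self, input_shape=(28, 28, 1)):
        # Assumed default: small single-channel images.
        self.input_shape = input_shape
        self.model = self.build_model()

    def build_model(self):
        # Placeholder: a concrete recipe would build and return its
        # real-versus-fake classifier (typically a CNN) here.
        return None

    def trainModel(self):
        # Left unimplemented: the discriminator is normally trained
        # together with the generator rather than on its own.
        raise NotImplementedError("trainModel is only used for specific architectures")


discriminator = Discriminator()
print(discriminator.input_shape)
```

Raising `NotImplementedError` is one way to leave the method unimplemented while making accidental calls fail loudly; a bare `pass` would serve equally well.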