Now that you have a fairly firm understanding of the core machine learning concepts, we can now dive into Microsoft's ML.NET framework. ML.NET is Microsoft's premier machine learning framework. It provides an easy-to-use framework to train, create, and run models with relative ease all in the confines of the .NET ecosystem.

Microsoft's ML.NET was announced and released (version 0.1) in May 2018 at Microsoft's developer conference BUILD in Seattle, Washington. The project itself is open source with an MIT License on GitHub (https://github.com/dotnet/machinelearning) and has seen a total of 17 updates since the first release at the time of writing.

Some products using ML.NET internally at Microsoft include Chart Decisions in Excel, Slide Designs in PowerPoint, Windows Hello, and Azure Machine Learning. This emphasizes the production-readiness of ML.NET for your own production deployments.

ML.NET, from the outset, was designed and built to facilitate the use of machine learning for C# and F# developers using an architecture that would come naturally to someone familiar with .NET Framework. Until ML.NET arrived, there was not a full-fledged and supported framework where you could not only train but also run a model without leaving the .NET ecosystem. Google's TensorFlow, for instance, has an open-source wrapper written by Miguel de Icaza available on GitHub (https://github.com/migueldeicaza/TensorFlowSharp); however, at the time of writing this book, most workflows require the use of Python to train a model, which can then be consumed by a C# wrapper to run a prediction.

In addition, Microsoft was intent on supporting all of the major platforms .NET developers have grown accustomed to publishing their applications in the last several years. Here are some examples of a few of the platforms, with the frameworks they targeted in parentheses:

- Web (ASP.NET)

- Mobile (Xamarin)

- Desktop (UWP, WPF, and WinForms)

- Gaming (MonoGame and SharpDX)

- IoT (.NET Core and UWP)

Later in this book, we will implement several real-world applications on most of these platforms to demonstrate how to integrate ML.NET into various application types and platforms.

Technical details of ML.NET

With the release of ML.NET 1.4, the targeting of .NET Core 3.0 or later is recommended to take advantage of the hardware intrinsics added as part of .NET Core 3.0. For those unfamiliar, .NET Core 2.x (and earlier) along with .NET Framework are optimized for CPUs with Streaming SIMD Extensions (SSE). Effectively, these instructions provide an optimized path for performing several CPU instructions on a dataset. This approach is referred to as Single Instruction Multiple Data (SIMD). Given that the SSE CPU extensions were first added in the Pentium III back in 1999 and later added by AMD in the Athlon XP in 2001, this has provided an extremely backward-compatible path. However, this also does not allow code to take advantage of all the advancements made in CPU extensions made in the last 20 years. One such advancement is the Advanced Vector Extensions (AVX) available on most Intel and AMD CPUs created in 2011 or later.

This provides eight 32-bit operations in a single instruction, compared to the four SSE provides. As you can probably guess, machine learning can take advantage of this doubling of instructions. For CPUs in .NET Core 3 that are not supported yet (such as ARM), .NET Core 3 automatically falls back to a software-based implementation.

Components of ML.NET

As mentioned previously, ML.NET was designed to be intuitive for experienced .NET developers. The architecture and components are very similar to the patterns found in ASP.NET and WPF.

At the heart of ML.NET is the MLContext object. Similar to AppContext in a .NET application, MLContext is a singleton class. The MLContext object itself provides access to all of the trainer catalogs ML.NET offers (some are offered by additional NuGet packages). You can think of a trainer catalog in ML.NET as a specific algorithm such as binary classification or clustering.

Here are some of the ML.NET catalogs:

- Anomaly detection

- Binary classification

- Clustering

- Forecasting

- Regression

- Time series

These six groups of algorithms were reviewed earlier in this chapter and will be covered in more detail in subsequent dedicated chapters in this book.

In addition, added recently in ML.NET 1.4 was the ability to import data directly from a database. This feature, while in preview at the time of writing, can facilitate not only an easier feature extraction process, but also expands the possibilities of making real-time predictions in an existing application or pipeline possible. All major databases are supported, including SQL Server, Oracle, SQLite, PostgreSQL, MySQL, DB2, and Azure SQL. We will explore this feature in Chapter 4, Classification Model, with a console application using a SQLite database.

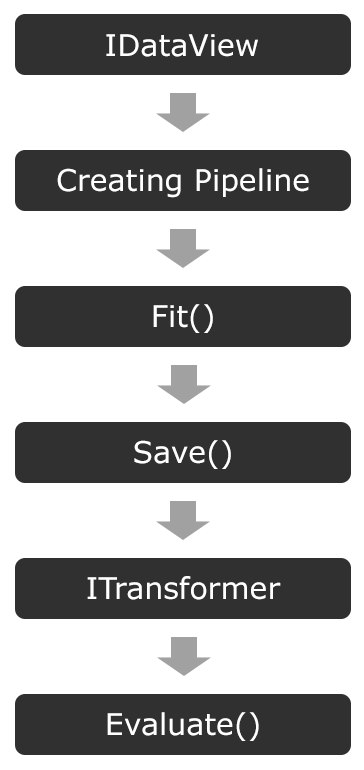

The following diagram presents the high-level architecture of ML.NET:

Here, you can see an almost exact match to the traditional machine learning process. This was intentionally done to reduce the learning curve for those familiar with other frameworks. Each step in the architecture can be summarized as follows:

- IDataView: This is used to store the loaded training data into memory.

- Creating a Pipeline: The pipeline creation maps the IDataView object properties to values to send to the model for training.

- Fit(): Regardless of the algorithm, after the pipeline has been created, calling Fit() kicks off the actual model training.

- Save(): As the name implies, this saves the model (in a binary format) to a file.

- ITransformer: This loads the model back into memory to run predictions.

- Evaluate(): As the name implies, this evaluates the model (Chapter 2, Setting Up the ML.NET Environment will dive further into the evaluation architecture).

Over the course of this book, we will dive into these methods more thoroughly.

Extensibility of ML.NET

Lastly, ML.NET, like most robust frameworks, provides considerable extensibility. Microsoft has since launched added extensibility support to be able to run the following externally trained model types, among others:

- TensorFlow

- ONNX

- Infer.Net

- CNTK

TensorFlow (https://www.tensorflow.org/), as mentioned previously, is Google's machine learning framework with officially supported bindings for C++, Go, Java, and JavaScript. Additionally, TensorFlow can be accelerated with GPUs and, as previously mentioned, Google's own TPUs. In addition, like ML.NET, it offers the ability to run predictions on a wide variety of platforms, including iOS, Android, macOS, ARM, Linux, and Windows. Google provides several pre-trained models. One of the more popular models is the image classification model, which classifies objects in a submitted image. Recent improvements in ML.NET have enabled you to create your own image classifier based on that pre-trained model. We will be covering this scenario in detail in Chapter 12, Using TensorFlow with ML.NET.

ONNX (https://onnx.ai/), an acronym for Open Neural Network Exchange Format, is a widely used format in the data science field due to the ability to export to a common format. ONNX has converters for XGBoost, TensorFlow, scikit-learn, LibSVM, and CoreML, to name a few. Microsoft's native support of the ONNX format in ML.NET will not only allow better extensibility with existing machine learning pipelines but also increase the adoption of ML.NET in the machine learning world. We will utilize a pre-trained ONNX format model in Chapter 13, Using ONNX with ML.NET.

Infer.Net is another open source Microsoft machine learning framework that focuses on probabilistic programming. You might be wondering what probabilistic programming is. At a high level, probabilistic programming handles the grey area where traditional variable types are definite, such as Booleans or integers. Probabilistic programming uses random variables that have a range of values that the result could be, akin to an array. The difference between a regular array and the variables in probabilistic programming is that for every value, there is a probability that the specific value would occur.

A great real-world use of Infer.Net is the technology behind Microsoft's TrueSkill. TrueSkill is a rating system that powers the matchmaking in Halo and Gears of War, where players are matched based on a multitude of variables, play types, and also, maps can all be attributed to how even two players are. While outside the scope of this book, a great whitepaper diving further into Infer.Net and probabilistic programming, in general, can be found here: https://dotnet.github.io/infer/InferNet_Intro.pdf.

CNTK, also from Microsoft, which is short for Cognitive Toolkit, is a deep learning toolkit with a focus on neural networks. One of the unique features of CNTK is its use of describing neural networks via a directed graph. While outside the scope of this book (we will cover neural networks in Chapter 12 with TensorFlow), the world of feed-forward Deep Neural Networks, Convolutional Neural Networks, and Recurrent Neural Networks is extremely fascinating. To dive further into neural networks specifically, I would suggest Hands-On Neural Network Programming with C#, also from Packt.

Additional extensibility into Azure and other model support such as PyTorch (https://pytorch.org/) is on the roadmap, but no timeline has been established at the time of writing.