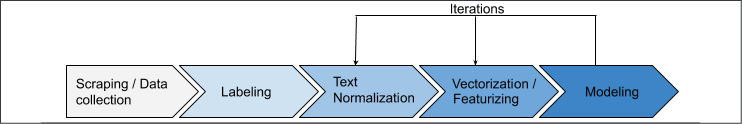

A typical text processing workflow

To understand how to process text, it is important to understand the general workflow for NLP. The following diagram illustrates the basic steps:

Figure 1.1: Typical stages of a text processing workflow

The first two steps of the process in the preceding diagram involve collecting labeled data. A supervised model, or even a semi-supervised one, needs such data to operate. The next two steps are usually normalizing and featurizing the data. Models have a hard time processing raw text as is: a given text contains a lot of hidden structure that must be processed and exposed before a model can use it, and these two steps focus on exactly that. The last step is building a model with the processed inputs.

While NLP has some unique models, this chapter uses only a simple deep neural network and focuses more on normalization and vectorization/featurization. Although the diagram may give the impression of linearity, the last three stages often operate in a cycle. In industry, each additional feature requires more effort to develop and more resources to keep running, so it is important that every feature adds value. Taking this approach, we will use a simple model to validate different normalization, vectorization, and featurization steps. Now, let's look at each of these stages in detail.
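As a rough illustration of the stages described above, the following sketch walks a handful of labeled sentences through normalization, vectorization, and model building using only the Python standard library. Everything in it is an assumption made for illustration: the toy sentences and labels are invented, the function names (`normalize`, `build_vocabulary`, `vectorize`, `train_perceptron`, `predict`) are hypothetical, and a single perceptron stands in for the simple deep neural network used in this chapter.

```python
import re
from collections import Counter

# Stages 1-2: collected and labeled data.
# These examples are invented for illustration (1 = spam, 0 = not spam).
LABELED_DATA = [
    ("Win a FREE prize now!!!", 1),
    ("Free entry, claim your prize", 1),
    ("Are we still meeting for lunch?", 0),
    ("See you at the meeting tomorrow", 0),
]


def normalize(text):
    """Stage 3: lowercase the text and keep only alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def build_vocabulary(token_lists):
    """Map each distinct token to a column index, in sorted order."""
    vocab = sorted({tok for tokens in token_lists for tok in tokens})
    return {tok: i for i, tok in enumerate(vocab)}


def vectorize(tokens, vocab):
    """Stage 4: turn tokens into a bag-of-words count vector."""
    counts = Counter(tokens)
    # Dicts preserve insertion order, so columns follow the sorted vocabulary.
    return [counts.get(tok, 0) for tok in vocab]


def predict(vector, weights, bias):
    """Return 1 if the weighted sum is positive, otherwise 0."""
    score = sum(w * x for w, x in zip(weights, vector)) + bias
    return 1 if score > 0 else 0


def train_perceptron(vectors, labels, epochs=10):
    """Stage 5: a perceptron standing in for the chapter's neural network."""
    weights = [0.0] * len(vectors[0])
    bias = 0.0
    for _ in range(epochs):
        for vector, label in zip(vectors, labels):
            error = label - predict(vector, weights, bias)
            if error:
                # Nudge the weights toward the correct label.
                weights = [w + error * x for w, x in zip(weights, vector)]
                bias += error
    return weights, bias


texts, labels = zip(*LABELED_DATA)
token_lists = [normalize(text) for text in texts]
vocab = build_vocabulary(token_lists)
vectors = [vectorize(tokens, vocab) for tokens in token_lists]
weights, bias = train_perceptron(vectors, list(labels))

# Score the model on its own training data, purely as a smoke test.
correct = sum(predict(v, weights, bias) == y for v, y in zip(vectors, labels))
accuracy = correct / len(labels)
print(f"Training accuracy: {accuracy:.2f}")
```

Keeping each stage in its own function mirrors the cycle among the last three stages: a different `normalize` or `vectorize` can be swapped in and the same simple model retrained to check whether the change adds value.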