DataRobot architecture

DataRobot is one of the best-known commercial tools for automated ML (AutoML). It seems only fitting that a technology meant to automate everything should itself benefit from automation. As you work through the data science process, you will notice that many tasks are repetitive and standardized enough to warrant automation. DataRobot has done an excellent job of capturing such tasks to increase the speed, scale, and efficiency of building and deploying ML models. We will cover these aspects in great detail in this book. That said, many tasks in this process still require decisions, actions, and trade-offs to be made by data scientists and data analysts, and we will highlight these as well. The following figure shows a high-level view of the DataRobot architecture:

Figure 1.2 – Key components of the DataRobot architecture

The figure shows five key layers of the architecture and the corresponding components. In the following sections, we will describe each layer and how it enables a data science project.

Hosting platform

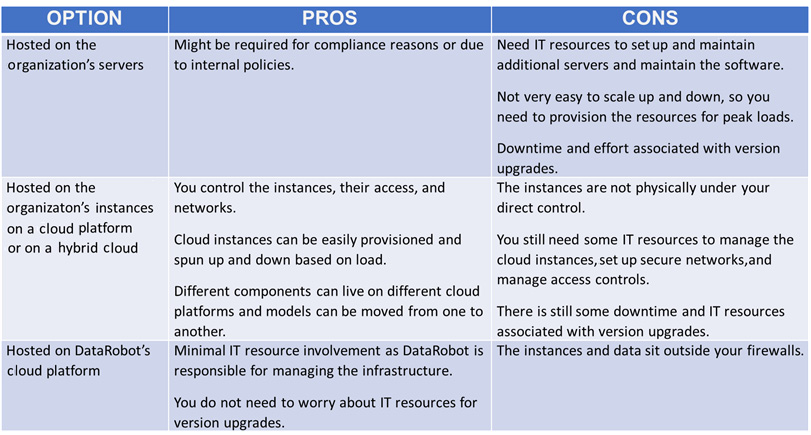

The DataRobot environment is accessed via a web browser. The environment itself can be hosted on an organization's own servers, on an organization's instances on a cloud platform such as AWS, or on DataRobot's cloud. Each hosting option has pros and cons, and the right choice depends on your organization's needs. Some of these are discussed at a high level in Table 1.1:

Figure 1.3 – Pros and cons of various hosting options

As you can gather from this table, DataRobot offers a lot of choices, so you can pick the option that best suits your environment. It is important to involve your IT, information security, and legal teams in this decision. Let's now look at how data comes into DataRobot.

Data sources

Datasets can be brought into DataRobot from local files (CSV, XLSX, and more), by connecting to a relational database, from a URL, or from the Hadoop Distributed File System (HDFS) if it is set up for your environment. Datasets can be brought directly into a project or placed in the AI Catalog, where they can be shared across multiple projects. DataRobot also has integrations and technology alliances with several data management system providers.

Core functions

DataRobot provides a fairly comprehensive set of capabilities to support the entire ML process, either through the core product or through add-on components such as Paxata, which provides easy-to-use data preparation and Exploratory Data Analysis (EDA) capabilities. Paxata is beyond the scope of this book, so we will focus on the capabilities of the core product. DataRobot automatically performs several exploratory analyses and presents them to the user, both to provide insights into the datasets and to catch any data quality issues that may need to be fixed.
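As a rough illustration of the kind of data quality checks that automated EDA surfaces, the following Python sketch profiles each column of a CSV file for missing values, distinct counts, and constant columns. It uses only the standard library. The `profile_columns` function and the sample data are hypothetical. This is not how DataRobot implements its EDA.

```python
import csv
import io


def profile_columns(csv_text):
    """Compute simple per-column data quality statistics from CSV text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    profile = {}
    for column in reader.fieldnames or []:
        values = [row[column] for row in rows]
        # Treat empty strings (or fields missing from short rows) as missing values.
        present = [v for v in values if v is not None and v.strip() != ""]
        distinct = set(present)
        profile[column] = {
            "missing_rate": 1 - len(present) / len(values) if values else 0.0,
            "distinct": len(distinct),
            # A column with at most one distinct value carries no information for a model.
            "constant": len(distinct) <= 1,
        }
    return profile


sample = "age,income,country\n34,52000,US\n,61000,US\n45,,US\n29,48000,US\n"
for name, stats in profile_columns(sample).items():
    print(name, stats)
```

Here the sketch would flag `country` as constant and report that a quarter of the `age` and `income` values are missing. These are the kinds of issues you would want to resolve before modeling.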

The automated modeling functions are the most critical capability offered by DataRobot. They include determining which algorithms to try on the selected problem, performing basic feature engineering, automatically building models, tuning hyperparameters, building ensemble models, and presenting the results. Note that DataRobot mainly supports supervised ML algorithms and time series algorithms. Although it has capabilities for Natural Language Processing (NLP) and image processing, these functions are not comprehensive. DataRobot has also recently been adding MLOps capabilities, providing functions for rapidly deploying models as REST APIs, monitoring data drift and service health, and tracking model performance. DataRobot continues to add capabilities, such as support for geospatial data and bias detection.

These tasks are normally done using programming languages such as R and Python and can be fairly time-consuming. The time spent coding up data analysis, model building, output analysis, and deployment can be significant, and a lot of additional time typically goes into debugging, fixing errors, and making the code robust. Depending on the size and complexity of the model, this can take anywhere from weeks to months; DataRobot can reduce it to days. The time saved can in turn be used to deliver projects faster, build more robust models, and better understand the problem being solved.
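To make the automated modeling loop more concrete, the following toy sketch shows the general pattern in plain Python. It fits several candidate algorithms, including a few hyperparameter settings, ranks them on validation data in a leaderboard, and blends the top two into a simple ensemble. It works on a single numeric feature. The function names, such as `run_autopilot`, are hypothetical. DataRobot's actual model pipelines, data partitioning, and tuning are far more sophisticated.

```python
import statistics


def mean_model(train):
    """Baseline: always predict the mean target value seen in training."""
    mean_y = statistics.fmean(y for _, y in train)
    return lambda x: mean_y


def linear_model(train):
    """Ordinary least squares fit of y = a * x + b on a single feature."""
    mx = statistics.fmean(x for x, _ in train)
    my = statistics.fmean(y for _, y in train)
    sxx = sum((x - mx) ** 2 for x, _ in train)
    a = sum((x - mx) * (y - my) for x, y in train) / sxx
    b = my - a * mx
    return lambda x: a * x + b


def knn_model(k):
    """Return a builder for k-nearest-neighbour regression; k is the hyperparameter."""
    def build(train):
        def predict(x):
            nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
            return statistics.fmean(y for _, y in nearest)
        return predict
    return build


def rmse(model, data):
    """Root mean squared error of a model on (x, y) pairs."""
    return (sum((model(x) - y) ** 2 for x, y in data) / len(data)) ** 0.5


def run_autopilot(train, valid, candidates):
    """Fit every candidate, score it on validation data, and return a ranked leaderboard."""
    scored = []
    for name, builder in candidates.items():
        model = builder(train)
        scored.append((rmse(model, valid), name, model))
    scored.sort(key=lambda entry: entry[0])

    # Blend the two best models by averaging their predictions (a simple ensemble).
    best, second = scored[0][2], scored[1][2]

    def blend(x):
        return (best(x) + second(x)) / 2

    scored.append((rmse(blend, valid), "blend(top 2)", blend))
    scored.sort(key=lambda entry: entry[0])
    return [(name, score) for score, name, _ in scored]


train = [(x, 2 * x + 1) for x in range(0, 20, 2)]
valid = [(x, 2 * x + 1) for x in range(1, 20, 4)]
candidates = {
    "mean baseline": mean_model,
    "linear": linear_model,
    # Each k is a separate candidate: a crude grid search over one hyperparameter.
    "knn k=1": knn_model(1),
    "knn k=3": knn_model(3),
}
for name, score in run_autopilot(train, valid, candidates):
    print(f"{name:15s} RMSE={score:.3f}")
```

Because the synthetic data is exactly linear, the linear model tops this leaderboard. With real data, you would also cross-validate rather than rely on a single holdout split.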

External interactions

DataRobot functions can be accessed in three ways: through a comprehensive user interface (which we will describe in the next section), through a client library that provides programmatic access to DataRobot capabilities from Python or R via an API, and through a REST API for use by external applications. DataRobot also lets you create applications that business users can use to make data-driven decisions.
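To show what calling a deployed model from an external application involves, the following standard-library Python sketch builds an HTTP request that scores JSON records, without sending it. The URL path `/predApi/v1.0/deployments/<id>/predictions` and the `Authorization` and `DataRobot-Key` headers reflect our understanding of DataRobot's Prediction API and are assumptions to verify against your instance's documentation. The host, IDs, credentials, and the `build_prediction_request` helper are hypothetical placeholders.

```python
import json
import urllib.request


def build_prediction_request(host, deployment_id, api_token, datarobot_key, records):
    """Build (but do not send) a JSON scoring request for a deployed model."""
    # Assumed URL pattern for the Prediction API; check your instance's documentation.
    url = f"https://{host}/predApi/v1.0/deployments/{deployment_id}/predictions"
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_token}",
        # Assumed: some installations (e.g., managed cloud) also expect this key header.
        "DataRobot-Key": datarobot_key,
    }
    body = json.dumps(records).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


request = build_prediction_request(
    host="predictions.example.com",  # hypothetical prediction server host
    deployment_id="YOUR_DEPLOYMENT_ID",
    api_token="YOUR_API_TOKEN",
    datarobot_key="YOUR_DATAROBOT_KEY",
    records=[{"age": 34, "income": 52000}],
)
print(request.get_method(), request.full_url)
# Sending it requires a live deployment and valid credentials:
# with urllib.request.urlopen(request) as response:
#     predictions = json.load(response)
```

Keeping request construction separate from sending makes the payload easy to inspect and test before pointing it at a real deployment.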

Users

While many people believe that DataRobot is only for data analysts and data scientists who do not like to code, it also offers substantial capabilities for data scientists who can code, and it can greatly increase the productivity of any data science team. There is also some support for business users in specific use cases. Other systems can integrate with DataRobot models via the API, which can be used to add intelligence to external systems or to store predictions in external databases. Several tool integrations are also available through DataRobot's partner program.
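Storing predictions in an external database can be as simple as parsing the scoring response and inserting rows. The following Python sketch uses the standard library's `sqlite3` module as a stand-in for your database. The response shape (a `data` list of records with `rowId` and `prediction` fields), the `predictions` table, and `store_predictions` are assumptions for illustration. Check the actual response format of your deployment before relying on it.

```python
import json
import sqlite3


def store_predictions(conn, response_text):
    """Parse a scoring response and upsert its predictions into a SQL table."""
    # Assumed response shape: {"data": [{"rowId": 0, "prediction": 0.82}, ...]}
    records = json.loads(response_text)["data"]
    conn.execute(
        "CREATE TABLE IF NOT EXISTS predictions ("
        "row_id INTEGER PRIMARY KEY, prediction REAL)"
    )
    # INSERT OR REPLACE makes re-running the same batch idempotent.
    conn.executemany(
        "INSERT OR REPLACE INTO predictions (row_id, prediction) VALUES (?, ?)",
        [(r["rowId"], r["prediction"]) for r in records],
    )
    conn.commit()
    return len(records)


conn = sqlite3.connect(":memory:")  # stand-in for an external database
sample_response = '{"data": [{"rowId": 0, "prediction": 0.82}, {"rowId": 1, "prediction": 0.13}]}'
print(store_predictions(conn, sample_response), "rows stored")
```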