Creating an alert to monitor an Azure storage account

We can create alerts on a number of available metrics to monitor an Azure storage account. To create an alert, we define the trigger condition and the action to perform when the alert is triggered. In this recipe, we'll create an alert that sends an email when the Used capacity metric for an Azure storage account exceeds 5 MB. The 5 MB threshold is not a recommended value; it is deliberately kept low to demonstrate how alerts work.

Getting ready

Before you start, perform the following steps:

- Open a web browser and log in to the Azure portal at https://portal.azure.com.

- Make sure you have an existing storage account. If not, create one using the Provisioning an Azure storage account using the Azure portal recipe in Chapter 1, Creating and Managing Data in Azure Data Lake.

How to do it…

Follow these steps to create an alert:

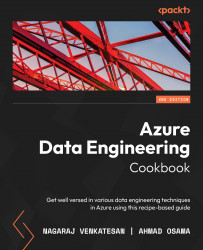

- In the Azure portal, locate and open the storage account. In our case, the storage account is packtadestoragev2. On the storage account page, search for alert and open Alerts in the Monitoring section:

Figure 2.28 – Selecting Alerts

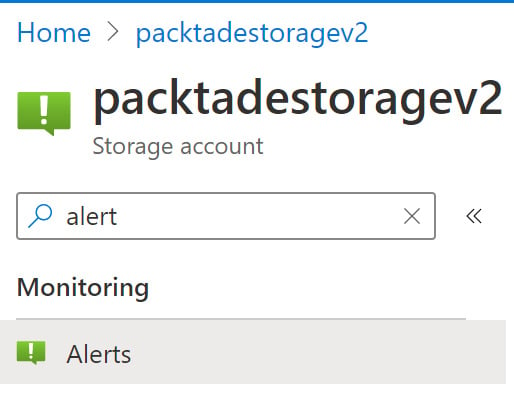

- On the Alerts page, click on + New alert rule:

Figure 2.29 – Adding a new alert

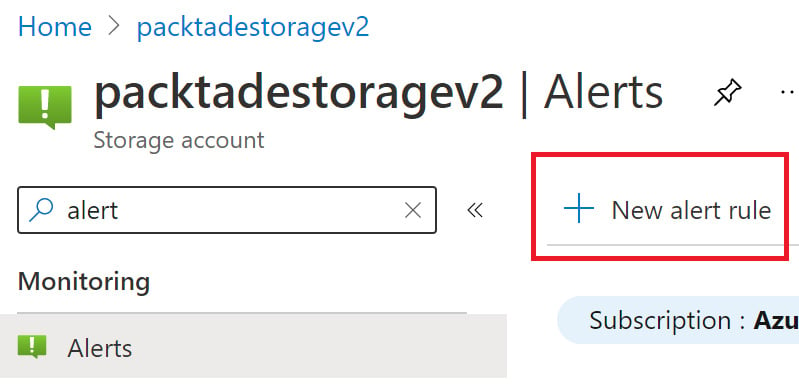

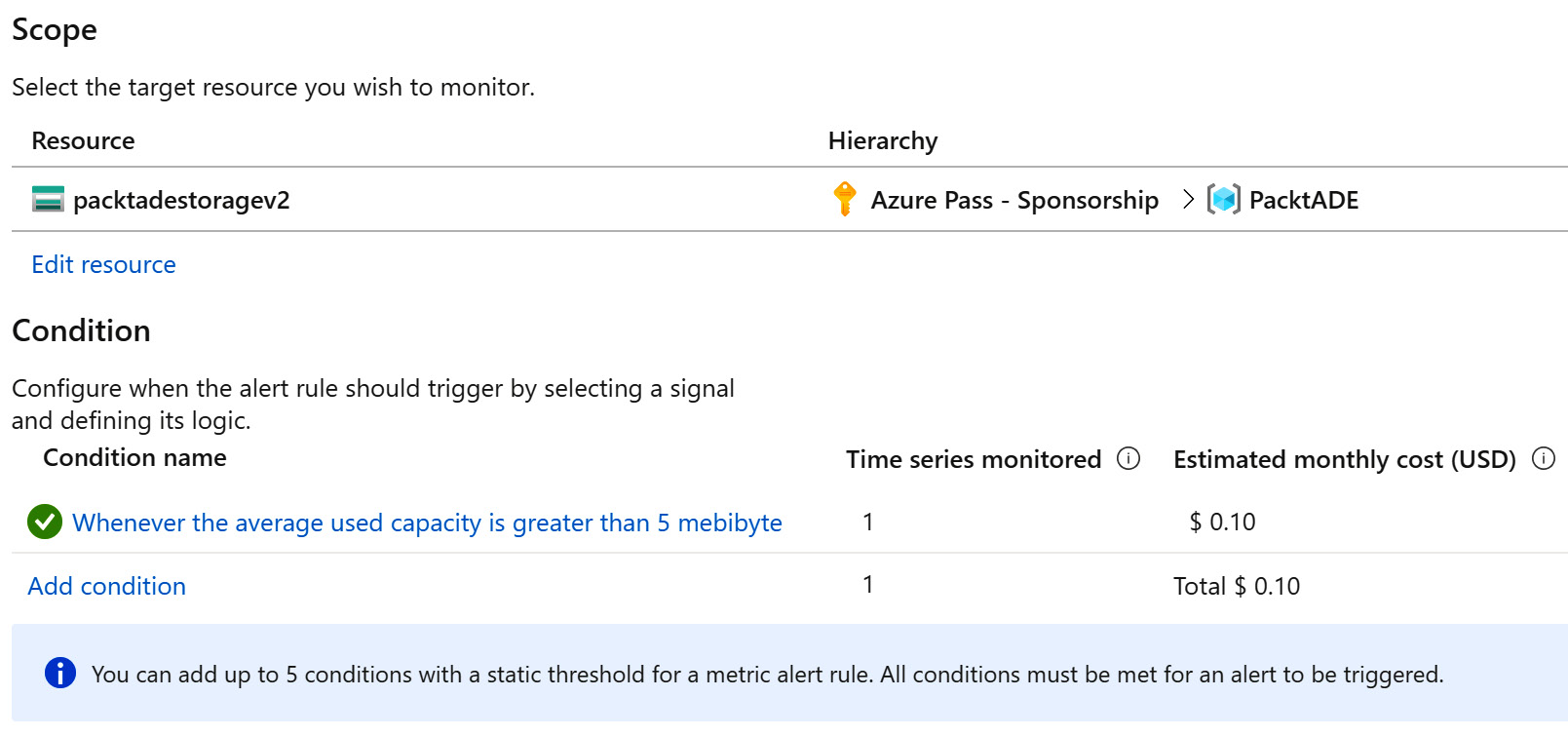

- On the Alerts | Create alert rule page, observe that the storage account is listed by default in the Resource section. You can add multiple storage accounts in the same alert. Under the Condition section, click Add condition:

Figure 2.30 – Adding a new alert condition

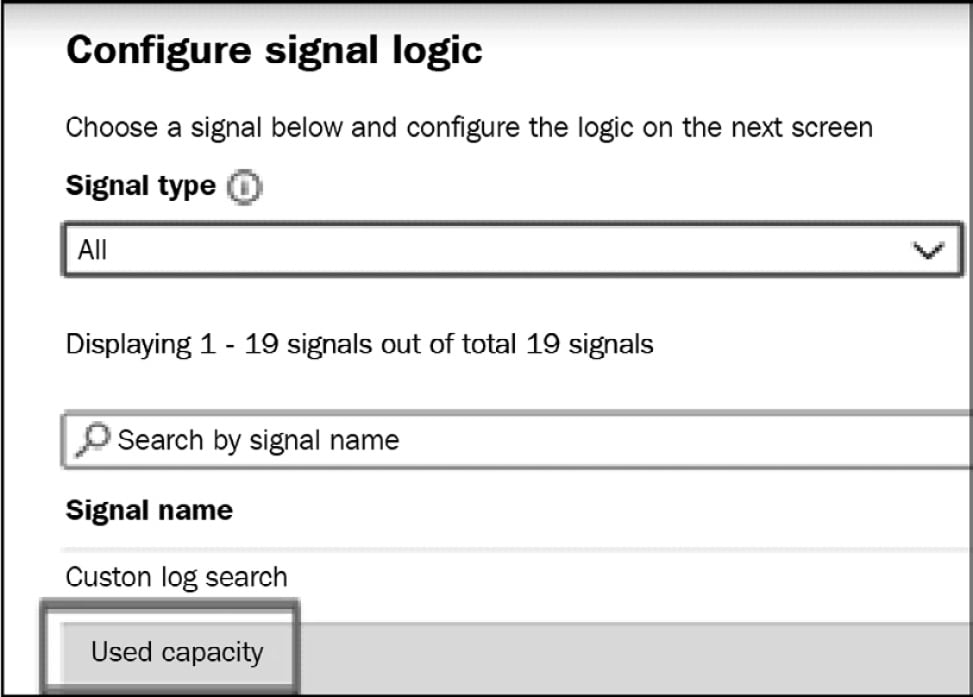

- On the Configure signal logic page, select Used capacity under Signal name:

Figure 2.31 – Configuring the signal logic

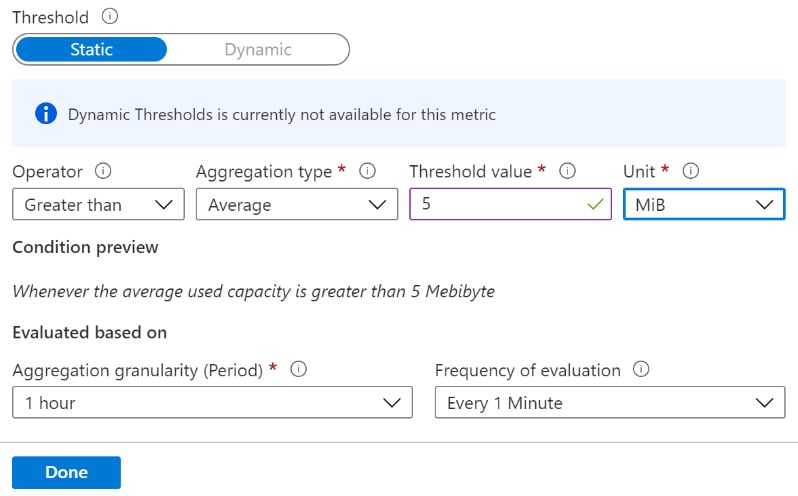

- On the Configure signal logic page, under Alert logic, set Operator to Greater than, Aggregation type to Average, and the threshold to 5 MiB. The threshold must be provided in bytes, so enter 5242880 (5 × 1,024 × 1,024):

Figure 2.32 – Configuring alert logic

- Click Done to save the condition. The condition is added, and we are taken back to the Create alert rule page:

Figure 2.33 – Viewing a new alert condition
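The alert logic configured above (Greater than, Average, and a 5 MiB threshold expressed in bytes) can be illustrated with a short Python sketch. It does not call Azure Monitor; it assumes a hypothetical list of Used capacity samples (in bytes) collected during one evaluation window, and the names `THRESHOLD_BYTES` and `condition_met` are our own.

```python
from statistics import mean

# 1 MiB = 1024 * 1024 bytes; the portal expects the threshold in bytes.
BYTES_PER_MIB = 1024 * 1024
THRESHOLD_BYTES = 5 * BYTES_PER_MIB  # 5,242,880 bytes


def condition_met(samples_in_bytes: list[float], threshold: int = THRESHOLD_BYTES) -> bool:
    """Return True when the Average of the samples is Greater than the threshold.

    `samples_in_bytes` is a hypothetical list of Used capacity values
    from one evaluation window.
    """
    if not samples_in_bytes:
        # Choice made by this sketch: an empty window does not fire.
        return False
    return mean(samples_in_bytes) > threshold


if __name__ == "__main__":
    print(THRESHOLD_BYTES)                        # 5242880
    print(condition_met([4_000_000, 4_500_000]))  # False (average 4,250,000)
    print(condition_met([5_000_000, 6_000_000]))  # True (average 5,500,000)
```

Note that the comparison is strictly greater than, so an average of exactly 5,242,880 bytes does not satisfy the condition.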

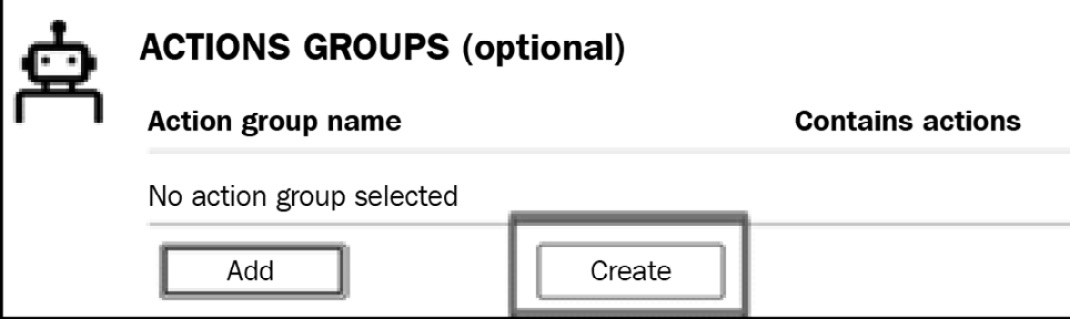

- The next step is to add an action to perform when the alert condition is met. On the Create alert rule page, in the ACTION GROUPS section, click Create:

Figure 2.34 – Creating a new alert action group

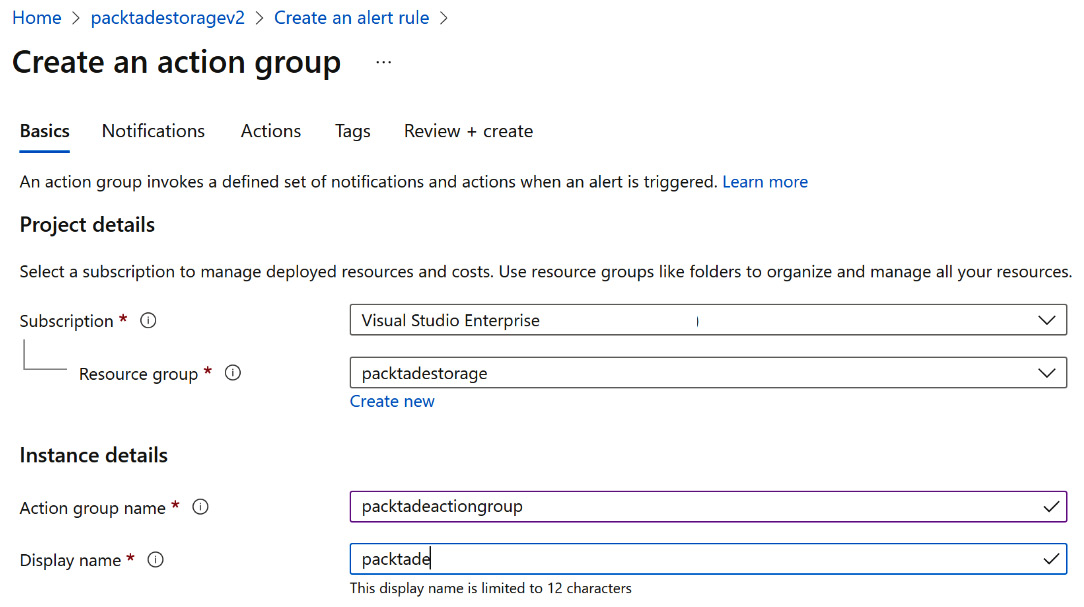

- On the Add action group page, provide the Action group name, Display name, and Resource group details:

Figure 2.35 – Adding a new alert action group

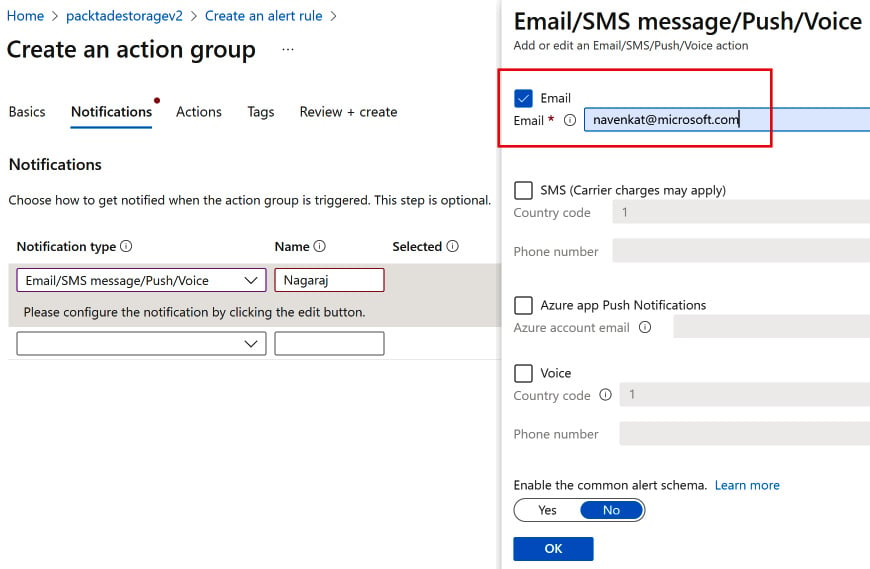

- Add an Email notification to the action group and provide the email address that should receive the alert:

Figure 2.36 – Selecting the alert action

- Click Create to create the action group. We are then taken back to the Create alert rule page, where the new action group, with its Email action, is listed in the Action Groups section.
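If you prefer to script this step instead of using the portal, the action group created above corresponds roughly to a resource body like the one built below. This is a sketch, assuming the property names of the `Microsoft.Insights/actionGroups` ARM resource (`groupShortName`, `enabled`, `emailReceivers`) and assuming that the portal's Display name maps to `groupShortName` with a 12-character limit; verify both against the current ARM reference. The short name, receiver name, and email address are hypothetical placeholders.

```python
import json

# Assumption: the portal's Display name maps to groupShortName,
# which the ARM schema limits to 12 characters.
MAX_SHORT_NAME = 12


def build_action_group(short_name: str, receiver_name: str, email: str) -> dict:
    """Return a sketch of an action group body with a single email receiver."""
    if not 1 <= len(short_name) <= MAX_SHORT_NAME:
        raise ValueError(f"short name must be 1-{MAX_SHORT_NAME} characters")
    if "@" not in email:
        raise ValueError("email address looks invalid")
    return {
        "location": "Global",
        "properties": {
            "groupShortName": short_name,
            "enabled": True,
            "emailReceivers": [
                {
                    "name": receiver_name,
                    "emailAddress": email,
                    "useCommonAlertSchema": True,
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    body = build_action_group("storagealrt", "EmailAdmin", "admin@example.com")
    print(json.dumps(body, indent=2))
```

The validation checks are deliberately minimal; they only catch the most obvious mistakes before the body is sent anywhere.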

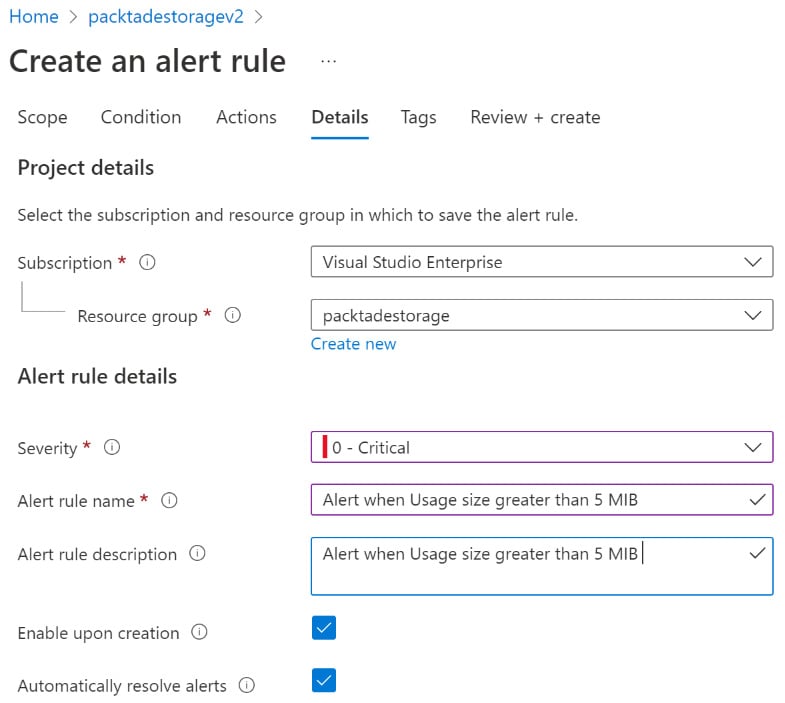

- The next step is to define the Severity, Alert rule name, and Alert rule description details:

Figure 2.37 – Creating an alert rule
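Taken together, the condition, the action group, the severity, the rule name, and the description make up one metric alert rule. The sketch below assembles them into an ARM-style resource fragment, assuming the property names of the `Microsoft.Insights/metricAlerts` resource and the storage metric name `UsedCapacity`. It omits `apiVersion`, and the subscription ID, resource group, action group name, evaluation frequency, and window size are hypothetical values, not the settings used in this recipe.

```python
import json

# Hypothetical resource IDs; replace the placeholders before use.
STORAGE_ID = (
    "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
    "/providers/Microsoft.Storage/storageAccounts/packtadestoragev2"
)
ACTION_GROUP_ID = (
    "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
    "/providers/Microsoft.Insights/actionGroups/<action-group-name>"
)


def build_metric_alert(rule_name: str, description: str, severity: int,
                       threshold_bytes: int) -> dict:
    """Return a sketch of a metric alert resource for the Used capacity signal."""
    if severity not in range(5):  # Sev0 (critical) to Sev4 (verbose)
        raise ValueError("severity must be between 0 and 4")
    return {
        "type": "Microsoft.Insights/metricAlerts",
        "name": rule_name,
        "location": "global",
        "properties": {
            "description": description,
            "severity": severity,
            "enabled": True,
            "scopes": [STORAGE_ID],
            "evaluationFrequency": "PT1H",  # hypothetical choice
            "windowSize": "PT1H",           # hypothetical choice
            "criteria": {
                "odata.type": "Microsoft.Azure.Monitor.SingleResourceMultipleMetricCriteria",
                "allOf": [
                    {
                        "criterionType": "StaticThresholdCriterion",
                        "name": "UsedCapacityOver5MiB",
                        "metricNamespace": "Microsoft.Storage/storageAccounts",
                        "metricName": "UsedCapacity",
                        "operator": "GreaterThan",
                        "timeAggregation": "Average",
                        "threshold": threshold_bytes,
                    }
                ],
            },
            "actions": [{"actionGroupId": ACTION_GROUP_ID}],
        },
    }


if __name__ == "__main__":
    rule = build_metric_alert("UsedCapacityAlert", "Used capacity above 5 MiB", 3, 5 * 1024 * 1024)
    print(json.dumps(rule, indent=2))
```

Each field maps to a portal setting: `scopes` is the Resource section, `criteria` is the condition, `actions` is the action group, and `severity`, `name`, and `description` are the rule details entered on this page.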

- Click the Create alert rule button to create the alert.

- The next step is to trigger the alert. To do that, download

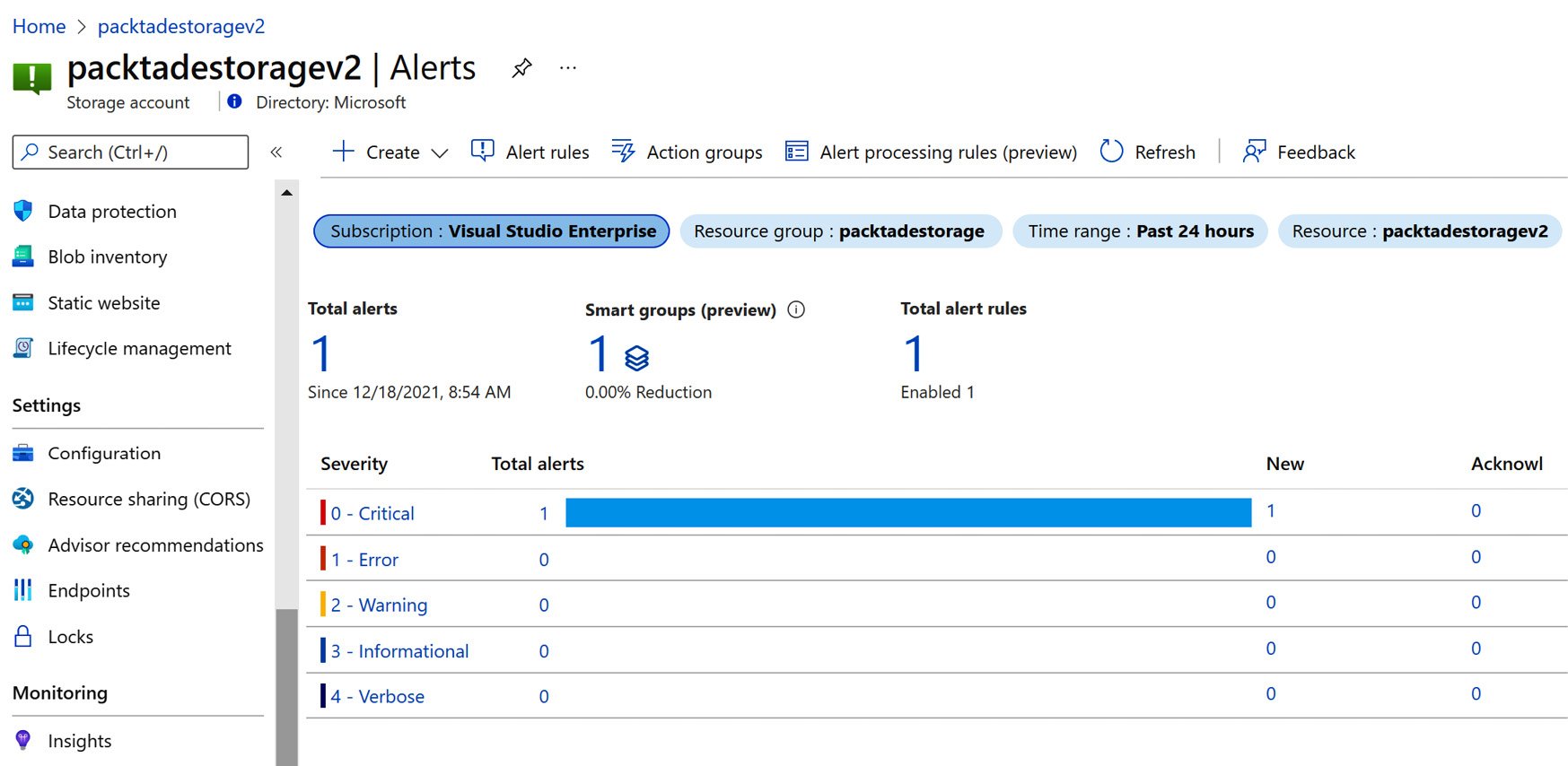

BigFile.csvfrom the https://github.com/PacktPublishing/Azure-Data-Engineering-Cookbook-2nd-edition/blob/main/Chapter2/BigFile.csv file to the Azure storage account by following the steps mentioned in the Creating containers and uploading files to Azure Blob storage using PowerShell recipe of Chapter 1, Creating and Managing Data in Azure Data Lake. The triggered alerts are listed on the Alerts page, as shown in the following screenshot:

Figure 2.38 – Viewing alerts

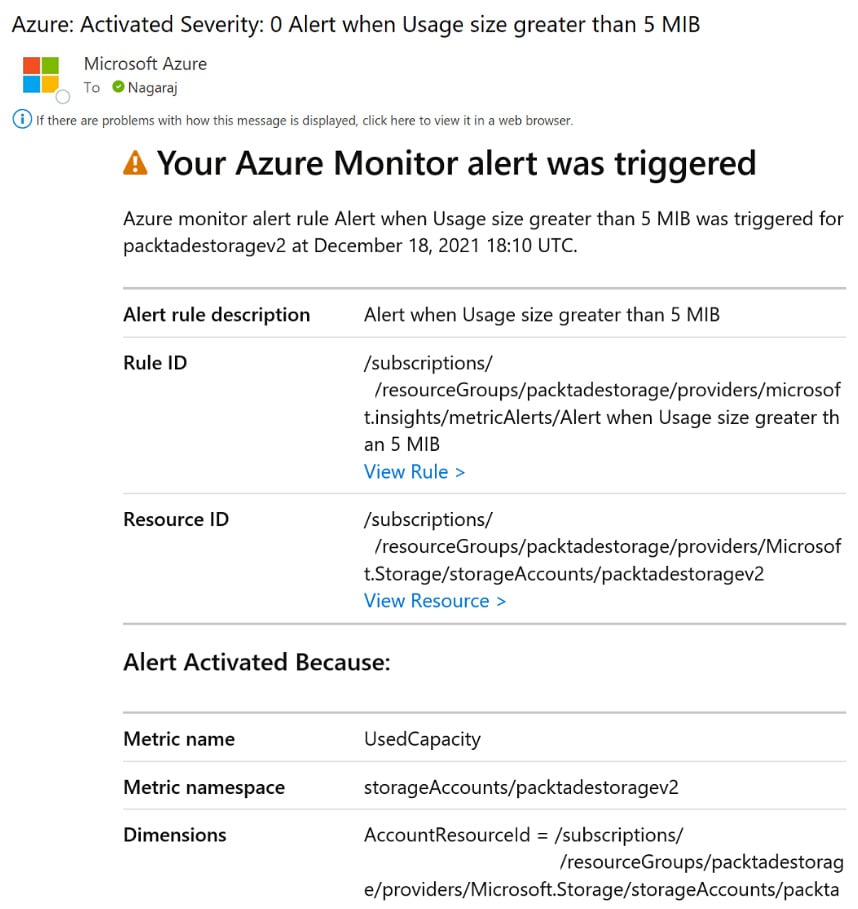

- An email is sent to the email address specified in the action group. The email appears as shown in the following screenshot:

Figure 2.39 – The alert email

How it works…

Setting up an alert is straightforward. First, we define the alert condition (a trigger or signal). An alert condition defines the metric and the threshold that, when breached, triggers the alert. We can define more than one condition, on multiple metrics, for a single alert.
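To make the idea of several conditions in one alert concrete, the sketch below evaluates a list of conditions against hypothetical aggregated metric values. It assumes the alert fires only when every condition holds, which matches the `allOf` list of criteria in a multi-condition metric alert; the operator names follow the ARM style, and the second condition (on Transactions) and all metric values are invented for illustration.

```python
import operator
from dataclasses import dataclass

# ARM-style operator names mapped to Python comparison functions.
OPERATORS = {
    "GreaterThan": operator.gt,
    "GreaterThanOrEqual": operator.ge,
    "LessThan": operator.lt,
    "LessThanOrEqual": operator.le,
}


@dataclass
class Condition:
    metric: str
    op: str
    threshold: float

    def is_met(self, aggregated: dict[str, float]) -> bool:
        """Compare the aggregated value of this metric with the threshold."""
        value = aggregated.get(self.metric)
        if value is None:
            return False  # no data for this metric in the window
        return OPERATORS[self.op](value, self.threshold)


def alert_fires(conditions: list[Condition], aggregated: dict[str, float]) -> bool:
    """Fire only when there is at least one condition and all of them are met."""
    return bool(conditions) and all(c.is_met(aggregated) for c in conditions)


if __name__ == "__main__":
    conds = [
        Condition("UsedCapacity", "GreaterThan", 5 * 1024 * 1024),
        Condition("Transactions", "GreaterThan", 100),  # hypothetical second condition
    ]
    print(alert_fires(conds, {"UsedCapacity": 6_000_000, "Transactions": 150}))  # True
    print(alert_fires(conds, {"UsedCapacity": 6_000_000, "Transactions": 50}))   # False
```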

Next, we define the action to perform when the alert condition is met. An alert can have more than one action. In our example, in addition to sending an email when the used capacity exceeds 5 MB, we could configure an Azure Automation runbook to delete old blobs/files so that the storage account's used capacity stays within 5 MB.
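The cleanup idea above needs a rule for choosing which blobs to remove. A simple policy is to delete the oldest blobs first until the remaining used capacity is within the limit; the hypothetical sketch below implements only that selection step. The `Blob` type and `select_blobs_to_delete` function are our own, and an actual Azure Automation runbook would still have to list and delete the blobs through the Azure Storage APIs.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Blob:
    name: str
    size_bytes: int
    last_modified: datetime


def select_blobs_to_delete(blobs: list[Blob], limit_bytes: int) -> list[str]:
    """Return names of the oldest blobs to delete so the total is <= limit_bytes."""
    total = sum(b.size_bytes for b in blobs)
    to_delete = []
    # Walk from oldest to newest, removing blobs until the total fits the limit.
    for blob in sorted(blobs, key=lambda b: b.last_modified):
        if total <= limit_bytes:
            break
        to_delete.append(blob.name)
        total -= blob.size_bytes
    return to_delete


if __name__ == "__main__":
    blobs = [
        Blob("c.csv", 1_000_000, datetime(2024, 3, 1)),
        Blob("a.csv", 3_000_000, datetime(2024, 1, 1)),
        Blob("b.csv", 2_000_000, datetime(2024, 2, 1)),
    ]
    # 6,000,000 bytes in total; deleting a.csv brings it under 5 MiB.
    print(select_blobs_to_delete(blobs, 5 * 1024 * 1024))  # ['a.csv']
```

Deleting by age is only one possible policy; deleting the largest blobs first would free space with fewer deletions but might remove recent data.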

Alerts can also be defined on other signals, such as Transactions, Ingress, Egress, Availability, Success Server Latency, and Success E2E Latency. Detailed information on monitoring Azure storage is available at https://docs.microsoft.com/en-us/azure/storage/common/storage-monitoring-diagnosing-troubleshooting.
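When scripting alerts on these other signals, the portal's signal names correspond to account-level metric names in the `Microsoft.Storage/storageAccounts` namespace. The lookup below is a small sketch covering only the signals mentioned in this recipe, not an exhaustive list; the units shown are how these metrics are typically reported and are worth confirming in the Azure Storage metrics reference.

```python
# Portal display name -> (metric name, unit). Sketch only; not exhaustive.
STORAGE_SIGNALS = {
    "Used capacity": ("UsedCapacity", "Bytes"),
    "Transactions": ("Transactions", "Count"),
    "Ingress": ("Ingress", "Bytes"),
    "Egress": ("Egress", "Bytes"),
    "Availability": ("Availability", "Percent"),
    "Success Server Latency": ("SuccessServerLatency", "Milliseconds"),
    "Success E2E Latency": ("SuccessE2ELatency", "Milliseconds"),
}


def metric_for_signal(display_name: str) -> str:
    """Return the metric name to use in an alert criterion for a portal signal."""
    try:
        return STORAGE_SIGNALS[display_name][0]
    except KeyError:
        raise ValueError(f"unknown signal: {display_name!r}") from None


if __name__ == "__main__":
    for signal, (metric, unit) in STORAGE_SIGNALS.items():
        print(f"{signal:<24} -> {metric} ({unit})")
```

Knowing the unit matters when choosing a threshold: as with Used capacity, byte-based signals such as Ingress and Egress expect thresholds in bytes.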