Operating in real time

To satisfy an embedded system's real-time requirements, the system must sense the state of its environment, compute a response, and output that response within a prescribed time interval. These timing constraints generally take two forms: periodic operation and event-driven operation.

Periodic operation

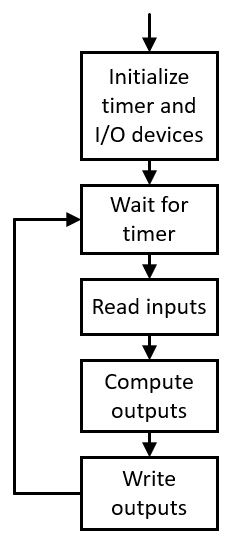

Embedded systems that perform periodic updates are designed to remain synchronized with real-world time over long periods. These systems maintain an internal clock and use the passage of time, as measured by that clock, to trigger the execution of each processing cycle. Most commonly, processing cycles repeat at fixed time intervals. Embedded systems typically perform processing at rates ranging from 10 to 1,000 updates per second, though particular applications may update at rates outside this range. Figure 1.1 shows the processing cycle of a simple periodically updated embedded system:

Figure 1.1 – Periodically updated embedded system

In the system of Figure 1.1, processing starts at the upper box, where initialization is performed for the processor itself and for the input/output (I/O) devices used by the system. The initialization process includes configuring a timer that triggers an event, typically an interrupt, at regularly spaced points in time. In the second box from the top, processing pauses while waiting for the timer to generate the next event. Depending on the capabilities of the processor, waiting may take the form of an idle loop that polls a timer output signal, or the system may enter a low power state waiting for the timer interrupt to wake the processor.

After the timer event occurs, the next step, in the third box from the top, consists of reading the current state of the inputs to the device. In the following box, the processor performs the computational algorithm and produces the values the device will write to the output peripherals. Output to the peripherals takes place in the final box at the bottom of the diagram. After the outputs have been written, processing returns to wait for the next timer event, forming an infinite loop.
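The loop of Figure 1.1 can be sketched in C as a host-runnable simulation. This is a sketch under stated assumptions, not code for any particular device. The timer is modeled by a counter rather than a hardware peripheral, and the 16 MHz timer clock, the 100 Hz update rate, and the function names `timer_reload_value`, `wait_for_timer_event`, `read_inputs`, `compute_outputs`, and `write_outputs` are all hypothetical. On real hardware, the wait step would poll a timer flag or sleep until the timer interrupt fires, and the loop would never exit. Here it runs a fixed number of cycles so that it can be tested.

```c
#include <stdint.h>

/* Hypothetical timer input clock; real values depend on the device. */
#define TIMER_CLOCK_HZ 16000000u

/* Timer counts between events for a desired update rate.
 * Example: 16 MHz / 100 Hz = 160000 counts, a 10 ms period. */
static uint32_t timer_reload_value(uint32_t clock_hz, uint32_t rate_hz)
{
    return clock_hz / rate_hz;
}

/* Simulated timer. A real system would poll a status flag here or
 * enter a low-power state until the timer interrupt wakes it. */
static volatile uint32_t timer_events;

static void wait_for_timer_event(void)
{
    timer_events++; /* Pretend one timer period has elapsed. */
}

/* Hypothetical input, algorithm, and output stubs. */
static int read_inputs(uint32_t cycle) { return (int)(cycle % 4u); }
static int compute_outputs(int input) { return 2 * input + 1; }

static int last_output;
static void write_outputs(int output) { last_output = output; }

/* Runs n_cycles passes of the Figure 1.1 loop; on hardware this is for (;;). */
static uint32_t run_periodic_loop(uint32_t n_cycles)
{
    /* Initialization: processor, I/O devices, and the periodic timer. */
    uint32_t reload = timer_reload_value(TIMER_CLOCK_HZ, 100u);
    (void)reload; /* On hardware, this value would be written to the timer. */
    timer_events = 0;

    for (uint32_t cycle = 0; cycle < n_cycles; cycle++) {
        wait_for_timer_event();              /* Wait for the next tick. */
        int input = read_inputs(cycle);      /* Read the current inputs. */
        int output = compute_outputs(input); /* Run the algorithm. */
        write_outputs(output);               /* Update the peripherals. */
    }
    return timer_events;
}
```

Keeping the reload computation separate from the loop makes the update rate a single configuration value. Changing from 100 Hz to 1 kHz, for example, changes only the argument passed to `timer_reload_value`.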

Event-driven operation

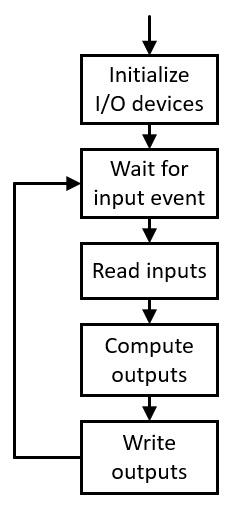

Embedded systems that respond to discrete events may spend the vast majority of their time idle. When an input arrives, the system executes an algorithm to process the input data, generates output, writes that output to a peripheral device, and then returns to the idle state. A pushbutton-operated television remote control is a good example of an event-driven embedded device. Figure 1.2 shows the processing steps for an event-driven embedded device:

Figure 1.2 – Event-driven embedded system

Most of the processing steps in an event-driven embedded system are similar to those of the periodic system, except the initiation of each pass through the computational algorithm is triggered by an input to the device. Each time an input event occurs, the system reads the input device that triggered the event, along with any other inputs that are needed. The processor computes the outputs, writes outputs to the appropriate devices, and returns to wait for the next event, again forming an infinite loop. The system may have inputs for many different events, such as presses and releases of each of the keys on a keypad.
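A minimal C sketch of the Figure 1.2 loop follows, assuming a keypad that reports key presses and releases. On real hardware, an interrupt handler would call `post_event`. Here the events are posted directly so that the code runs on a host. The event types, the eight-entry queue, and all function names are hypothetical. The output is a bit mask of the keys currently held down, standing in for whatever the device actually writes to its peripherals.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical keypad events: a press or release of one key. */
typedef enum { EV_KEY_PRESS, EV_KEY_RELEASE } event_type_t;

typedef struct {
    event_type_t type;
    uint8_t key; /* Which key triggered the event (0..15 assumed). */
} event_t;

/* Event queue holding up to QUEUE_LEN - 1 pending events. On hardware, the
 * indices shared with the ISR would need volatile, atomic access. */
#define QUEUE_LEN 8u
static event_t queue[QUEUE_LEN];
static unsigned q_head, q_tail;

static bool post_event(event_t ev) /* Called from the (simulated) ISR. */
{
    unsigned next = (q_tail + 1u) % QUEUE_LEN;
    if (next == q_head) {
        return false; /* Queue full: event dropped. */
    }
    queue[q_tail] = ev;
    q_tail = next;
    return true;
}

static bool get_event(event_t *ev)
{
    if (q_head == q_tail) {
        return false; /* Nothing pending. */
    }
    *ev = queue[q_head];
    q_head = (q_head + 1u) % QUEUE_LEN;
    return true;
}

/* Hypothetical output: bit mask of keys currently held down. */
static uint16_t keys_down;

/* One pass of the Figure 1.2 loop body; returns false when idle. */
static bool handle_next_event(void)
{
    event_t ev;
    if (!get_event(&ev)) {
        return false; /* On hardware: sleep until the next interrupt. */
    }
    if (ev.type == EV_KEY_PRESS) {
        keys_down |= (uint16_t)(1u << ev.key); /* Compute the new output. */
    } else {
        keys_down &= (uint16_t)~(1u << ev.key);
    }
    /* Write keys_down to the output peripheral here. */
    return true;
}
```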

Many embedded systems must support both periodic and event-driven behaviors. An automobile is one example. While the vehicle is being driven, the drivetrain processors sense inputs, perform computations, and update outputs to manage the vehicle speed, steering, and braking at regular time intervals. In addition to these periodic operations, the system receives input signals from sensors that indicate the occurrence of events, such as shifting into gear or a collision.
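One common way for a small system to combine both behaviors without an operating system is a "super loop" that checks flags set by interrupt handlers. The sketch below assumes two hypothetical interrupts, a periodic timer tick and a collision sensor. The counters `periodic_updates` and `collision_responses` stand in for the real control actions. In production code, testing and clearing each flag would have to be made safe against the interrupt setting it again in between. That detail is omitted here.

```c
#include <stdbool.h>
#include <stdint.h>

/* Flags that interrupt handlers would set on real hardware (simulated here). */
static volatile bool timer_tick_flag;
static volatile bool collision_flag;

/* Hypothetical counters standing in for real control actions. */
static uint32_t periodic_updates;
static uint32_t collision_responses;

static void timer_isr(void) { timer_tick_flag = true; }
static void collision_isr(void) { collision_flag = true; }

/* One pass of a super loop serving both kinds of trigger. The event is
 * checked first because, in this sketch, it is the most urgent. */
static void super_loop_pass(void)
{
    if (collision_flag) {
        collision_flag = false;
        collision_responses++;  /* Event-driven response. */
    }
    if (timer_tick_flag) {
        timer_tick_flag = false;
        periodic_updates++;     /* Periodic read-compute-write cycle. */
    }
    /* With nothing pending, a real system would sleep until an interrupt. */
}
```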

For a small, microcontroller-based embedded system, the developer might write the entirety of the code, including all timing-related functions, input and output via peripheral interfaces, and the algorithms needed to compute outputs given the inputs. Implementing the blocks of Figure 1.1 or Figure 1.2 for a small system might consist of a few hundred lines of C code or assembly language.

At the higher end of system complexity, where the processor might need to update various outputs at different rates and respond to a variety of event-type input signals, it becomes necessary to separate the time-related activities, such as scheduling cyclic updates, from the code that performs the computational algorithms of the system. This separation becomes particularly critical in highly complex systems that contain hundreds of thousands or even millions of lines of code. Real-time operating systems provide this capability.
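A table-driven scheduler is one simple way to keep timing decisions apart from the algorithms. Each algorithm is a plain function with no timing code, and a single tick handler decides when each one runs. The sketch assumes a 1 ms base tick. The two functions, `fast_control` at 100 Hz and `slow_monitor` at 10 Hz, are hypothetical and only count their runs.

```c
#include <stddef.h>
#include <stdint.h>

/* Each entry pairs an algorithm with the timing the scheduler owns. */
typedef struct {
    void (*run)(void);     /* Application algorithm, free of timing code. */
    uint32_t period_ticks; /* Run every period_ticks base ticks. */
    uint32_t countdown;    /* Ticks remaining until the next run. */
} sched_entry_t;

/* Hypothetical application functions; each just counts its runs here. */
static uint32_t fast_runs, slow_runs;
static void fast_control(void) { fast_runs++; } /* 100 Hz at a 1 ms tick */
static void slow_monitor(void) { slow_runs++; } /* 10 Hz at a 1 ms tick */

static sched_entry_t schedule[] = {
    { fast_control, 10u, 10u },
    { slow_monitor, 100u, 100u },
};

/* Called once per base tick; all timing decisions live here. */
static void scheduler_tick(void)
{
    for (size_t i = 0; i < sizeof schedule / sizeof schedule[0]; i++) {
        if (--schedule[i].countdown == 0u) {
            schedule[i].countdown = schedule[i].period_ticks;
            schedule[i].run();
        }
    }
}
```

Over 1,000 ticks (one simulated second), `fast_control` runs 100 times and `slow_monitor` runs 10 times. Adding a new rate means adding a table entry, not editing the algorithms.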

Real-time operating systems

When a system architecture is complex enough that separating time-related functionality from computational algorithms becomes beneficial, it is common to use an operating system to manage lower-level functionality, such as scheduling time-based updates and managing responses to interrupt-driven events. This allows application developers to focus on the algorithms required by the system design, including how those algorithms integrate with the services the operating system provides.

An operating system is a multilayer suite of software providing an environment in which applications perform useful functions, such as managing the operation of a car engine. These applications execute algorithms consisting of processor instruction sequences and perform I/O interactions with the peripheral devices needed to complete their tasks.

Operating systems can be broadly categorized into real-time and general-purpose operating systems. A real-time operating system (RTOS) provides features to ensure that responses to inputs occur within a specified time limit, provided the application code satisfies certain assumptions about its behavior. Real-time applications that perform tasks such as managing the operation of a car engine or a kitchen appliance typically run under an RTOS to ensure that the electrical and mechanical components they control receive responses to any change in inputs within a specified time.

Embedded systems often perform multiple functions simultaneously. The automobile is a good example, where one or more processors continuously monitor and control the operation of the powertrain, receive input from the driver, manage the climate control, and operate the sound system. One method of handling this diversity of tasks is to assign a separate processor to perform each function. This makes the development and testing of the software associated with each function straightforward, though a possible downside is that the design ends up with a plethora of processors, many of which don't have very much work to do.

Alternatively, a system architect may assign more than one of these functions to a single processor. If the functions assigned to the processor perform updates at the same rate, integration in this manner may be straightforward, particularly if the functions do not need to interact with each other.

In the case where multiple functions that execute at different rates are combined in the same processor, the complexity of the integration will increase, particularly if the functions must transfer data among themselves.
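When functions running at different rates exchange data, the faster one typically produces several values for each run of the slower one, so the hand-off needs a defined policy. One simple option is to accumulate the fast function's samples and let the slow function consume their average. The sketch below assumes a single-threaded loop in which the two functions never interrupt each other. If they could preempt each other, every access to the shared accumulator would need a critical section. The names `fast_producer`, `slow_consumer`, and `sample_accumulator_t` are hypothetical.

```c
#include <stdint.h>

/* Hand-off area between a fast producer and a slow consumer.
 * Safe only because the two functions never interrupt each other here. */
typedef struct {
    int32_t sum;    /* Sum of samples since the last hand-off. */
    uint32_t count; /* Number of samples since the last hand-off. */
} sample_accumulator_t;

static sample_accumulator_t acc;

/* Hypothetical 100 Hz function: publishes one sample per call. */
static void fast_producer(int32_t sample)
{
    acc.sum += sample;
    acc.count++;
}

/* Hypothetical 10 Hz function: takes the integer average of all new
 * samples, then resets the accumulator for the next batch. */
static int32_t slow_consumer(void)
{
    int32_t avg = (acc.count > 0u) ? acc.sum / (int32_t)acc.count : 0;
    acc.sum = 0;
    acc.count = 0u;
    return avg;
}
```

Averaging is only one policy. Other designs pass just the latest value or queue every sample. Which one is right depends on what the slower function needs.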

In the context of an RTOS, separately scheduled functions that execute in a logically simultaneous manner are called tasks. A task is a block of code with an independent flow of execution that is scheduled in a periodic or event-driven manner by the operating system. Some operating systems use the term thread to represent a concept similar to a task. A thread is a flow of code execution, while the term task generally describes a thread of execution combined with other system resources required by the task.
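The distinction between a thread and a task can be made concrete with a task control block. This is the record an RTOS keeps for each task. The layout below is hypothetical and does not match any particular RTOS. The entry function and saved stack pointer describe the thread of execution, and the remaining fields are resources and scheduling attributes attached to it. The priority convention (larger number means higher priority) and the downward-growing stack are assumptions.

```c
#include <stddef.h>
#include <stdint.h>

typedef enum { TASK_READY, TASK_RUNNING, TASK_BLOCKED } task_state_t;

/* Hypothetical task control block: thread of execution plus resources. */
typedef struct {
    /* The thread: where execution starts and where it was paused. */
    void (*entry)(void *arg); /* Code the thread executes. */
    void *arg;                /* Argument passed to the entry function. */
    uint32_t *saved_sp;       /* Saved stack pointer while not running. */
    /* Resources and scheduling attributes that make it a task. */
    uint32_t *stack_base;     /* Private stack owned by this task. */
    size_t stack_words;
    uint8_t priority;         /* Larger number = higher priority (assumed). */
    uint32_t period_ticks;    /* 0 for an event-driven task (assumed). */
    task_state_t state;
} task_t;

static void task_init(task_t *t, void (*entry)(void *), void *arg,
                      uint32_t *stack, size_t words,
                      uint8_t priority, uint32_t period_ticks)
{
    t->entry = entry;
    t->arg = arg;
    t->stack_base = stack;
    t->stack_words = words;
    t->saved_sp = stack + words; /* Stack assumed to grow downward. */
    t->priority = priority;
    t->period_ticks = period_ticks;
    t->state = TASK_READY;       /* New tasks start out ready to run. */
}
```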

Modern RTOS implementations support an arbitrary number of tasks, each of which may execute at a different update rate and priority. The priority of an RTOS task determines when it is allowed to execute relative to other tasks that may be simultaneously ready to execute. Higher-priority tasks get the first chance to execute when the operating system is making scheduling decisions.
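At its core, the scheduling decision described above reduces to finding the highest-priority task that is ready to run. The sketch uses a linear scan over a hypothetical `task_info_t` array, with larger numbers meaning higher priority (an assumed convention). It breaks ties by position in the array. Many RTOSes instead rotate among equal-priority tasks, and they usually use faster data structures than a linear scan.

```c
#include <stddef.h>
#include <stdint.h>

typedef struct {
    const char *name;
    uint8_t priority; /* Larger number = higher priority (assumed). */
    int ready;        /* Nonzero if the task is ready to execute. */
} task_info_t;

/* Returns the index of the highest-priority ready task, or -1 if no task
 * is ready (an RTOS would then run its idle task). */
static int pick_next_task(const task_info_t *tasks, size_t n)
{
    int best = -1;
    for (size_t i = 0; i < n; i++) {
        if (tasks[i].ready &&
            (best < 0 || tasks[i].priority > tasks[best].priority)) {
            best = (int)i;
        }
    }
    return best;
}
```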

An RTOS may be preemptive, meaning it has the authority to pause the execution of a lower-priority task when a higher-priority task becomes ready to run. This typically happens when it is time for the higher-priority task to perform its next update, or when a blocked I/O operation initiated by the higher-priority task completes. The system then saves the state of the lower-priority task and transfers control to the higher-priority task. After the higher-priority task finishes and returns to the waiting state, the system restores the saved state of the lower-priority task and resumes its execution.
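Preemption can be illustrated with a small tick-level simulation of fixed-priority scheduling. This is a teaching model, not how an RTOS kernel is written. The names `sim_task_t` and `simulate` are hypothetical. Scheduling decisions happen only at whole-tick boundaries, and a paused task's saved state is reduced to its count of remaining work ticks. A real kernel saves and restores processor registers and the stack pointer.

```c
#include <stddef.h>

/* Hypothetical task description for a tick-level simulation. */
typedef struct {
    char id;       /* Label used in the timeline. */
    int priority;  /* Larger number = higher priority. */
    int period;    /* Released every `period` ticks (must be > 0) ... */
    int offset;    /* ... starting at tick `offset`. */
    int work;      /* Ticks of execution needed per release. */
    int remaining; /* Work left in the current release (0 = waiting). */
} sim_task_t;

/* Simulates n_ticks of preemptive fixed-priority scheduling and records the
 * task that ran in each tick ('.' for idle). timeline must hold n_ticks + 1
 * characters. */
static void simulate(sim_task_t *tasks, size_t n_tasks, int n_ticks,
                     char *timeline)
{
    for (int t = 0; t < n_ticks; t++) {
        /* Release tasks whose next period starts at this tick. */
        for (size_t i = 0; i < n_tasks; i++) {
            if (t >= tasks[i].offset &&
                (t - tasks[i].offset) % tasks[i].period == 0) {
                tasks[i].remaining += tasks[i].work;
            }
        }
        /* Preemption point: the highest-priority ready task gets this tick.
         * A preempted task keeps its progress in `remaining` and resumes
         * once no higher-priority task is ready. */
        sim_task_t *run = NULL;
        for (size_t i = 0; i < n_tasks; i++) {
            if (tasks[i].remaining > 0 &&
                (run == NULL || tasks[i].priority > run->priority)) {
                run = &tasks[i];
            }
        }
        if (run != NULL) {
            run->remaining--;
            timeline[t] = run->id;
        } else {
            timeline[t] = '.';
        }
    }
    timeline[n_ticks] = '\0';
}
```

Consider a high-priority task `H` released at ticks 2 and 7 with one tick of work, and a low-priority task `L` needing six ticks of work from tick 0. The 10-tick timeline comes out as `LLHLLLLH..`. `L` is preempted at tick 2, resumes at tick 3, and finishes before `H` runs again.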

As we'll see in later chapters, popular RTOS implementations such as FreeRTOS offer several additional features. There are also some significant design constraints that developers of applications running in an RTOS environment must be aware of. Ignoring them can lead to problems such as higher-priority tasks entirely blocking the execution of lower-priority tasks, or deadlock between communicating tasks.

In the next section, we will introduce the basics of digital logic and examine the capabilities of modern FPGA devices.