It is worth spelling out the advantages of the Docker-inspired containerization movement over the widely used and fully matured virtualization paradigm. As explained earlier, virtualization is the breakthrough idea behind the broad adoption of cloud computing, which in turn enables the industrialization of IT. However, through numerous real-world case studies, cloud service providers have concluded that virtualization has its own drawbacks, and this is why the containerization movement took off so strongly.

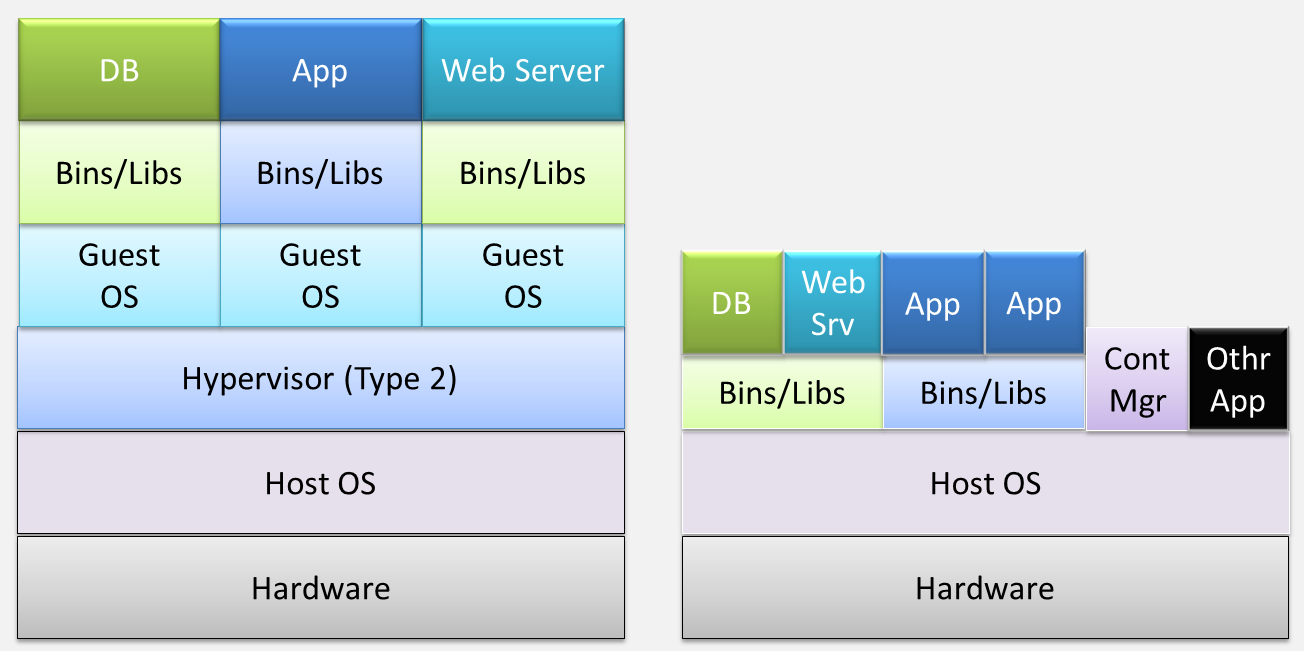

Containerization brings sound optimizations through a few well-defined rationalizations and the careful sharing of compute resources. Some innate and hitherto underutilized capabilities of the Linux kernel have been rediscovered, and a few additional capabilities are being added to strengthen containerization and widen its applicability. These capabilities bring much-needed automation and acceleration, which should help the still-young containerization idea mature further. The noteworthy business and technical advantages of containerization include near bare-metal performance, rapid scalability, higher availability, support for DevOps practices, software portability, and so on. Unneeded overhead is being eliminated so that hundreds of application containers can be rolled out in seconds. In the preceding figure, the left-hand side depicts virtualization, whereas the right-hand side illustrates the simplifications achieved with containers.

There are two main types of virtualization. In Type 1 virtualization, the hypervisor runs directly on the hardware and provides the OS functionality along with VM provisioning, monitoring, and management capabilities, so no host OS is needed. VMware ESXi is a leading Type 1 hypervisor. Production environments and mission-critical applications typically run on Type 1 virtualization.

The second is Type 2 virtualization, wherein the hypervisor runs on top of a host OS, as shown in the preceding figure. This additional layer involves more modules during execution and degrades system performance, so Type 2 virtualization is generally used for development, testing, and staging environments. Docker-enabled containerization avoids this overhead and so brings a substantial boost to system performance.

In summary, VMs are a time-tested and battle-hardened software stack, and there are many tools for managing the OS and the applications running on them. The virtualization tool ecosystem keeps expanding. Applications in a VM are hidden from the host OS by the hypervisor. Docker containers, however, do not use a hypervisor to provide isolation. Instead, the Docker host relies on the process and filesystem isolation capabilities of the Linux kernel to provide the required isolation.
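The kernel capabilities referred to here are exposed chiefly as namespaces, with control groups handling resource limits. As a minimal sketch, assuming a Linux host with `/proc` mounted, the following Python snippet lists the namespaces the current process belongs to. The function name `list_namespaces` is illustrative and not part of any Docker API; on other platforms it simply returns an empty dictionary.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace_name: identifier} for a process.

    Reads the symbolic links under /proc/<pid>/ns (Linux only).
    Returns an empty dict when that directory is not available.
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    if not os.path.isdir(ns_dir):
        return namespaces  # not Linux, or /proc is not mounted
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces
    for name in entries:
        try:
            # Each link target looks like "pid:[<inode number>]"
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # skip entries we are not permitted to read
    return namespaces


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:20s} {ident}")
```

Running this once on the host and once inside a container would typically show different identifiers for entries such as `pid`, `mnt`, and `net`, which is the isolation described above: the processes share one kernel but see separate process trees, mount tables, and network stacks.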

Docker containers have a smaller disk footprint because they do not include an entire OS. Setup and startup times are therefore significantly shorter than for a typical VM. The principal advantage of containers is the speed with which application code can be developed, composed, packaged, and shared widely. Containers are emerging as a prominent platform for the faster creation, deployment, and delivery of microservices-based distributed applications. Containers also save IT resources because they consume less memory than VMs.

A widely used analogy compares a VM to a house and containers to an apartment complex. The house is self-contained: it has its own plumbing, electrical wiring, heating, and protection from unwanted visitors. An apartment complex offers the same resources as a house, such as electricity, plumbing, and heating, but they are shared among all the units. The individual apartments come in various sizes, and you rent only what you need, not the entire complex. The apartments are the containers, and the shared resources are the container host.

Developers can use simple, incremental commands to create a fixed image that is easy to deploy, and they can automate building such images using a Dockerfile. They can share those images easily using simple, Git-style push and pull commands against public or private Docker registries. Since the inception of Docker, there has been remarkable growth in third-party tools that simplify and streamline Docker-enabled containerization.
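To make the build-and-share workflow concrete, here is a small Python sketch that holds a minimal Dockerfile as text and assembles the matching `docker build`, `docker push`, and `docker pull` command lines. The image name, the registry host `registry.example.com`, the file `app.py`, and the helper function names are all hypothetical and chosen only for illustration. The script prints the commands rather than running them, so it works without Docker installed.

```python
import shlex

# Hypothetical image reference: <registry>/<repository>:<tag>
IMAGE = "registry.example.com/team/hello-app:1.0"

# A minimal Dockerfile; instructions are applied in order on top of the
# base image. app.py is an assumed application file, not a real one.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY app.py .
CMD ["python", "app.py"]
"""


def build_cmd(image, context="."):
    """Command to build an image from the Dockerfile in `context`."""
    return ["docker", "build", "-t", image, context]


def push_cmd(image):
    """Command to upload the image to the registry named in its reference."""
    return ["docker", "push", image]


def pull_cmd(image):
    """Command to download the image on another host."""
    return ["docker", "pull", image]


if __name__ == "__main__":
    print(DOCKERFILE)
    for cmd in (build_cmd(IMAGE), push_cmd(IMAGE), pull_cmd(IMAGE)):
        print(shlex.join(cmd))
```

On a machine with Docker installed and access to a real registry, each list could be passed to `subprocess.run(cmd, check=True)` to execute the step. Keeping the commands as argument lists rather than shell strings avoids quoting problems with image names and paths.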