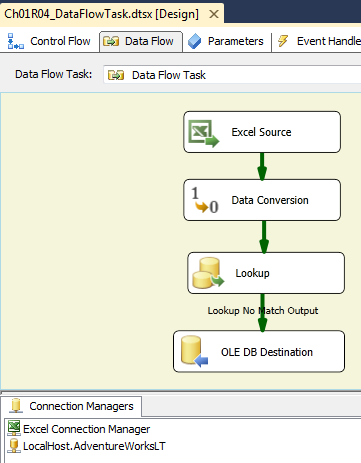

While the Control Flow tab under the Package Designer is where the main workflow of the package is manipulated, the Data Flow tab introduced in this recipe is the place where data is transformed and moved between a source and destination. The Data Flow tab under the Package Designer is used to create or edit such transformations and movements for each Data Flow task which is placed in the Control Flow tab.

It's possible to have several Data Flows in the Control Flow, but the order of execution for those Data Flows should be planned carefully. To be familiar with the Data Flow task, this recipe introduces a simple but very common scenario of incorporating the content of an Excel file into a database, a SQL Server database for example.

Open SQL Server Data Tools (SSDT).

Create a new project and provide a name and location for it.

Open the empty

package.dtsxcreated by default and rename it toP01_DataFlowTask.dtsx.

Imagine a case where SSIS needs to periodically integrate data produced by an external system, and this data needs to be prepared to fit the destination requirements such as schema and data values exactly. To accomplish this task, we need to read data from an Excel worksheet, perform data conversions, verify whether data already exists at the destination, and finally insert into an SQL table at the destination.

Drag-and-drop a Data Flow task from the SSIS Toolbox to Control Flow.

Open Data Flow for editing by double-clicking under the task or just right-click and select Edit.

Make sure that the Data Flow tab is selected in the Package Designer. At this step Data Flow is naturally empty.

Because we need to read some data from an Excel file, simply drag-and-drop the Excel Source component (under the Data Sources group) from SSIS Toolbox and place it into the Package Designer area. Note that SSIS Toolbox has different content listed; it has components in spite of tasks that exist when the Control Flow is selected in the Package Designer.

Double-click on the Excel Source component and click on the New button to create a connection to the source Excel file.

Set the Excel file's path to

C:\SSIS\Ch01_Getting Start with SSIS\FILES\R04_NewCustomers.xlsxand click on OK.In the Excel Source Editor, set the name of the Excel sheet to

Sheet1$.Select the Columns tab and choose the columns Firstname and Lastname, which will be used along the data flow.

Click on OK to finish editing Excel Source.

Drag-and-drop Data Conversion from SSIS Toolbox to the Package Designer.

Select the column's FirstName and LastName, and change the Length for each to 50 characters.

Drag-and-drop the Lookup component from SSIS Toolbox into the Package Designer.

Maintain all the properties with default values and create a connection to an SQL destination database (

AdventureWorksLT).In the list to select table or view, select the destination table 'SalesLT'.'Customer'.

Map the source converted columns Copy of FirstName and Copy of LastName to the destination SQL columns.

Click on OK to finish editing the lookup.

Drag-and-drop the OLE DB Destination component from SSIS Toolbox into the Package Designer in order to load data into SQL.

Link the output No Match Rows from Lookup to insert into destination only those records that don't yet exist at the destination.

Edit the destination component and set the SQL connection and also the destination table; maintain all the default values for the remaining controls.

Click on OK to finish editing the OLE DB Destination component .

Run the package by pressing F5.

This Data Flow reads some customer data (first name and last name) from an Excel file, applies some common transformations and inserts the data into an SQL table named SalesLT.Customer. Some transformations are usually applied to make source data fit the destination's requirements. In this example, data is converted and we verified whether the current data in the incoming rows already exists at the destination (the purpose is to avoid data duplication).

Detailed descriptions about each source (Transformation and Destination) used in this example will be explored in later chapters. In this recipe, we just need an overview of Data Flow.