Software architecture and design also keep evolving, driven by the target environment and the growing size of applications.

Trend of microservices

Modular programming

As applications grew larger, developers began dividing them into modules. Each module should be independent and reusable, and can be maintained by a different development team. An application then simply initializes and combines these modules, which makes it possible to build a larger application efficiently.

The following example shows which libraries Nginx (https://www.nginx.com) uses on CentOS 7. It indicates that Nginx uses OpenSSL, the POSIX thread library, the PCRE regular expression library, the zlib compression library, the GNU C library, and so on. In other words, Nginx didn't reinvent SSL encryption, regular expressions, and so on:

    $ /usr/bin/ldd /usr/sbin/nginx
    linux-vdso.so.1 => (0x00007ffd96d79000)
    libdl.so.2 => /lib64/libdl.so.2 (0x00007fd96d61c000)
    libpthread.so.0 => /lib64/libpthread.so.0 (0x00007fd96d400000)
    libcrypt.so.1 => /lib64/libcrypt.so.1 (0x00007fd96d1c8000)
    libpcre.so.1 => /lib64/libpcre.so.1 (0x00007fd96cf67000)
    libssl.so.10 => /lib64/libssl.so.10 (0x00007fd96ccf9000)
    libcrypto.so.10 => /lib64/libcrypto.so.10 (0x00007fd96c90e000)
    libz.so.1 => /lib64/libz.so.1 (0x00007fd96c6f8000)
    libprofiler.so.0 => /lib64/libprofiler.so.0 (0x00007fd96c4e4000)
    libc.so.6 => /lib64/libc.so.6 (0x00007fd96c122000)
    ...
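The same idea of reusing existing modules applies at the source code level in any language. As a minimal sketch (Python is used here only for illustration; the helper `summarize_log` and the sample log lines are hypothetical), an application can rely on existing compression and regular expression modules instead of reimplementing them:

```python
import re
import zlib

# Hypothetical pattern: the three-digit HTTP status code that follows
# the quoted request line in an access-log entry.
STATUS_PATTERN = re.compile(r'" (\d{3}) ')


def summarize_log(compressed: bytes) -> dict:
    """Decompress a log with zlib and count status codes with re."""
    text = zlib.decompress(compressed).decode("utf-8")
    counts = {}
    for status in STATUS_PATTERN.findall(text):
        counts[status] = counts.get(status, 0) + 1
    return counts


# Made-up sample data, for illustration only.
sample = (
    '127.0.0.1 - - "GET / HTTP/1.1" 200 612\n'
    '127.0.0.1 - - "GET /missing HTTP/1.1" 404 153\n'
    '127.0.0.1 - - "GET / HTTP/1.1" 200 612\n'
)
print(summarize_log(zlib.compress(sample.encode("utf-8"))))
```

Neither the compression algorithm nor the pattern matching engine is written by the application; it only combines the two modules.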

Package management

Java and several lightweight programming languages such as Python, Ruby, and JavaScript have their own module or package management tools: for example, Maven (http://maven.apache.org) for Java, pip (https://pip.pypa.io) for Python, RubyGems (https://rubygems.org) for Ruby, and npm (https://www.npmjs.com) for JavaScript.

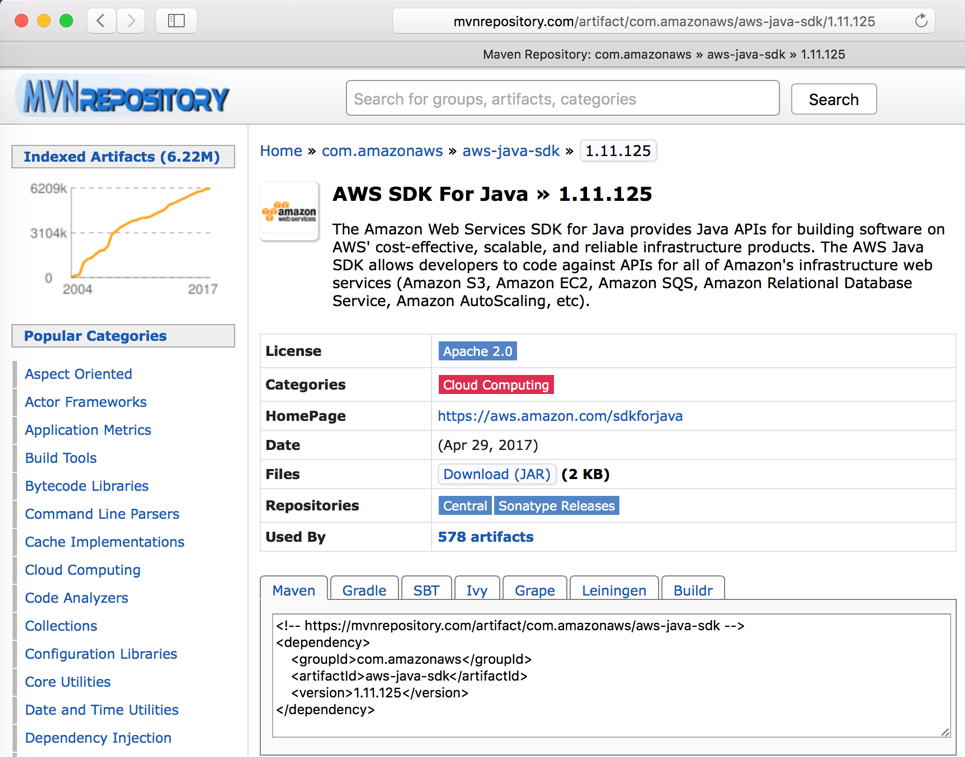

A package management tool allows you to register your module or package in a centralized or private repository, and also to download the packages you need. The following screenshot shows the Maven repository for the AWS SDK:

When you add particular dependencies to your application, Maven downloads the necessary packages. The following screenshot is the result you get when you add the aws-java-sdk dependency to your application:

Modular programming helps you increase software development speed and avoid reinventing the wheel, so it is now the most popular way to develop software applications.

However, as we keep adding features and logic, applications need more and more combinations of modules, packages, and frameworks. This makes applications larger and more complex, especially server-side applications, which usually need to connect to a database such as an RDBMS and to an authentication server such as LDAP, and then return the result to the user as HTML with an appropriate design.

Therefore, developers have adopted software design patterns to organize the many modules within an application.

MVC design pattern

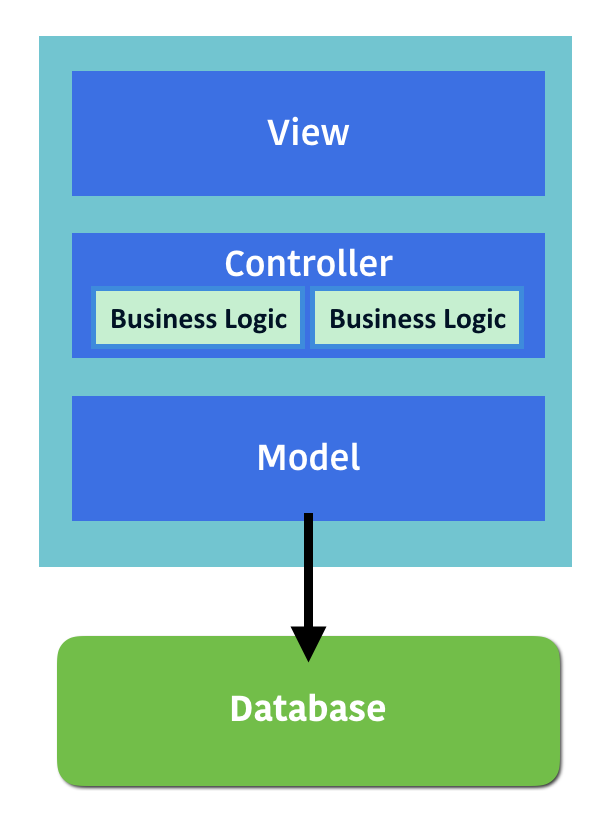

One of the most popular application design patterns is Model-View-Controller (MVC). It defines three layers. The View layer is in charge of user interface (UI) input and output (I/O). The Model layer is in charge of data queries and persistence, such as loading from and storing to a database. The Controller layer is in charge of the business logic that sits between the View and the Model:

There are frameworks that make MVC easier for developers, such as Struts (https://struts.apache.org/), SpringMVC (https://projects.spring.io/spring-framework/), Ruby on Rails (http://rubyonrails.org/), and Django (https://www.djangoproject.com/). MVC is one of the most successful software design patterns and serves as the foundation of modern web applications and services.
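To make the three layers concrete, here is a minimal sketch in plain Python that does not use any of the frameworks above. All class and method names (`UserModel`, `UserView`, `UserController`, `register`, `show`) are hypothetical, and an in-memory dictionary stands in for the database:

```python
from dataclasses import dataclass


@dataclass
class User:
    user_id: int
    name: str


class UserModel:
    """Model: data query and persistence (a dict stands in for a database)."""

    def __init__(self):
        self._rows = {}

    def save(self, user):
        self._rows[user.user_id] = user

    def find(self, user_id):
        return self._rows.get(user_id)


class UserView:
    """View: renders output for the user interface."""

    def render(self, user):
        if user is None:
            return "<p>User not found</p>"
        return f"<p>User {user.user_id}: {user.name}</p>"


class UserController:
    """Controller: business logic that sits between View and Model."""

    def __init__(self, model, view):
        self.model = model
        self.view = view

    def register(self, user_id, name):
        cleaned = name.strip()
        if not cleaned:
            raise ValueError("name must not be empty")
        self.model.save(User(user_id, cleaned))

    def show(self, user_id):
        return self.view.render(self.model.find(user_id))


controller = UserController(UserModel(), UserView())
controller.register(123, "  Alice ")
print(controller.show(123))
print(controller.show(999))
```

Because each layer only talks to its neighbor through a small interface, different developers could work on the Model, View, and Controller separately.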

MVC defines a borderline between layers, which allows many developers to work on the same application together. However, it has a side effect: the source code within the application keeps growing, because the database code (Model), presentation code (View), and business logic (Controller) all live in the same VCS repository. Eventually this slows down the software development cycle again. Such an application is called monolithic: it contains a lot of code that builds into one giant EXE or WAR program.

Monolithic application

There is no clear-cut definition of a monolithic application, but it typically has more than 50 modules or packages and more than 50 database tables, and takes more than 30 minutes to build. Adding or modifying one module affects a lot of code, so developers try to minimize changes to the application code. This hesitation makes things worse, and sometimes the application even dies because no one wants to maintain the code anymore.

Therefore, developers started to divide monolithic applications into small applications that are connected via the network.

Remote Procedure Call

In fact, dividing an application into small pieces connected via the network was already attempted back in the 1990s. Sun Microsystems introduced Sun RPC (Remote Procedure Call), which allows you to use a module remotely. One of the popular users of Sun RPC is Network File System (NFS). Because NFS is based on Sun RPC, the CPU architecture and OS version of the NFS client and the NFS server can differ.

Programming languages also support RPC-style functionality. UNIX and the C language have the rpcgen tool, which generates stub code that takes care of network communication, so the developer can call the remote procedure in C function style and is relieved from difficult network-layer programming.
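The role of a stub can be illustrated with a small sketch. It does not use Sun RPC or rpcgen; instead it assumes Python's standard `xmlrpc` modules, which implement XML-RPC over HTTP, as a stand-in. The procedure name `add` is hypothetical. The proxy object plays the part of the client stub, so the caller writes an ordinary function call and never touches sockets:

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def add(a, b):
    """The remote procedure, implemented on the server side."""
    return a + b


# Bind to port 0 so that the OS picks a free port for this demo.
server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
server.register_function(add, "add")
port = server.server_address[1]
threading.Thread(target=server.serve_forever, daemon=True).start()

# The proxy acts as the client stub: it serializes the arguments,
# sends them over the network, and deserializes the result.
proxy = ServerProxy(f"http://127.0.0.1:{port}/")
result = proxy.add(2, 3)
print(result)

server.shutdown()
server.server_close()
```

In this sketch the server and the client live in one process for convenience; in practice they would run as separate programs on different machines.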

Java has Java Remote Method Invocation (RMI), which is similar to Sun RPC but for Java. The RMI compiler (rmic) generates the stub code that connects remote Java processes to invoke a method and get the result back. The following diagram shows the Java RMI procedure flow:

Objective-C also has distributed objects and .NET has remoting, so most modern programming languages support Remote Procedure Call out of the box.

These Remote Procedure Call designs make it possible to divide an application into multiple processes (programs). Individual programs can have separate source code repositories. This worked well, even though machine resources (CPU, memory) were limited during the 1990s and 2000s.

However, these designs were intended for use within a single programming language and for a client/server architecture rather than a distributed architecture. In addition, security received little consideration, so using them over a public network is not recommended.

In the 2000s, there was an initiative called web services, which used SOAP (over HTTP/SSL) as the data transport, XML as the data presentation, Web Services Description Language (WSDL) as the service definition, and Universal Description, Discovery, and Integration (UDDI) as the service registry for looking up a web services application. However, because machine resources were not plentiful and web services were complex to program and maintain, the approach was not widely accepted by developers.

RESTful design

In the 2010s, machines and even smartphones have plenty of CPU power, and network bandwidth of a few hundred Mbps is available almost everywhere. Developers therefore started to use these resources to make application code and system structure as simple as possible, which makes the software development cycle quicker.

Given these hardware resources, it is a natural decision to use HTTP/SSL as the RPC transport. Having experienced the difficulty of web services, however, developers kept it simple as follows:

- By making HTTP and SSL/TLS a standard transport

- By using HTTP methods for Create/Read/Update/Delete (CRUD) operations, such as POST/GET/PUT/DELETE

- By using a URI as the resource identifier, for example, /user/123/ for user ID 123

- By using JSON as the standard data presentation

This is called RESTful design. It has been widely accepted by many developers and has become the de facto standard for distributed applications. Because it is HTTP-based, a RESTful application can be written in any programming language, so a RESTful server in Java with a client in Python is perfectly natural.
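The conventions listed above can be sketched with nothing but the Python standard library. This is only an illustration under stated assumptions: the handler `UserHandler`, the helper `call`, the in-memory `USERS` dictionary, and the choice of status codes are all hypothetical, and the server speaks plain HTTP without SSL/TLS for brevity. The resource is identified by the URI /user/123/, the HTTP method selects the operation, and JSON carries the data:

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

USERS = {}  # in-memory stand-in for a datastore, keyed by user ID


class UserHandler(BaseHTTPRequestHandler):
    def _user_id(self):
        # Expect paths shaped like /user/123/
        parts = self.path.strip("/").split("/")
        return parts[1] if len(parts) == 2 and parts[0] == "user" else None

    def _read_json(self):
        length = int(self.headers.get("Content-Length", 0))
        return json.loads(self.rfile.read(length) or b"{}")

    def _reply(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_POST(self):  # Create
        uid, payload = self._user_id(), self._read_json()
        if uid is None:
            self._reply(404, {"error": "not found"})
        elif uid in USERS:
            self._reply(409, {"error": "already exists"})
        else:
            USERS[uid] = payload
            self._reply(201, payload)

    def do_GET(self):  # Read
        uid = self._user_id()
        if uid in USERS:
            self._reply(200, USERS[uid])
        else:
            self._reply(404, {"error": "not found"})

    def do_PUT(self):  # Update
        uid, payload = self._user_id(), self._read_json()
        if uid in USERS:
            USERS[uid] = payload
            self._reply(200, payload)
        else:
            self._reply(404, {"error": "not found"})

    def do_DELETE(self):  # Delete
        uid = self._user_id()
        if USERS.pop(uid, None) is None:
            self._reply(404, {"error": "not found"})
        else:
            self._reply(200, {"deleted": uid})

    def log_message(self, *args):
        pass  # keep the demo output quiet


def call(method, url, payload=None):
    """Tiny client: send JSON, return (status code, decoded JSON body)."""
    data = None if payload is None else json.dumps(payload).encode("utf-8")
    req = urllib.request.Request(
        url, data=data, method=method,
        headers={"Content-Type": "application/json"},
    )
    try:
        with urllib.request.urlopen(req) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as err:
        return err.code, json.loads(err.read())


server = HTTPServer(("127.0.0.1", 0), UserHandler)  # port 0: any free port
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f"http://127.0.0.1:{server.server_address[1]}/user/123/"

created = call("POST", url, {"name": "Alice"})
fetched = call("GET", url)
updated = call("PUT", url, {"name": "Bob"})
deleted = call("DELETE", url)
missing = call("GET", url)
print(created, fetched, updated, deleted, missing)

server.shutdown()
server.server_close()
```

Since the contract is just HTTP plus JSON, the client half of this sketch could be replaced by a program in any other language without changing the server.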

This brings freedom and opportunities to developers: it is easy to refactor code, upgrade a library, and even switch to another programming language. It also encourages developers to build a distributed modular design out of multiple RESTful applications, which is called microservices.

If you have multiple RESTful applications, there is the concern of how to manage multiple source code repositories in a VCS and how to deploy multiple RESTful servers. However, Continuous Integration and Continuous Delivery automation lowers the bar for building and deploying an application that consists of multiple RESTful servers.

Therefore, microservices design is becoming popular among web application developers.

Microservices

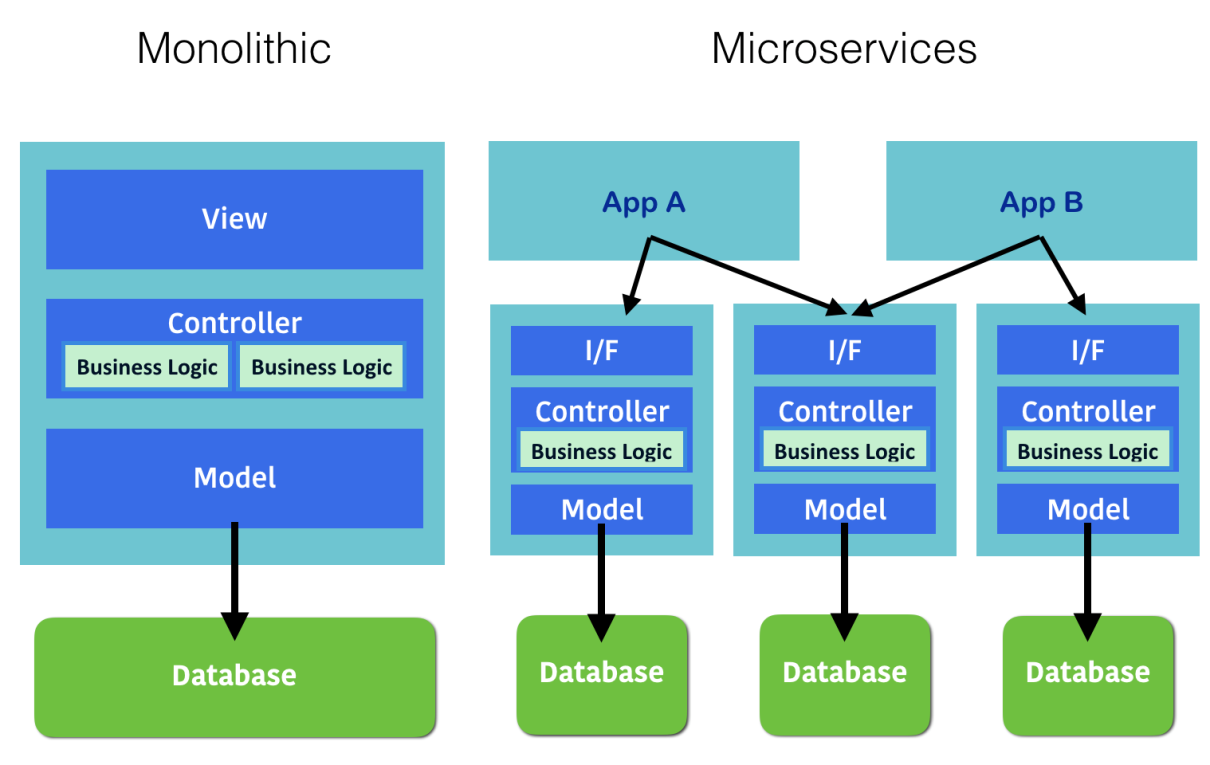

Although the name says micro, a microservice is actually rather heavy compared to applications from the 1990s or 2000s. It uses a full HTTP/SSL server stack and contains all of the MVC layers. A microservices design should take care of the following topics:

- Stateless: The microservice doesn't store user sessions in the system, which makes it easier to scale out.

- No shared datastore: The microservice should own its datastore, such as a database, and shouldn't share it with other applications. This encapsulates the backend database, which makes it easy to refactor and update the database schema within a single microservice.

- Versioning and compatibility: The microservice may change and update its API, but it should define a version and remain backward compatible. This helps to decouple it from other microservices and applications.

- Integrate CI/CD: The microservice should adopt CI and CD processes to minimize management effort.
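As a rough sketch of the versioning and datastore points, the following Python fragment keeps an old and a new API version side by side. Everything in it is hypothetical: the `/v1/` and `/v2/` path prefixes are just one possible versioning convention, and the `_USERS` dictionary stands in for a database that only this microservice owns. The functions keep no session state between calls, so every request carries all the information needed to answer it:

```python
# Hypothetical datastore owned exclusively by this microservice.
_USERS = {"123": {"first_name": "Alice", "last_name": "Smith"}}


def get_user_v1(user_id):
    """v1 contract: a single 'name' field, kept for existing clients."""
    row = _USERS[user_id]
    return {"id": user_id, "name": f"{row['first_name']} {row['last_name']}"}


def get_user_v2(user_id):
    """v2 contract: separate name fields, added without breaking v1."""
    row = _USERS[user_id]
    return {
        "id": user_id,
        "first_name": row["first_name"],
        "last_name": row["last_name"],
    }


ROUTES = {"v1": get_user_v1, "v2": get_user_v2}


def handle(path):
    """Dispatch paths such as /v1/user/123 to the matching API version."""
    parts = path.strip("/").split("/")
    if len(parts) != 3 or parts[1] != "user" or parts[0] not in ROUTES:
        raise LookupError(f"unsupported path: {path}")
    return ROUTES[parts[0]](parts[2])


print(handle("/v1/user/123"))
print(handle("/v2/user/123"))
```

Clients written against v1 keep working after v2 ships, and the internal storage layout can change freely because no other application reads `_USERS` directly.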

There are frameworks that help to build microservice applications, such as Spring Boot (https://projects.spring.io/spring-boot/) and Flask (http://flask.pocoo.org). However, there are many HTTP-based frameworks, so developers can feel free to try and choose any preferred framework or even programming language. This is the beauty of microservice design.

The following diagram compares monolithic application design with microservices design. It indicates that a microservice has the same (MVC) structure as a monolithic application: it contains an interface layer, business logic layer, model layer, and datastore.

The difference is that the application (service) is composed of multiple microservices, and different applications can share the same microservice underneath:

Developers can add a necessary microservice or modify an existing one with a rapid software delivery method, without affecting existing applications (services).

This is a breakthrough for the entire software development environment and methodology, and it is now being widely accepted by many developers.

Although Continuous Integration and Continuous Delivery automation helps to develop and deploy multiple microservices, the number and complexity of resources, such as virtual machines, OSes, libraries, disk volumes, and networks, is far greater than for monolithic applications.

Therefore, there are tools and roles that support these large automation environments on the cloud.