Having covered virtualization as a technology, and server consolidation as a technique that takes advantage of it, let's move on to the next section of this chapter. Here we will discuss the Hyper-V architecture and look more closely at how its architectural components work together to provide hardware-assisted virtualization.

Before we discuss the core elements of the Hyper-V architecture, let's quickly look at the definition of a hypervisor and its available types, so that we can better understand Hyper-V as a hypervisor.

Hypervisor is a term used to describe the software stack, or sometimes the operating system feature, that allows us to create multiple virtual machines that share the resources of the same physical server. Depending on the type, some hypervisors run on top of the operating system layer, while others sit underneath the operating system and interact directly with hardware resources such as the processor, RAM, and NIC. We will look at these different types of hypervisors shortly, in the coming topics.

Hypervisor is not a new term that originated with VMware or Microsoft. If you look at the history of this term, it takes you back to the year 1965, when IBM first upgraded the code for its 360 mainframe system's computing platform to support memory virtualization. By evolving this technique, IBM addressed a number of architectural limitations of mainframes and greatly advanced computing as a technology.

Now let's discuss the various available hypervisor types, which may be categorized as shown next.

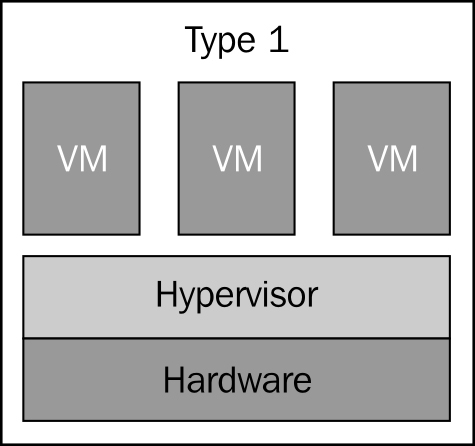

Type 1, or bare-metal, hypervisors run directly on the server hardware. They get more control over the host hardware, and thus provide better performance and security. Guest virtual machines run on top of the hypervisor layer. Several hypervisors available on the market belong to this family, for example, Microsoft Hyper-V, VMware vSphere ESXi Server, and Citrix XenServer.

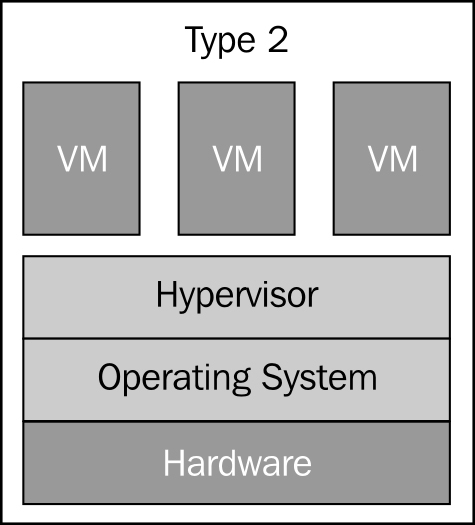

Type 2 hypervisors run on top of the operating system, as an application installed on the server, which is why they are often referred to as hosted hypervisors. In type 2 hypervisor environments, the guest virtual machines also run on top of the hypervisor layer.

The preceding diagrams illustrate the difference between type 1 and type 2 hypervisors, where you can see that in the type 1 hypervisor architecture, the hypervisor is the first layer right after the base hardware. This allows the hypervisor to have more control and better access to the hardware resources.

In the type 2 hypervisor architecture, on the other hand, the hypervisor is installed on top of the operating system layer, so it cannot access the hardware directly. Every hardware request has to pass through the host operating system, which adds overhead for the hypervisor. As a result, the same hardware can typically run more virtual machines on a type 1 hypervisor than on a type 2 hypervisor.

The second major disadvantage of type 2 hypervisors is that the hypervisor runs as a service of the host operating system (a Windows NT service, for example), so if this service is killed, the virtualization platform is no longer available. Examples of type 2 hypervisors are Microsoft Virtual Server, Virtual PC, and VMware Player/VMware Workstation.
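To make the layering difference concrete, here is a minimal Python sketch that models each architecture as an ordered stack, from the hardware upward. The layer names and the helper functions `layers_between` and `hypervisor_touches_hardware` are illustrative assumptions, not part of any hypervisor product; the sketch only counts layers and says nothing about real performance.

```python
# Toy model: each architecture is an ordered stack, from hardware upward.
TYPE1_STACK = ["hardware", "hypervisor", "guest VM"]
TYPE2_STACK = ["hardware", "host operating system", "hypervisor", "guest VM"]


def layers_between(stack, upper="guest VM", lower="hardware"):
    """Count the software layers that sit between two layers of a stack."""
    return stack.index(upper) - stack.index(lower) - 1


def hypervisor_touches_hardware(stack):
    """Return True when the hypervisor sits directly above the hardware."""
    return stack.index("hypervisor") - stack.index("hardware") == 1


if __name__ == "__main__":
    for name, stack in (("type 1", TYPE1_STACK), ("type 2", TYPE2_STACK)):
        print(name, layers_between(stack), hypervisor_touches_hardware(stack))
```

In this model a guest request crosses one intermediate layer on a type 1 hypervisor and two on a type 2 hypervisor, which is the extra hop through the host operating system described above.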

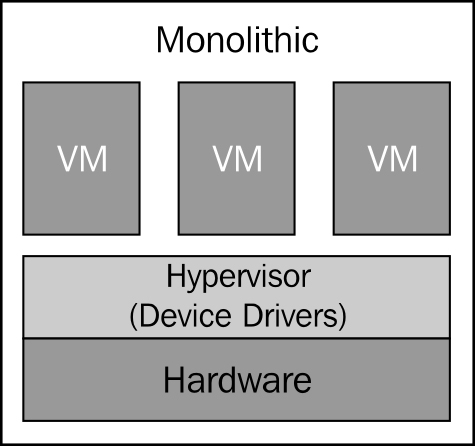

The monolithic hypervisor is a subtype of the type 1 hypervisor. This type of hypervisor hosts hypervisor-aware device drivers on behalf of the guest operating systems. Monolithic hypervisors have some benefits, but also a couple of disadvantages. The benefit is that they don't need a parent or controlling operating system, and thus they have direct control over the server hardware.

The first disadvantage of monolithic hypervisors is that not every hardware vendor has device drivers ready for them, because there are a large number of different motherboards and other devices. Finding hardware from a vendor that supports a specific monolithic hypervisor can therefore be a hard task when you are choosing the right hardware for your hypervisor. The same restriction can also be counted as an advantage, because each driver for a monolithic hypervisor is tested and verified by the hypervisor manufacturer.

The second disadvantage of a monolithic hypervisor is that the hypervisor, together with the drivers it hosts, runs at the most privileged level (Ring -1) with direct access to hardware resources, which may open the door to malicious activity that takes advantage of this excessive privilege. This exposure goes against the trusted computing base (TCB) concept. VMware vSphere ESXi Server is an example of this type of bare-metal hypervisor.

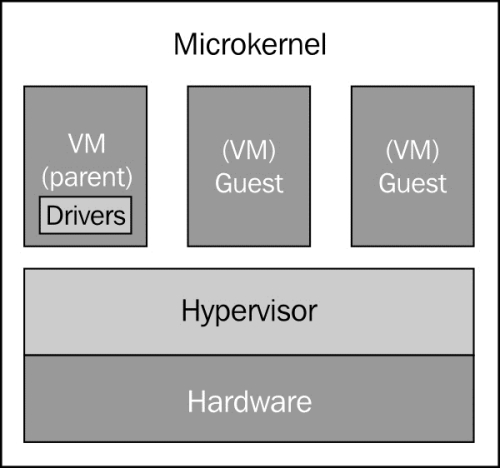

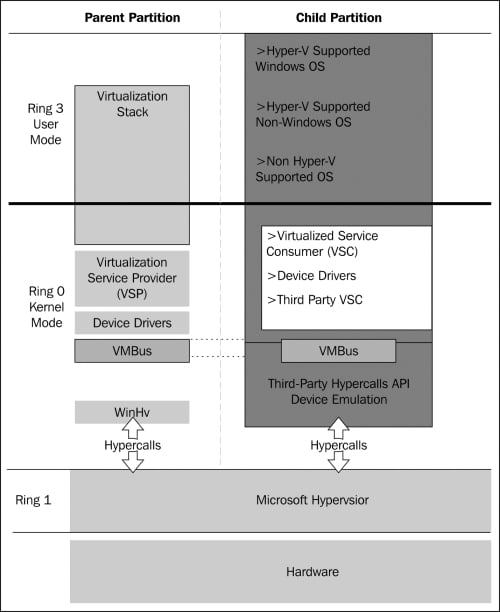

In a microkernel hypervisor, the device drivers run in kernel mode (Ring 0) and in user mode (Ring 3) of the operating system that forms the trusted computing base. Only CPU and memory scheduling happens in the hypervisor layer itself (Ring -1).

The advantage of this type of hypervisor is that finding compatible hardware is not a problem, since the majority of hardware manufacturers already provide compatible device drivers for operating systems.

A microkernel hypervisor requires the device drivers for the physical hardware to be installed in the operating system that runs in the parent partition of the hypervisor. This means that we don't need to install physical device drivers in each guest operating system running in a child partition; when a guest operating system needs to access physical hardware resources on the host computer, it does so by communicating through the parent partition. Another benefit of microkernel-based hypervisors is that they respect the TCB concept, because the hypervisor works within limited, privileged boundaries. Hyper-V, Microsoft's implementation of hardware-assisted virtualization, is an example of a microkernel-based hypervisor.
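The routing described above can be sketched with a small Python toy model. The class names `ParentPartition` and `ChildPartition`, the `nic` device, and the lambda standing in for a vendor driver are all hypothetical; real Hyper-V uses its own inter-partition communication mechanisms, which this sketch does not attempt to reproduce.

```python
class ParentPartition:
    """Holds the only physical device drivers in this toy model."""

    def __init__(self, drivers):
        # drivers maps a device name to a callable standing in for a driver.
        self.drivers = dict(drivers)

    def handle_io(self, child_name, device, payload):
        if device not in self.drivers:
            raise LookupError(f"no driver installed for {device!r}")
        return self.drivers[device](f"{child_name}: {payload}")


class ChildPartition:
    """A guest that has no physical device drivers of its own."""

    def __init__(self, name, parent):
        self.name = name
        self.parent = parent

    def request_io(self, device, payload):
        # The guest never touches hardware; it forwards to the parent.
        return self.parent.handle_io(self.name, device, payload)


# One driver, installed once in the parent, serves every child partition.
parent = ParentPartition({"nic": lambda data: f"nic sent [{data}]"})
vm1 = ChildPartition("vm1", parent)
vm2 = ChildPartition("vm2", parent)
print(vm1.request_io("nic", "ping"))
```

The point of the design is that adding a third guest requires no new driver: the single driver registered in the parent serves every child partition.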

Tip

What is a trusted computing base?

You can find information about TCB at http://csrc.nist.gov/publications/history/dod85.pdf.

Now let's take a look at the Hyper-V architecture diagram, as follows:

As you can see in the preceding diagram, Hyper-V behaves as a type 1 hypervisor, which runs on top of hardware. Running on top of the hypervisor are one parent partition and one or more child partitions. A partition is simply a unit of isolation within the hypervisor that is allocated physical memory address space and virtual processors. Now let's discuss the parent and child partitions.

The parent partition is the partition that has full access to the hardware devices and control over the local virtualization stack. The parent partition has the rights to create child partitions and to manage all the components related to them.

As we just said, a child partition is created by the parent partition, and all the components related to a guest virtual machine run within its child partition.

Having seen these two major elements of the hypervisor virtualization stack, we will now look at some more elements of the Hyper-V virtualization stack.

When we install the Hyper-V role on supported hardware, the server has to be restarted to complete the installation. Right after this restart, the hypervisor goes underneath the operating system layer and the parent partition gets created, so that the Windows Server 2012 operating system now runs in the parent partition, on top of the hypervisor. From the basic definition, we understood that the parent partition is the brain of Hyper-V virtualization stack management, and that it manages the components that run in the child partitions. The parent partition also makes sure that the hypervisor has adequate access to all the hardware resources, and the trusted computing base concept is applied while accessing these resources.

In addition, when you start your Hyper-V server, the parent partition is the first partition to get created, and while virtual machines are running on the Hyper-V server, it is the parent partition that provisions the child partitions on the hypervisor. The parent partition also acts as the middleman between the virtual machines (child partitions) and the hardware when resources are accessed.
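As a rough illustration of this lifecycle, the following Python sketch models a partition as a unit that is allocated memory and virtual processors, creates the parent partition first, and only then lets child partitions be provisioned. The `Host` and `Partition` classes, their method names, and the fixed memory accounting are assumptions made for the example; they are not Hyper-V APIs, and real Hyper-V memory management is considerably more sophisticated.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Partition:
    """A unit of isolation with its own memory and virtual processors."""
    name: str
    memory_mb: int
    virtual_processors: int


@dataclass
class Host:
    """Toy model of a server running a hypervisor (not a real API)."""
    total_memory_mb: int
    parent: Optional[Partition] = None
    children: List[Partition] = field(default_factory=list)

    def start(self, parent_memory_mb, parent_vps):
        # The parent partition is always the first partition created.
        self.parent = Partition("parent", parent_memory_mb, parent_vps)
        return self.parent

    def free_memory_mb(self):
        used = sum(child.memory_mb for child in self.children)
        if self.parent is not None:
            used += self.parent.memory_mb
        return self.total_memory_mb - used

    def provision_child(self, name, memory_mb, virtual_processors):
        # Only an existing parent partition can provision child partitions.
        if self.parent is None:
            raise RuntimeError("the parent partition must be created first")
        if memory_mb > self.free_memory_mb():
            raise ValueError(f"not enough free memory for {name!r}")
        child = Partition(name, memory_mb, virtual_processors)
        self.children.append(child)
        return child


host = Host(total_memory_mb=8192)
host.start(parent_memory_mb=2048, parent_vps=4)
host.provision_child("vm1", memory_mb=4096, virtual_processors=2)
print(host.free_memory_mb())
```

Trying to provision a child before `start` has been called raises an error in this model, mirroring the rule that the parent partition must exist before any child partition can be created.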