There are plenty of misconceptions about virtualization, especially among nontechnical IT folk. CIOs who have not yet felt the strategic impact of virtualization (be it a good or a bad experience) tend to carry these misconceptions. Although a virtual environment looks similar to a physical one on the surface, it is completely re-architected under the hood.

So let's take a look at the first misconception: what exactly is virtualization? Because it is an industry trend, the term is often stretched to cover other technologies that do not virtualize anything. This is a typical strategy of IT vendors who have similar technology. A popular technology often branded as virtualization is partitioning. Once it is parked under the virtualization umbrella, the assumption follows that both should be managed the same way. Because the two are actually different, customers who try to manage both with a single piece of management software struggle to do it well.

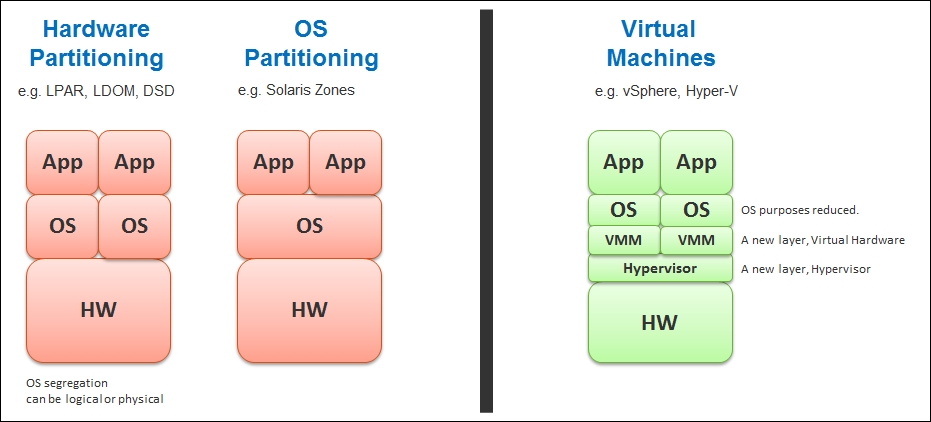

Partitioning and virtualization are two very different architectures in computer engineering, resulting in major differences in functionalities. They are shown in the following figure:

Virtualization versus Partitioning

With partitioning, there is no hypervisor that virtualizes the underlying hardware. There is no software layer separating the Virtual Machine (VM) from the physical motherboard. There is, in fact, no VM. This is why some technical manuals for partitioning technologies do not even use the term VM; they use the term domain or partition instead.

There are two variants of partitioning technology, hardware-level and OS-level, which are covered in the following bullet points:

- In hardware-level partitioning, each partition runs directly on the hardware. It is not virtualized, which is why it is more scalable and takes less of a performance hit. Because it is not virtualized, it has to be aware of the underlying hardware. As a result, it is not fully portable: you cannot move a partition from one hardware model to another. The hardware has to be purpose-built to support that specific version of the partition. The partitioned OS still needs all the hardware drivers and will not work on other hardware if the compatibility matrix does not match. Even the version of the OS matters, just as it does on a physical server.

- In OS-level partitioning, a parent OS runs directly on the server motherboard. This OS then creates OS partitions in which other "OSes" can run. I use double quotes because it is not exactly a full OS that runs inside the partition. The OS has to be modified and qualified to run as a Zone or Container, and because of this, application compatibility is affected. This is very different from a VM, where there is no application compatibility issue because the hypervisor is transparent to the Guest OS.

We have covered the difference from an engineering point of view. However, does it translate into a different data center architecture and different operations? Take availability, for example. With virtualization, all VMs become protected by High Availability (HA): 100 percent protection, achieved without the VMs being aware of it. Nothing needs to be done at the VM layer: no shared or quorum disk and no heartbeat network. With partitioning, protection has to be configured manually, one by one, for each IBM LPAR or Sun/Oracle LDOM, because the underlying platform does not provide it. With virtualization, you can even go beyond five 9s (99.999 percent) and reach 100 percent with Fault Tolerance. This is not possible with partitioning, as there is no hypervisor to replay the CPU instructions. Also, because Fault Tolerance is provided by the hypervisor and is transparent to the VM, you can turn it on and off on demand. It is defined entirely in software.
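To put "five 9s" in concrete terms, here is a short Python sketch that converts an availability percentage into the maximum downtime it allows per year. It is plain arithmetic under the assumption of a 365-day year with all downtime counted equally; it is not a vSphere calculation, and the function name is purely illustrative.

```python
# Downtime budget implied by an availability target ("number of nines").
# Assumption: a 365-day year; leap years and planned maintenance windows are ignored.

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def downtime_minutes_per_year(availability_percent: float) -> float:
    """Return the maximum yearly downtime, in minutes, allowed by an availability target."""
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability must be between 0 and 100 percent")
    # The unavailable fraction of the year, converted to minutes.
    return MINUTES_PER_YEAR * (1.0 - availability_percent / 100.0)


if __name__ == "__main__":
    for target in (99.9, 99.99, 99.999, 100.0):
        print(f"{target:>7}% -> {downtime_minutes_per_year(target):8.2f} min/year")
```

At 99.999 percent the budget is roughly 5.26 minutes a year. The 100 percent that the text attributes to Fault Tolerance corresponds to a budget of zero.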

Another area of difference between partitioning and virtualization is Disaster Recovery (DR). With partitioning, the DR site requires another instance to protect the production instance. It is a different instance, with its own OS image, hostname, and IP address. Yes, we can do a SAN boot, but that means another LUN to manage, zone, replicate, and so on. This approach does not scale to thousands of servers; to scale, DR has to be simpler. Virtualization takes a very different approach. The entire VM fits inside a folder; it becomes like a document, and we migrate the entire folder as if it were one object. This is what vSphere Replication in Site Recovery Manager does. It replicates per VM, so there is no SAN boot to worry about. The entire DR exercise, which can cover thousands of virtual servers, is completely automated, with audit logs generated automatically. Many large enterprises have automated their DR with virtualization. There is probably no company that has automated DR for its entire LPAR or LDOM estate.
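The idea of a VM as "a folder that moves as one object" can be illustrated with a small Python sketch. It is a conceptual model only: the folder layout, the placeholder file contents, and the helper names `replicate_vm_folder` and `folder_digest` are hypothetical, and this is not how vSphere Replication is actually implemented. It simply copies one VM folder to a DR location as a single unit and verifies that the replica matches the source.

```python
# Conceptual sketch: treat a VM as one self-contained folder and replicate it as a single unit.
# Hypothetical: folder layout, file contents, and helper names. This does NOT reflect how
# vSphere Replication works internally; it only illustrates "one folder = one object".
import hashlib
import shutil
import tempfile
from pathlib import Path


def folder_digest(folder: Path) -> str:
    """Hash every file (relative path + contents) so two copies of a VM folder can be compared."""
    h = hashlib.sha256()
    for path in sorted(p for p in folder.rglob("*") if p.is_file()):
        h.update(path.relative_to(folder).as_posix().encode())
        h.update(b"\0")  # separator between the path and the file contents
        h.update(path.read_bytes())
    return h.hexdigest()


def replicate_vm_folder(vm_folder: Path, dr_location: Path) -> Path:
    """Copy the whole VM folder to the DR location as one object and verify the copy."""
    target = dr_location / vm_folder.name
    if target.exists():
        shutil.rmtree(target)  # replace the previous replica wholesale
    shutil.copytree(vm_folder, target)
    if folder_digest(target) != folder_digest(vm_folder):
        raise RuntimeError(f"replica of {vm_folder.name} does not match the source")
    return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as prod, tempfile.TemporaryDirectory() as dr:
        vm = Path(prod) / "app01"
        vm.mkdir()
        # Placeholder contents standing in for a VM's configuration and virtual disk files.
        (vm / "app01.vmx").write_text('displayName = "app01"\n')
        (vm / "app01.vmdk").write_bytes(b"\x00" * 1024)
        replica = replicate_vm_folder(vm, Path(dr))
        print(replica.name, sorted(p.name for p in replica.iterdir()))
```

The point of the sketch is the unit of work: one folder per VM, rather than a separate DR instance per partition with its own OS image, hostname, IP address, and boot LUN.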

I'm not saying partitioning is an inferior technology. Every technology has its advantages and disadvantages and addresses different use cases. Before I joined VMware, I was a Sun Microsystems SE for five years, so I'm aware of the benefits of UNIX partitioning. I'm just trying to dispel the myth that partitioning equals virtualization.

As both technologies evolve, the gap widens. As a result, managing a partition is different from managing a VM. Be careful when opting for a management solution that claims to manage both; you will probably end up with the lowest common denominator.