Containerization is the ability to build and package applications as shippable containers. Containers run in isolation in user mode on a shared kernel. The kernel is the core of the operating system; it accepts requests from user programs and translates them into instructions for the CPU and other hardware. With a shared kernel, containers do for the operating system what VMs do for physical machines: they isolate applications from the underlying OS.

Some of the key implementations of containers are as follows:

- The container concept dates back to chroot, which was introduced in Version 7 Unix in 1979 and added to BSD in 1982, bringing a basic form of process isolation. Chroot creates a virtual root directory for a process and its child processes; a process running under chroot cannot access anything outside that environment. Such modified environments are also called chroot jails.

- In 2000, a new isolation mechanism named jails was introduced for FreeBSD (a free Unix-like OS). It was developed by Poul-Henning Kamp at the request of Derrick T. Woolworth, owner of R&D Associates, Inc. Jails are isolated virtual instances of FreeBSD under a single kernel. Each jail has its own files, processes, users, and superuser account, and each jail is sealed off from the others.

- Solaris introduced its OS virtualization platform, called zones, in 2004 with Solaris 10. One or more applications can run within a zone in isolation. Zones can also communicate with each other using network APIs.

- In 2006, Google launched process containers, a technology designed for limiting, accounting, and isolating resource usage. It was later renamed to control groups (cgroups) and merged into the Linux kernel 2.6.24.

- In 2008, Linux gained its first out-of-the-box implementation of containers, called Linux Containers (LXC), a derivative of OpenVZ (OpenVZ had earlier developed an extension to Linux with the same features). LXC was implemented using cgroups and namespaces: cgroups allow management and prioritization of CPU, memory, block I/O, and network resources, while namespaces provide isolation.
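To make the two kernel features in the last item more concrete, here is a minimal Python sketch that lists the namespaces and cgroup memberships of the current process. It assumes a Linux host that exposes `/proc/<pid>/ns` and `/proc/<pid>/cgroup`; the function names are hypothetical, and on other systems the functions simply return empty results.

```python
import os
from pathlib import Path


def current_namespaces(pid="self"):
    """Return {namespace_type: identifier} read from /proc/<pid>/ns.

    Returns an empty dict where no Linux-style /proc is available.
    """
    ns_dir = Path("/proc") / str(pid) / "ns"
    namespaces = {}
    try:
        entries = sorted(ns_dir.iterdir())
    except OSError:
        return namespaces  # no /proc, no such process, or no permission
    for entry in entries:
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            namespaces[entry.name] = os.readlink(entry)
        except OSError:
            continue  # unreadable entry; skip it
    return namespaces


def current_cgroups(pid="self"):
    """Return the raw lines of /proc/<pid>/cgroup, or [] if unavailable."""
    try:
        return (Path("/proc") / str(pid) / "cgroup").read_text().splitlines()
    except OSError:
        return []


if __name__ == "__main__":
    for name, ident in current_namespaces().items():
        print(f"namespace {name:10} -> {ident}")
    for line in current_cgroups():
        print(f"cgroup    {line}")
```

Two processes that report the same identifier for a namespace type share that namespace; a process inside a container typically reports different identifiers from a process on the host.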

Solomon Hykes, CTO of dotCloud, a PaaS (Platform as a Service) company, launched Docker in 2013, which reintroduced containerization. Before Docker, containers were just isolated processes, and application portability across different environments was never guaranteed. Docker introduced application packaging and shipping with containers. Docker isolated applications from infrastructure, which allowed developers to write and test applications on a traditional desktop OS and then package and ship them to production servers with far less trouble.

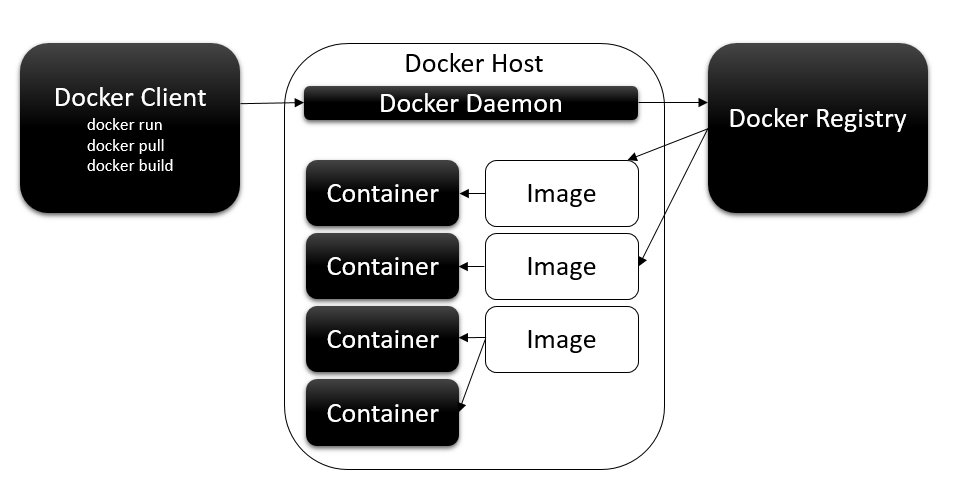

Docker uses a client-server architecture. The Docker daemon is the heart of the Docker platform and must be present on every host, because it acts as the server. The daemon is responsible for creating containers, managing their life cycle, creating and storing images, and many other key tasks around containers. Applications are designed and developed as containers on a developer's desktop and packaged as Docker images. Docker images are read-only templates that encapsulate the application and the components it depends on. Docker also provides a set of base images that contain a minimal OS as a starting point for application development. Docker containers are running instances of Docker images. Any number of containers can be created from an image within a host. Containers run directly on the Linux kernel in an isolated environment.
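The relationship between a read-only image and the containers created from it can be illustrated with a small Python model. This is only a conceptual sketch, not how Docker stores images: the names `make_image` and `create_container` are hypothetical, and a `ChainMap` stands in for a writable container layer stacked on top of a shared, immutable image.

```python
from collections import ChainMap
from types import MappingProxyType


def make_image(files):
    """An image: a read-only mapping of path -> content."""
    return MappingProxyType(dict(files))


def create_container(image):
    """A container: an empty writable layer stacked on top of the image.

    Reads fall through to the image; writes only touch the top layer.
    """
    return ChainMap({}, image)


base = make_image({
    "/etc/os-release": "thin base OS",
    "/app/main.py": "print('v1')",
})

# Any number of containers can be created from the same image.
web_1 = create_container(base)
web_2 = create_container(base)

# A change inside one container stays in that container's own layer.
web_1["/app/main.py"] = "print('patched')"
web_1["/tmp/cache"] = "scratch data"
```

After these writes, `web_2` and `base` still see the original `/app/main.py`, which is the property that lets many containers share one image safely.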

A Docker repository is the storage for Docker images. Docker provides both public and private repositories. Docker's public image repository is called Docker Hub; anyone can search it from the Docker CLI or a web browser, download an image, and customize it to the application's needs. Because public repositories are not well suited to enterprise scenarios, which demand more security, Docker also provides private repositories. Private repositories restrict access to your images to users within your enterprise; unlike Docker Hub, private repositories are not free. A Docker registry hosts repositories of custom user images: users can pull any publicly available image, or push their own images to store them and share them with other users. The Docker daemon also keeps a local store of images on each host. When asked for an image, the daemon first searches this local store and only then the public repository, Docker Hub. This mechanism avoids downloading an image from the public repository each time.
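The lookup order described above (local store first, then the public repository) can be sketched in a few lines of Python. This is a simplified model under stated assumptions: `resolve_image` is a hypothetical name, and plain dictionaries stand in for the host's local image store and for Docker Hub.

```python
def resolve_image(name, local_images, remote_registry):
    """Return (image, source), checking the local store before the registry.

    `local_images` and `remote_registry` are plain dicts standing in for the
    daemon's local image store and a registry such as Docker Hub.
    """
    if name in local_images:
        return local_images[name], "local"
    if name in remote_registry:
        image = remote_registry[name]
        # Keep a copy so the next request is served without a download.
        local_images[name] = image
        return image, "pulled"
    raise LookupError(f"image not found: {name}")


if __name__ == "__main__":
    hub = {"ubuntu": "ubuntu-image"}
    local = {}
    print(resolve_image("ubuntu", local, hub)[1])  # first request goes to the registry
    print(resolve_image("ubuntu", local, hub)[1])  # second request is served locally
```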

Docker uses several Linux features to deliver its functionality. For example, Docker uses namespaces to provide isolation, cgroups for resource management, and a union filesystem to make containers extremely lightweight and fast. The Docker client is the command-line interface, which is the only user interface for interacting with the Docker daemon. The Docker client and daemon can run on a single system that serves as both client and server; in that case, users use the client to communicate with the local daemon. Docker also provides an API that can be used to interact with remote Docker hosts. This can be seen in the following image:

The Docker development life cycle can be explained with the help of the following steps:

- Docker container development starts with downloading a base image from Docker Hub. Ubuntu and Fedora are among the images available from Docker Hub. An application can also be containerized after it has been developed; it is not necessary to start with Docker from the beginning.

- The image is customized to the application's requirements using a few Docker-specific instructions. This set of instructions is stored in a file called a Dockerfile. When the image is built, the Docker daemon reads the Dockerfile and prepares the final image.

- The image can then be published to a public/private repository.

- When users run the following command on any Docker host, the Docker daemon searches for the image on the local machine first and then on Docker Hub if it is not found locally. A Docker container is created if the image is found. Once the container is up and running, `[command]` is called on the running container:

$ docker run -i -t [imagename] [command]
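The life cycle steps above can be sketched as a short Python script that assembles the corresponding Docker CLI invocations. This is an illustration under stated assumptions: the Dockerfile contents, the image name `example/myapp`, the `app/` directory, and the function `lifecycle_commands` are all hypothetical. The script only builds and prints the commands; it does not run Docker.

```python
import shlex

# A hypothetical Dockerfile: start from a base image, add the application,
# and set the default command for containers created from the image.
DOCKERFILE = """\
FROM ubuntu
COPY app/ /opt/app/
CMD ["/opt/app/start.sh"]
"""


def lifecycle_commands(image_name, command, context_dir="."):
    """Return the docker CLI invocations for build, publish, and run, in order."""
    return [
        # Build the image from the Dockerfile found in context_dir.
        ["docker", "build", "-t", image_name, context_dir],
        # Publish the image to a public or private repository.
        ["docker", "push", image_name],
        # Create a container from the image and call the command in it.
        ["docker", "run", "-i", "-t", image_name, *shlex.split(command)],
    ]


if __name__ == "__main__":
    for argv in lifecycle_commands("example/myapp", "/bin/bash"):
        print(shlex.join(argv))
```

Keeping each command as an argument list rather than a single string means it could later be passed to `subprocess.run` without shell quoting problems.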

Docker made life easier for enterprises: applications can now be contained, shipped easily across hosts, and distributed with less friction between teams. Docker Hub today offers 450,000 images publicly available for reuse. A few of the best-known ones are the nginx web server, Ubuntu, Redis, swarm, and MySQL. Each one has been downloaded by more than 10 million users. Docker Engine has been downloaded 4 billion times so far, and the number is still growing. Docker has enormous community support, with 2,900 community contributors and 250 meetup groups. Docker is now available on Windows and Macintosh. Microsoft officially supports Docker through its public cloud platform, Azure.

eBay, a major e-commerce company, uses Docker to run its same-day delivery business. eBay uses Docker in its continuous integration (CI) process. Containerized applications can be moved seamlessly from a developer's laptop to test machines and then to production. Docker also ensures that the application runs the same way on developer machines and on production instances.

ING, a global financial services organization, struggled with the constant changes required by its monolithic applications built on legacy technologies. Implementing each change involved a laborious process of going through 68 documents to move the change to production. ING integrated Docker into its continuous delivery platform, which provided more automation for testing and deployment, optimized utilization, reduced hardware costs, and saved time.

Up to Docker release 0.9, containers were built using LXC, which is a Linux-centric technology. Docker 0.9 introduced a new driver called libcontainer alongside LXC. libcontainer is now a growing open source, openly governed, non-profit library. It is written in Go and creates containers using the Linux kernel API directly, without relying on tightly coupled components such as user-space tools or LXC. This means a lot to companies moving toward containerization. Docker is moving away from being a Linux-centric technology; in the future, we might also see Docker adopting the other platforms discussed previously, such as Solaris Zones and BSD jails. libcontainer is openly available, with contributions from major technology companies such as Google, Microsoft, Amazon, Red Hat, and VMware. Docker, at its core, remains responsible for developing the core runtime and container format. Public cloud vendors such as Azure and AWS support Docker on their cloud platforms as a first-class citizen.