We will create a Node application in the same way we created our first app in Chapter 1, Getting started with Puppeteer. We are going to create a folder called OurFirstTestProject (you will find this directory inside the Chapter2 directory mentioned in the Technical requirements section) and then execute npm init -y inside that folder:

> npm init -y

The response should be something like this:

{

"name": "OurFirstTestProject",

"version": "1.0.0",

"description": "",

"main": "index.js",

"scripts": {

"test": "echo \"Error: no test specified\" && exit 1"

},

"keywords": [],

"author": "",

"license": "ISC"

}

Now it's time to install the packages we are going to use:

- Puppeteer 7

- Mocha (any version)

- Chai (any version)

Let's run the following commands:

> npm install puppeteer@">=7.0.0 <8.0.0"

> npm install mocha

> npm install chai

For this first demo, we are going to use the site https://www.packtpub.com/ as a test case. Let's keep our test simple. We want to test that the page title says Packt | Programming Books, eBooks & Videos for Developers.

Important Note

The site we are using for this test might have changed over time. Before testing this code, go to https://www.packtpub.com/ and check whether the title is still the same. That's why, in the following chapters, we will be downloading sites locally, so we avoid these possible issues.

We mentioned that we would use describe to group our tests. But separating tests into different files will also help us to get our code organized. You can choose between having one or many describe functions per file. Let's create a file called home.tests.js. We are going to put all tests related to the home page there.

Although you can create the files anywhere you want, Mocha looks for tests in the test folder by default, so we will create the test folder and then create the home.tests.js file inside it.

We are going to have the following:

- home.tests.js with the home tests

- A describe function with the header tests

- An it function testing "Title should have Packt name"

- Another it function testing "Title should mention Books"

The structure should look like this:

const puppeteer = require('puppeteer');

const expect = require('chai').expect;

const should = require('chai').should();

describe('Home page header', () => {

it('Title should have Packt name', async() => {

});

it('Title should mention Books', async() => {

});

});

Let's unpack this code:

- We are importing Puppeteer in line 1.

- Lines 2 and 3 import the different assertion styles Chai provides. As you can see, expect is not being called with parentheses whereas should is. We don't need to know why now. But, just to be clear, that's not a mistake.

- How about Mocha? Are we missing Mocha? Well, Mocha is the test runner. It will be the executable we will call later in package.json. We don't need it in our code.

- It's interesting to see that both describe and it are just simple functions that take two arguments: a string and a function. Can you pass a function as an argument? Yes, you can!

- The functions we are passing to the it functions are async. We can't use the await keyword in functions that are not marked as async. Remember that Puppeteer relies a lot on async programming.
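To make the last two points concrete, here is a minimal sketch of how functions such as describe and it can receive other functions as arguments. The names miniDescribe, miniIt, and run are hypothetical and exist only for this illustration; Mocha's real functions do far more (hooks, timeouts, reporting). The title is hard-coded so that the sketch runs without a browser.

```javascript
// Hypothetical miniature versions of describe and it, for illustration only.
const registered = [];

function miniDescribe(title, body) {
  // body is an ordinary function received as an argument; calling it
  // lets the miniIt() calls inside register their tests.
  body();
}

function miniIt(title, testFn) {
  // Store the function instead of running it, so a runner can await it later.
  registered.push({ title, testFn });
}

miniDescribe('Home page header', () => {
  miniIt('Title should have Packt name', async () => {
    // await is allowed here because the callback is marked async.
    const title = await Promise.resolve('Packt | Programming Books');
    if (!title.includes('Packt')) throw new Error('Packt missing from title');
  });
});

// A very small runner: await each stored function and record the outcome.
async function run() {
  const results = [];
  for (const { title, testFn } of registered) {
    try {
      await testFn();
      results.push(`passed: ${title}`);
    } catch (err) {
      results.push(`failed: ${title}`);
    }
  }
  return results;
}

const finished = run();
finished.then((results) => console.log(results.join('\n')));
```

The important detail is that miniIt never calls the test itself. It only keeps a reference to the async function, which is what allows a runner to decide when to execute it and to await its result.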

Now we need to launch a browser and set up everything these tests need to work. We could do something like this:

it('Title should have Packt name', async() => {

const browser = await puppeteer.launch();

const page = await browser.newPage();

await page.goto('https://www.packtpub.com/');

// Our test code

await browser.close();

});

Tip

Don't try to learn the Puppeteer API now. We are going to explain how all of these commands work in Chapter 3, Navigating through a website.

This code will run perfectly. However, there are two things that could do with optimization:

- We would be repeating the same code over and over.

- If something fails in the middle of the test, the browser won't get closed, leaving lots of open browsers.
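The second point can be reproduced without a real browser. In this sketch, launchFakeBrowser and runTest are hypothetical stand-ins: the fake browser only remembers whether close() was called, and runTest has the same shape as the test above (do the work, then close).

```javascript
// Stand-in for a browser: it only records whether close() was called.
function launchFakeBrowser() {
  return {
    closed: false,
    async close() {
      this.closed = true;
    },
  };
}

// Same shape as the test above: run the test code, then close the browser.
async function runTest(browser, testBody) {
  await testBody(); // if this rejects...
  await browser.close(); // ...this line is never reached
}

async function demo() {
  const browser = launchFakeBrowser();
  try {
    // Simulate an assertion failing in the middle of the test.
    await runTest(browser, async () => {
      throw new Error('assertion failed');
    });
  } catch (err) {
    // A test runner would report the failure here.
  }
  return browser.closed;
}

const leaked = demo().then((closed) => {
  console.log(`browser closed: ${closed}`);
  return closed;
});
```

Because the failure happens before close() is reached, the fake browser is still marked as open at the end. With a real browser, that would be a leftover process for every failing test.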

To avoid these problems, we can use before, after, beforeEach, and afterEach. If we add these functions to our tests, this would be the execution order:

- before

- beforeEach

- it('Title should have Packt name')

- afterEach

- beforeEach

- it('Title should mention Books')

- afterEach

- after

It's not a hard rule, but we can do something like this in our case:

- before: Launch the browser.

- beforeEach: Open a page and navigate to the URL.

- Run the test.

- afterEach: Close the page.

- after: Close the browser.
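To see this execution order in action without launching a browser, here is a minimal sketch of a runner that only records which function is called and when. The runSuite function and the objects passed to it are hypothetical; they stand in for what a test runner does internally and are not Mocha's implementation.

```javascript
// Every call is recorded here so the order can be inspected afterwards.
const order = [];

// Stand-ins for the four hooks; each one only logs its own name.
const hooks = {
  before: async () => { order.push('before'); },
  beforeEach: async () => { order.push('beforeEach'); },
  afterEach: async () => { order.push('afterEach'); },
  after: async () => { order.push('after'); },
};

// Stand-ins for the two tests.
const tests = [
  async () => { order.push("it('Title should have Packt name')"); },
  async () => { order.push("it('Title should mention Books')"); },
];

// Hypothetical mini runner that calls hooks around each test.
async function runSuite(hooks, tests) {
  await hooks.before(); // once, before the first test
  for (const test of tests) {
    await hooks.beforeEach(); // before every test
    try {
      await test();
    } catch (err) {
      order.push('test failed'); // a failure must not skip the cleanup
    } finally {
      await hooks.afterEach(); // after every test, pass or fail
    }
  }
  await hooks.after(); // once, after the last test
}

const done = runSuite(hooks, tests).then(() => {
  console.log(order.join('\n'));
  return order;
});
```

The try/finally block is the part that addresses the cleanup problem: the afterEach step runs whether the test passes or throws.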

These hooks, which is what Mocha calls these functions, would look like this:

let browser;

let page;

before(async () => {

browser = await puppeteer.launch();

});

beforeEach(async () => {

page = await browser.newPage();

await page.goto('https://www.packtpub.com/');

});

afterEach(async () => {

await page.close();

});

after(async () => {

await browser.close();

});

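One thing to mention here is that we could do what's called fire and forget when closing the page or the browser. Fire and forget means that we start page.close() or browser.close() but don't wait for the result. Note the braces in the following hooks: a hook written as () => page.close() would return the promise, and Mocha waits for a promise returned from a hook. So, we could do this:

afterEach(() => { page.close(); });

after(() => { browser.close(); });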

That's not something I love doing because if something fails, you would like to know where and why. But as this is just cleanup code for a test, not production code, we can afford that risk.
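The difference between awaiting a call and firing and forgetting it is easier to see with a log of events. This is a minimal sketch under stated assumptions: fakeClose is a hypothetical stand-in for page.close() that finishes after 10 ms, and the two hook functions are plain functions rather than real Mocha hooks.

```javascript
const events = [];

// Stand-in for page.close(): resolves a little later, like a real browser call.
function fakeClose() {
  return new Promise((resolve) => {
    setTimeout(() => {
      events.push('close finished');
      resolve();
    }, 10);
  });
}

// Awaited: the hook is not done until the close has finished.
async function awaitedHook() {
  await fakeClose();
  events.push('awaited hook done');
}

// Fire and forget: the call is started, but nothing is returned or awaited.
function fireAndForgetHook() {
  fakeClose();
  events.push('fire-and-forget hook done');
}

async function demo() {
  await awaitedHook();
  fireAndForgetHook();
  // Give the forgotten close time to finish so the log is complete.
  await new Promise((resolve) => setTimeout(resolve, 50));
  return events;
}

const logged = demo().then((result) => {
  console.log(result.join('\n'));
  return result;
});
```

In the awaited case, the close finishes before the hook reports that it is done. In the fire-and-forget case, the hook is done first and the close finishes on its own later, which is also why a failure in that close would not be tied to any hook.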

Now our test has a browser opened and a page with the URL we want to test ready. We just need to test the title:

it('Title should have Packt name', async() => {

const title = await page.title();

title.should.contain('Packt');

});

it('Title should mention Books', async() => {

expect((await page.title())).to.contain('Books');

});

I used two different styles here.

In the first case, I'm assigning the result of the title async function to a variable, and then using should.contain to check whether the title contains the word "Packt". In the second case, I just evaluated (await page.title()) inline. I added some extra parentheses there for clarification. You won't see them in the final example.

The second difference is that in the first case, I'm using the should style, whereas in the second case, I'm using the expect style. The result will be the same. It's just about which style you feel more comfortable with or feels more natural to you. There is even a third style: assert.

We have everything we need to run our tests. Remember how npm init created a package.json file for us? It's time to use it. Let's set the test command. You should have something like this:

"scripts": {

"test": "echo \"Error: no test specified\" && exit 1"

},

We need to tell npm to run Mocha when we execute npm test:

"scripts": {

"test": "mocha"

},

Time to run our tests! Let's run npm test in the terminal:

> npm test

And we should have our first error:

1) Home page header

"before each" hook for "Title should have Packt name":

Error: Timeout of 2000ms exceeded. For async tests and hooks, ensure "done()" is called; if returning a Promise, ensure it resolves.

That's bad, but not that bad. By default, Mocha fails any test or hook that takes longer than 2,000 ms. That sounds OK for an isolated unit test, but UI tests might take longer than 2 seconds. That doesn't mean that UI tests shouldn't have a timeout. Speed is a feature, so we should be able to enforce some expected timeout. We can change the limit by adding the --timeout command-line argument to the test script we set up in the package.json file. I think 30 seconds could be a reasonable timeout. As Mocha expects the value in milliseconds, it should be 30000. Let's make that change in our package.json file:

"scripts": {

"test": "mocha --timeout 30000"

},
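To make the idea of a timeout concrete, here is a minimal sketch of how a runner could enforce one using Promise.race. The names withTimeout and slowHook are hypothetical and invented for this example, and this is not how Mocha is implemented; it only mirrors the behavior we just saw, with much smaller numbers so that it runs quickly.

```javascript
// Hypothetical helper: rejects if work() takes longer than ms milliseconds.
function withTimeout(work, ms) {
  let timer;
  const limit = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Timeout of ${ms}ms exceeded.`)), ms);
  });
  // Whichever promise settles first decides the outcome.
  return Promise.race([work(), limit]).finally(() => clearTimeout(timer));
}

// Simulated hook that needs 100 ms, for example a slow page load.
const slowHook = () =>
  new Promise((resolve) => setTimeout(() => resolve('loaded'), 100));

async function demo() {
  const outcomes = [];
  // A 20 ms limit is too tight for a 100 ms hook, so the error message is kept.
  outcomes.push(await withTimeout(slowHook, 20).catch((err) => err.message));
  // A 500 ms limit leaves plenty of room, so the hook's own result is kept.
  outcomes.push(await withTimeout(slowHook, 500));
  return outcomes;
}

const outcome = demo().then((outcomes) => {
  console.log(outcomes.join('\n'));
  return outcomes;
});
```

The same hook fails under the tight limit and succeeds under the generous one, which is the situation our beforeEach hook was in with the 2,000 ms default.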

Tip

The command-line argument is not the only way to set up the timeout. You can call this.timeout(30000) inside the describe function (which then has to be a regular function rather than an arrow function, so that this refers to Mocha's context) or configure the timeout using a config file (https://mochajs.org/#configuring-mocha-nodejs).

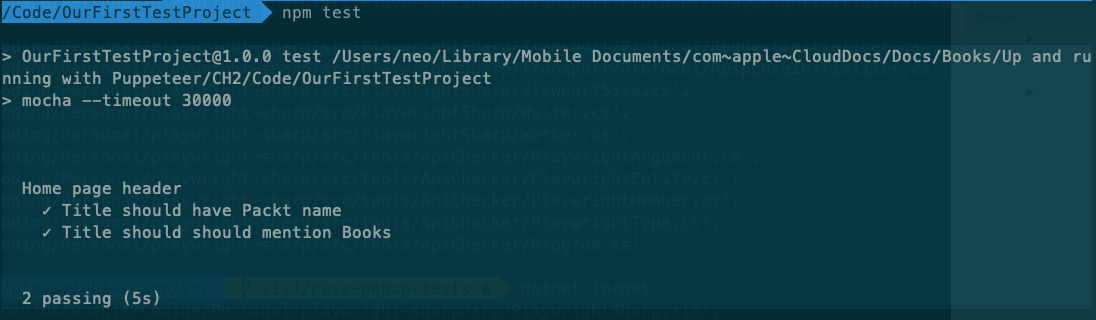

Once we set up the timeout, we can try our tests again by running npm test:

Test Result

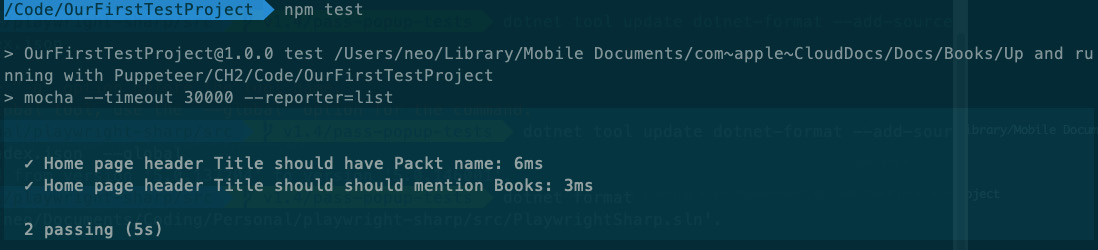

Mocha not only ran our tests but also printed a pretty decent report. It shows all the tests Mocha ran, the final result, and the elapsed time. This is where many test runners offer different options. For instance, Mocha has a --reporter flag. If you go to https://mochajs.org/, you will see all the available reporters. We could use the list reporter, which shows the elapsed time of each test. We can add it to our package.json file:

"scripts": {

"test": "mocha --timeout 30000 --reporter=list"

},

With this change, we can get a better report:

Test Result using the list reporter

This project looks fine. If you had only a few tests, this would be enough. But if we are going to have lots of tests using many pages, this code won't scale. We need to organize our code so that we can be more productive and reuse more code.
