Since you are reading this, you likely have a reason to consider unit testing as an additional development skill to learn. Whether you are motivated by personal interest or driven by external stimulus, you probably wonder if it will be worth the effort. But properly applied unit testing is perhaps the most important technique the agile world has to offer. A well-written test suite is usually half the battle for a successful development process, and the following section will explain why.

The most obvious reason to write unit tests is to build up a safety net to guard your software from regression. There are various grounds for changing the existing code, whether it be to fix a bug or to add supplemental functionality. But understanding every aspect of the code you are about to change is difficult to achieve. So, a new bug sneaks in easily. And it might take a while before it gets noticed.

Think of a method returning some kind of sorted list that works as expected. Due to additional requirements, such as filtering the result, a developer changes the existing code. Inadvertently, these changes introduce a bug that only surfaces under rare circumstances. Hence, simple sanity tests may not reveal any problems and the developer feels confident to check in the new version. If the company is lucky, the problem will be detected by the quality assurance team, but chances are that it slips through to the customer. Boom!

This is because it's hardly possible to check all corner cases of nontrivial software from a user's point of view, let alone manually. Besides an annoyed customer, this leads to a costly turnaround consisting of, for example, filing a bug report, reproducing and debugging the problem, scheduling it for repair, implementing the fix, testing, delivering, and, finally, deploying the corrected version. But who will guarantee that the new version won't introduce another regression?

Sounds scary? It is! I have seen teams that were barely able to deliver new functionality because they were about to drown in a flood of bugs. And the hot fixes produced to resolve blocking situations on the customer side introduced additional regressions all the time. Sounds familiar? Then it might be time for a change.

Good unit tests can be written with little development overhead and verify, in particular, all the corner case behavior of a component. Thus, the mistake of the developer described above would have been caught by a test, at the earliest possible point in time and at the lowest possible price. But humans make mistakes: what if a corner case is overlooked and a bug turns up? Even then, you are better off, because fixing the issue sustainably simply means writing an additional test that reproduces the problem with a failing verification. Change the code until all tests pass and you get rid of the fault for good.
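The idea of pinning a bug down with a reproducing test can be sketched as follows. This is a minimal illustration, not code from a real project: the `NameCatalog` class, its method, and the assumed overlooked corner case (a `null` entry in the input) are all hypothetical. To stay free of dependencies, the sketch uses a plain static method that throws an `AssertionError`; in a real project, it would be a JUnit test method using the framework's assertions.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical component: returns all names starting with a prefix, sorted.
class NameCatalog {

    static List<String> sortedNamesStartingWith(List<String> names, String prefix) {
        List<String> result = new ArrayList<>();
        for (String name : names) {
            // Assumed bug fix: without the null check, a null entry would
            // cause a NullPointerException in the filter added later.
            if (name != null && name.startsWith(prefix)) {
                result.add(name);
            }
        }
        Collections.sort(result);
        return result;
    }
}

// Stand-in for a JUnit test class (plain Java, no framework required).
class NameCatalogTest {

    // Regression test: written first to reproduce the reported problem.
    // It fails against the buggy version and passes once the fix is in place.
    static void sortedNamesStartingWithIgnoresNullEntries() {
        List<String> names = Arrays.asList("Bob", null, "Alice", "Ben");

        List<String> actual = NameCatalog.sortedNamesStartingWith(names, "B");

        List<String> expected = Arrays.asList("Ben", "Bob");
        if (!expected.equals(actual)) {
            throw new AssertionError("expected " + expected + " but was " + actual);
        }
    }
}
```

Because the test stays in the suite, any later change that reintroduces the same fault makes it fail again right away.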

The influence a consistent testing approach has on code quality is less apparent. Once you have a safety net in place, changing the existing code to make it more readable, and hence easier to enhance, isn't risky anymore. If you introduce a regression, your tests will tell you immediately. So, the code morphs from a "never touch a running system" shrine into a lively, change-embracing place.

Mature test-first practices will implicitly improve your code with respect to most of the common quality metrics. Testing first is geared to produce small, coherent, and loosely coupled components, combined with high coverage and verification of each component's behavior. The production of clean code is an inherent step of the test-driven development mantra explained further ahead.

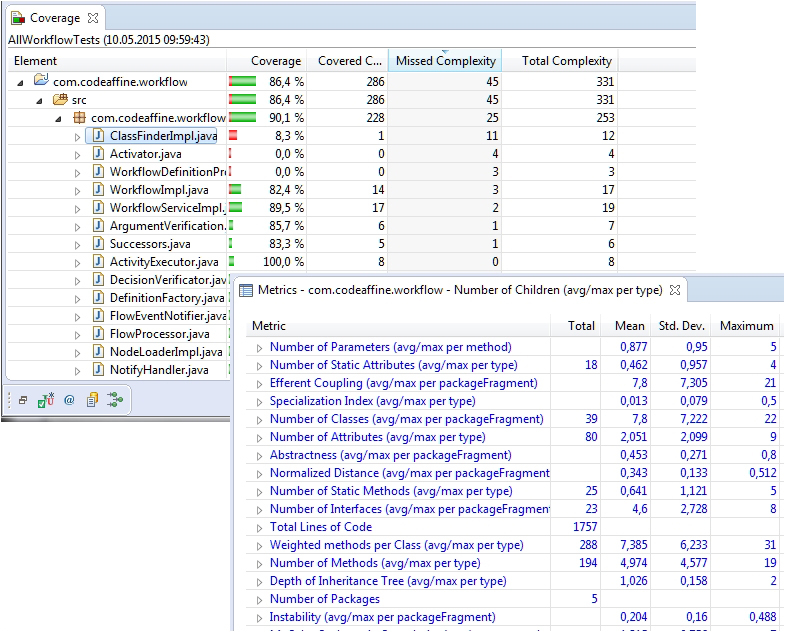

The image above shows two screenshots of measurements taken from a small, real-world project of the Xiliary GitHub repository (https://github.com/fappel/xiliary). The project was developed completely driven by tests, and we paid no attention to its metrics before writing this chapter. Not very surprisingly, though, the numbers look quite okay.

Note

Don't worry if you're not familiar with the meaning of the metrics. All you need to know at the moment is that they would appear in red if exceeding the tool's default thresholds.

So, in case you wonder about the three red spots with low coverage numbers, note that two of those classes are covered by particular integration tests as they are adapters to third-party functionality (a more detailed explanation of integration tests follows in the upcoming Understanding the nature of a unit test section). The remaining class is at an experimental or prototypical stage and will be replaced in the future.

Note

Note that we'll deepen our knowledge of code coverage in Chapter 2, Writing Well-structured Tests, and in Chapter 8, Running Tests Automatically within a CI Build.

Metrics of a TDD project

Programs built on good code quality stand out from systems that merely run, because they are easier to maintain and usually impress with a higher feature evolution rate.

At first glance, the math seems to be simple. Writing additional testing code means more work, which consumes more time, which leads to lower development speed. Right? But would you like to drive a car whose individual parts did not undergo thorough quality assurance? And what would be gained if the car had to spend most of its lifetime in the service shop rather than on the road, let alone the possibility of a life-threatening accident?

The initial production speed might be high, but the overall outcome would be poor and might ruin the car manufacturer in the end. It is not that much different with the development of nontrivial software systems. We have already elaborated on the costs of bugs that manage to sneak through to the customer. So, calculating development speed like that is a naïve assessment.

As a developer, you stand between two contradictory goals: on the one hand, you have to be quick on the draw to meet your deadlines. On the other hand, you must not commit too many sins if you also want to meet subsequent deadlines. The term sin refers to work that should be done before a particular job can be considered complete or proper. This is also known as technical debt [TECDEP]. And here comes the catch. Keeping the balance often does not work out, and once the technical debt gets too high, the system collapses. From that point on, you won't meet any deadlines again.

So, yes, writing tests causes an overhead. But if done well, it ensures that subsequent deadlines are not endangered by technical debt. With testing, the development pace might initially be slightly lower, but it won't decrease over time and is, therefore, higher when looking at the overall picture.

By the way, if you know your tools and techniques, the overhead isn't that much at all. At least, I am usually not hired for being particularly slow. When you think of it, running a component's unit tests is done in the blink of an eye. On the flip side, checking its behavior manually involves launching the application, clicking to the point where your code actually gets involved, and then clicking and typing your way through the scenarios you consider important. Does the latter sound like an efficient modus operandi?

A good test suite can be an additional source of information about what your system components are really capable of, and one that, unlike design docs, does not become outdated. Of course, this is a kind of low-level specification that only a developer is apt to write. But if done well, a test's name tells you about the functionality under test with respect to specific initial conditions, and the test's verifications tell you about the expected outcome produced by the execution of this functionality.
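As a rough illustration of tests that read like a low-level specification, consider the following sketch. The `Account` class and the test names are hypothetical and chosen only for this example. Again, plain static methods stand in for what would be JUnit test methods in a real project.

```java
// Hypothetical component used only for illustration.
class Account {

    private int balance;

    Account(int openingBalance) {
        this.balance = openingBalance;
    }

    int getBalance() {
        return balance;
    }

    void withdraw(int amount) {
        if (amount > balance) {
            throw new IllegalStateException("Insufficient funds");
        }
        balance -= amount;
    }
}

// Each name states the functionality under test and the initial condition;
// the checks at the end of each method state the expected outcome.
class AccountTest {

    static void withdrawReducesBalanceIfFundsSuffice() {
        Account account = new Account(100);

        account.withdraw(30);

        check(account.getBalance() == 70, "balance should be 70");
    }

    static void withdrawIsRejectedIfAmountExceedsBalance() {
        Account account = new Account(100);

        boolean rejected = false;
        try {
            account.withdraw(101);
        } catch (IllegalStateException expected) {
            rejected = true;
        }

        check(rejected, "withdrawal should have been rejected");
        check(account.getBalance() == 100, "balance should be unchanged");
    }

    // Minimal replacement for a testing framework's assertion methods.
    private static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }
}
```

A developer who reads only the two method names already learns what `withdraw` is supposed to do in both situations, without studying the implementation.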

This way, a developer who is about to change an existing component will always have a chance to check the accompanying tests to understand what the component is really all about. So, the truth is in the tests! But this underscores that tests have to be treated as first-class citizens and have to be written and adjusted with care. A poorly written test might confuse a programmer and slow down progress significantly.

Everybody likes to be on a winning team. But once you are stuck with a bug trail longer than the Great Wall of China and a technical debt higher than Mount Everest, fear creeps in. At that point, the implementation of new features can cause avalanches of collateral damage, and developers become reluctant to change anything. What follows are debates about consolidation phases or even about rewriting large parts of the system from scratch before anyone dares to think about new functionality. Of course, this is an economic horror scenario from the management's point of view, and that's how the development team members' confidence and courage say goodbye.

Again, this does not happen as easily with a team that has built its software upon components backed up by well-written unit tests. We learned earlier why unit tested systems have neither many bugs nor too much technical debt. Introducing additional functionality is possible without expecting too much collateral damage, since the existing tests guard against regressions. Combined with module-spanning integration tests, you get a rock-solid foundation that developers learn to trust.

I have seen more than once how nontrivial systems were restructured without doing any harm to dependent components. All that was necessary was to take care not to break existing tests and to cover changed code passages with new or adjusted tests. So, if you are unlucky enough to be more or less familiar with some of the scenarios described in this section, you should read on and learn how to get confidence and courage back into your team.