Once the feature sets have been finalized, as they were in the last section, the next step is to estimate the parameters of the selected models. We can use either MLlib or R for this, and we need to arrange the distributed computing.

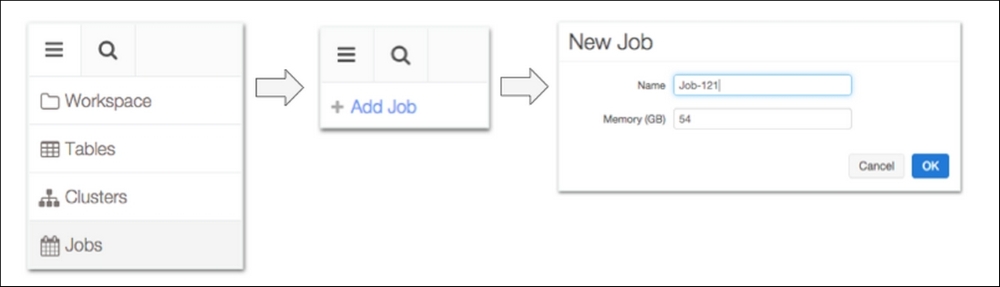

To simplify, we can utilize Databricks' Job feature. Specifically, within the Databricks environment, we can go to Jobs and then create jobs, as shown in the following image:

Then, users can select the notebooks to run, specify clusters, and schedule jobs. Once a job is scheduled, users can also monitor its runs and then collect the results.
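The same three choices (notebook, cluster, schedule) can also be written down as a job definition rather than clicked through in the UI. The following is only a sketch: the field names follow the Databricks Jobs REST API as we understand it and should be checked against the current documentation, while the job name, notebook path, Spark version, and node type are hypothetical placeholders. The code only builds and prints the definition; it does not contact any workspace.

```python
import json


def build_job_spec(notebook_path, cron, spark_version, node_type_id, num_workers=2):
    """Return a job definition for one scheduled notebook task."""
    return {
        "name": "estimate-model-parameters",  # hypothetical job name
        "tasks": [
            {
                "task_key": "fit_models",
                # The notebook to run
                "notebook_task": {"notebook_path": notebook_path},
                # The cluster to run it on
                "new_cluster": {
                    "spark_version": spark_version,
                    "node_type_id": node_type_id,
                    "num_workers": num_workers,
                },
            }
        ],
        # When to run it (Quartz cron syntax)
        "schedule": {
            "quartz_cron_expression": cron,
            "timezone_id": "UTC",
        },
    }


spec = build_job_spec(
    notebook_path="/Users/someone@example.com/final_models",  # hypothetical path
    cron="0 0 2 * * ?",  # every day at 02:00
    spark_version="<spark-version>",  # placeholder, pick one your workspace offers
    node_type_id="<node-type>",  # placeholder, depends on the cloud provider
)
print(json.dumps(spec, indent=2))
```

Keeping the definition in code makes it easy to review and to reuse for each of the final notebooks by changing only the notebook path.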

In the section Methods for a holistic view, we prepared some code for each of the three selected models. Now, we need to modify that code to use the final set of features selected in the last section so as to create our final notebooks.
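One way to make this substitution less error-prone is to keep the final feature names in a single list and generate the model formula from it. The sketch below assumes an R-style formula is what the model code consumes; the column names `target` and `feature_1` to `feature_17` are hypothetical stand-ins for the actual dependent variable and selected features.

```python
def build_formula(target, features):
    """Build an R-style formula string such as 'y ~ x1 + x2'."""
    if not features:
        raise ValueError("at least one feature is required")
    return f"{target} ~ " + " + ".join(features)


# Hypothetical names; replace with the features actually selected.
final_features = [f"feature_{i}" for i in range(1, 18)]

formula = build_formula("target", final_features)
print(formula)
```

A string of this form can be pasted into R modeling calls, and MLlib's `RFormula` transformer accepts the same `~` and `+` syntax, so one list of names can serve both routes.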

In other words, we have one dependent variable prepared and 17 features selected through our PCA and feature selection work. Therefore, we need to insert all of them into the code developed in section II to finalize...