Let's take a look at the various components of Mahout.

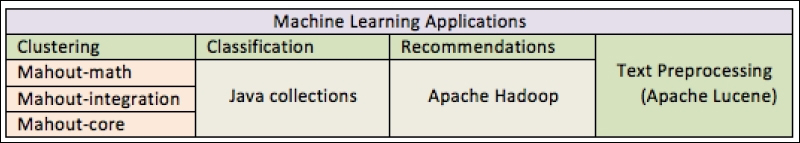

The preceding figure shows the high-level design of a Mahout implementation. Machine learning applications access the Mahout API, which supports different machine learning techniques such as clustering, classification, and recommendations.

If the application needs to preprocess text input (for example, with stop-word removal and stemming), it can do so with Apache Lucene. Apache Hadoop provides distributed data processing and storage, which makes the processing scalable.
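To make the two preprocessing steps concrete, here is a minimal Python sketch of what they do to a piece of text. It does not use Lucene. The stop-word list, the suffix list, and the functions `naive_stem` and `preprocess` are hypothetical toys: the stemmer is a crude suffix stripper, not Lucene's analyzers and not the Porter algorithm.

```python
import re

# Hypothetical toy stop-word list; a real pipeline would rely on Lucene's analyzers.
STOP_WORDS = {"a", "an", "the", "and", "or", "of", "to", "is", "are", "in", "on", "for"}

# Suffixes tried in order by the naive stemmer (an assumption, NOT Porter stemming).
SUFFIXES = ("ing", "ed", "es", "s")


def naive_stem(token: str) -> str:
    """Strip the first matching suffix, keeping a stem of at least three characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text: str) -> list[str]:
    """Lowercase, split into alphabetic tokens, drop stop words, then stem the rest."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [naive_stem(t) for t in tokens if t not in STOP_WORDS]


if __name__ == "__main__":
    print(preprocess("The clusters are forming in the documents"))
```

For the sample sentence, the stop words "the", "are" and "in" are dropped and the remaining tokens are reduced to "cluster", "form" and "document", so different inflections of the same word map to one feature before any clustering or classification runs.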

Performance is further optimized through Java Collections and the Mahout-Math library. The Mahout-integration library contains utilities, for example for displaying data and results.

MapReduce is a programming paradigm for parallel processing. When it is applied to machine learning, each algorithm is assigned its own MapReduce engine, and each engine has a single master.

Input is provided as Hadoop sequence files, which consist of binary key-value pairs. The master node manages the mappers and reducers. Once the input has been converted to sequence files and sent to the master, the master splits the data and assigns each split to a mapper running on another node. It then collects the intermediate results from the mappers and sends them to the corresponding reducers for further processing. Finally, the reducers generate the final output.
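The master/mapper/reducer flow described above can be simulated in a single process. The following is a minimal Python sketch, not Hadoop or Mahout code: in-memory `(key, value)` tuples stand in for sequence-file records, the "nodes" are just loops, and word count is used only as an illustrative job. All function names (`split_input`, `word_count_map`, `word_count_reduce`, `run_job`) are hypothetical. The shuffle step routes keys to reducers by hashing, which mirrors the idea behind Hadoop's default hash partitioner.

```python
from collections import defaultdict
from typing import Callable, Iterable

# One (key, value) record, standing in for an entry of a sequence file.
Record = tuple[str, str]


def split_input(records: list[Record], num_mappers: int) -> list[list[Record]]:
    """Master: partition the input records into one split per mapper."""
    return [records[i::num_mappers] for i in range(num_mappers)]


def word_count_map(key: str, value: str) -> Iterable[tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in the record's value."""
    for word in value.lower().split():
        yield word, 1


def word_count_reduce(key: str, values: list[int]) -> tuple[str, int]:
    """Reducer: sum the partial counts collected for one word."""
    return key, sum(values)


def run_job(
    records: list[Record],
    num_mappers: int,
    num_reducers: int,
    mapper: Callable[[str, str], Iterable[tuple[str, int]]],
    reducer: Callable[[str, list[int]], tuple[str, int]],
) -> dict[str, int]:
    # 1. The master splits the input and hands one split to each mapper.
    splits = split_input(records, num_mappers)
    # 2. Each mapper turns its split into intermediate (key, value) pairs.
    intermediate = [pair for split in splits for k, v in split for pair in mapper(k, v)]
    # 3. Shuffle: the same key always hashes to the same reducer partition.
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for k, v in intermediate:
        partitions[hash(k) % num_reducers][k].append(v)
    # 4. Each reducer aggregates the values grouped under each of its keys.
    output: dict[str, int] = {}
    for partition in partitions:
        for k, values in partition.items():
            out_key, out_value = reducer(k, values)
            output[out_key] = out_value
    return output


if __name__ == "__main__":
    docs = [("doc1", "big data big models"), ("doc2", "data mining"), ("doc3", "big clusters")]
    print(run_job(docs, num_mappers=2, num_reducers=2,
                  mapper=word_count_map, reducer=word_count_reduce))
```

Because every occurrence of a word is routed to the same reducer, each reducer sees the complete list of partial counts for its keys, so "big" ends up with a total of 3 even though it came from two different mappers' splits. A real cluster runs steps 2 and 4 in parallel on separate nodes; this sketch only shows the data movement.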