ML is a subfield of Artificial Intelligence (AI), a branch of computer science born in the 1950s with the goal of getting computers to think, or at least to provide a level of automated intelligence similar to that of humans.

Early success in AI was achieved by using an extensive set of defined rules, an approach known as symbolic AI, which allowed expert decision making to be mimicked by computers. This approach worked well for many domains but had a major shortcoming: in order to create an expert system, you needed an expert. On top of that, their expertise had to be digitized somehow, which normally required explicit programming.

ML provides an alternative: instead of relying on handcrafted rules, it learns from examples and experience. It also differs from classical programming in that it is probabilistic rather than deterministic. That is, it handles fuzziness and uncertainty much better than its counterpart, which will likely fail when given an ambiguous input that wasn't explicitly identified and handled.

To better highlight the differences and the value of ML, I am going to borrow an example used by Google engineer Josh Gordon in an introductory video on ML.

Suppose you were given the task of classifying apples and oranges. Let's first approach this using what we will call classical programming:

Our input is an array of pixels for each image, and for each input we need to explicitly define rules that can distinguish an apple from an orange. Using the preceding examples, you can solve this by simply counting the number of orange and green pixels. Images with a higher ratio of green pixels would be classified as apples, while those with a higher ratio of orange pixels would be classified as oranges. This works well with these examples but breaks if our input becomes more complex.
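To make the color-counting rule concrete, here is a minimal sketch in Swift. It assumes the image has already been decoded into an array of RGB values. The `Pixel` struct, the `Fruit` enum, and the thresholds inside `isGreen` and `isOrange` are hypothetical choices made for illustration; they are not part of the original example.

```swift
// A pixel as red, green, and blue components in 0...255.
// This plain struct stands in for pixel data read from an image (an assumption).
struct Pixel {
    let r: Int
    let g: Int
    let b: Int
}

enum Fruit {
    case apple
    case orange
}

// Hand-picked thresholds, chosen only for illustration (not tuned values).
func isGreen(_ p: Pixel) -> Bool {
    return p.g > 120 && p.g > p.r + 30 && p.g > p.b + 30
}

func isOrange(_ p: Pixel) -> Bool {
    return p.r > 180 && p.g > 80 && p.g < 180 && p.b < 100
}

// Classify by comparing how many pixels look green versus orange.
func classifyByColor(_ pixels: [Pixel]) -> Fruit {
    let greenCount = pixels.filter(isGreen).count
    let orangeCount = pixels.filter(isOrange).count
    return greenCount > orangeCount ? .apple : .orange
}

// Synthetic single-color "images" used purely as a demonstration.
let greenApple = Array(repeating: Pixel(r: 90, g: 200, b: 60), count: 100)
let orangeFruit = Array(repeating: Pixel(r: 240, g: 140, b: 30), count: 100)
let redApple = Array(repeating: Pixel(r: 200, g: 30, b: 40), count: 100)

print(classifyByColor(greenApple))  // apple
print(classifyByColor(orangeFruit)) // orange
print(classifyByColor(redApple))    // orange (misclassified)
```

The red apple contains neither green nor orange pixels under these thresholds, so the rule falls through to the orange branch. That is the kind of brittleness a handcrafted rule runs into as soon as the inputs vary.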

The introduction of new images means our simple color-counting function can no longer sufficiently differentiate our apples from our oranges, or even reliably classify apples. We are required to reimplement the function to handle the new nuances introduced. As a result, our function grows in complexity, becomes more tightly coupled to its inputs, and is less likely to generalize to other inputs. Our function might resemble something like the following:

func countColors(_ image:UIImage) -> [(color:UIColor, count:Int)]{

// lots of code

}

func detectEdges(_ image:UIImage) -> [(x1:Int, y1:Int, x2:Int, y2:Int)]

{

// lots of code

}

func analyseTexture(_ image:UIImage) -> [String]

{

// lots of code

}

func fitBoundingBox(_ image:UIImage) -> [(x:Int, y:Int, w:Int, h:Int)]

{

// lots of code

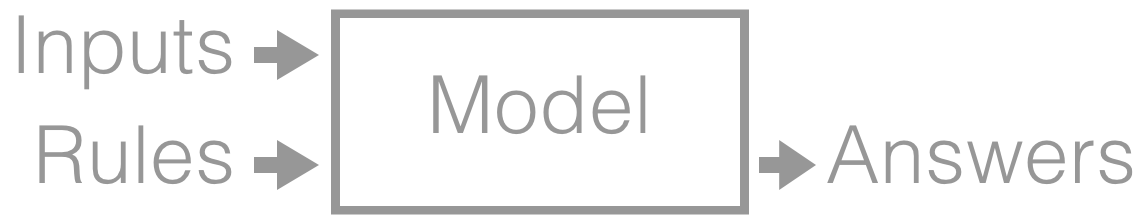

}This function can be considered our model, which models the relationship of the inputs with respect to their labels (apple or orange), as illustrated in the following diagram:

The alternative, and the approach we're interested in, is to have this model created automatically from examples; this, in essence, is what ML is all about. It provides us with an effective tool to model complex tasks that would otherwise be nearly impossible to define by rules.

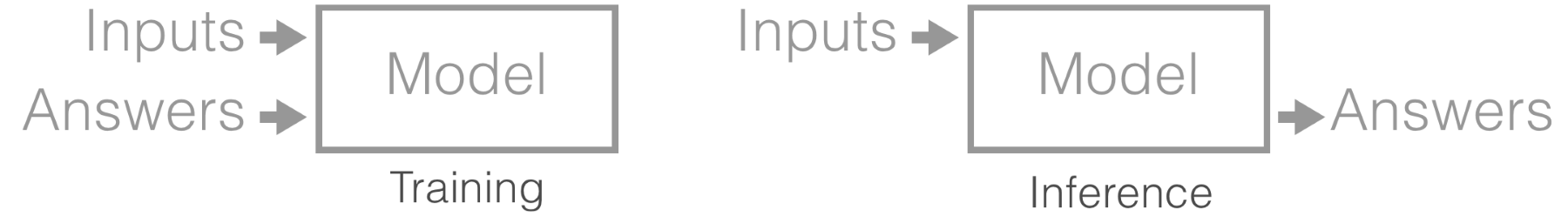

The creation phase of the ML model is called training and is determined by the type of ML algorithm selected and data being fed. Once the model is trained, that is, once it has learned, we can use it to make inferences from the data, as illustrated in the following diagram:

The example we have presented here, classifying oranges and apples, is a specific type of ML algorithm called a classifier, or, more specifically, a multi-class classifier. The model was trained through supervision; that is, we fed in examples of input with their associated labels (or classes). It is useful to understand the types of ML algorithms that exist along with the types of training, which is the topic of the next section.
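As a rough illustration of supervised training followed by inference, the sketch below learns from labeled examples instead of handcrafted rules. It uses a nearest-centroid classifier, picked here only because it is short; it is not the algorithm used in the original example. The `LabeledExample` and `NearestCentroidClassifier` types, the two-value feature encoding (ratio of green pixels, ratio of orange pixels), and the sample values are all assumptions made for illustration.

```swift
// One training example: a feature vector plus its label.
// Features are assumed to be [greenRatio, orangeRatio] for an image.
struct LabeledExample {
    let features: [Double]
    let label: String
}

struct NearestCentroidClassifier {
    // Learned state: one mean feature vector (centroid) per label.
    var centroids: [String: [Double]] = [:]

    // Training: average the feature vectors that share a label.
    mutating func train(on examples: [LabeledExample]) {
        var sums: [String: [Double]] = [:]
        var counts: [String: Int] = [:]
        for example in examples {
            let current = sums[example.label]
                ?? Array(repeating: 0.0, count: example.features.count)
            sums[example.label] = zip(current, example.features).map { $0 + $1 }
            counts[example.label, default: 0] += 1
        }
        var learned: [String: [Double]] = [:]
        for (label, sum) in sums {
            let n = Double(counts[label] ?? 1)
            learned[label] = sum.map { $0 / n }
        }
        centroids = learned
    }

    // Inference: return the label whose centroid is closest to the input,
    // or nil if the model has not been trained.
    func predict(_ features: [Double]) -> String? {
        func squaredDistance(_ a: [Double], _ b: [Double]) -> Double {
            return zip(a, b).map { ($0 - $1) * ($0 - $1) }.reduce(0, +)
        }
        return centroids.min { lhs, rhs in
            squaredDistance(lhs.value, features) < squaredDistance(rhs.value, features)
        }?.key
    }
}

// Made-up training data: inputs paired with their labels (supervision).
let trainingData = [
    LabeledExample(features: [0.80, 0.05], label: "apple"),
    LabeledExample(features: [0.70, 0.10], label: "apple"),
    LabeledExample(features: [0.05, 0.85], label: "orange"),
    LabeledExample(features: [0.10, 0.75], label: "orange"),
]

var model = NearestCentroidClassifier()
model.train(on: trainingData)                    // training
print(model.predict([0.65, 0.20]) ?? "unknown")  // inference on an unseen input
```

No rule about green or orange pixels is written by hand here. The decision boundary comes entirely from the labeled examples, so adapting the model to new inputs means supplying more examples rather than rewriting the function.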