CUDA is a heterogeneous programming model that includes provisions for both CPU and GPU. The CUDA C/C++ programming interface consists of C language extensions so that you can target portions of source code for parallel execution on the device (GPU). It is based on industry-standard C/C++ and provides a library of C functions that can be executed on the host (CPU) so that it can interact with the device.

A CUDA program involves two processors that work with each other. The CPU is referred to as the host, while the GPU is referred to as the device. The host is responsible for launching functions on the device. As we've already mentioned, the part of the code that runs on the GPU is called device code, while the serial code that runs on the CPU is called host code.

Let's start by writing our first CUDA code in C. The intention is to take a systematic, step-wise approach: start with some sequential code and convert it into CUDA-aware code by adding some keywords. As we mentioned earlier, there is no need to learn a new language; all we need to do is add some keywords to the existing language so that we can run it in a heterogeneous environment with a CPU and a GPU.

Let's take a look at our first piece of code. All this code does is print Hello World! from both the host and device:

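    #include <stdio.h>
    #include <stdlib.h>

    // Kernel: runs on the device (GPU) and is launched from the host.
    __global__ void print_from_gpu(void) {
        printf("Hello World! from thread [%d,%d] From device\n",
               threadIdx.x, blockIdx.x);
    }

    int main(void) {
        printf("Hello World from host!\n");

        // Launch the kernel with one block of one thread.
        print_from_gpu<<<1,1>>>();

        // Wait for the device to finish before the program exits.
        cudaDeviceSynchronize();

        return 0;
    }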

Let's try to compile and run the preceding snippet:

- Compile the code: Place the preceding code into a file called hello_world.cu and compile it using the NVIDIA CUDA Compiler (nvcc). Note that the extension of the file is .cu, which tells the compiler that this file has GPU code inside it:

$ nvcc -o hello_world hello_world.cu

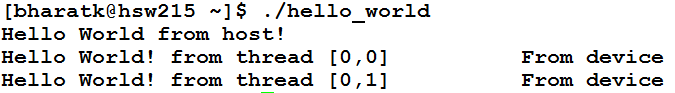

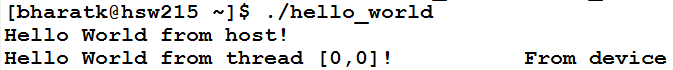

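- Execute the GPU code: Run the resulting binary (./hello_world). The program should print the Hello World from host! line, followed by the Hello World! line from the device, which reports thread [0,0].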

By now, you might have already observed that CUDA C code doesn't look very different from ordinary C code. It only requires that we learn some additional constructs to tell the compiler which function is GPU code and how to call a GPU function. We don't need to learn a new language altogether.

In the preceding code, we added a few constructs and keywords, as follows:

- __global__: This keyword, when added before a function, tells the compiler that the function will run on the device and not on the host. However, note that it is called by the host. Such a function is called a kernel, and its return type must always be void. Data-parallel portions of an algorithm are executed on the device as kernels.

- <<<,>>>: This execution configuration syntax tells the compiler that this is a call to a device function and not a host function. The two values inside the angle brackets set the launch configuration: the first is the number of thread blocks and the second is the number of threads per block. We will cover these parameters in more detail later. For now, 1,1 means we are launching the kernel with one block containing one thread, which is effectively sequential code, since we are not doing anything in the kernel apart from printing.

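- threadIdx.x, blockIdx.x: These built-in variables give a thread's index within its block and the block's index within the grid. Together, they identify each thread uniquely. We will cover this topic more in the next section.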

- cudaDeviceSynchronize(): All kernel calls in CUDA are asynchronous. The host regains control right after launching the kernel and continues with the next instruction. This should come as no surprise, since in a heterogeneous environment the host and device can run in parallel to make use of both types of processors. When the host needs to wait for the device to finish, CUDA provides APIs that block the host code until the device work is complete. One such API is cudaDeviceSynchronize, which waits until all previously issued device work has finished. In our example, this also ensures that the output of the kernel's printf appears before the program exits.
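To see these constructs working together with more than one thread, here is a minimal sketch. It is not part of the original example. The kernel name print_ids and the 2,4 launch configuration are chosen purely for illustration, and the error checks use cudaGetLastError and cudaGetErrorString from the CUDA runtime API.

```cuda
#include <stdio.h>

// Hypothetical kernel for illustration: every thread prints its own
// block index and thread index.
__global__ void print_ids(void) {
    printf("block %d, thread %d\n", blockIdx.x, threadIdx.x);
}

int main(void) {
    // Launch 2 blocks of 4 threads each, so 8 threads run the kernel body.
    print_ids<<<2, 4>>>();

    // The launch is asynchronous: control returns to the host right away,
    // so this line does not wait for the kernel to finish.
    printf("Kernel launched; host continues\n");

    // An invalid launch configuration is reported through cudaGetLastError().
    cudaError_t err = cudaGetLastError();
    if (err != cudaSuccess) {
        fprintf(stderr, "Launch failed: %s\n", cudaGetErrorString(err));
        return 1;
    }

    // Block the host until the device has finished. This also flushes the
    // output produced by printf inside the kernel.
    err = cudaDeviceSynchronize();
    if (err != cudaSuccess) {
        fprintf(stderr, "Kernel failed: %s\n", cudaGetErrorString(err));
        return 1;
    }

    return 0;
}
```

Compiled with nvcc in the same way as hello_world.cu, this should print eight lines from the device, one per thread, plus the host message. The order in which the two blocks print is not guaranteed, so the output may differ between runs.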