If we were to describe the perfect Distributed Database System (DDBS), it would be a database that is highly scalable, provides perfectly consistent data, and requires little attention in terms of management (tasks such as backups, migrations, and managing the network). Unfortunately, the CAP theorem, formulated by Eric Brewer, states that this combination is not possible.

Note

To date, there is no database solution that can provide the ideal combination of features such as total data consistency, high availability, and scalability all together.

For details, check Brewer, Eric (2000), Towards Robust Distributed Systems, PODC, p. 7, DOI: 10.1145/343477.343502 (https://www.researchgate.net/publication/221343719_Towards_robust_distributed_systems).

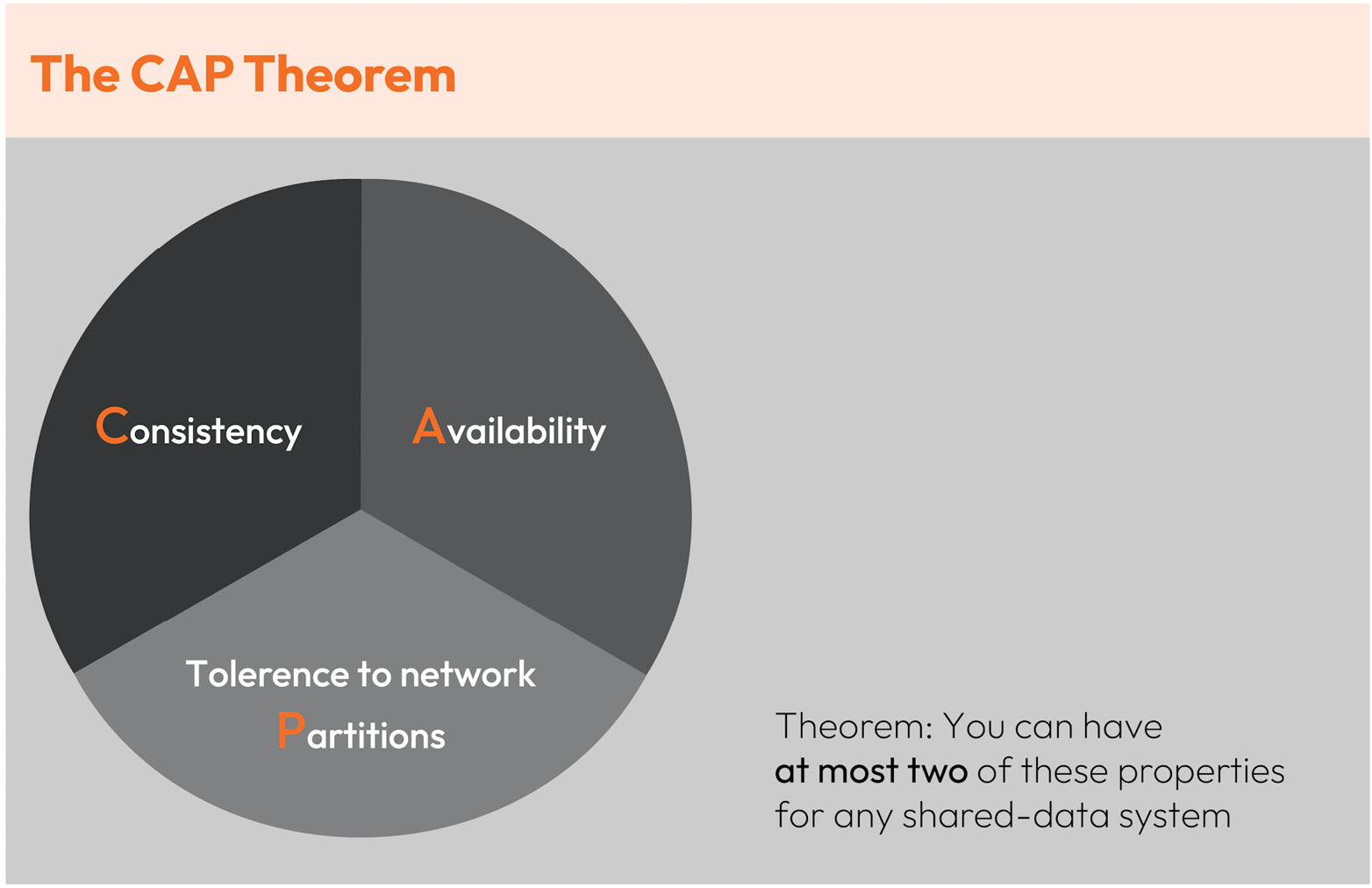

The CAP theorem is a way of understanding the trade-offs between different properties of a DDBS. Eric Brewer, at the 2000 Symposium on Principles of Distributed Computing (PODC), conjectured that when creating a DDBS, “you can have at most two of these properties for any shared-data system,” referring to the properties consistency, availability, and tolerance to network partitions.

Figure 1.2 – Representation inspired by Eric Brewer’s keynote presentation

Note

For more information on Eric Brewer’s work, refer to Brewer, Eric (2000), Towards Robust Distributed Systems, keynote presentation: https://people.eecs.berkeley.edu/~brewer/cs262b-2004/PODC-keynote.pdf.

The three characteristics described in the CAP theorem can be described as follows:

- Consistency: The guarantee that every node in a distributed cluster returns the same, most recent, successful write.

- Availability: Every non-failing node returns a response for all read and write requests in a reasonable amount of time.

- Partition tolerance: The system continues to function and uphold its consistency guarantees despite network partitions, that is, when messages between nodes are lost or delayed and groups of nodes can no longer communicate with each other. Such partitions can be triggered by crashes, hardware failures, database, software, and OS upgrades, power outages, and other factors.

Consequently, the DDBSes we can pick and choose from would only be CA (consistent and highly available), CP (consistent and partition-tolerant), or AP (highly available and partition-tolerant).
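To make the CP and AP options more concrete, the following is a minimal sketch of a toy two-replica store with a switchable network partition. It is not taken from any real database: the names `TinyCluster`, `Replica`, and `Unavailable` are hypothetical, and the model deliberately ignores quorums, timeouts, and conflict resolution. It only illustrates the choice a system faces once a partition occurs: refuse the request (CP) or accept it and let the replicas diverge (AP).

```python
from dataclasses import dataclass, field


class Unavailable(Exception):
    """Raised when a CP-style cluster refuses a write during a partition."""


@dataclass
class Replica:
    name: str
    data: dict = field(default_factory=dict)


class TinyCluster:
    """Two replicas and a toggleable partition (illustrative assumption only)."""

    def __init__(self, mode: str):
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.partitioned = False
        self.a = Replica("a")
        self.b = Replica("b")

    def write(self, replica: Replica, key: str, value: str) -> None:
        other = self.b if replica is self.a else self.a
        if not self.partitioned:
            # Healthy network: the write reaches both replicas.
            replica.data[key] = value
            other.data[key] = value
        elif self.mode == "CP":
            # Preserve consistency: reject the write instead of diverging.
            raise Unavailable(f"{replica.name} cannot reach {other.name}")
        else:
            # Preserve availability: accept locally; replicas now disagree.
            replica.data[key] = value

    def read(self, replica: Replica, key: str):
        # Reads are served from the local copy of the chosen replica.
        return replica.data.get(key)


if __name__ == "__main__":
    for mode in ("CP", "AP"):
        cluster = TinyCluster(mode)
        cluster.write(cluster.a, "k", "v1")
        cluster.partitioned = True
        try:
            cluster.write(cluster.a, "k", "v2")
            outcome = "write accepted"
        except Unavailable:
            outcome = "write rejected"
        print(mode, outcome, cluster.read(cluster.a, "k"), cluster.read(cluster.b, "k"))
```

In this sketch, the CP cluster stays consistent by giving up availability for writes, while the AP cluster keeps answering but returns different values depending on which replica is asked.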

Tip

As stressed in the book Fundamentals of Software Architecture: An Engineering Approach, good software architecture requires dealing with trade-offs. This is yet another trade-off to take into consideration (https://www.amazon.com/Fundamentals-Software-Architecture-Engineering-Approach-ebook/dp/B0849MPK73/).

With the CAP theorem in mind, we can make better-informed decisions when choosing between SQL and NoSQL. For example, traditional DBMSes thrive when (mostly) providing the Atomicity, Consistency, Isolation, and Durability (ACID) properties; however, in distributed systems, it may be necessary to give up consistency and isolation in order to achieve higher availability and better performance. This is commonly known as sacrificing consistency for availability.

In 2002, two years after the idea of CAP was proposed, Seth Gilbert and Nancy Lynch at MIT published a formal proof of Brewer’s conjecture. Later, another expert on database system architecture and implementation, who has also researched scalable and distributed systems, extended the theorem with the consideration of the consistency and latency trade-off.

In 2012, Prof. Daniel Abadi published a study stating that CAP has become “increasingly misunderstood and misapplied, causing significant harm,” leading to unnecessarily limited Distributed Database Management System (DDBMS) designs, as CAP only imposes limitations in the face of certain types of failures – not during normal operations.

Abadi’s paper, Consistency Tradeoffs in Modern Distributed Database System Design, proposes a new formulation, PACELC, which extends CAP with the trade-off a system faces even when the network is healthy. The acronym is built from the letters of the following question quoted in the paper, which clarifies the main idea: “If there is a partition (P), how does the system trade off availability and consistency (A and C); else (E), when the system is running normally in the absence of partitions, how does the system trade off latency (L) and consistency (C)?”
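The two-part question can be captured as a small data structure. The sketch below is only an illustration: the class and enum names are hypothetical, and the `PA/EL`-style label follows the notation used in Abadi’s paper to summarize a system’s choice in each of the two situations.

```python
from dataclasses import dataclass
from enum import Enum


class PartitionChoice(Enum):
    """What the system favors while a partition (P) is in effect."""
    AVAILABILITY = "A"
    CONSISTENCY = "C"


class NormalChoice(Enum):
    """What the system favors otherwise (E), during normal operation."""
    LATENCY = "L"
    CONSISTENCY = "C"


@dataclass(frozen=True)
class PacelcProfile:
    on_partition: PartitionChoice
    otherwise: NormalChoice

    @property
    def label(self) -> str:
        # Example: availability under partition, latency otherwise -> "PA/EL"
        return f"P{self.on_partition.value}/E{self.otherwise.value}"


if __name__ == "__main__":
    # Enumerate the four possible combinations.
    for p in PartitionChoice:
        for e in NormalChoice:
            print(PacelcProfile(p, e).label)
```

A profile such as `PacelcProfile(PartitionChoice.CONSISTENCY, NormalChoice.CONSISTENCY)` yields `PC/EC`, describing a system that favors consistency in both situations.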

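According to Abadi, the consistency trade-off does not disappear when the network is healthy: as soon as a system replicates data, it must choose between waiting for replicas to agree (stronger consistency, higher latency) and responding before they do (lower latency, weaker consistency). During normal operation, a distributed database can therefore lean toward high consistency or toward low latency, but gaining more of one comes at the expense of the other.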
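The latency and consistency trade-off during normal operation can be illustrated with some simple arithmetic. The sketch below rests on a simplified model that is an assumption, not something taken from the paper: a write is applied locally, sent to all remote replicas in parallel, and acknowledged to the client once a configurable number of replicas have confirmed it. The function name and the sample round-trip times are hypothetical.

```python
from __future__ import annotations


def write_latency_ms(
    local_ms: float,
    replica_rtts_ms: list[float],
    acks_required: int,
) -> float:
    """Time until the client is acknowledged, under the simplified model.

    acks_required is the number of remote replicas that must confirm
    the write before the client receives a response.
    """
    if not 0 <= acks_required <= len(replica_rtts_ms):
        raise ValueError("acks_required must be between 0 and the replica count")
    if acks_required == 0:
        # Asynchronous replication: respond right after the local write.
        return local_ms
    # Replicas are contacted in parallel, so the wait is determined by
    # the k-th fastest round trip, where k = acks_required.
    kth_fastest = sorted(replica_rtts_ms)[acks_required - 1]
    return local_ms + kth_fastest


if __name__ == "__main__":
    rtts = [5.0, 40.0, 120.0]  # hypothetical round-trip times to three replicas
    for acks in range(len(rtts) + 1):
        print(acks, write_latency_ms(1.0, rtts, acks))
```

With these sample numbers, waiting for no replica costs 1 ms, waiting for the fastest one costs 6 ms, and waiting for all three costs 121 ms: each additional confirmation narrows the window for stale reads but makes the client wait longer.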

At this point, the intricacies of building database systems, particularly distributed ones, should be much clearer. For professionals tasked with evaluating and selecting DDBSes and designing solutions on top of them, a fundamental understanding of the concepts discussed in these studies serves as a valuable foundation for informed decision-making.
