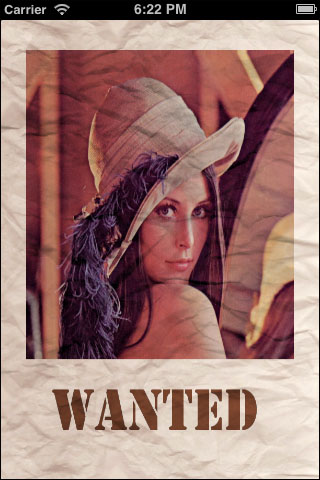

In this recipe, we'll discuss how to use your C++ classes from Objective-C code. We'll create a simple application that prints a pretty postcard using an image of a face. We will also learn how to measure the processing time of your methods, so that you can track their efficiency.

The following screenshot shows the resulting postcard:

The source code for this recipe is in the Recipe05_PrintingPostcard folder in the code bundle that accompanies this book, where you can find the implementation of the PostcardPrinter class and the images used in our application. You can use the iOS Simulator to work on this recipe.

The following is how we can implement a postcard printing application:

1. Take the application skeleton from the previous recipe.
2. Add the header and implementation files of your C++ class to the Xcode project.
3. Add the calling code for postcard printing.
4. Add time measurements and logging for the printing function.

Let's implement the described steps:

First, create a new project for our application; to save time, you can reuse the code from the previous recipe.

Next, we need to add the PostcardPrinter.hpp header file with the following class declaration:

```cpp
#pragma once

#include <opencv2/core/core.hpp>

class PostcardPrinter
{
public:
    struct Parameters
    {
        cv::Mat face;
        cv::Mat texture;
        cv::Mat text;
    };

    PostcardPrinter(Parameters& parameters);
    virtual ~PostcardPrinter() {}

    void print(cv::Mat& postcard) const;

protected:
    void markup();
    void crumple(cv::Mat& image, const cv::Mat& texture,
                 const cv::Mat& mask = cv::Mat()) const;
    void printFragment(cv::Mat& placeForFragment,
                       const cv::Mat& fragment) const;
    void alphaBlendC3(const cv::Mat& src, cv::Mat& dst,
                      const cv::Mat& alpha) const;

    Parameters params_;
    cv::Rect faceRoi_;
    cv::Rect textRoi_;
};
```

Then, we need to implement all the methods of the PostcardPrinter class. We'll consider only the print, crumple, and printFragment methods, because the others are trivial:

```cpp
#include "PostcardPrinter.hpp"

#include <opencv2/imgproc/imgproc.hpp>
#include <vector>

using namespace cv;
using namespace std;

void PostcardPrinter::printFragment(Mat& placeForFragment,
                                    const Mat& fragment) const
{
    // Get alpha channel
    vector<Mat> fragmentPlanes;
    split(fragment, fragmentPlanes);
    CV_Assert(fragmentPlanes.size() == 4);
    Mat alpha = fragmentPlanes[3];
    fragmentPlanes.pop_back();
    Mat bgrFragment;
    merge(fragmentPlanes, bgrFragment);

    // Add fragment with crumpling and alpha
    crumple(bgrFragment, placeForFragment, alpha);
    alphaBlendC3(bgrFragment, placeForFragment, alpha);
}

void PostcardPrinter::print(Mat& postcard) const
{
    postcard = params_.texture.clone();
    Mat placeForFace = postcard(faceRoi_);
    Mat placeForText = postcard(textRoi_);

    printFragment(placeForFace, params_.face);
    printFragment(placeForText, params_.text);
}

void PostcardPrinter::crumple(Mat& image, const Mat& texture,
                              const Mat& mask) const
{
    Mat relief;
    cvtColor(texture, relief, CV_BGR2GRAY);
    relief = 255 - relief;

    Mat hsvImage;
    cvtColor(image, hsvImage, CV_BGR2HSV);
    vector<Mat> planes;
    split(hsvImage, planes);
    subtract(planes[2], relief, planes[2], mask);
    merge(planes, hsvImage);
    cvtColor(hsvImage, image, CV_HSV2BGR);
}
```

Now we're ready to use our class from Objective-C code. We will also measure how long it takes to print the postcard and log this information to the console. Let's use the following implementation of the viewDidLoad method:

```objc
- (void)viewDidLoad
{
    [super viewDidLoad];

    PostcardPrinter::Parameters params;

    // Load image with face
    UIImage* image = [UIImage imageNamed:@"lena.jpg"];
    UIImageToMat(image, params.face);

    // Load image with texture
    image = [UIImage imageNamed:@"texture.jpg"];
    UIImageToMat(image, params.texture);
    cvtColor(params.texture, params.texture, CV_RGBA2RGB);

    // Load image with text
    image = [UIImage imageNamed:@"text.png"];
    UIImageToMat(image, params.text, true);

    // Create PostcardPrinter class
    PostcardPrinter postcardPrinter(params);

    // Print postcard, and measure printing time
    cv::Mat postcard;
    int64 timeStart = cv::getTickCount();
    postcardPrinter.print(postcard);
    int64 timeEnd = cv::getTickCount();
    float durationMs =
        1000.f * float(timeEnd - timeStart) / cv::getTickFrequency();
    NSLog(@"Printing time = %.3fms", durationMs);

    if (!postcard.empty())
        imageView.image = MatToUIImage(postcard);
}
```

You can now build and run your application to see the result.
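The print method relies on the fact that `postcard(faceRoi_)` does not copy pixels: it returns a cv::Mat header that shares its data with `postcard`, so whatever printFragment writes into `placeForFace` ends up in the postcard itself. The following plain C++ sketch illustrates that idea without OpenCV. The `Image`, `Rect`, and `RoiView` types and the `roi` function are hypothetical stand-ins (single-channel, row-major storage), not OpenCV's API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical rectangle, analogous in spirit to cv::Rect.
struct Rect { int x, y, width, height; };

// Hypothetical owning single-channel image stored row by row.
struct Image {
    int cols, rows;
    std::vector<std::uint8_t> data;
    Image(int c, int r, std::uint8_t fill = 0)
        : cols(c), rows(r), data(std::size_t(c) * r, fill) {}
};

// Non-owning view of a rectangular region: it only remembers where the
// region starts and how long a parent row is, so writes land in the parent.
struct RoiView {
    std::uint8_t* origin;  // first pixel of the region inside the parent buffer
    int stride;            // parent row length in pixels
    int cols, rows;        // region size
    std::uint8_t& at(int y, int x) { return origin[std::size_t(y) * stride + x]; }
};

// Create a view of region r inside img (no pixels are copied).
RoiView roi(Image& img, const Rect& r) {
    assert(r.x >= 0 && r.y >= 0 &&
           r.x + r.width <= img.cols && r.y + r.height <= img.rows);
    return {img.data.data() + std::size_t(r.y) * img.cols + r.x,
            img.cols, r.width, r.height};
}
```

As with `postcard(faceRoi_)`, the view stays valid only while the parent buffer is alive and not reallocated; that is also why print clones the texture first and then takes both regions from the clone.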

You can see that the PostcardPrinter class is an ordinary C++ class, and it could be used in any desktop application as well. We won't discuss its implementation in detail, as it is not iOS-specific and relies on simple OpenCV functions. We will only mention that the crumpling effect is implemented by changing the intensity values of the image in the HSV color space: we first compute the relief from the texture (its inverted grayscale version) and then subtract it from the intensity plane of the image (the value channel in HSV).
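To make the crumpling step concrete, here is a per-pixel sketch in plain C++ of what crumple computes. It assumes a hypothetical `Bgr` pixel struct and uses the standard gray weights (0.299 R + 0.587 G + 0.114 B) in place of cvtColor. It also relies on a property of HSV: the value channel is V = max(B, G, R), and lowering V while keeping hue and saturation fixed scales all three channels by the same factor V'/V. The sketch therefore scales the pixel directly instead of converting to HSV and back; results can differ from OpenCV's 8-bit conversion by rounding.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical 8-bit BGR pixel (stands in for one element of a CV_8UC3 cv::Mat).
struct Bgr { std::uint8_t b, g, r; };

// Relief of a texture pixel: 255 minus its gray level, so bright paper gives
// little relief and dark creases give a lot.
inline int reliefOf(const Bgr& t) {
    const double gray = 0.114 * t.b + 0.587 * t.g + 0.299 * t.r;
    return 255 - static_cast<int>(std::lround(gray));
}

// Lower the HSV value channel (V = max(B, G, R)) by the relief, clamping at 0
// like a saturating subtract. With H and S unchanged, the result is the
// original pixel scaled by newV / V.
inline Bgr crumplePixel(const Bgr& p, const Bgr& texture) {
    const int v = std::max({int(p.b), int(p.g), int(p.r)});
    if (v == 0) return p;  // black pixels have nothing to darken
    const int newV = std::max(0, v - reliefOf(texture));
    const double k = double(newV) / v;
    auto scale = [k](std::uint8_t c) {
        return static_cast<std::uint8_t>(std::lround(c * k));
    };
    return {scale(p.b), scale(p.g), scale(p.r)};
}

// Apply to every pixel whose mask entry is non-zero (empty mask = all pixels).
void crumple(std::vector<Bgr>& image, const std::vector<Bgr>& texture,
             const std::vector<std::uint8_t>& mask = {}) {
    assert(texture.size() == image.size());
    assert(mask.empty() || mask.size() == image.size());
    for (std::size_t i = 0; i < image.size(); ++i)
        if (mask.empty() || mask[i] != 0)
            image[i] = crumplePixel(image[i], texture[i]);
}
```

A pure white texture pixel yields zero relief and leaves the photo untouched, while darker texture areas darken the photo proportionally, which is what produces the "printed on crumpled paper" look.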

In viewDidLoad, we first load the images. Note that params.texture is converted to the RGB color space to comply with the input format of PostcardPrinter, whereas params.text is loaded with its alpha channel, which is later used to avoid font aliasing. The last Boolean argument of the UIImageToMat function indicates that the image should be converted with its alpha channel:

```cpp
UIImageToMat(image, params.text, true);
```
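printFragment separates the alpha plane from the four-channel fragment and passes it to alphaBlendC3, whose body the recipe does not show. The sketch below assumes alphaBlendC3 performs standard per-channel alpha blending of 8-bit values, `dst = (src * a + dst * (255 - a)) / 255`; the `Bgra` and `Bgr` structs and the `blendChannel` and `blendFragment` functions are hypothetical and work on flat pixel arrays instead of cv::Mat. Anti-aliased glyph edges typically carry intermediate alpha values, so blending them, rather than copying pixels, keeps the letters smooth.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical interleaved pixels standing in for CV_8UC4 / CV_8UC3 elements.
struct Bgra { std::uint8_t b, g, r, a; };
struct Bgr  { std::uint8_t b, g, r; };

// Blend one 8-bit channel: alpha 255 keeps src, alpha 0 keeps dst, and
// intermediate values mix the two. Adding 127 rounds the division by 255.
inline std::uint8_t blendChannel(std::uint8_t src, std::uint8_t dst,
                                 std::uint8_t alpha) {
    const int v = src * alpha + dst * (255 - alpha);
    return static_cast<std::uint8_t>((v + 127) / 255);
}

// Use each fragment pixel's own alpha to blend its color part onto the
// destination region of the same size.
void blendFragment(const std::vector<Bgra>& fragment, std::vector<Bgr>& place) {
    assert(fragment.size() == place.size());
    for (std::size_t i = 0; i < fragment.size(); ++i) {
        const Bgra& f = fragment[i];
        Bgr& d = place[i];
        d.b = blendChannel(f.b, d.b, f.a);
        d.g = blendChannel(f.g, d.g, f.a);
        d.r = blendChannel(f.r, d.r, f.a);
    }
}
```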

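Also note that C++ code can be called seamlessly from Objective-C code, as long as the calling source file is compiled as Objective-C++ (that is, it has the .mm extension) and includes the C++ class header. That's why in viewDidLoad we simply create a PostcardPrinter object and then call its methods.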

Finally, note how the time measurements are added, using OpenCV's getTickCount and getTickFrequency functions. The following expression computes the elapsed time in seconds, so we multiply it by 1000 to get the printing time in milliseconds:

```cpp
float(timeEnd - timeStart) / cv::getTickFrequency();
```
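The conversion from ticks to milliseconds is easy to isolate and check. The sketch below wraps it in a hypothetical `ticksToMs` helper that takes two tick counts and the tick frequency (the values cv::getTickCount and cv::getTickFrequency would return). For comparison, it also shows a hypothetical `measureMs` function built on the standard library's `std::chrono::steady_clock`; this is a swapped-in alternative for code that does not link OpenCV, not what the recipe uses.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>

// Ticks divided by ticks-per-second gives seconds; multiplying by 1000
// gives milliseconds, exactly as in the recipe.
inline double ticksToMs(std::int64_t timeStart, std::int64_t timeEnd,
                        double ticksPerSecond) {
    assert(ticksPerSecond > 0.0);
    return 1000.0 * double(timeEnd - timeStart) / ticksPerSecond;
}

// Standard-library alternative (not OpenCV): time a callable with a
// monotonic clock and return the elapsed wall time in milliseconds.
template <typename F>
double measureMs(F&& f) {
    const auto start = std::chrono::steady_clock::now();
    f();
    const auto end = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(end - start).count();
}
```

For instance, `double ms = measureMs([&] { postcardPrinter.print(postcard); });` would time the same call as viewDidLoad does. A monotonic clock is used because, unlike the system wall clock, it cannot jump backwards during a measurement.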

Depending on your device, the time will vary, but it shouldn't exceed half a second, which is good enough for our use case. In later recipes, rather than calling the getTickCount function directly, we'll use the following helper macros:

```cpp
#define TS(name) int64 t_##name = cv::getTickCount()
#define TE(name) printf("TIMER_" #name ": %.2fms\n", \
    1000.*((cv::getTickCount() - t_##name) / cv::getTickFrequency()))
```

These macros perform the same measurement, but they greatly improve the readability of the code under inspection. They let us measure execution time in a simple manner, as shown in the following code:

```cpp
// Print postcard
cv::Mat postcard;
TS(PostcardPrinting);
postcardPrinter.print(postcard);
TE(PostcardPrinting);
```

Our PostcardPrinter class is quite simple, but you can use it as the starting point for a full-featured application. You can add more textures, effects, and fonts, so that users have more freedom when designing their postcards. In the next recipe, we apply a cool vintage effect to the photo, so that it looks like a real poster from the 19th century. Its OpenCV implementation is quite simple, so we leave it for self-study.

The text for this recipe was rendered using GIMP, a free alternative to Photoshop. We encourage you to get familiar with this tool (or with Photoshop) if you're going to implement advanced photo effects. You can design beautiful textures and icons for your application using GIMP.

iOS provides several frameworks that can be used for accelerating image processing operations. The two most important ones are Accelerate (http://bit.ly/3848_Accelerate) and CoreImage (http://bit.ly/3848_CoreImage). We will work with Accelerate in the Using the Accelerate framework (Advanced) recipe, but we'll provide only high-level information on CoreImage because of space limitations.

The CoreImage framework provides a set of functions that allow you to enhance, filter, and blend images, and even detect faces. This API is native to iOS, and it can use the GPU for acceleration, so you may find CoreImage useful for your purposes. In fact, its functionality overlaps with that of OpenCV, and some photo processing applications can be developed without linking to OpenCV at all. But if you want to tweak your effects and performance manually, OpenCV is the right choice.