This tool lets us generate a base project from which we can start developing our own tool.

The official repository of this tool is https://github.com/abirtone/STB.

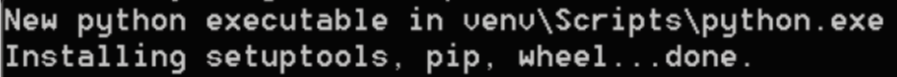

To install it, we can download the source code and run the setup.py file, which downloads the dependencies listed in the requirements.txt file.

Alternatively, we can install it with the pip install stb command.

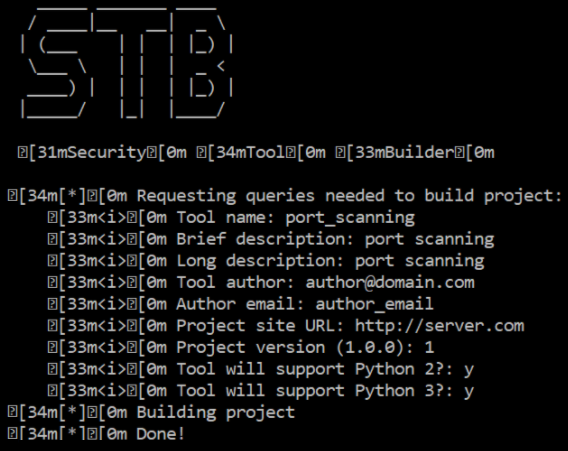

When we run the stb command, we get the following screen, which asks for the information needed to create our project:

This command generates an application skeleton with a setup.py file, which we can run if we want to install the tool as a command in the system:

python setup.py install

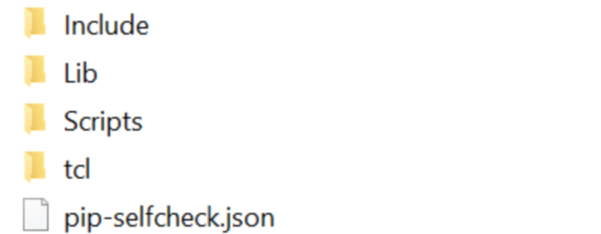

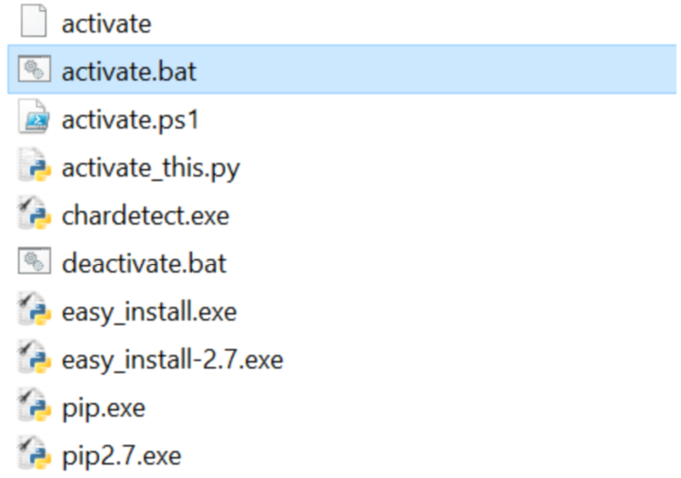

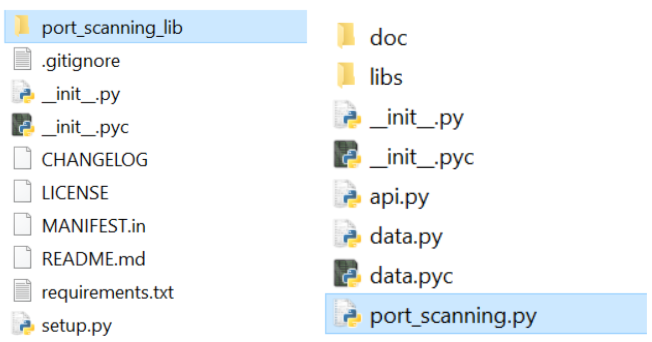

When we execute the previous command, we obtain the following folder structure:

This has also created a port_scanning_lib folder that contains the files that allow us to execute it:

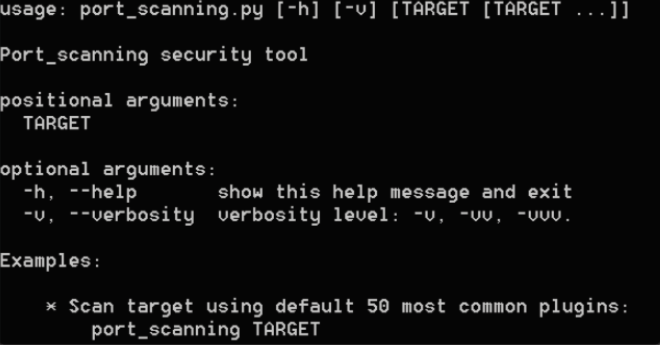

python port_scanning.py -h

If we execute the script with the help option (-h), we see that there is a series of parameters we can use:

We can see the code that has been generated in the port_scanning.py file:

    parser = argparse.ArgumentParser(
        description='%s security tool' % "port_scanning".capitalize(),
        epilog=examples,
        formatter_class=argparse.RawTextHelpFormatter)

    # Main options
    parser.add_argument("target", metavar="TARGET", nargs="*")
    parser.add_argument("-v", "--verbosity", dest="verbose", action="count",
                        help="verbosity level: -v, -vv, -vvv.", default=1)

    parsed_args = parser.parse_args()

    # Configure global log
    log.setLevel(abs(5 - parsed_args.verbose) % 5)

    # Set Global Config
    config = GlobalParameters(parsed_args)

Here, we can see the parameters that are defined, and that the values stored in the parsed_args variable are passed on through a GlobalParameters object. The method to be executed is found in the api.py file.
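To make the verbosity handling easier to follow, here is a minimal sketch that reproduces only the two argument definitions and the level arithmetic from the generated code. The `build_parser` and `log_level` names are illustrative helpers, not part of what STB generates, and the `epilog` text is left out because the `examples` variable is not shown in this excerpt.

```python
import argparse


def build_parser():
    # Same two options as the generated port_scanning.py (epilog omitted).
    parser = argparse.ArgumentParser(
        description="%s security tool" % "port_scanning".capitalize(),
        formatter_class=argparse.RawTextHelpFormatter,
    )
    parser.add_argument("target", metavar="TARGET", nargs="*")
    parser.add_argument("-v", "--verbosity", dest="verbose", action="count",
                        default=1, help="verbosity level: -v, -vv, -vvv.")
    return parser


def log_level(verbose):
    # Hypothetical helper: the same expression the generated code
    # passes to log.setLevel().
    return abs(5 - verbose) % 5


if __name__ == "__main__":
    for argv in (["127.0.0.1"], ["-v", "127.0.0.1"], ["-vvv", "127.0.0.1"]):
        args = build_parser().parse_args(argv)
        print(argv, args.target, args.verbose, log_level(args.verbose))
```

Because the counter starts at its default of 1, each `-v` adds one to it: no flag gives a level of 4, `-v` gives 3, and `-vvv` gives 1. What each numeric level means depends on the logger that STB generates, which is not shown here.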

For example, at this point, we could retrieve the parameters entered from the command line:

    # ----------------------------------------------------------------------
    #
    # API call
    #
    # ----------------------------------------------------------------------
    def run(config):
        """
        :param config: GlobalParameters option instance
        :type config: `GlobalParameters`

        :raises: TypeError
        """
        if not isinstance(config, GlobalParameters):
            raise TypeError("Expected GlobalParameters, got '%s' instead" % type(config))

        # --------------------------------------------------------------------------
        # INSERT YOUR CODE HERE # TODO
        # --------------------------------------------------------------------------
        print(config)
        print(config.target)

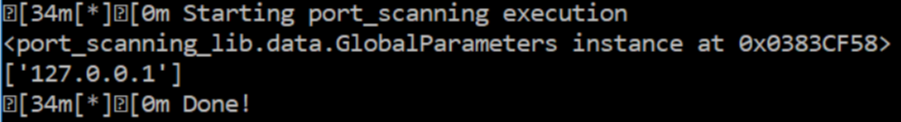
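The hand-off between the parsed arguments and `run` can be tried in isolation with the sketch below. The `GlobalParameters` class here is a hypothetical stand-in that simply copies each parsed argument onto the instance; the real class generated by STB may be implemented differently. This `run` returns the targets instead of printing them, which is a choice made for the example only.

```python
import argparse


class GlobalParameters(object):
    """Hypothetical stand-in: copies every parsed argument onto the instance."""

    def __init__(self, parsed_args):
        for name, value in vars(parsed_args).items():
            setattr(self, name, value)


def run(config):
    # Same type check as the generated api.py.
    if not isinstance(config, GlobalParameters):
        raise TypeError("Expected GlobalParameters, got '%s' instead" % type(config))
    # Return the targets instead of printing them so the caller can reuse them.
    return list(config.target)


if __name__ == "__main__":
    # Namespace mimics what parser.parse_args() would return.
    parsed = argparse.Namespace(target=["127.0.0.1"], verbose=1)
    print(run(GlobalParameters(parsed)))
```

Passing anything other than a `GlobalParameters` instance, such as the raw `argparse.Namespace`, raises the `TypeError` from the type check.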

We can run the script from the command line, passing the target IP address as a parameter:

python port_scanning.py 127.0.0.1

If we run it now, the output shows the target that we passed as the first parameter.
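As one possible way to fill in the `INSERT YOUR CODE HERE` section of a project named port_scanning, the sketch below checks a single TCP port with the standard `socket` module. The `is_port_open` helper, its parameters, and the default timeout are assumptions made for illustration; STB does not generate them. The demonstration opens its own listener on the loopback interface so that it does not touch any other host.

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds (hypothetical helper)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.settimeout(timeout)
    try:
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0
    finally:
        sock.close()


if __name__ == "__main__":
    # Demonstration against a listener we open ourselves on loopback.
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    print(port, is_port_open("127.0.0.1", port))
    server.close()
```

Inside `run(config)`, a helper like this could be called for each entry in `config.target`, assuming that attribute holds the hosts given on the command line. Only scan hosts that you are authorized to test.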
