Getting an overview of Google Cloud

As we discussed in the previous section, some of Google Cloud's key market differentiators are its democratized big data and AI innovations and its open source friendly ecosystem. Data-driven businesses that already work with open source tools and frameworks such as Kubernetes, TensorFlow, Apache Spark, and Apache Beam will find Google to be a well-suited cloud provider, as these technologies are first-class citizens on Google Cloud Platform (GCP). Although you can technically deploy open source software on any cloud platform using VMs, Google has gone to greater lengths than its competitors by offering several of them as cloud-native managed services through its PaaS offerings.

GCP also has one of the world's largest high-speed software-defined networks. At the time of writing, Google Cloud is available in over 200 countries and territories, with 24 cloud regions and 144 network edge locations. It would come as no surprise, however, if these numbers have increased by the time you read this.

In this section, we're going to explore how GCP is structured into regions and zones, what its core services are, and how it approaches the security of the platform and its resource hierarchy.

Regions and zones

GCP is organized into regions and zones. Regions are independent and broad geographic areas, such as europe-west1 or us-east4, while zones are more specific locations that may or may not correspond to a single physical data center, but which can be thought of as single failure domains. Networked locations within a region typically have round-trip network latencies under 5ms, often under 1ms.

Most regions have three or more zones, which are specified with a letter suffix added to the region name. For example, region us-east4 has three zones: us-east4-a, us-east4-b, and us-east4-c. The mapping of a zone to a physical location is not the same for every organization. In other words, there's no single location that Google calls "zone A" within the us-east4 region. There are typically at least three different physical locations, and what a zone letter corresponds to will differ between organizations, since these mappings are done independently and dynamically. The main reason for this is to keep resource usage balanced within a region.
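The naming convention above can be illustrated with a short Python sketch. It is only an illustration of the "region name plus letter suffix" rule described in this section, not an official parser, and the helper names split_zone and zones_by_region are made up for this example:

```python
def split_zone(zone: str) -> tuple[str, str]:
    """Split a zone name such as 'us-east4-a' into (region, zone letter).

    Assumes the convention described in the text: the zone name is the
    region name followed by a hyphen and a single letter.
    """
    region, sep, letter = zone.rpartition("-")
    if not sep or not region or len(letter) != 1 or not letter.isalpha():
        raise ValueError(f"not a recognizable zone name: {zone!r}")
    return region, letter


def zones_by_region(zones: list[str]) -> dict[str, list[str]]:
    """Group zone names under the region they belong to."""
    grouped: dict[str, list[str]] = {}
    for zone in zones:
        region, _ = split_zone(zone)
        grouped.setdefault(region, []).append(zone)
    return grouped


# Example using the zones mentioned in the text.
example = zones_by_region(["us-east4-a", "us-east4-b", "us-east4-c"])
print(example)
```

Keep in mind that the letter only identifies a zone within your own organization's view of the region. As explained above, it says nothing about which physical location backs it.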

Certain services in Google Cloud can be deployed as multi-regional resources (spanning several regions). Throughout this book, we will look at the options and design considerations for regional or multi-regional deployments for various services.

Core Google Cloud services

In this section, we'll present a bird's-eye overview of the core GCP services across four different major categories: compute, storage, big data, and machine learning. The purpose is not to present an in-depth description of these services (which will happen in the chapters in section two as we learn how to design solutions), but to provide a quick rundown of the various services in Google Cloud and what they do. Let's take a look at them:

- Compute

Compute Engine: A service for deploying IaaS virtual machines.

Kubernetes Engine: A platform for deploying Kubernetes container clusters.

App Engine: A compute platform for applications written in Java, Python, PHP, Go, or Node.js.

Cloud Functions: A serverless compute platform for executing code written in Java, Python, Go, or Node.js.

- Storage

Bigtable: A fully managed NoSQL database service for large analytical and operational workloads with high volumes and low latency.

Cloud Storage: A highly durable and global object storage.

Cloud SQL: A fully managed relational database service for MySQL, PostgreSQL, and SQL Server.

Cloud Spanner: A fully managed and highly scalable relational database with strong consistency.

Cloud Datastore: A highly scalable NoSQL database for web and mobile applications.

- Big Data

BigQuery: A highly scalable data warehouse service with serverless analytics.

Pub/Sub: A real-time messaging service.

Data Fusion: A fully managed, cloud-native data integration service.

Data Catalog: A fully managed, highly scalable data discovery service.

Dataflow: A serverless stream and batch data processing service based on Apache Beam.

Dataproc: A data analytics service for building Apache Spark, Apache Hadoop, Presto, and other OSS clusters.

- Machine Learning

Natural Language API: A service that provides natural language understanding.

Vision API: A service with pre-trained machine learning models for classifying images and detecting objects and faces, as well as printed and handwritten text.

Speech API: A service that converts audio into text by applying neural network models.

Translation API: A service that translates text between thousands of language pairs.

AutoML: A suite of machine learning products for developing machine learning models with little to no coding.

AI Platform: A code-based development platform with an integrated tool chain that can help you run your own machine learning applications.

Naturally, services similar to the ones presented here could be deployed to virtual machines, with the full power of customization that comes with them. However, a self-hosted, IaaS-based service is not managed by the cloud provider, nor does it come with any service-level guarantees beyond those of the virtual machines themselves. Self-hosting is still a viable option when migrating applications, and sometimes the only one, as will be discussed in later chapters.

Multi-layered security

Security is a legitimate concern for many organizations when considering cloud adoption. For many, it may feel like they're taking a serious business risk in handing over their data and line-of-business applications to another company to host within its data centers. Cloud providers are well aware of such concerns, which is why they have gone to great lengths to design security into their technical infrastructure. After all, the major cloud providers in the market (Google, Amazon, and Microsoft) are themselves consumer technology companies that have to deal with security attacks and penetration attempts on a daily basis. These organizations have years of experience safeguarding their infrastructure against Denial-of-Service (DoS) attacks and even things such as social engineering. In fact, an argument could be made that security concerns over having private data and intellectual property hosted by a third party are somewhat offset by the fact that the third party's infrastructure is likely to be more secure and harder to penetrate than a typical private data center facility. The infrastructure security personnel of a cloud service provider certainly outnumber those of a typical organization, offering more manpower and more concentrated effort toward preventing and mitigating security incidents at the infrastructure layer.

The following are some of the measures taken by Google with its multi-layered security approach to cloud infrastructure:

Because of its sheer scale, Google can deliver a higher level of security at the lower layers of the infrastructure than most of its customers could afford. Several measures are taken to protect users against unauthorized access, and Google's systems are monitored 24 hours a day, 365 days a year by a global operations team.

With that being said, it is important to recognize that security in the cloud is a shared responsibility. Customers are still responsible for securing their data properly, even for PaaS and SaaS services.

Security is a fundamental skill for cloud architects, and incorporating security into any design will be a central theme throughout this book.

Resource hierarchy

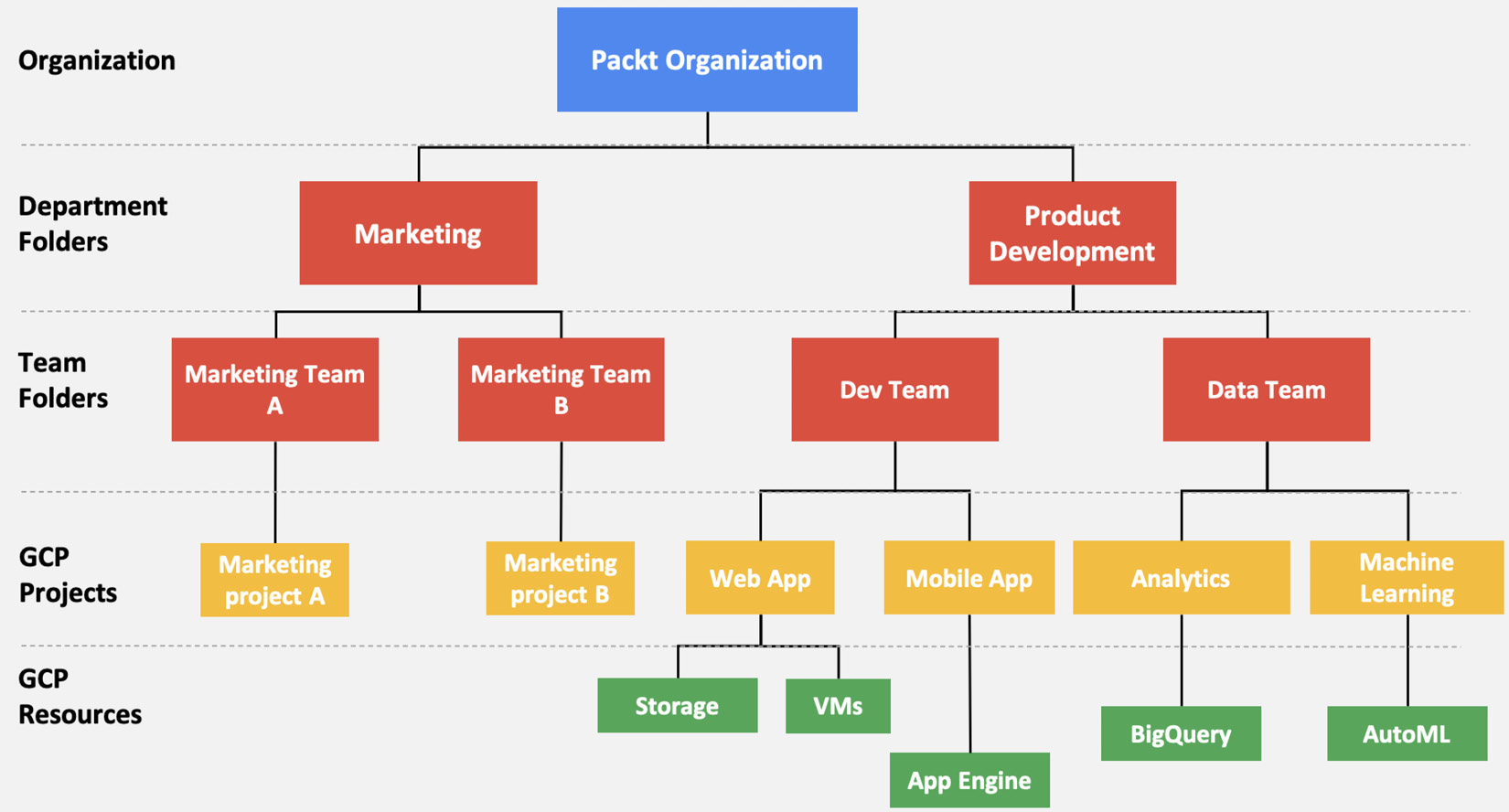

Resources in GCP are structured similarly to how you would organize artifacts in any other type of project.

The project level is where you enable and manage GCP capabilities such as specific APIs to be used, billing, and other Google services, and is also where you can add or remove collaborators. Any resources that are created are connected to a project and belong to exactly one project. A project can have multiple owners and users, and multiple projects can be organized into folders. Folders can nest other folders (sub-folders) up to 10 levels deep, and contain a combination of projects and folders.

One common way to define a folder hierarchy is to have each folder represent a department within the company, with sub-folders representing different teams within the department, each with their own sub-folders representing different applications managed by the team. These will then contain one or more projects that will host the actual cloud resources. At the top level of the hierarchy is the organization node.

If this sounds a little confusing, then take a look at the following diagram, which helps illustrate an example hierarchy. Hopefully, this will make things a little clearer to you:

Figure 1.3 – Resource hierarchy in GCP
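To make the structure more concrete, here is a minimal Python sketch that models the hierarchy as a tree of nodes. It is a toy model for illustration only and does not call any Google Cloud API. The Node class, the add_child helper, and all organization, folder, and project names are hypothetical; the nesting limit of 10 is the one stated above:

```python
from dataclasses import dataclass
from typing import Optional

MAX_FOLDER_DEPTH = 10  # folder nesting limit stated in the text


@dataclass
class Node:
    name: str
    kind: str  # "organization", "folder", or "project"
    parent: Optional["Node"] = None

    def ancestors(self) -> "list[Node]":
        """Return the chain from this node's parent up to the root."""
        chain = []
        node = self.parent
        while node is not None:
            chain.append(node)
            node = node.parent
        return chain

    def path(self) -> str:
        """Return a root-to-node path, with names separated by '/'."""
        names = [n.name for n in reversed(self.ancestors())] + [self.name]
        return "/".join(names)

    def folder_depth(self) -> int:
        """Count the folders on the path, including this node if it is one."""
        return sum(1 for n in self.ancestors() + [self] if n.kind == "folder")


def add_child(parent: Node, name: str, kind: str) -> Node:
    """Create a child node, enforcing the rules described in the text."""
    if parent.kind == "project":
        raise ValueError("a project cannot contain folders or other projects")
    child = Node(name, kind, parent)
    if kind == "folder" and child.folder_depth() > MAX_FOLDER_DEPTH:
        raise ValueError(f"folders can nest at most {MAX_FOLDER_DEPTH} levels deep")
    return child


# Department -> team -> application -> project, as in the example layout above.
org = Node("example.com", "organization")
department = add_child(org, "engineering", "folder")
team = add_child(department, "payments-team", "folder")
application = add_child(team, "checkout-app", "folder")
project = add_child(application, "checkout-prod", "project")
print(project.path())
```

Each node keeps a single parent reference, which mirrors the rule that a resource belongs to exactly one project and a project sits in exactly one place in the hierarchy.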

At every level in this hierarchy (and down to the cloud resources for certain types of resources), Identity and Access Management (IAM) policies can be defined, which are inherited by the nodes down the hierarchy. For example, a policy applied at the organization node level will be automatically inherited by all folders, projects, and resources under it.

Folders can also be used to isolate requirements for different environments, such as production and development. You are not required to organize projects into folders, but it is a recommended best practice that will greatly facilitate management (and access management in particular) for your projects. An organization node is also not a requirement, and not necessarily something you have to obtain if, for example, you have a GCP project for your own personal use and experimentation.

One important thing to note is that an access policy, when applied at a level in the hierarchy, cannot take away access that's been granted at a lower level. For example, a policy applied at project A granting user John editing access will take effect even if, at the organization node (the project's parent level), view-only access is granted. The less restrictive (that is, more permissive) access is applied in this case, and user John will be able to edit resources under project A (but still not under other projects belonging to the same organization).
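The inheritance behavior described above can be sketched in a few lines of Python. This is a simplified model rather than the actual IAM implementation: it treats the effective access on a resource as the union of the roles granted on the resource itself and on each of its ancestors. The PARENTS and POLICIES tables, the resource names, and the "viewer" and "editor" labels are all hypothetical stand-ins chosen to match the John example:

```python
# Hypothetical parent links: each node maps to its parent (None for the root).
PARENTS = {
    "organization": None,
    "project-a": "organization",
    "project-b": "organization",
}

# Hypothetical grants set directly on each node: node -> user -> roles.
POLICIES = {
    "organization": {"john": {"viewer"}},
    "project-a": {"john": {"editor"}},
}


def effective_roles(user: str, resource: str) -> set[str]:
    """Union of the roles granted to `user` on `resource` and its ancestors."""
    roles: set[str] = set()
    node = resource
    while node is not None:
        roles |= POLICIES.get(node, {}).get(user, set())
        node = PARENTS[node]
    return roles


def can_edit(user: str, resource: str) -> bool:
    """In this toy model, only the 'editor' label allows editing."""
    return "editor" in effective_roles(user, resource)


print(sorted(effective_roles("john", "project-a")))
print(can_edit("john", "project-a"), can_edit("john", "project-b"))
```

Because grants are only ever added while walking up the hierarchy, nothing set at the organization node can remove the editing access granted on project A, while project B only receives the inherited view-only access.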