Exploring technologies and use cases

Let’s talk technology! Even though all these technologies can run one or multiple containers, they behave differently. In the next chapters of this book, we will deep-dive into each technology and its use cases. But first, let’s introduce them!

Azure App Service for containers

Azure App Service was originally designed to host your web applications and web APIs on a fully managed platform. With the popularity of containers, the capability for running them on Azure App Service was introduced. Originally, only Linux containers were supported, but in 2020, Windows container support was added.

Getting up and running with containers on App Service requires you to point to a registry where your container image is located, and there you go!

At the time of writing this book, Web App for Containers officially only supports running single-container workloads. However, multi-container workloads are currently in preview.

If you are already using Azure App Service for other solutions and now need a single-container workload to run on the technology you are already used to, Azure App Service is worth exploring. In the next chapter, we will do a technical deep-dive into how you can get started, what other technical features Azure App Service for containers provides, and what you need to know before deploying your containers.

Azure Functions for containers

You might associate the term serverless with Azure Functions. And if your heart starts beating faster when you hear about these, you may well ask yourself why not run your containers on Azure Functions? To be fair, Azure Functions is not a platform designed to host your enterprise solution on containers. In fact, it’s the other way around. Let’s explain.

If you are familiar with Azure Functions, you might have noticed that from the outside, the management experience is very similar to Azure App Service. In fact, the technologies in the backend are very similar. The main difference is that Azure Functions is serverless while Azure App Service is not.

The main question is, why would you want to run your code in Azure Functions as a custom Docker container? The answer is quite simple and one of the benefits we have already discussed in a previous section: managing libraries, dependencies, and runtimes. Azure Functions only has certain runtimes available; with a custom container, you can use one that is not part of the default Azure Functions service. You could say that containers are an extension on top of Azure Functions and can be used when you are limited by the capabilities of Azure Functions itself. Where normally you would select a platform to run your containers on, you can now use containers to make the platform work better for you. Containers to the rescue!

Please keep in mind that, at the time of writing, running containers on Azure Functions is only supported for Linux and requires a Premium plan or a Dedicated (App Service) plan.

In Chapter 3, we will explore the technical capabilities of containers on Azure Functions and discuss how you would go about deploying these.

Azure Container Instances

Microsoft’s first serverless container platform is Azure Container Instances. This platform is all about running containers and consuming resources on demand. Even though Azure Container Instances might look and sound like another average container platform, the key to success here is the available integrations with other Azure services.

Azure Container Instances is all about not managing the infrastructure. However, this also means that there is no control over the infrastructure. That is not a bad thing, but it is something that needs to be considered before deploying your containers to Azure Container Instances.

Let’s get back to the integration part of things. As Azure Container Instances is serverless and event-driven by nature, we can trigger it from other Azure services. Perhaps you have a workflow defined in Azure Logic Apps and need to quickly spin up a container, run it, and work with the outcome (a calculation, for example); this can be configured in a few clicks. More complex tasks such as integration with Azure Functions, Azure Queue, and Azure Kubernetes Service are also supported.

And that is something we do need to mention – the integration with Azure Kubernetes Service. Let’s say you have workloads that run on Azure Kubernetes Service but one of the characteristics of your solution is that there happen to be unpredictable bursts in resource requirements. This means we need more containers, more CPU, more memory, and we need it now! Azure Container Instances integrates with Azure Kubernetes Service to provide a form of bursting. If your Azure Kubernetes Service cluster can’t keep up with demand, you can have it automatically burst to Azure Container Instances for the duration of the peak and remove those containers again once they are no longer needed. On top of that, you are billed per second, and only while your containers in Azure Container Instances are running.

We’d call that a perfect addition to an infrastructure that requires flexibility and resiliency.

In Chapter 4, we will dive into all that Azure Container Instances (ACI) has to offer.

Azure Container Apps

Where do we start? Azure Container Apps was announced at Microsoft Ignite 2021 and, at the time of writing, is still in preview. It’s essentially ACI on steroids or the new-found sibling of Azure Kubernetes Service. Azure Container Apps provides a series of Microsoft and community best practices wrapped into a single service that you can run containers on. Azure Container Apps is designed for organizations that need container orchestration but for which Azure Kubernetes Service might be overkill.

Out of the box, Azure Container Apps comes with support for open source services such as Kubernetes Event-driven Autoscaling (KEDA) and Distributed Application Runtime (Dapr), as well as a fully managed Ingress controller.

This means we can just focus on building the containers and run them, as long as we keep in mind to play by the Azure Container Apps rules. It’s great to get accustomed to writing code fit for containers and following best practices without having to worry about infrastructure management. It’s really a stepping stone to building enterprise architectures with containers on Azure.

Chapter 5 will be the main chapter where we will discover what Azure Container Apps has to offer.

Azure Kubernetes Service

First, we had the Azure Container Service, where we could choose between Docker Swarm, Kubernetes, and Distributed Cloud Operating System (DC/OS), but that service was retired. Kubernetes has been the de facto standard for container orchestration for some time, and Microsoft built a managed solution around that called Azure Kubernetes Service. The cool thing is that Microsoft follows the upstream Kubernetes project and adds additional services and integrations with Azure on top of that.

What you get are all the good things that Kubernetes has to offer but with a Microsoft Azure sauce on top of it. This means that everything you can run on Kubernetes, you can run on Azure Kubernetes Service.

Contrary to popular belief, it’s not just for enterprises. Azure Kubernetes Service can already be leveraged for relatively small environments if done correctly.

Azure Kubernetes Service makes running Kubernetes a lot easier. We no longer have to worry about managing and configuring etcd (a highly available key-value store for all cluster data), Kubernetes APIs, and the kubelet – that is now all done for us. Essentially, you get the control plane for free, but you are still responsible for upgrading your Kubernetes versions and your node images, including security patches. However, Microsoft Azure makes this process extremely easy by providing these features with the click of a button.

Azure Kubernetes Service is the answer to the limitations of the previously mentioned services. If your use cases go beyond what those services can do, the answer is usually Azure Kubernetes Service.

With the ability to scale to thousands of nodes, the extensibility of Kubernetes, and the solutions and add-ons that the cloud-native community provides, there is usually no question left unanswered and no challenge left unresolved. This might sound like a very big promise, but give us the time and opportunity to explain in Chapter 6.

Azure Container Registry

Those container images have to come from somewhere. The common technology across all the features mentioned in the previous paragraphs is Azure Container Registry (ACR). Technically, it doesn’t host your containers, but it is the resource you will use to host or even build your container images.

You may even have heard of Docker Hub, which is a public container registry. ACR is basically the same but lives in Microsoft Azure. It can be both a public and private registry. It even has geo-replication support built in.

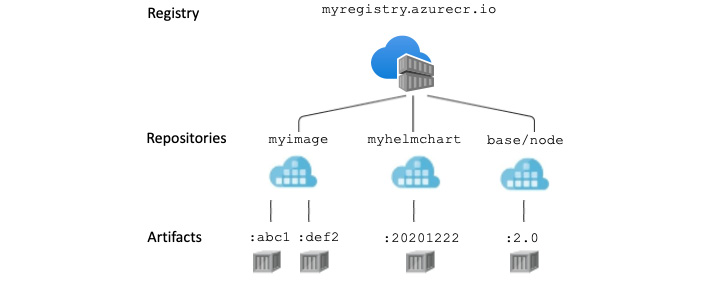

Figure 1.2 – ACR elements

Let’s break this diagram down and take a look at repositories, what they contain, and what additional features ACR provides in general.

Repositories

When we work with container images – for example, when we build a new one – the docker command will be something like docker build -t imagename:tagvalue . (the trailing dot is the build context). When you see imagename, you can think of that as the repository name. Any container image you push to the container registry with the same image name but a different tag value will end up in the same repository. An example would be docker build -t mycontainerapp:v1 . (note that repository names must be lowercase).

You are also able to use namespaces. These help you to easily identify related repositories. If we use the preceding example, imagename:tagvalue, we are able to add a namespace using a forward slash-delimited name. So, imagename could now look like development/app1/imagename:tagvalue. You can see that we have added development/app1. We can add the same prefix to another container image that falls under app1 to help us identify that this container image is part of app1. One thing to note here is that even though we have namespaces, ACR manages all repositories independently. The namespaces do not form a hierarchy.
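To make the relationship between image names, tags, and namespaces more concrete, here is a minimal Python sketch. It is not how ACR is implemented; it only models the naming rules described above. The function names and the example images are our own, and the parsing deliberately ignores references that include a registry host with a port or a digest.

```python
from collections import defaultdict


def split_reference(reference: str) -> tuple[str, str]:
    """Split 'repository:tag' into (repository, tag).

    Simplifying assumption: the reference has no registry host with a port
    (such as 'localhost:5000/app:v1') and no '@sha256:...' digest.
    """
    repository, separator, tag = reference.rpartition(":")
    if not separator:
        # No tag given; Docker falls back to 'latest' in that case.
        return reference, "latest"
    return repository, tag


def group_by_repository(references: list[str]) -> dict[str, list[str]]:
    """Collect the tags of each repository, the way a registry lists them."""
    repositories: dict[str, list[str]] = defaultdict(list)
    for reference in references:
        repository, tag = split_reference(reference)
        repositories[repository].append(tag)
    return dict(repositories)


# Hypothetical images, used only for illustration.
pushed = [
    "mycontainerapp:v1",
    "mycontainerapp:v2",
    "development/app1/imagename:v1",
    "development/app1/otherimage:v1",
]
repositories = group_by_repository(pushed)
for name, tags in repositories.items():
    print(name, tags)
```

The two mycontainerapp tags land in one repository, while the two images under development/app1 remain two separate, flat repository names that merely share a prefix.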

Important note

When tagging container images, it is recommended to follow your versioning policy. Do not depend on the latest tag, as some services do not support it in continuous integration and continuous delivery/deployment scenarios.

ACR tasks

You’re probably familiar with building container images using Docker on your local machine, but did you know ACR actually comes with a suite of features called Azure Container Registry Tasks (ACR Tasks) that allows you to build container images in the cloud? You are able to build not only Linux and Windows containers but also ARM containers. ACR Tasks allows you to extend your development cycle to the cloud – for example, using ACR Tasks to build containers during a DevOps pipeline.

You are able to trigger ACR Tasks automatically in a few ways: through a source code update, through a base image update, or on a schedule. You are also able to trigger them on demand; these are known as quick tasks.

Quick tasks

Most developers want to write code, build an application, and then test it locally before even committing to source control. With containers, you would need a tool such as Docker Desktop installed to be able to build your container image locally. Docker Desktop is a great tool, but you can only build container images that your local machine supports. So, if you are using a Windows machine, you are able to build a Windows image. If you install Windows Subsystem for Linux 2 (WSL 2), you are also able to build Linux container images, but this uses a lot of system resources. The more complex your solution becomes, the more powerful your local machine needs to be to build and run it. To overcome that, you can use ACR quick tasks to build the container image in the cloud. You are also able to run the container image inside ACR Tasks, but at the time of writing, this does not work well with complex container images, and you will have more success and flexibility testing your container on the target infrastructure.

If your DevOps build agents are not running on a machine or container that is capable of creating a container image, then offloading the building of the container image to the cloud using ACR quick tasks is an ideal solution. You just need to log in to your Azure subscription in your pipeline and use the az acr build command instead of docker build.
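As an illustration, a pipeline script could assemble the az acr build call as follows. This is a minimal sketch: the helper function and the registry and image names are hypothetical, and we assume the pipeline has already logged in to Azure. The --registry and --image options are the ones the command uses to name the target registry and the repository:tag to build and push.

```python
import shlex


def acr_build_command(registry: str, image: str, context: str = ".") -> list[str]:
    """Return the argument list for an ACR quick task build.

    --registry names the container registry and --image is the
    'repository:tag' to build and push; the last argument is the build context.
    """
    return ["az", "acr", "build", "--registry", registry, "--image", image, context]


# Hypothetical registry and image names, used only for illustration.
command = acr_build_command("myregistry", "mycontainerapp:v1")
print(shlex.join(command))

# In a pipeline step that has already logged in to Azure, the command could
# be executed with: subprocess.run(command, check=True)
```

Building the command as a list rather than a single string avoids quoting problems when image names or paths come from pipeline variables.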

At the time of writing this book, the ability to build and push a container image directly from the source code, without a Dockerfile, is in preview. This new feature uses an open source tool called Cloud Native Buildpacks (https://buildpacks.io/).

Important note

Note that DevOps build agents are not specifically Azure DevOps build agents; in general, a large number of DevOps solutions (such as Jenkins, Octopus Deploy, and GitLab) support running on containers.

Automated tasks

You are able to connect a public or private Git repository, and optionally a specific branch, in either GitHub or Azure DevOps to an ACR task. By default, when you configure an ACR task to monitor your Git repository, it will run with every commit. You are able to configure it to run on a pull request as well. When you update code in your repository, the ACR task is triggered via a webhook it creates; it then builds the container image and pushes it to the container registry, ready for use. This is extremely useful when doing automated testing in your pipeline.

Container images, just like virtual machines, need to be kept up to date. Now you could do this manually, but that would mean you need to update your base images, then your main images, and so on, which is a lot of work. A base image is the starting image of your container image. It would normally be something like an Ubuntu version with perhaps some added applications. Then, your code is added on top to make your application container image.

ACR Tasks has your back. You are able to create a task that automatically detects when a base image has been updated in your registry or a public registry, such as Docker Hub. Once the task detects that the base image has been updated, it will then create a new version of your container image and push it to the correct repository.

You may need to run a maintenance task to clean old container images out of your repository, or to test a build and push it to your registry. For this, ACR Tasks has scheduled tasks. They are particularly helpful when you need to remove old container images or feature build images, as the purge command comes with a filter option that uses a regex.
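To show what a regex-based purge filter expresses, here is a simplified Python model. It is not the purge command itself: the function, the image data, and the choice to match each pattern against the whole repository or tag name are our own assumptions, made only to illustrate the idea of selecting old feature build images while leaving versioned releases alone.

```python
import re
from datetime import datetime, timedelta, timezone


def select_for_purge(images, repository_pattern, tag_pattern, older_than, now):
    """Return (repository, tag) pairs that match both regexes and are older
    than the cutoff. Assumption: patterns must match the whole name."""
    cutoff = now - older_than
    selected = []
    for repository, tag, pushed_at in images:
        if (
            re.fullmatch(repository_pattern, repository)
            and re.fullmatch(tag_pattern, tag)
            and pushed_at < cutoff
        ):
            selected.append((repository, tag))
    return selected


# Hypothetical registry contents: (repository, tag, time of push).
now = datetime(2024, 1, 31, tzinfo=timezone.utc)
images = [
    ("development/app1/imagename", "feature-login-42", now - timedelta(days=45)),
    ("development/app1/imagename", "feature-search-7", now - timedelta(days=3)),
    ("development/app1/imagename", "v1.4.0", now - timedelta(days=90)),
    ("production/app1/imagename", "feature-old-1", now - timedelta(days=60)),
]

# Select feature builds in development repositories older than 30 days.
purged = select_for_purge(images, r"development/.*", r"feature-.*", timedelta(days=30), now)
print(purged)
```

In this model, only the 45-day-old feature build in the development repository is selected; the recent feature build, the versioned release, and the production image are left untouched.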

Multi-step tasks

You may have some requirements to test your application before it is pushed to a container registry. Multi-step tasks have you covered here. With multi-step tasks, build and push tasks are separated. You have the ability to create a task that can build your application container image and then run it. It can then build and run another container image that has your testing tools inside. This testing container will perform your tests against your running application. If the tests pass, the image is pushed to the container registry in the next step. If the tests fail, the image is not pushed to the container registry.

Multi-step tasks allow you to have more granular control over image building and testing to ensure only good images are pushed to the container registry.
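The gating behavior can be sketched in a few lines of Python. ACR itself defines multi-step tasks in a YAML file, so this is only a model of the control flow; the function and the stand-in build, test, and push callables are hypothetical.

```python
from typing import Callable


def run_multi_step(
    build: Callable[[], str],
    test: Callable[[str], bool],
    push: Callable[[str], None],
) -> bool:
    """Build an image, test it, and push it only when the tests pass."""
    image = build()
    if not test(image):
        # Tests failed: stop here so the image never reaches the registry.
        return False
    push(image)
    return True


# Stand-ins for the real steps; the list plays the role of the registry.
registry: list[str] = []

passed = run_multi_step(
    build=lambda: "mycontainerapp:v2",
    test=lambda image: True,
    push=registry.append,
)
failed = run_multi_step(
    build=lambda: "mycontainerapp:v3",
    test=lambda image: False,
    push=registry.append,
)
print(passed, failed, registry)
```

Because the push step only runs after a successful test step, the image whose tests failed never appears in the registry.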