To understand the difference between monolithic and microservice-based applications, let us consider a real-world example. Imagine that a company runs an online hotel booking business. Customers make and pay for all reservations through the company's web service.

The traditional monolithic architecture for this kind of web application would bundle all the functionality into a single, complex piece of software that might include the following:

- Customer dashboard

- Customer identity and access management

- Search engine for hotels based on criteria

- Billing and integration with payment providers

- Reservation system for hotels

- Ticketing and support chat

A monolithic application tightly couples (bundles) all the business and user logic, and it must be developed and updated as a whole. That means if a change to the billing code has to be made, the entire application has to be updated with that change, then carefully tested and released to the production environment. Every change, even a small one, could potentially break the whole application and impact the business by making it unavailable for an extended time.

With a microservices architecture, this very same application could be split into several smaller pieces that communicate with each other over the network, each fulfilling its own purpose. Billing, for example, could be performed by four smaller services:

- Currency converter

- Credit card provider integration

- Bank wire transfer processing

- Refund processing

Essentially, microservices are a group of small applications where each is responsible for its own small task. These small applications communicate with each other over the network and work together as a part of a larger application.
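To make "small applications communicating over the network" more concrete, here is a minimal sketch of what the currency converter from the billing example could look like, together with a caller that reaches it over HTTP. Everything specific here is an assumption made for illustration: the `/convert` endpoint, the `amount` and `currency` query parameters, the fixed exchange rates, and the function names. A real service would look quite different.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlparse

# Hypothetical fixed rates; a real converter would query a rates provider.
RATES_TO_EUR = {"EUR": 1.0, "USD": 0.5, "GBP": 2.0}

# Call the local service directly, ignoring any proxy set in the environment.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class CurrencyConverterHandler(BaseHTTPRequestHandler):
    """A tiny 'currency converter' microservice with a single endpoint."""

    def do_GET(self):
        url = urlparse(self.path)
        if url.path != "/convert":
            self.send_error(404)
            return
        query = parse_qs(url.query)
        try:
            amount = float(query["amount"][0])
            rate = RATES_TO_EUR[query["currency"][0]]
        except (KeyError, ValueError):
            self.send_error(400, "expected ?amount=<number>&currency=<code>")
            return
        body = json.dumps({"eur": amount * rate}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def start_converter(port=0):
    """Run the converter in a background thread; port 0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), CurrencyConverterHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def price_in_eur(base_url, amount, currency):
    """What another microservice (for example, billing) would do:
    ask the converter over the network instead of calling local code."""
    url = f"{base_url}/convert?amount={amount}&currency={currency}"
    with _opener.open(url, timeout=5) as resp:
        return json.load(resp)["eur"]


if __name__ == "__main__":
    server = start_converter()
    base_url = f"http://127.0.0.1:{server.server_address[1]}"
    print(price_in_eur(base_url, 100, "USD"))
    server.shutdown()
```

The caller only knows the converter's network address and its small HTTP interface, which is what would allow the converter to be developed, released, and scaled separately from the rest of billing.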

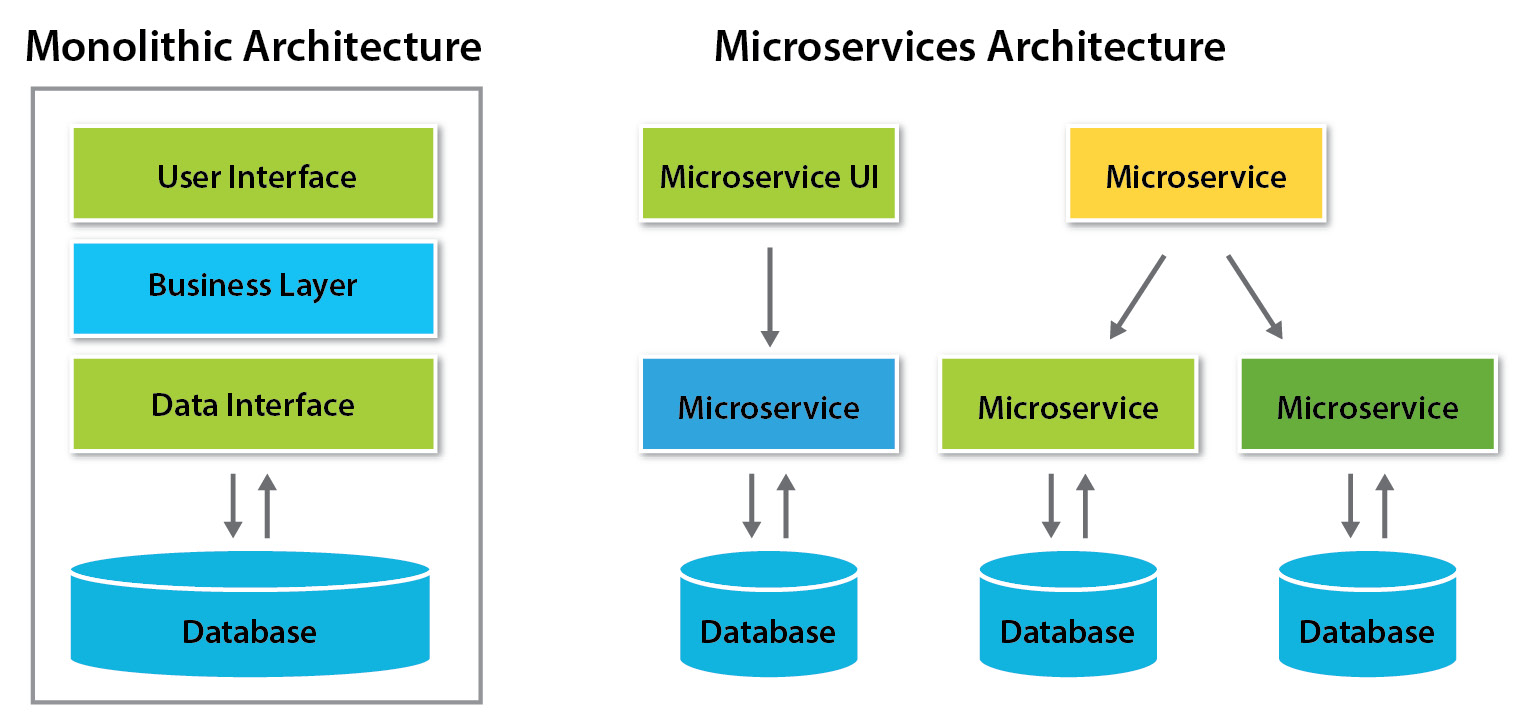

The following figure demonstrates the differences between monolithic and microservice architectures:

Figure 1.4 – Comparison of monolithic and microservice architectures

This way, all other parts of the web application can also be split into multiple smaller independent applications (microservices) communicating over the network. The advantages of this approach include the following:

- Each microservice can be developed by its own team

- Each microservice can be released and updated separately

- Each microservice can be deployed and scaled independently of others

- A single microservice outage will only impact a small part of the overall functionality of the app
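The last point can be illustrated with a short simulation. This is only a sketch under the assumption that the calling code handles failures of individual services. The service functions and the `render_booking_page` helper are hypothetical, and plain function calls stand in for network requests.

```python
def search_hotels():
    """Stand-in for a call to a (hypothetical) hotel search service."""
    return ["Hotel A", "Hotel B"]


def support_chat():
    """Stand-in for a call to a support chat service that is currently down."""
    raise ConnectionError("support chat service is unreachable")


def render_booking_page(services):
    """Collect data from every service; a failing service only
    degrades its own section of the page, not the whole page."""
    page = {}
    for name, call in services.items():
        try:
            page[name] = call()
        except ConnectionError:
            page[name] = "temporarily unavailable"
    return page


if __name__ == "__main__":
    page = render_booking_page({"search": search_hotels, "chat": support_chat})
    print(page)
```

In a monolith, an unhandled failure in the chat code could take the whole process down. Here the search results are still served while the chat section reports that it is unavailable.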

Microservices are an important part of cloud-native architectures, and we will review in detail the benefits as well as the challenges associated with microservices in Chapter 9, Understanding Cloud Native Architectures. For the moment, let’s get back to containers and why they need to be orchestrated.

When each microservice is packaged into a container, the total number of containers can easily reach tens or even hundreds for especially large and complex applications. In such a complex distributed environment, things can quickly get out of our control.

A container orchestration system is what helps us to keep control over a large number of containers. It simplifies the management of containers by grouping application containers into deployments and automating operations such as the following:

- Scaling microservices depending on the workload

- Releasing new versions of microservices and their updates

- Scheduling containers based on host utilization and requirements

- Automatically restarting failed containers or failing traffic over to healthy ones
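The operations above can be pictured as a loop that repeatedly compares a desired state with the actual state and corrects the difference. The following toy sketch shows one pass of such a loop for scaling and for replacing failed containers. It is a deliberately simplified illustration, not how any real orchestrator is implemented, and the `Container` class and `reconcile` function are hypothetical names.

```python
from dataclasses import dataclass, field
from itertools import count

_ids = count(1)  # simple source of unique container ids for the simulation


@dataclass
class Container:
    service: str
    healthy: bool = True
    id: int = field(default_factory=lambda: next(_ids))


def reconcile(desired, running):
    """Return the container list after one pass toward the desired state.

    desired: mapping of service name to wanted replica count
    running: list of Container objects currently known to the system
    """
    result = []
    for service, replicas in desired.items():
        # Failed containers are dropped here and replaced below.
        healthy = [c for c in running if c.service == service and c.healthy]
        # Scale down: keep at most the desired number of replicas.
        healthy = healthy[:replicas]
        # Scale up or replace failures: start new containers until the count matches.
        while len(healthy) < replicas:
            healthy.append(Container(service))
        result.extend(healthy)
    return result


if __name__ == "__main__":
    running = [
        Container("billing"),
        Container("billing", healthy=False),
        Container("search"),
    ]
    state = reconcile({"billing": 3, "search": 1}, running)
    print([(c.service, c.id) for c in state])
```

Raising or lowering a replica count in `desired` models scaling with the workload, while the filter on `healthy` models automatic replacement of failed containers.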

As of today, there are many container and workload orchestration systems available, including these:

- Kubernetes

- OpenShift (its community distribution is OKD, formerly OpenShift Origin)

- HashiCorp Nomad

- Docker Swarm

- Apache Mesos

As you already know from the book title, we will focus only on Kubernetes, and we will not compare these five systems. Kubernetes has an overwhelmingly larger market share than the others and, over the years, has become the de facto platform for orchestrating containers. With a high degree of confidence, you can concentrate on learning about Kubernetes and forget about the others, at least for the moment.
