Overview of this book

Kubernetes is the backbone of modern containerized infrastructure, but scaling it efficiently remains a challenge. Kubernetes Autoscaling is a comprehensive guide that equips cloud professionals to dynamically scale applications and infrastructure using the combination of Kubernetes Event-Driven Autoscaler (KEDA) and Karpenter, AWS’s next-generation cluster autoscaler.

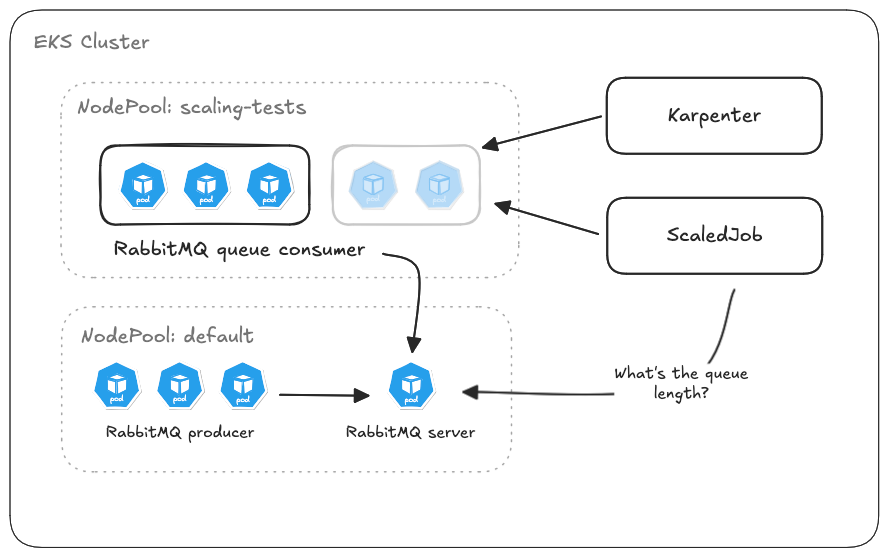

You’ll begin with autoscaling fundamentals, move through HPA and VPA, and then get hands-on with KEDA for event-driven workloads and Karpenter for data plane scaling. With the help of real-world use cases, best practices, and detailed patterns, you’ll deploy resilient, scalable, and cost-effective Kubernetes clusters across production environments.

By the end of this book, you’ll be able to implement practical autoscaling strategies to improve performance, reduce cloud costs, and eliminate over-provisioning.
