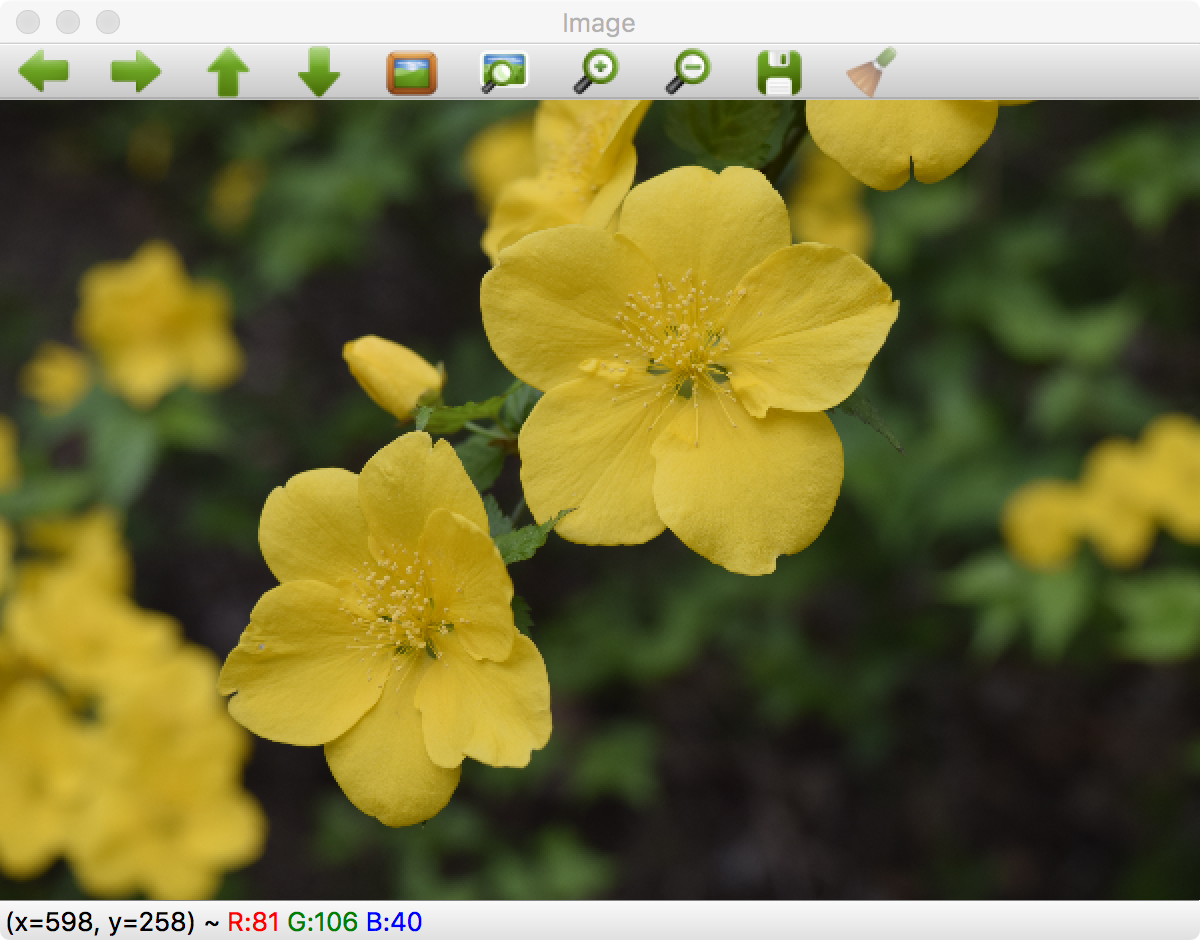

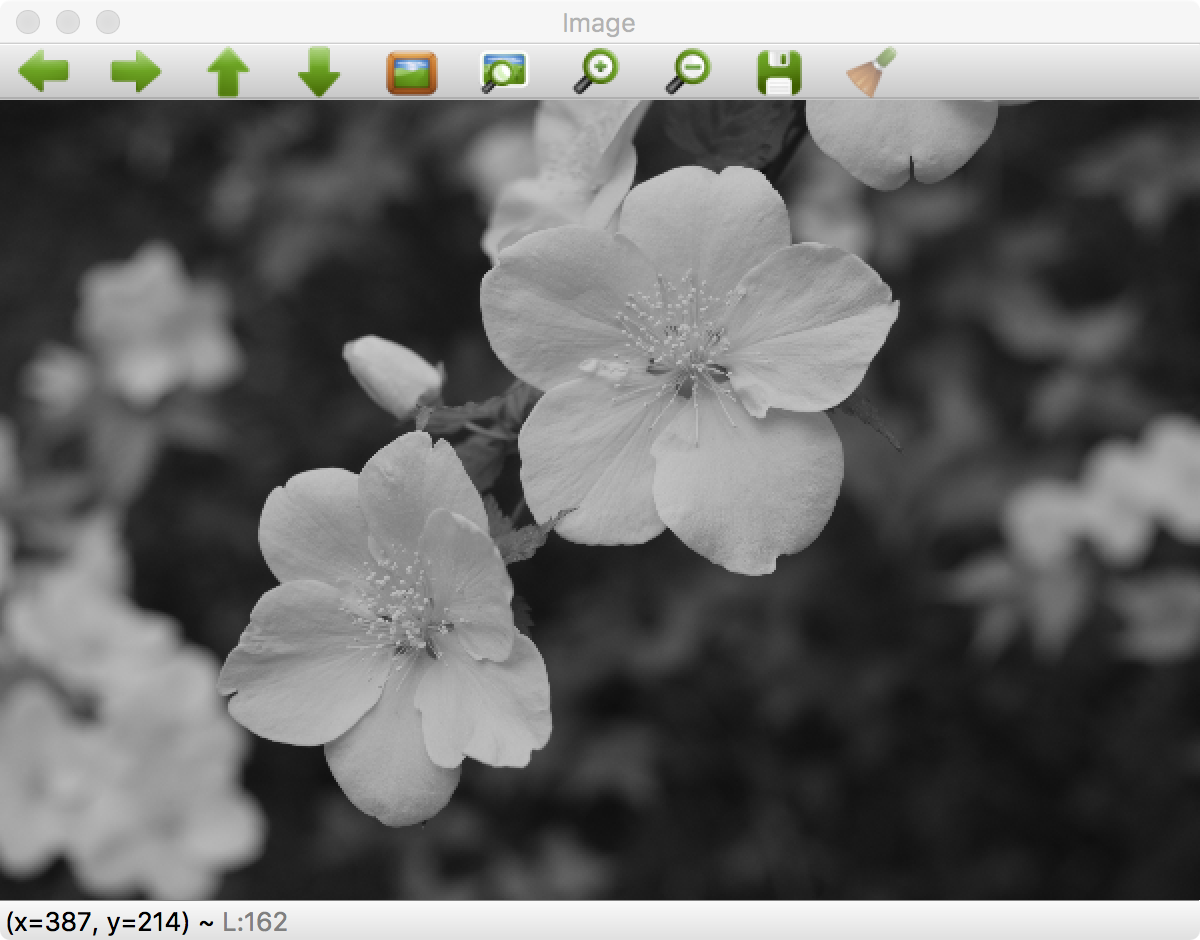

In this section, we will see basic image operations for reading and writing images. We will also see how images are represented digitally.

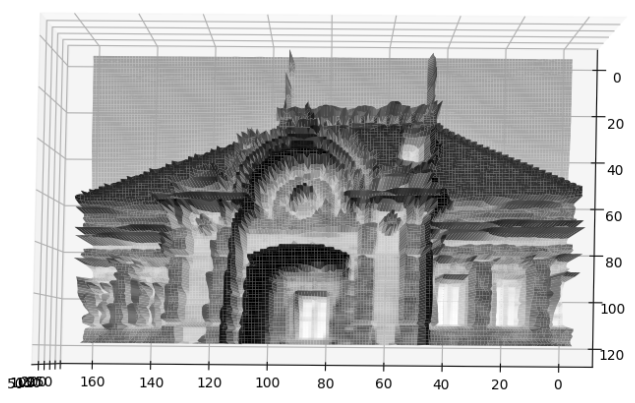

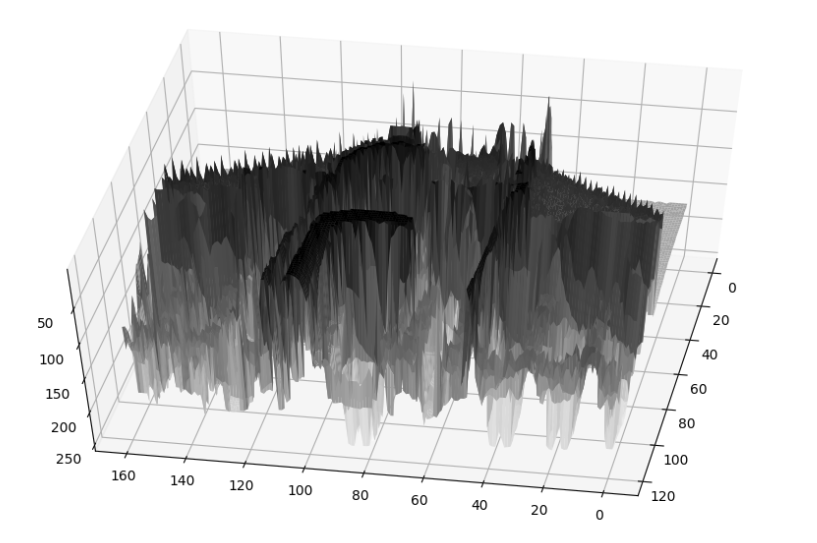

Before we proceed further with image IO, let's see what an image is made up of in the digital world. A grayscale image is simply a two-dimensional array in which each cell, or pixel, holds an intensity value. The simplest case is a black and white image in which each pixel is either 0 (black) or 1 (white); this is also referred to as a binary image. A grayscale image extends this by dividing the range between black and white into finer steps, typically integer intensities from 0 (black) to 255 (white) for 8-bit images. Plotted in three dimensions, with x and y as the pixel location and z as the intensity value, an image of this type looks as follows:
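The following is a minimal NumPy sketch of both representations, using small hypothetical arrays in place of real images. The pixel values and the threshold of 127 are illustrative assumptions. OpenCV loads 8-bit images as NumPy arrays of the same kind: `uint8` values indexed by row, then column.

```python
import numpy as np

# Hypothetical 4x4 binary image: 0 = black, 1 = white
binary = np.array([
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [1, 1, 0, 0],
    [1, 1, 0, 0],
], dtype=np.uint8)

# Hypothetical 4x4 grayscale image: 8-bit intensities from 0 (black) to 255 (white)
gray = np.array([
    [  0,  64, 128, 255],
    [ 32,  96, 160, 224],
    [ 64, 128, 192, 255],
    [ 16,  80, 144, 208],
], dtype=np.uint8)

print(binary.shape, binary.dtype)  # (4, 4) uint8
print(gray.min(), gray.max())      # 0 255

# Indexing is [row, column], i.e. [y, x]
print(gray[0, 3])                  # 255, the top-right pixel

# Reduce the grayscale image to a binary one with a fixed (arbitrary) threshold
to_binary = (gray > 127).astype(np.uint8)
print(to_binary)
```

Thresholding is one simple way to move from the grayscale representation back to the binary one. Pixels brighter than the threshold become 1 (white) and the rest become 0 (black).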

This is the top view. Viewed from the side, the variation in the intensities that make up the image becomes visible:

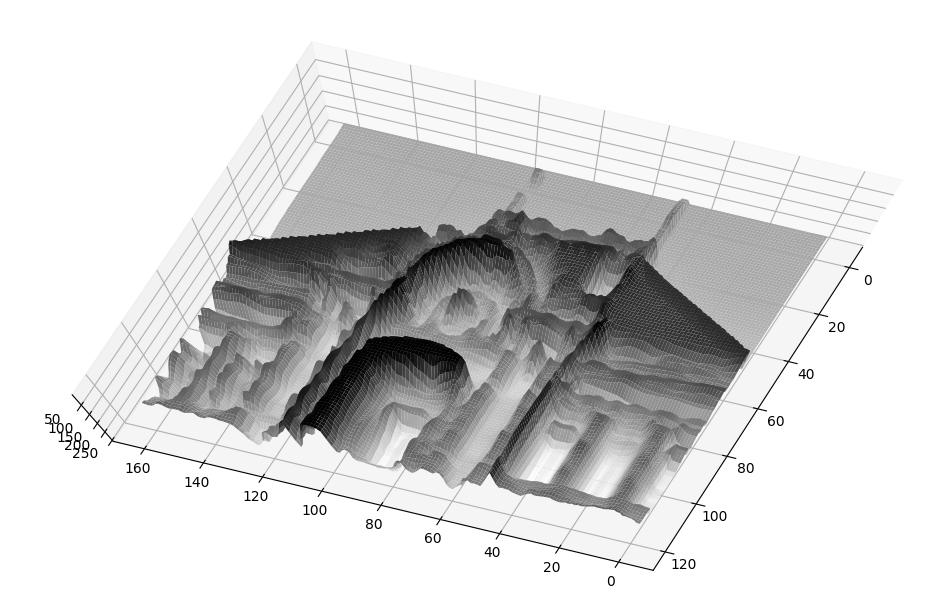

We can see several peaks, and the image intensities are not smooth. Let's apply a smoothing algorithm (the details are covered in Chapter 3, Image Filtering and Transformations in OpenCV):

As we can see, the pixel intensities now form a more continuous surface, even though the object representation does not change significantly. The code to visualize this is as follows (the libraries required to visualize images are described in detail in Chapter 2, Libraries, Development Platforms, and Datasets):

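```python
import numpy as np
import matplotlib.pyplot as plt
# importing Axes3D registers the '3d' projection on older Matplotlib versions (< 3.2)
from mpl_toolkits.mplot3d import Axes3D  # noqa: F401
import cv2

# load and read an image from the path to the file
img = cv2.imread('../figures/building_sm.png')
if img is None:
    raise FileNotFoundError('could not read ../figures/building_sm.png')

# convert the color image (BGR channel order in OpenCV) to grayscale
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# resize the image (optional); cv2.resize takes (width, height),
# so the result has 120 rows and 160 columns
gray = cv2.resize(gray, (160, 120))

# apply a smoothing operation (3x3 box filter)
gray = cv2.blur(gray, (3, 3))

# create a grid of row (xx) and column (yy) indices to plot using numpy
xx, yy = np.mgrid[0:gray.shape[0], 0:gray.shape[1]]

# create the figure with a 3D axis
# (fig.gca(projection='3d') is no longer supported in recent Matplotlib)
fig = plt.figure()
ax = fig.add_subplot(projection='3d')
ax.plot_surface(xx, yy, gray, rstride=1, cstride=1, cmap=plt.cm.gray,
                linewidth=1)

# show it
plt.show()
```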

This code uses the following libraries: NumPy, OpenCV, and matplotlib.
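The smoothing step in the script calls `cv2.blur(gray, (3, 3))`, which is a normalized box filter. Each output pixel becomes the average of the 3×3 neighborhood around it. The NumPy-only sketch below reimplements that idea to show why the intensity surface becomes more continuous.

The following are illustrative assumptions, not OpenCV APIs:

- the helper names `box_blur` and `roughness`
- the synthetic noisy ramp image
- the "roughness" measure itself

Note also that `cv2.blur` keeps the input's data type, so results are rounded for 8-bit images, whereas this sketch returns floats.

```python
import numpy as np

def box_blur(img, k=3):
    """Normalized k x k box filter: each output pixel is the mean of its neighborhood.

    Borders are reflected without repeating the edge pixel (NumPy's 'reflect' mode,
    analogous to OpenCV's default BORDER_REFLECT_101).
    """
    pad = k // 2
    padded = np.pad(img.astype(np.float64), pad, mode="reflect")
    out = np.zeros(img.shape, dtype=np.float64)
    # Sum the k*k shifted copies of the image, then divide by the kernel area
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def roughness(img):
    """Mean absolute difference between vertically and horizontally adjacent pixels."""
    img = img.astype(np.float64)
    return (np.abs(np.diff(img, axis=0)).mean() + np.abs(np.diff(img, axis=1)).mean()) / 2

# Hypothetical noisy image: a smooth left-to-right ramp plus random noise
rng = np.random.default_rng(0)
ramp = np.tile(np.linspace(0, 200, 64), (48, 1))
noisy = np.clip(ramp + rng.normal(0, 25, ramp.shape), 0, 255)

smoothed = box_blur(noisy, k=3)
print(f"roughness before: {roughness(noisy):.1f}")
print(f"roughness after:  {roughness(smoothed):.1f}")
```

Averaging neighboring pixels pulls isolated peaks toward their surroundings, so the differences between adjacent pixels shrink. This is the "more continuous" surface seen in the smoothed plot. A larger kernel smooths more aggressively, at the cost of blurring edges in the object.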

In the following sections of this chapter, we will see operations on images that use their color properties. Please download the relevant images from the website to view them clearly.
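Color images extend the array idea with a third axis. `cv2.imread` returns a color image as an array of shape (rows, columns, 3), with the channels stored in blue, green, red (BGR) order. The `cv2.COLOR_BGR2GRAY` conversion used in the earlier script collapses the three channels with the weighted sum documented by OpenCV:

Y = 0.299 R + 0.587 G + 0.114 B

The NumPy sketch below applies that formula to a tiny hypothetical image. The helper `bgr_to_gray` is an illustrative name, not an OpenCV function. OpenCV's own implementation uses fixed-point arithmetic, so its results may differ slightly at rounding boundaries.

```python
import numpy as np

# Hypothetical 2x2 color image in OpenCV's BGR channel order, shape (rows, cols, 3)
bgr = np.array([
    [[255,   0,   0], [  0, 255,   0]],   # pure blue, pure green
    [[  0,   0, 255], [255, 255, 255]],   # pure red, white
], dtype=np.uint8)

def bgr_to_gray(img):
    """Weighted sum Y = 0.299 R + 0.587 G + 0.114 B, rounded back to 8-bit."""
    b, g, r = img[..., 0], img[..., 1], img[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return np.clip(np.rint(y), 0, 255).astype(np.uint8)

gray = bgr_to_gray(bgr)
print(bgr.shape)   # (2, 2, 3)
print(gray.shape)  # (2, 2)
print(gray)
```

The unequal weights reflect that the eye is most sensitive to green and least sensitive to blue. As a result, pure green maps to a much brighter gray than pure blue.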