In this section, we will look at sharing data between your Docker container and your desktop. We'll cover the security settings needed to allow access, run a self-test to make sure those settings are correct, and finally build our actual Dockerfile.

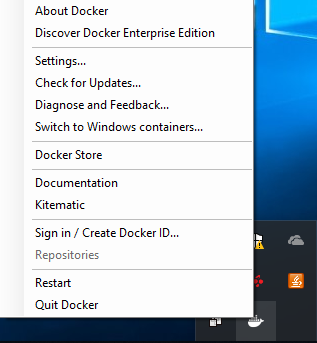

Assuming you have Docker installed and running, open the Docker settings from the cute little whale icon. Go to the lower right of your taskbar, right-click the whale, and select Settings...:

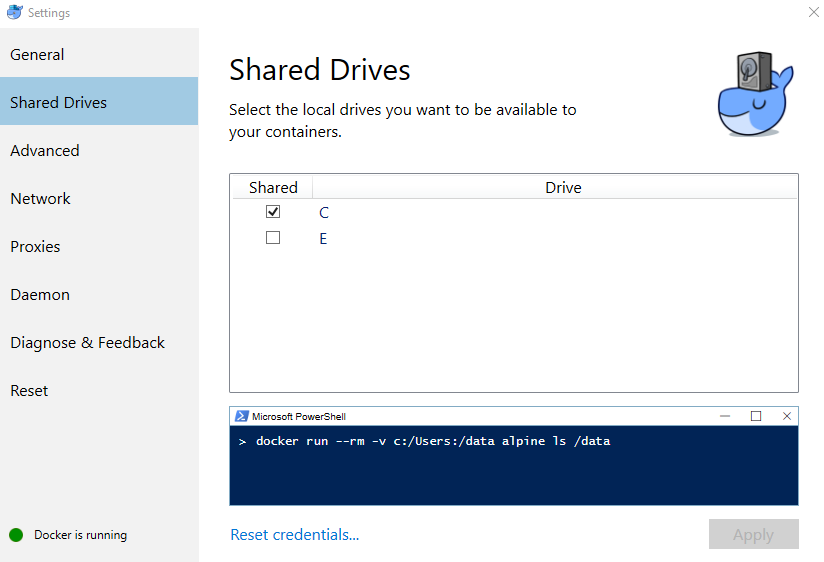

There are a few security settings we need to get right for our VOLUME to work, so that our Docker container can see our local hard drive. I've opened this setting from the whale; select and copy the test command shown in the dialog, which we'll use later, and then click Apply.

Clicking Apply pops up a new window asking for a password. Entering it allows Docker to map a shared drive back to our PC, so that the PC's hard drive is visible from within the container. This shared location is where we'll work on and edit files, so that our work is saved.

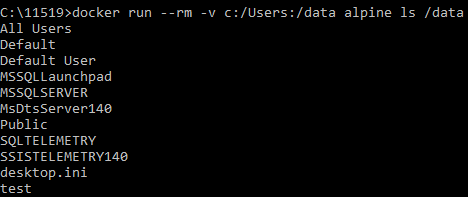

Now paste the command you copied from the dialog into the Command Prompt (or just type it in). It runs a test container to make sure that our Docker installation can actually see local hard drives:

```
C:\11519>docker run --rm -v c:/Users:/data alpine ls /data
```

The -v switch maps c:/Users, which is on our local PC, to /data, which is inside the container; this mapping is the volume. alpine is the small Linux test image the container runs, ls /data lists the contents of the mounted volume, and --rm removes the container once the command exits. The first time you run this, Docker downloads the alpine image and then runs the ls command, and the listing shows that we have access:
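The spec c:/Users:/data contains two colons, and only the second one separates the host path from the container path. The sketch below is a simplified, hypothetical helper (split_volume_spec is not part of Docker, and Docker's real parser also handles named volumes and options such as :ro); it only illustrates how the Windows drive-letter colon is skipped.

```python
import re

# Hypothetical helper for illustration; not Docker's actual volume parser.
_DRIVE = re.compile(r"^[A-Za-z]:")

def split_volume_spec(spec: str) -> tuple[str, str]:
    """Split a HOST:CONTAINER volume spec into its two paths."""
    # Skip a leading Windows drive letter such as "c:" so its colon
    # is not mistaken for the host/container separator.
    start = 2 if _DRIVE.match(spec) else 0
    sep = spec.find(":", start)
    if sep == -1:
        raise ValueError(f"expected HOST:CONTAINER, got {spec!r}")
    return spec[:sep], spec[sep + 1:]

host, container = split_volume_spec("c:/Users:/data")
print(f"host={host} container={container}")
```

On a Linux-style spec such as /home:/data there is no drive letter, so the first colon is the separator.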

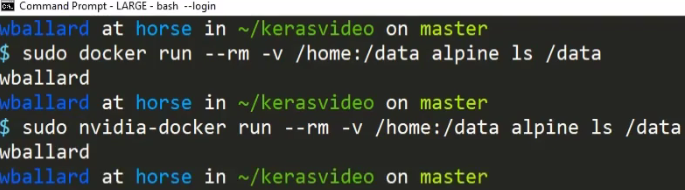

Note that if you are running on Linux, you won't need any of these steps; depending on which part of the filesystem you are sharing, you may just need to run your Docker command with sudo. Here, we're running both docker and nvidia-docker to make sure that we have access to our home directories:
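If you want to script the same check on a Linux host, a minimal sketch is to build the command as an argument list. The helper name volume_test_argv and the /home path are assumptions for illustration; the flags are the same ones used in the Windows test above.

```python
import shlex

def volume_test_argv(host_dir, use_sudo=True, nvidia=False):
    """Hypothetical helper: argv for the alpine volume test on Linux."""
    docker = "nvidia-docker" if nvidia else "docker"
    argv = [docker, "run", "--rm", "-v", f"{host_dir}:/data", "alpine", "ls", "/data"]
    # Prefix with sudo when the Docker daemon needs elevated access.
    return ["sudo"] + argv if use_sudo else argv

# Print the docker and nvidia-docker variants (assumes homes live under /home).
for nvidia in (False, True):
    print(shlex.join(volume_test_argv("/home", nvidia=nvidia)))
```

Passing either list to subprocess.run(argv, check=True) would actually execute the test; keeping it as a list avoids shell-quoting problems with paths that contain spaces.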

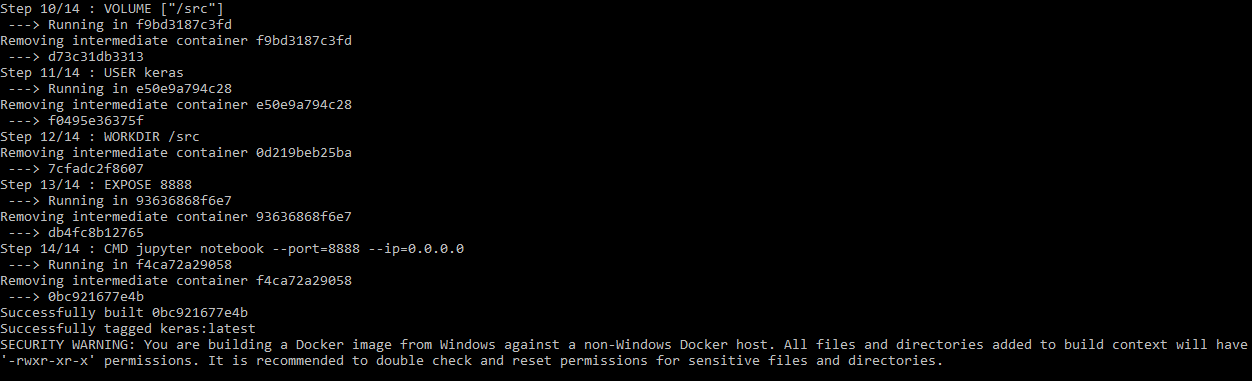

Now we're going to build our image with the docker build command, using -t to tag it with the name keras. Run the following command from the directory that contains the Dockerfile; the trailing . makes the current directory the build context:

```
C:\11519>docker build -t keras .
```

This runs relatively quickly for me because I've built this image on this computer before, so many of its layers are cached.
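Why a rebuild can be fast can be shown with a toy model of a layer cache: each instruction's cache key depends on the key of the layer before it, so an unchanged prefix of the Dockerfile is reused and everything after the first change is rebuilt. This is a simplification, not Docker's implementation (for example, Docker also checks file contents for COPY and ADD), and the Dockerfile lines are hypothetical.

```python
import hashlib

def layer_keys(instructions):
    """Toy cache key per instruction: hash of the parent key plus the instruction text."""
    keys, parent = [], ""
    for line in instructions:
        parent = hashlib.sha256((parent + "\n" + line).encode()).hexdigest()
        keys.append(parent)
    return keys

def build(instructions, cache):
    """Count instructions served from the cache, then remember every key."""
    hits = 0
    for key in layer_keys(instructions):
        if key in cache:
            hits += 1
        cache.add(key)
    return hits

# Hypothetical Dockerfile lines, for illustration only.
dockerfile = ["FROM ubuntu", "RUN pip install keras", "COPY . /src"]
edited = ["FROM ubuntu", "RUN pip install keras jupyter", "COPY . /src"]

cache = set()
first = build(dockerfile, cache)   # nothing cached yet
second = build(dockerfile, cache)  # every instruction cached
third = build(edited, cache)       # only the FROM line is reused
print(first, second, third)        # prints: 0 3 1
```

Because the keys are chained, editing the middle instruction also invalidates the COPY line after it, even though that line's text did not change.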

Conveniently, the build command on Linux is exactly the same as on Windows. However, you may choose to build with nvidia-docker if you're working with GPU support on your Linux host. So, what does docker build do? It reads the Dockerfile and executes its instructions, downloading packages, creating the filesystem, and running commands, and then saves all of those changes as layers of a virtual filesystem so that you can reuse them later. Every container you run from the image starts from the state captured when you ran the build, so every run is consistent.
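The idea that every run starts from the build-time state can be sketched with Python's ChainMap: the image is a stack of read-only layers, and each container gets its own empty writable layer on top. This is a toy model of a layered, copy-on-write filesystem, not how Docker's storage drivers work, and the layer contents are hypothetical.

```python
from collections import ChainMap

# Toy model: each layer maps path -> content; the image is a read-only stack.
base_layer = {"/etc/os-release": "ubuntu"}       # hypothetical FROM layer
keras_layer = {"/usr/lib/keras": "installed"}    # hypothetical RUN layer
image = (keras_layer, base_layer)                # topmost layer first

def run_container(layers):
    """Start a container: a fresh, empty writable layer on top of the image."""
    return ChainMap({}, *layers)

c1 = run_container(image)
c1["/src/scratch.txt"] = "work in progress"  # writes land in c1's own layer only

c2 = run_container(image)
print("/src/scratch.txt" in c1, "/src/scratch.txt" in c2)  # prints: True False
print(c2["/usr/lib/keras"])                                 # prints: installed
```

A new container started from the same image never sees c1's scratch file, which is why the shared volume is the place to save work you want to keep.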

Now that we have built our Docker image, we'll move on to the next section, where we'll set up and run a REST service with the Jupyter Notebook.