Machine learning can be divided into many subcategories; two broad categories are supervised and unsupervised learning. These categories contain some of the most popular and widely used machine learning methods. In this section, we present both, along with some toy examples of each.

Supervised and unsupervised learning

Supervised learning

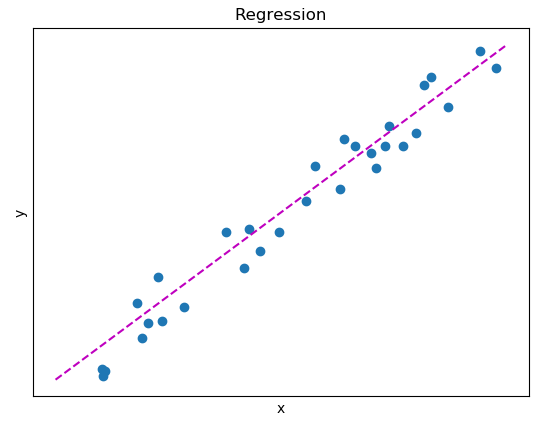

In examples such as those in the previous section, the data consisted of some features and a target, regardless of whether the target was quantitative (regression) or categorical (classification). We call such a dataset a labeled dataset. When we try to produce a model from a labeled dataset in order to make predictions about unseen or future data (for example, to diagnose a new tumor case), we make use of supervised learning. In simple cases, a supervised learning model can be visualized as a line, whose purpose is either to separate the data according to the target (in classification) or to closely follow the data (in regression).
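The supervised workflow described above (learn from labeled data, then predict for unseen cases) can be sketched with scikit-learn's copy of the breast cancer dataset. This is a minimal sketch, not the chapter's own code: the choice of a standardized logistic regression as the model, the 25% hold-out split standing in for "unseen" tumor cases, and `random_state=0` are all our assumptions.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Labeled dataset: X holds the 30 features, y the target (0 = malignant, 1 = benign)
X, y = load_breast_cancer(return_X_y=True)

# Hold out a quarter of the cases to play the role of unseen, future data (assumed split)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Learn a model from the labeled training data (model choice is an assumption)
model = make_pipeline(StandardScaler(), LogisticRegression())
model.fit(X_train, y_train)

# Predict the diagnosis of cases the model has never seen
predictions = model.predict(X_test)
accuracy = model.score(X_test, y_test)
print(f"Accuracy on unseen cases: {accuracy:.3f}")
```

Any classifier with `fit` and `predict` could replace the logistic regression here; the point is only that the target is available during training and absent at prediction time.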

Consider a simple regression example. Here, y is the target and x is the dataset's single feature. Our model consists of the simple equation y = 2x - 5, and, as the figure shows, the line closely follows the data. To estimate the y value of a new, unseen point, we plug its x value into this formula. The following figure shows a simple regression with y = 2x - 5 as the predictive model:
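The prediction step above amounts to evaluating y = 2x - 5 at a new x. The sketch below does that, and then shows that a least-squares line fit (`np.polyfit`, our choice; the text does not say how the line was obtained) recovers roughly the same slope and intercept from noisy data. The data and the helper name `predict` are hypothetical.

```python
import numpy as np

def predict(x_new, slope=2.0, intercept=-5.0):
    """Hypothetical helper: estimate the target with the line y = slope * x + intercept."""
    return slope * np.asarray(x_new, dtype=float) + intercept

# The model from the text, y = 2x - 5, applied to an unseen point x = 4
print(predict(4.0))  # → 3.0

# Hypothetical noisy data scattered around y = 2x - 5
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=50)
y = 2 * x - 5 + rng.normal(scale=1.0, size=50)

# Least-squares line fit; for deg=1, np.polyfit returns [slope, intercept]
slope, intercept = np.polyfit(x, y, deg=1)
print(f"Fitted line: y = {slope:.2f}x + {intercept:.2f}")
```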

The next example is a simple classification problem. Here, the dataset features are x and y, while the target is the instance's color. Again, the dotted line is y = 2x - 5, but this time we test whether each point lies above or below the line. If the point's y value is lower than the line's value at that x (that is, smaller than 2x - 5), we expect it to be orange; if it is higher, we expect it to be blue. The following figure shows a simple classification with y = 2x - 5 as the decision boundary:
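The decision rule above can be written in a few lines. In this sketch, the function name `classify` is hypothetical, and assigning points that lie exactly on the line to blue is our assumption, since the text does not cover that case.

```python
import numpy as np

def classify(points):
    """Label each (x, y) point by its position relative to the line y = 2x - 5."""
    points = np.asarray(points, dtype=float)
    x, y = points[:, 0], points[:, 1]
    boundary = 2 * x - 5  # the y value the line gives at each x
    # Below the line (smaller y) -> orange; on or above it -> blue (tie rule assumed)
    return np.where(y < boundary, "orange", "blue")

print(classify([[3, 0], [3, 4], [0, -6]]))  # → ['orange' 'blue' 'orange']
```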

Unsupervised learning

In both regression and classification, we have a clear understanding of how the data is structured or how it behaves, and our goal is simply to model that structure or behavior. In some cases, however, we do not know how the data is structured. In those cases, we can use unsupervised learning to discover the structure, and thus the information, within the data. The simplest form of unsupervised learning is clustering. As the name implies, clustering techniques attempt to group (or cluster) data instances, so that instances belonging to the same cluster share many similarities in their features, while being dissimilar to instances that belong to other clusters. A simple example with three clusters is depicted in the following figure. Here, the dataset features are x and y, and there is no target.

The clustering algorithm discovered three distinct groups, centered around the points (0, 0), (1, 1), and (2, 2):
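A comparable toy example can be reproduced with k-means, one common clustering algorithm; the text does not name the algorithm it used, so this choice, the synthetic data from `make_blobs`, and the spread `cluster_std=0.15` are all assumptions. Note that the generated labels are discarded, so the algorithm sees only x and y.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Synthetic, unlabeled data: three groups around (0, 0), (1, 1) and (2, 2)
true_centers = [[0, 0], [1, 1], [2, 2]]
X, _ = make_blobs(n_samples=300, centers=true_centers,
                  cluster_std=0.15, random_state=0)  # labels thrown away

# Ask k-means for three clusters; no target is involved
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
labels = kmeans.fit_predict(X)

# Sort the discovered centers by x so they line up with the true ones
centers = kmeans.cluster_centers_[np.argsort(kmeans.cluster_centers_[:, 0])]
print(np.round(centers, 2))
```

Because k-means numbers its clusters arbitrarily, the centers are sorted before comparing them with the points the groups were generated around.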

Dimensionality reduction

Another form of unsupervised learning is dimensionality reduction. The number of features present in a dataset equals the dataset's number of dimensions. Often, many features are correlated, noisy, or simply do not provide much information, yet they still add to the cost of storing and processing the data, which grows with the dataset's dimensionality. Thus, by reducing the dataset's dimensions, we can lower these costs and help the algorithms to better model the data.
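To see why correlated or uninformative features can be dropped, consider a hypothetical five-feature dataset that is really driven by only two underlying signals. The sketch below uses principal component analysis (PCA), a widely used dimensionality-reduction method that the text does not itself name; the data is invented purely for illustration.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)
n = 500
# Two hidden signals that actually carry the information (hypothetical)
a = rng.normal(size=n)
b = rng.normal(size=n)

# Five observed features: correlated combinations of the signals plus a noisy one
X = np.column_stack([
    a,
    2 * a + rng.normal(scale=0.05, size=n),      # strongly correlated with a
    b,
    a - b + rng.normal(scale=0.05, size=n),      # combination of a and b
    rng.normal(scale=0.05, size=n),              # small noise, little information
])

# Keep only two components
pca = PCA(n_components=2)
X_reduced = pca.fit_transform(X)
print(X.shape, "->", X_reduced.shape)
print(f"Fraction of variance kept: {pca.explained_variance_ratio_.sum():.3f}")
```

Almost all of the variance survives the reduction from five to two dimensions, because three of the original features added little beyond what the two signals already provide.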

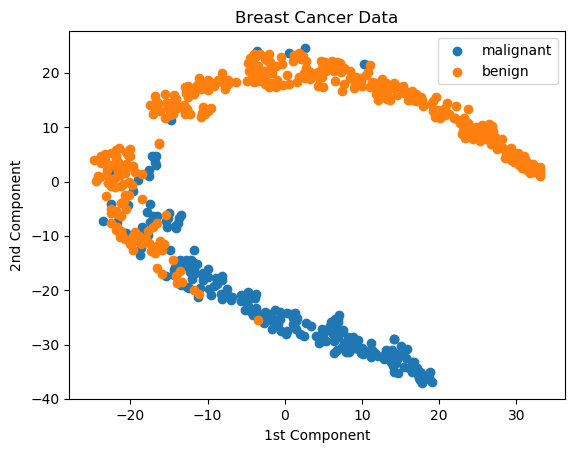

Another use of dimensionality reduction is the visualization of high-dimensional datasets. For example, using the t-distributed Stochastic Neighbor Embedding (t-SNE) algorithm, we can reduce the 30-dimensional breast cancer dataset to two dimensions, or components. Although it is not possible to plot 30 dimensions directly, two are easy to visualize.
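The reduction described above can be sketched with scikit-learn's `TSNE`. Standardizing the features first and fixing `random_state=0` are our assumptions, not steps stated in the text; t-SNE itself does not require them.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.manifold import TSNE
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
print(X.shape)  # → (569, 30)

# Put all 30 features on a common scale before embedding (assumed preprocessing)
X_scaled = StandardScaler().fit_transform(X)

# Reduce 30 dimensions to two t-SNE components
tsne = TSNE(n_components=2, random_state=0)
X_2d = tsne.fit_transform(X_scaled)
print(X_2d.shape)  # → (569, 2)
```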

Furthermore, we can visually test whether the information contained within the dataset can be used to separate the dataset's classes. The next figure depicts the two components on the x and y axes, while the color represents each instance's class. Although we cannot plot all of the dimensions, plotting the two components shows that the classes are separable to some degree:
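As a rough numerical complement to the visual check, one can ask how well a point's class is predicted by its neighbors in the two-dimensional t-SNE plot. This is our own illustrative addition, not the chapter's method: the k-nearest-neighbors classifier, `n_neighbors=5`, and 5-fold cross-validation are assumptions. Because t-SNE was fitted on all instances, the score is optimistic and should be read only as a hint of separability.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.manifold import TSNE
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# Same two-component embedding as a plot would use (standardization assumed)
X_2d = TSNE(n_components=2, random_state=0).fit_transform(
    StandardScaler().fit_transform(X)
)

# Points of each class, i.e. the two colors of a scatter plot
malignant, benign = X_2d[y == 0], X_2d[y == 1]
print(len(malignant), "malignant,", len(benign), "benign")

# Neighbor-based accuracy in 2D: high values suggest the classes are well separated
scores = cross_val_score(KNeighborsClassifier(n_neighbors=5), X_2d, y, cv=5)
print(f"Mean 5-fold accuracy in 2D: {scores.mean():.3f}")
```

To draw the figure itself, `X_2d[:, 0]` and `X_2d[:, 1]` can be passed to a scatter plot with the colors taken from `y`.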