Overview of this book

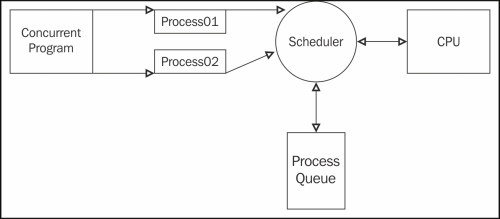

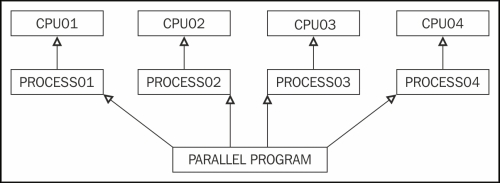

Starting with the basics of parallel programming, you will proceed to learn about how to build parallel algorithms and their implementation. You will then gain the expertise to evaluate problem domains, identify if a particular problem can be parallelized, and how to use the Threading and Multiprocessor modules in Python.

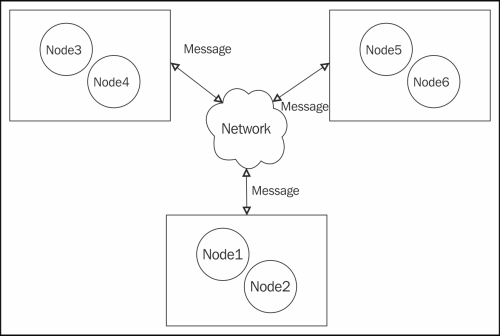

The Python Parallel (PP) module, which is another mechanism for parallel programming, is covered in depth to help you optimize the usage of PP. You will also delve into using Celery to perform distributed tasks efficiently and easily. Furthermore, you will learn about asynchronous I/O using the asyncio module. Finally, by the end of this book you will acquire an in-depth understanding about what the Python language has to offer in terms of built-in and external modules for an effective implementation of Parallel Programming.

This is a definitive guide that will teach you everything you need to know to develop and maintain high-performance parallel computing systems using the feature-rich Python.

Free Chapter

Free Chapter