"Data! Data! Data!" he cried impatiently. "I can't make bricks without clay." | ||

-- Sherlock Holmes, The Adventure of the Copper Beeches

Everything you do with a computer is managing data. Data comes in many different shapes and flavors. It's the music you listen to, the movies you stream, the PDFs you open. Even the chapter you're reading at this very moment is just a file, which is data.

Data can be simple, an integer number to represent an age, or complex, like an order placed on a website. It can be about a single object or about a collection of them.

Data can even be about data, that is, metadata: data that describes the design of other data structures, or data that describes application data or its context.

In Python, objects are abstractions for data, and Python has an amazing variety of data structures that you can use to represent data, or combine to create your own custom data. Before we delve into the specifics, I want you to be very clear about objects in Python, so let's talk a little bit more about them.

As we already said, everything in Python is an object. But what really happens when you type an instruction like age = 42 in a Python module?

Tip

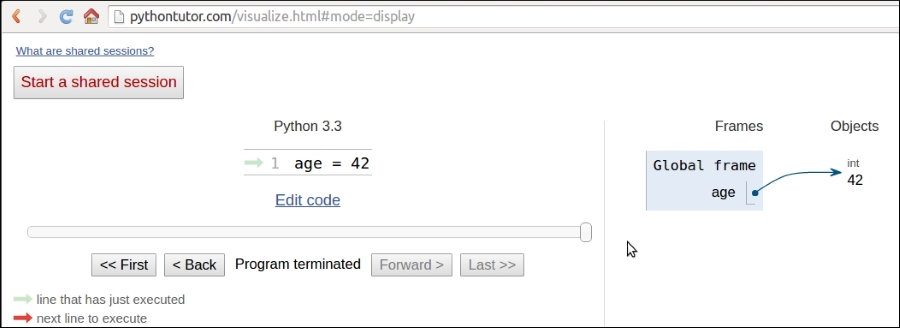

If you go to http://pythontutor.com/, you can type that instruction into a text box and get its visual representation. Keep this website in mind; it's very useful for consolidating your understanding of what goes on behind the scenes.

So, what happens is that an object is created. It gets an id, the type is set to int (integer number), and the value to 42. A name age is placed in the global namespace, pointing to that object. Therefore, whenever we are in the global namespace, after the execution of that line, we can retrieve that object by simply accessing it through its name: age.

If you were to move house, you would put all the knives, forks, and spoons in a box and label it cutlery. Can you see it's exactly the same concept? Here's a screenshot of what it may look like (you may have to tweak the settings to get to the same view):

So, for the rest of this chapter, whenever you read something such as name = some_value, think of a name placed in the namespace that is tied to the scope in which the instruction was written, with a nice arrow pointing to an object that has an id, a type, and a value. There is a little bit more to say about this mechanism, but it's much easier to talk about it over an example, so we'll get back to this later.
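You can poke at this picture from Python itself. The following is only a small sketch: the second name, years, is a hypothetical addition of mine, used to show two labels attached to the same box. The actual id values will differ from machine to machine, so the code compares them instead of printing them.

```python
age = 42
years = age                   # a second label on the same box

print(type(age))              # the type of the object: <class 'int'>
print(age)                    # its value: 42
print(years is age)           # True: both names point to one object
print(id(years) == id(age))   # True: one object, therefore one id
```

Both names live in the same namespace and point to the very same object, which is why the is operator and the id comparison agree.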

A first fundamental distinction that Python makes on data is about whether or not the value of an object changes. If the value can change, the object is called mutable, while if the value cannot change, the object is called immutable.

It is very important that you understand the distinction between mutable and immutable because it affects the code you write, so here's a question:

    >>> age = 42
    >>> age
    42
    >>> age = 43  #A
    >>> age
    43

In the preceding code, on the line #A, have I changed the value of age? Well, no. But now it's 43 (I hear you say...). Yes, it's 43, but 42 was an integer number, of the type int, which is immutable. So, what happened is really that on the first line, age is a name that is set to point to an int object, whose value is 42. When we type age = 43, what happens is that another object is created, of the type int and value 43 (also, the id will be different), and the name age is set to point to it. So, we didn't change that 42 to 43. We actually just pointed age to a different location: the new int object whose value is 43. Let's see the same code also printing the IDs:

    >>> age = 42
    >>> id(age)
    10456352
    >>> age = 43
    >>> id(age)
    10456384

Notice that we print the IDs by calling the built-in id function. As you can see, they are different, as expected. Bear in mind that age points to one object at a time: 42 first, then 43. Never together.
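The same rebinding happens with augmented assignment, which is where it tends to surprise people. A minimal sketch, assuming CPython (where id returns the object's memory address); the name before is just a helper I introduce to remember the old id:

```python
age = 42
before = id(age)            # remember which object age points to

age += 1                    # looks like an in-place change, but it is a rebinding
print(age)                  # 43
print(id(age) == before)    # False: age now points to a different int object
```

The int object 42 was never touched; age simply moved to another object.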

Now, let's see the same example using a mutable object. For this example, let's just use a Person object that has an age attribute:

    >>> fab = Person(age=39)
    >>> fab.age
    39
    >>> id(fab)
    139632387887456
    >>> fab.age = 29  # I wish!
    >>> id(fab)
    139632387887456  # still the same id

In this case, I set up an object fab whose type is Person (a custom class). On creation, the object is given the age of 39. I'm printing it, along with the object id, right afterwards. Notice that, even after I change age to be 29, the ID of fab stays the same (while the ID of age has changed, of course). Custom objects in Python are mutable (unless you code them not to be). Keep this concept in mind; it's very important. I'll remind you about it throughout the rest of the chapter.
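The Person class itself is not shown in the session above. Here is a minimal sketch of what it could look like, assuming a plain class with a single age attribute; the class body and the id_before helper are my assumptions, not the original definition:

```python
class Person:
    """Hypothetical stand-in for the Person class used in the session."""

    def __init__(self, age):
        self.age = age


fab = Person(age=39)
id_before = id(fab)             # remember the identity of the object

fab.age = 29                    # change the state of the object in place
print(fab.age)                  # 29
print(id(fab) == id_before)     # True: same object, different state
```

Compare this with the int example: there the name moved to a new object, while here the object stays put and only its content changes.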

Let's start by exploring Python's built-in data types for numbers. Python was designed by a man with a master's degree in mathematics and computer science, so it's only logical that it has amazing support for numbers.

Numbers are immutable objects.

Integers

Python integers have unlimited range, subject only to the available virtual memory. This means that it doesn't really matter how big a number you want to store: as long as it can fit in your computer's memory, Python will take care of it. Integer numbers can be positive, negative, and 0 (zero). They support all the basic mathematical operations, as shown in the following example:

    >>> a = 12
    >>> b = 3
    >>> a + b  # addition
    15
    >>> b - a  # subtraction
    -9
    >>> a // b  # integer division
    4
    >>> a / b  # true division
    4.0
    >>> a * b  # multiplication
    36
    >>> b ** a  # power operator
    531441
    >>> 2 ** 1024  # a very big number, Python handles it gracefully
    17976931348623159077293051907890247336179769789423065727343008115
    77326758055009631327084773224075360211201138798713933576587897688
    14416622492847430639474124377767893424865485276302219601246094119
    45308295208500576883815068234246288147391311054082723716335051068
    4586298239947245938479716304835356329624224137216

The preceding code should be easy to understand. Just notice one important thing: Python has two division operators. One performs the so-called true division (/), which returns the quotient of the operands, and the other performs the so-called integer division (//), which returns the floored quotient of the operands. See how the two differ for positive and negative numbers:

    >>> 7 / 4  # true division
    1.75
    >>> 7 // 4  # integer division, flooring returns 1
    1
    >>> -7 / 4  # true division again, result is opposite of previous
    -1.75
    >>> -7 // 4  # integer div., result not the opposite of previous
    -2

This is an interesting example. If you were expecting a -1 on the last line, don't feel bad, it's just the way Python works. The result of an integer division in Python is always rounded towards minus infinity. If instead of flooring you want to truncate a number to an integer, you can use the built-in int function, as shown in the following example:

    >>> int(1.75)
    1
    >>> int(-1.75)
    -1

Notice that truncation is done towards 0.
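To put flooring and truncation side by side, here is a small sketch that uses math.floor and math.trunc from the standard library next to the built-in int. The choice of sample values is mine:

```python
import math

for value in (1.75, -1.75):
    # floor rounds towards minus infinity, trunc and int round towards 0
    print(value, math.floor(value), math.trunc(value), int(value))
# 1.75 1 1 1
# -1.75 -2 -1 -1

print(-7 // 4 == math.floor(-7 / 4))   # True: // floors
print(int(-7 / 4))                     # -1: int truncates
```

For positive numbers the two operations agree; they only part ways below zero.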

There is also an operator to calculate the remainder of a division. It's called the modulo operator, and it's represented by a percent sign (%):

    >>> 10 % 3  # remainder of the division 10 // 3
    1
    >>> 10 % 4  # remainder of the division 10 // 4
    2
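Integer division and modulo are two halves of the same operation, and the built-in divmod returns both at once. The sketch below checks the relationship between them; the negative pair is my own addition, included because flooring makes its remainder less obvious:

```python
for a, b in ((10, 3), (10, 4), (-7, 4)):
    q, r = divmod(a, b)          # same as (a // b, a % b)
    assert a == q * b + r        # quotient and remainder rebuild a
    print(a, b, q, r)
# 10 3 3 1
# 10 4 2 2
# -7 4 -2 1
```

Because the quotient is floored, the remainder takes the sign of the divisor, which is why -7 % 4 is 1 and not -3.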

Booleans

Boolean algebra is that subset of algebra in which the values of the variables are the truth values: true and false. In Python, True and False are two keywords that are used to represent truth values. Booleans are a subclass of integers, and True and False behave like 1 and 0, respectively. The equivalent of the int class for Booleans is the bool class, which returns either True or False. Every built-in Python object has a value in the Boolean context, which means it evaluates to either True or False when fed to the bool function. We'll see all about this in Chapter 3, Iterating and Making Decisions.

Boolean values can be combined in Boolean expressions using the logical operators and, or, and not. Again, we'll see them in full in the next chapter, so for now let's just see a simple example:

    >>> int(True)  # True behaves like 1
    1
    >>> int(False)  # False behaves like 0
    0
    >>> bool(1)  # 1 evaluates to True in a boolean context
    True
    >>> bool(-42)  # and so does every non-zero number
    True
    >>> bool(0)  # 0 evaluates to False
    False
    >>> # quick peek at the operators (and, or, not)
    >>> not True
    False
    >>> not False
    True
    >>> True and True
    True
    >>> False or True
    True

You can see that True and False belong to a subclass of integers when you try to add them. Python upcasts them to integers and performs the addition:

    >>> 1 + True
    2
    >>> False + 42
    42
    >>> 7 - True
    6

Note

Upcasting is a type conversion operation that goes from a subclass to its parent. In the example presented here, True and False, which belong to a class derived from the integer class, are converted back to integers when needed. This topic is about inheritance and will be explained in detail in Chapter 6, Advanced Concepts – OOP, Decorators, and Iterators.
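You can verify the relationship between bool and int directly, and even put it to work. In this sketch the votes list is a made-up example; the rest uses only built-ins:

```python
print(issubclass(bool, int))     # True: bool derives from int
print(isinstance(True, int))     # True: so every Boolean is also an integer
print(type(True + True))         # <class 'int'>: the result is a plain int

votes = [True, False, True, True]
print(sum(votes))                # 3: counting the True values
```

Summing Booleans to count how many conditions hold is a direct consequence of True behaving like 1.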

Reals

Real numbers, or floating point numbers, are represented in Python according to the IEEE 754 double-precision binary floating-point format, which is stored in 64 bits of information divided into three sections: sign, exponent, and mantissa.

Note

Quench your thirst for knowledge about this format on Wikipedia: http://en.wikipedia.org/wiki/Double-precision_floating-point_format

Usually programming languages give coders two different formats: single and double precision. The former takes up 32 bits of memory, and the latter 64. Python supports only the double format. Let's see a simple example:

    >>> pi = 3.1415926536  # how many digits of PI can you remember?
    >>> radius = 4.5
    >>> area = pi * (radius ** 2)
    >>> area
    63.61725123519331

The sys.float_info struct sequence holds information about how floating point numbers will behave on your system. This is what I see on my box:

    >>> import sys
    >>> sys.float_info
    sys.float_info(max=1.7976931348623157e+308, max_exp=1024, max_10_exp=308, min=2.2250738585072014e-308, min_exp=-1021, min_10_exp=-307, dig=15, mant_dig=53, epsilon=2.220446049250313e-16, radix=2, rounds=1)

Let's make a few considerations here: we have 64 bits to represent float numbers. This means we can represent at most 2 ** 64 == 18,446,744,073,709,551,616 distinct numbers with that many bits. Take a look at the max and epsilon values for the float numbers, and you'll realize it's impossible to represent them all. There is just not enough space, so they are approximated to the closest representable number. You probably think that only extremely big or extremely small numbers suffer from this issue. Well, think again:

    >>> 3 * 0.1 - 0.3  # this should be 0!!!
    5.551115123125783e-17

What does this tell you? It tells you that double precision numbers suffer from approximation issues even when it comes to simple numbers like 0.1 or 0.3. Why is this important? It can be a big problem if you're handling prices, or financial calculations, or any kind of data that must not be approximated. Don't worry, Python gives you the Decimal type, which doesn't suffer from these issues. We'll see it in a bit.
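When you only need to compare floats, and an exact result isn't required, a common way to live with the approximation is to compare within a tolerance. This is a different technique from switching to Decimal. The sketch below uses math.isclose from the standard library; the abs_tol value is an arbitrary choice of mine:

```python
import math

result = 3 * 0.1 - 0.3

print(result == 0)                               # False: exact comparison fails
print(math.isclose(result, 0.0, abs_tol=1e-9))   # True: close enough to zero
print(math.isclose(3 * 0.1, 0.3))                # True: default relative tolerance
```

Note that comparisons against zero need an absolute tolerance, because a relative tolerance scaled by zero is always zero.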

Python supports complex numbers out of the box. If you don't know what complex numbers are, you can look them up on the Web. They are numbers that can be expressed in the form a + ib, where a and b are real numbers, and i (or j if you're an engineer) is the imaginary unit, that is, the square root of -1. The numbers a and b are called, respectively, the real and imaginary part of the number.

It's actually unlikely you'll be using them, unless you're coding something scientific. Let's see a small example:

    >>> c = 3.14 + 2.73j
    >>> c.real  # real part
    3.14
    >>> c.imag  # imaginary part
    2.73
    >>> c.conjugate()  # conjugate of A + Bj is A - Bj
    (3.14-2.73j)
    >>> c * 2  # multiplication is allowed
    (6.28+5.46j)
    >>> c ** 2  # power operation as well
    (2.4067000000000007+17.1444j)
    >>> d = 1 + 1j  # addition and subtraction as well
    >>> c - d
    (2.14+1.73j)
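Two more things are handy to know if you do end up using complex numbers: the built-in abs returns the modulus, and the cmath module provides math functions that accept complex arguments. A short sketch, with the value 3 + 4j chosen by me because its modulus is a round number:

```python
import cmath

c = 3 + 4j

print(abs(c))               # 5.0: the modulus, sqrt(3**2 + 4**2)
print(c * c.conjugate())    # (25+0j): a number times its conjugate is real
print(cmath.sqrt(-1))       # 1j: the imaginary unit
print(1j ** 2)              # (-1+0j): and its square is -1
```

The plain math.sqrt would raise an error on -1; cmath.sqrt is the complex-aware counterpart.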

Let's finish the tour of the number department with a look at fractions and decimals. A Fraction holds a rational number as a numerator and a denominator, reduced to lowest terms. Let's see a quick example:

    >>> from fractions import Fraction
    >>> Fraction(10, 6)  # mad hatter?
    Fraction(5, 3)  # notice it's been reduced to lowest terms
    >>> Fraction(1, 3) + Fraction(2, 3)  # 1/3 + 2/3 = 3/3 = 1/1
    Fraction(1, 1)
    >>> f = Fraction(10, 6)
    >>> f.numerator
    5
    >>> f.denominator
    3
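Because a Fraction stores two integers, arithmetic on fractions is exact. The sketch below contrasts that with the float behavior we saw earlier; the specific values are my own picks:

```python
from fractions import Fraction

print(0.1 + 0.2 == 0.3)                                       # False with floats
print(Fraction(1, 10) + Fraction(2, 10) == Fraction(3, 10))   # True with fractions

quarter = Fraction('0.25')      # a Fraction can also be built from a string
print(quarter)                  # 1/4
print(float(quarter))           # 0.25
```

Converting back with float is useful at the very end of a calculation, once exactness no longer matters.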

Although they can be very useful at times, it's not that common to spot them in commercial software. It is much more common to see decimal numbers being used in all those contexts where precision is everything, for example, scientific and financial calculations.

Note

It's important to remember that arbitrary precision decimal numbers come at a price in performance, of course. The amount of data to be stored for each number is far greater than it is for fractions or floats, and so is the amount of work the Python interpreter has to do behind the scenes to handle them. Another interesting thing to know is that you can get and set the precision by accessing decimal.getcontext().prec.
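Here is a quick sketch of what getting and setting the precision looks like. Instead of changing the global context, it uses decimal.localcontext to change the precision temporarily; that choice, and the variable names, are mine:

```python
from decimal import Decimal, getcontext, localcontext

default_prec = getcontext().prec        # 28 unless the context was changed

with localcontext() as ctx:             # temporary copy of the current context
    ctx.prec = 5                        # keep only 5 significant digits
    short_third = Decimal(1) / Decimal(3)

full_third = Decimal(1) / Decimal(3)    # computed with the default precision again

print(default_prec)
print(short_third)                      # 0.33333
print(full_third)
```

Precision counts significant digits, and it applies to the results of arithmetic, not to the values you pass to the constructor.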

Let's see a quick example with Decimal numbers:

    >>> from decimal import Decimal as D  # rename for brevity
    >>> D(3.14)  # pi, from float, so approximation issues
    Decimal('3.140000000000000124344978758017532527446746826171875')
    >>> D('3.14')  # pi, from a string, so no approximation issues
    Decimal('3.14')
    >>> D(0.1) * D(3) - D(0.3)  # from float, we still have the issue
    Decimal('2.775557561565156540423631668E-17')
    >>> D('0.1') * D(3) - D('0.3')  # from string, all perfect
    Decimal('0.0')

Notice that when we construct a Decimal number from a float, it takes on all the approximation issues the float may come with. On the other hand, when the Decimal has no approximation issues, for example, when we feed an int or a string representation to the constructor, then the calculation has no quirky behavior. When it comes to money, use decimals.
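To make the money advice concrete, here is a minimal sketch of a price calculation. The price, quantity, and tax rate are invented for the example, and rounding half up to two decimal places is an assumption; real rounding rules depend on the currency and the jurisdiction.

```python
from decimal import Decimal, ROUND_HALF_UP

price = Decimal('19.99')          # built from strings, so no float noise
quantity = 3
tax_rate = Decimal('0.0825')      # hypothetical tax rate

subtotal = price * quantity
# quantize rounds to the number of decimal places of its argument
tax = (subtotal * tax_rate).quantize(Decimal('0.01'), rounding=ROUND_HALF_UP)
total = subtotal + tax

print(subtotal)    # 59.97
print(tax)         # 4.95
print(total)       # 64.92
```

Every amount stays exact to the cent, which is precisely what floats could not promise.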

This concludes our introduction to built-in numeric types. Let's now see sequences.

Integers

Python integers have unlimited range, subject only to the available virtual memory. This means that it doesn't really matter how big a number you want to store: as long as it can fit in your computer's memory, Python will take care of it. Integer numbers can be positive, negative, and 0 (zero). They support all the basic mathematical operations, as shown in the following example:

>>> a = 12 >>> b = 3 >>> a + b # addition 15 >>> b - a # subtraction -9 >>> a // b # integer division 4 >>> a / b # true division 4.0 >>> a * b # multiplication 36 >>> b ** a # power operator 531441 >>> 2 ** 1024 # a very big number, Python handles it gracefully 17976931348623159077293051907890247336179769789423065727343008115 77326758055009631327084773224075360211201138798713933576587897688 14416622492847430639474124377767893424865485276302219601246094119 45308295208500576883815068234246288147391311054082723716335051068 4586298239947245938479716304835356329624224137216

The preceding code should be easy to understand. Just notice one important thing: Python has two division operators, one performs the so-called true division (/), which returns the quotient of the operands, and the other one, the so-called integer division (//), which returns the floored quotient of the operands. See how that is different for positive and negative numbers:

>>> 7 / 4 # true division 1.75 >>> 7 // 4 # integer division, flooring returns 1 1 >>> -7 / 4 # true division again, result is opposite of previous -1.75 >>> -7 // 4 # integer div., result not the opposite of previous -2

This is an interesting example. If you were expecting a -1 on the last line, don't feel bad, it's just the way Python works. The result of an integer division in Python is always rounded towards minus infinity. If instead of flooring you want to truncate a number to an integer, you can use the built-in int function, like shown in the following example:

>>> int(1.75) 1 >>> int(-1.75) -1

Notice that truncation is done towards 0.

There is also an operator to calculate the remainder of a division. It's called modulo operator, and it's represented by a percent (%):

>>> 10 % 3 # remainder of the division 10 // 3 1 >>> 10 % 4 # remainder of the division 10 // 4 2

Boolean algebra is that subset of algebra in which the values of the variables are the truth values: true and false. In Python, True and False are two keywords that are used to represent truth values. Booleans are a subclass of integers, and behave respectively like 1 and 0. The equivalent of the int class for Booleans is the bool class, which returns either True or False. Every built-in Python object has a value in the Boolean context, which means they basically evaluate to either True or False when fed to the bool function. We'll see all about this in Chapter 3, Iterating and Making Decisions.

Boolean values can be combined in Boolean expressions using the logical operators and, or, and not. Again, we'll see them in full in the next chapter, so for now let's just see a simple example:

>>> int(True) # True behaves like 1 1 >>> int(False) # False behaves like 0 0 >>> bool(1) # 1 evaluates to True in a boolean context True >>> bool(-42) # and so does every non-zero number True >>> bool(0) # 0 evaluates to False False >>> # quick peak at the operators (and, or, not) >>> not True False >>> not False True >>> True and True True >>> False or True True

You can see that True and False are subclasses of integers when you try to add them. Python upcasts them to integers and performs addition:

>>> 1 + True 2 >>> False + 42 42 >>> 7 - True 6

Note

Upcasting is a type conversion operation that goes from a subclass to its parent. In the example presented here, True and False, which belong to a class derived from the integer class, are converted back to integers when needed. This topic is about inheritance and will be explained in detail in Chapter 6, Advanced Concepts – OOP, Decorators, and Iterators.

Real numbers, or floating point numbers, are represented in Python according to the IEEE 754 double-precision binary floating-point format, which is stored in 64 bits of information divided into three sections: sign, exponent, and mantissa.

Note

Quench your thirst for knowledge about this format on Wikipedia: http://en.wikipedia.org/wiki/Double-precision_floating-point_format

Usually programming languages give coders two different formats: single and double precision. The former taking up 32 bits of memory, and the latter 64. Python supports only the double format. Let's see a simple example:

>>> pi = 3.1415926536 # how many digits of PI can you remember? >>> radius = 4.5 >>> area = pi * (radius ** 2) >>> area 63.61725123519331

The sys.float_info struct sequence holds information about how floating point numbers will behave on your system. This is what I see on my box:

>>> import sys >>> sys.float_info sys.float_info(max=1.7976931348623157e+308, max_exp=1024, max_10_exp=308, min=2.2250738585072014e-308, min_exp=-1021, min_10_exp=-307, dig=15, mant_dig=53, epsilon=2.220446049250313e-16, radix=2, rounds=1)

Let's make a few considerations here: we have 64 bits to represent float numbers. This means we can represent at most 2 ** 64 == 18,446,744,073,709,551,616 numbers with that amount of bits. Take a look at the max and epsilon value for the float numbers, and you'll realize it's impossible to represent them all. There is just not enough space so they are approximated to the closest representable number. You probably think that only extremely big or extremely small numbers suffer from this issue. Well, think again:

>>> 3 * 0.1 – 0.3 # this should be 0!!! 5.551115123125783e-17

What does this tell you? It tells you that double precision numbers suffer from approximation issues even when it comes to simple numbers like 0.1 or 0.3. Why is this important? It can be a big problem if you're handling prices, or financial calculations, or any kind of data that needs not to be approximated. Don't worry, Python gives you the Decimal type, which doesn't suffer from these issues, we'll see them in a bit.

Python gives you complex numbers support out of the box. If you don't know what complex numbers are, you can look them up on the Web. They are numbers that can be expressed in the form a + ib where a and b are real numbers, and i (or j if you're an engineer) is the imaginary unit, that is, the square root of -1. a and b are called respectively the real and imaginary part of the number.

It's actually unlikely you'll be using them, unless you're coding something scientific. Let's see a small example:

>>> c = 3.14 + 2.73j >>> c.real # real part 3.14 >>> c.imag # imaginary part 2.73 >>> c.conjugate() # conjugate of A + Bj is A - Bj (3.14-2.73j) >>> c * 2 # multiplication is allowed (6.28+5.46j) >>> c ** 2 # power operation as well (2.4067000000000007+17.1444j) >>> d = 1 + 1j # addition and subtraction as well >>> c - d (2.14+1.73j)

Let's finish the tour of the number department with a look at fractions and decimals. Fractions hold a rational numerator and denominator in their lowest forms. Let's see a quick example:

>>> from fractions import Fraction >>> Fraction(10, 6) # mad hatter? Fraction(5, 3) # notice it's been reduced to lowest terms >>> Fraction(1, 3) + Fraction(2, 3) # 1/3 + 2/3 = 3/3 = 1/1 Fraction(1, 1) >>> f = Fraction(10, 6) >>> f.numerator 5 >>> f.denominator 3

Although they can be very useful at times, it's not that common to spot them in commercial software. Much easier instead, is to see decimal numbers being used in all those contexts where precision is everything, for example, scientific and financial calculations.

Note

It's important to remember that arbitrary precision decimal numbers come at a price in performance, of course. The amount of data to be stored for each number is far greater than it is for fractions or floats as well as the way they are handled, which requires the Python interpreter much more work behind the scenes. Another interesting thing to know is that you can get and set the precision by accessing decimal.getcontext().prec.

Let's see a quick example with Decimal numbers:

>>> from decimal import Decimal as D # rename for brevity >>> D(3.14) # pi, from float, so approximation issues Decimal('3.140000000000000124344978758017532527446746826171875') >>> D('3.14') # pi, from a string, so no approximation issues Decimal('3.14') >>> D(0.1) * D(3) - D(0.3) # from float, we still have the issue Decimal('2.775557561565156540423631668E-17') >>> D('0.1') * D(3) - D('0.3') # from string, all perfect Decimal('0.0')

Notice that when we construct a Decimal number from a float, it takes on all the approximation issues the float may come from. On the other hand, when the Decimal has no approximation issues, for example, when we feed an int or a string representation to the constructor, then the calculation has no quirky behavior. When it comes to money, use decimals.

This concludes our introduction to built-in numeric types, let's now see sequences.

Booleans

Boolean algebra is that subset of algebra in which the values of the variables are the truth values: true and false. In Python, True and False are two keywords that are used to represent truth values. Booleans are a subclass of integers, and behave respectively like 1 and 0. The equivalent of the int class for Booleans is the bool class, which returns either True or False. Every built-in Python object has a value in the Boolean context, which means they basically evaluate to either True or False when fed to the bool function. We'll see all about this in Chapter 3, Iterating and Making Decisions.

Boolean values can be combined in Boolean expressions using the logical operators and, or, and not. Again, we'll see them in full in the next chapter, so for now let's just see a simple example:

>>> int(True) # True behaves like 1 1 >>> int(False) # False behaves like 0 0 >>> bool(1) # 1 evaluates to True in a boolean context True >>> bool(-42) # and so does every non-zero number True >>> bool(0) # 0 evaluates to False False >>> # quick peak at the operators (and, or, not) >>> not True False >>> not False True >>> True and True True >>> False or True True

You can see that True and False are subclasses of integers when you try to add them. Python upcasts them to integers and performs addition:

>>> 1 + True 2 >>> False + 42 42 >>> 7 - True 6

Note

Upcasting is a type conversion operation that goes from a subclass to its parent. In the example presented here, True and False, which belong to a class derived from the integer class, are converted back to integers when needed. This topic is about inheritance and will be explained in detail in Chapter 6, Advanced Concepts – OOP, Decorators, and Iterators.

Real numbers, or floating point numbers, are represented in Python according to the IEEE 754 double-precision binary floating-point format, which is stored in 64 bits of information divided into three sections: sign, exponent, and mantissa.

Note

Quench your thirst for knowledge about this format on Wikipedia: http://en.wikipedia.org/wiki/Double-precision_floating-point_format

Usually programming languages give coders two different formats: single and double precision. The former taking up 32 bits of memory, and the latter 64. Python supports only the double format. Let's see a simple example:

>>> pi = 3.1415926536 # how many digits of PI can you remember? >>> radius = 4.5 >>> area = pi * (radius ** 2) >>> area 63.61725123519331

The sys.float_info struct sequence holds information about how floating point numbers will behave on your system. This is what I see on my box:

>>> import sys >>> sys.float_info sys.float_info(max=1.7976931348623157e+308, max_exp=1024, max_10_exp=308, min=2.2250738585072014e-308, min_exp=-1021, min_10_exp=-307, dig=15, mant_dig=53, epsilon=2.220446049250313e-16, radix=2, rounds=1)

Let's make a few considerations here: we have 64 bits to represent float numbers. This means we can represent at most 2 ** 64 == 18,446,744,073,709,551,616 numbers with that amount of bits. Take a look at the max and epsilon value for the float numbers, and you'll realize it's impossible to represent them all. There is just not enough space so they are approximated to the closest representable number. You probably think that only extremely big or extremely small numbers suffer from this issue. Well, think again:

>>> 3 * 0.1 – 0.3 # this should be 0!!! 5.551115123125783e-17

What does this tell you? It tells you that double precision numbers suffer from approximation issues even when it comes to simple numbers like 0.1 or 0.3. Why is this important? It can be a big problem if you're handling prices, or financial calculations, or any kind of data that needs not to be approximated. Don't worry, Python gives you the Decimal type, which doesn't suffer from these issues, we'll see them in a bit.

Python gives you complex numbers support out of the box. If you don't know what complex numbers are, you can look them up on the Web. They are numbers that can be expressed in the form a + ib where a and b are real numbers, and i (or j if you're an engineer) is the imaginary unit, that is, the square root of -1. a and b are called respectively the real and imaginary part of the number.

It's actually unlikely you'll be using them, unless you're coding something scientific. Let's see a small example:

>>> c = 3.14 + 2.73j >>> c.real # real part 3.14 >>> c.imag # imaginary part 2.73 >>> c.conjugate() # conjugate of A + Bj is A - Bj (3.14-2.73j) >>> c * 2 # multiplication is allowed (6.28+5.46j) >>> c ** 2 # power operation as well (2.4067000000000007+17.1444j) >>> d = 1 + 1j # addition and subtraction as well >>> c - d (2.14+1.73j)

Let's finish the tour of the number department with a look at fractions and decimals. Fractions hold a rational numerator and denominator in their lowest forms. Let's see a quick example:

>>> from fractions import Fraction >>> Fraction(10, 6) # mad hatter? Fraction(5, 3) # notice it's been reduced to lowest terms >>> Fraction(1, 3) + Fraction(2, 3) # 1/3 + 2/3 = 3/3 = 1/1 Fraction(1, 1) >>> f = Fraction(10, 6) >>> f.numerator 5 >>> f.denominator 3

Although they can be very useful at times, it's not that common to spot them in commercial software. Much easier instead, is to see decimal numbers being used in all those contexts where precision is everything, for example, scientific and financial calculations.

Note

It's important to remember that arbitrary precision decimal numbers come at a price in performance, of course. The amount of data to be stored for each number is far greater than it is for fractions or floats as well as the way they are handled, which requires the Python interpreter much more work behind the scenes. Another interesting thing to know is that you can get and set the precision by accessing decimal.getcontext().prec.

Let's see a quick example with Decimal numbers:

>>> from decimal import Decimal as D # rename for brevity >>> D(3.14) # pi, from float, so approximation issues Decimal('3.140000000000000124344978758017532527446746826171875') >>> D('3.14') # pi, from a string, so no approximation issues Decimal('3.14') >>> D(0.1) * D(3) - D(0.3) # from float, we still have the issue Decimal('2.775557561565156540423631668E-17') >>> D('0.1') * D(3) - D('0.3') # from string, all perfect Decimal('0.0')

Notice that when we construct a Decimal number from a float, it takes on all the approximation issues the float may come from. On the other hand, when the Decimal has no approximation issues, for example, when we feed an int or a string representation to the constructor, then the calculation has no quirky behavior. When it comes to money, use decimals.

This concludes our introduction to built-in numeric types, let's now see sequences.

Reals

Real numbers, or floating point numbers, are represented in Python according to the IEEE 754 double-precision binary floating-point format, which is stored in 64 bits of information divided into three sections: sign, exponent, and mantissa.

Note

Quench your thirst for knowledge about this format on Wikipedia: http://en.wikipedia.org/wiki/Double-precision_floating-point_format

Usually programming languages give coders two different formats: single and double precision. The former taking up 32 bits of memory, and the latter 64. Python supports only the double format. Let's see a simple example:

>>> pi = 3.1415926536 # how many digits of PI can you remember? >>> radius = 4.5 >>> area = pi * (radius ** 2) >>> area 63.61725123519331

The sys.float_info struct sequence holds information about how floating point numbers will behave on your system. This is what I see on my box:

>>> import sys >>> sys.float_info sys.float_info(max=1.7976931348623157e+308, max_exp=1024, max_10_exp=308, min=2.2250738585072014e-308, min_exp=-1021, min_10_exp=-307, dig=15, mant_dig=53, epsilon=2.220446049250313e-16, radix=2, rounds=1)

Let's make a few considerations here: we have 64 bits to represent float numbers. This means we can represent at most 2 ** 64 == 18,446,744,073,709,551,616 numbers with that amount of bits. Take a look at the max and epsilon value for the float numbers, and you'll realize it's impossible to represent them all. There is just not enough space so they are approximated to the closest representable number. You probably think that only extremely big or extremely small numbers suffer from this issue. Well, think again:

>>> 3 * 0.1 – 0.3 # this should be 0!!! 5.551115123125783e-17

What does this tell you? It tells you that double precision numbers suffer from approximation issues even when it comes to simple numbers like 0.1 or 0.3. Why is this important? It can be a big problem if you're handling prices, or financial calculations, or any kind of data that needs not to be approximated. Don't worry, Python gives you the Decimal type, which doesn't suffer from these issues, we'll see them in a bit.

Complex numbers

Python gives you complex number support out of the box. If you don't know what complex numbers are, you can look them up on the Web. They are numbers that can be expressed in the form a + ib, where a and b are real numbers and i (or j if you're an engineer) is the imaginary unit, that is, the square root of -1. a and b are called, respectively, the real and imaginary part of the number.

It's actually unlikely you'll be using them, unless you're coding something scientific. Let's see a small example:

    >>> c = 3.14 + 2.73j
    >>> c.real  # real part
    3.14
    >>> c.imag  # imaginary part
    2.73
    >>> c.conjugate()  # conjugate of A + Bj is A - Bj
    (3.14-2.73j)
    >>> c * 2  # multiplication is allowed
    (6.28+5.46j)
    >>> c ** 2  # power operation as well
    (2.4067000000000007+17.1444j)
    >>> d = 1 + 1j  # addition and subtraction as well
    >>> c - d
    (2.14+1.73j)
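
As a small complement to the session above, here is a sketch of two other things you may want to do with complex numbers: getting the magnitude with the built-in abs, and taking the square root of a negative number with the standard library's cmath module. The variable names are just for illustration:

```python
import cmath

z = 3 + 4j
same_z = complex(3, 4)              # the constructor takes real and imaginary parts

magnitude = abs(z)                  # distance from the origin: sqrt(3**2 + 4**2)
root_of_minus_one = cmath.sqrt(-1)  # math.sqrt would raise ValueError here

print(z == same_z)         # True
print(magnitude)           # 5.0
print(root_of_minus_one)   # 1j
```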

Fractions and decimals

Let's finish the tour of the number department with a look at fractions and decimals. Fractions hold an integer numerator and denominator, reduced to lowest terms. Let's see a quick example:

    >>> from fractions import Fraction
    >>> Fraction(10, 6)  # mad hatter?
    Fraction(5, 3)  # notice it's been reduced to lowest terms
    >>> Fraction(1, 3) + Fraction(2, 3)  # 1/3 + 2/3 = 3/3 = 1/1
    Fraction(1, 1)
    >>> f = Fraction(10, 6)
    >>> f.numerator
    5
    >>> f.denominator
    3
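
Because a Fraction stores two integers, arithmetic on it is exact. The sketch below revisits the 3 * 0.1 - 0.3 calculation with fractions; the variable names are illustrative only. It also shows that building a Fraction from a float carries the float's approximation along, while building it from a string does not:

```python
from fractions import Fraction

tenth = Fraction(1, 10)

# Exact arithmetic: no leftover error this time.
exact_zero = 3 * tenth - Fraction(3, 10)

from_string = Fraction("0.1")   # parsed exactly as 1/10
from_float = Fraction(0.1)      # the exact value of the float, which is not 1/10

print(exact_zero == 0)        # True
print(from_string == tenth)   # True
print(from_float == tenth)    # False
```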

Although they can be very useful at times, it's not that common to spot them in commercial software. It's much more common to see decimal numbers used in all those contexts where precision is everything, for example, scientific and financial calculations.

Note

It's important to remember that arbitrary precision decimal numbers come at a price in performance, of course. The amount of data to be stored for each number is far greater than it is for fractions or floats, and so is the work the Python interpreter has to do behind the scenes to handle them. Another interesting thing to know is that you can get and set the precision by accessing decimal.getcontext().prec.

Let's see a quick example with Decimal numbers:

    >>> from decimal import Decimal as D  # rename for brevity
    >>> D(3.14)  # pi, from float, so approximation issues
    Decimal('3.140000000000000124344978758017532527446746826171875')
    >>> D('3.14')  # pi, from a string, so no approximation issues
    Decimal('3.14')
    >>> D(0.1) * D(3) - D(0.3)  # from float, we still have the issue
    Decimal('2.775557561565156540423631668E-17')
    >>> D('0.1') * D(3) - D('0.3')  # from string, all perfect
    Decimal('0.0')

Notice that when we construct a Decimal number from a float, it takes on all the approximation issues the float carries with it. On the other hand, when the Decimal has no approximation issues, for example, when we feed an int or a string representation to the constructor, then the calculation has no quirky behavior. When it comes to money, use decimals.
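
The precision setting mentioned in the note can be sketched as follows. This example uses decimal.localcontext, so the change stays confined to the with block instead of altering the global context; the names low, high, and total are made up for the illustration:

```python
from decimal import Decimal, localcontext

# Precision is the number of significant digits kept by arithmetic operations.
with localcontext() as ctx:
    ctx.prec = 5
    low = Decimal(1) / Decimal(3)

with localcontext() as ctx:
    ctx.prec = 10
    high = Decimal(1) / Decimal(3)

# Money-style arithmetic, with amounts built from strings.
total = Decimal("19.99") * 3

print(low)     # 0.33333
print(high)    # 0.3333333333
print(total)   # 59.97
```

Setting getcontext().prec directly works too, but it affects every later calculation in the current thread, which is why the sketch prefers a local context.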

This concludes our introduction to built-in numeric types. Let's now see sequences.

Let's start with immutable sequences: strings, tuples, and bytes.

Strings and bytes

Textual data in Python is handled with str objects, more commonly known as strings. They are immutable sequences of Unicode code points. Unicode code points can represent a character, but can also have other meanings, such as formatting data. Python, unlike other languages, doesn't have a char type, so a single character is rendered simply by a string of length 1. Unicode is an excellent way to handle data, and should be used for the internals of any application. When it comes to storing textual data, though, or sending it over the network, you may want to encode it, using an appropriate encoding for the medium you're using. String literals are written in Python using single, double, or triple quotes (both single or double). If built with triple quotes, a string can span multiple lines. An example will clarify the picture:

    >>> # 4 ways to make a string
    >>> str1 = 'This is a string. We built it with single quotes.'
    >>> str2 = "This is also a string, but built with double quotes."
    >>> str3 = '''This is built using triple quotes,
    ... so it can span multiple lines.'''
    >>> str4 = """This too
    ... is a multiline one
    ... built with triple double-quotes."""
    >>> str4  #A
    'This too\nis a multiline one\nbuilt with triple double-quotes.'
    >>> print(str4)  #B
    This too
    is a multiline one
    built with triple double-quotes.

In #A and #B, we print str4, first implicitly, then explicitly using the print function. A nice exercise would be to find out why they are different. Are you up to the challenge? (Hint: look up the str function.)

Strings, like any sequence, have a length. You can get this by calling the len function:

    >>> len(str1)
    49

Encoding and decoding strings

Using the encode/decode methods, we can encode Unicode strings and decode bytes objects. UTF-8 is a variable-length character encoding, capable of encoding all possible Unicode code points. It is the dominant encoding for the Web, and is widely used elsewhere too. Notice also that by adding a literal b in front of a string declaration, we're creating a bytes object.

    >>> s = "This is üŋíc0de"  # unicode string: code points
    >>> type(s)
    <class 'str'>
    >>> encoded_s = s.encode('utf-8')  # utf-8 encoded version of s
    >>> encoded_s
    b'This is \xc3\xbc\xc5\x8b\xc3\xadc0de'  # result: bytes object
    >>> type(encoded_s)  # another way to verify it
    <class 'bytes'>
    >>> encoded_s.decode('utf-8')  # let's revert to the original
    'This is üŋíc0de'
    >>> bytes_obj = b"A bytes object"  # a bytes object
    >>> type(bytes_obj)
    <class 'bytes'>
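
To make the "variable-length" part more concrete, here is a small sketch that compares the number of code points in a string with the number of bytes in its UTF-8 encoding, and shows what happens when the chosen encoding can't represent some characters. The string is the same one used above, written with escape sequences so that the example doesn't depend on the encoding of the source file; the other names are illustrative:

```python
s = "This is \u00fc\u014b\u00edc0de"   # 'This is üŋíc0de'
encoded = s.encode("utf-8")

# Three of the characters need two bytes each in UTF-8.
print(len(s))         # 15
print(len(encoded))   # 18

# ASCII cannot represent the non-English letters, so encoding fails...
try:
    s.encode("ascii")
except UnicodeEncodeError:
    ascii_failed = True
else:
    ascii_failed = False
print(ascii_failed)   # True

# ...unless we tell Python how to handle what it cannot encode.
replaced = s.encode("ascii", errors="replace")
print(replaced)       # b'This is ???c0de'
```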

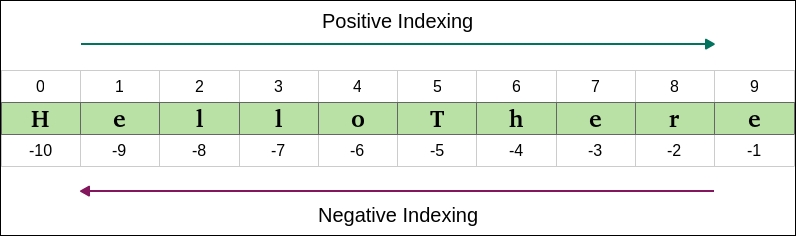

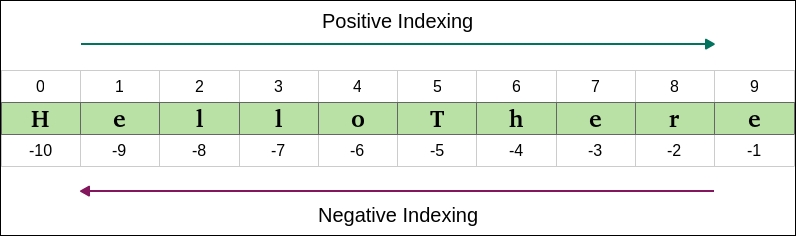

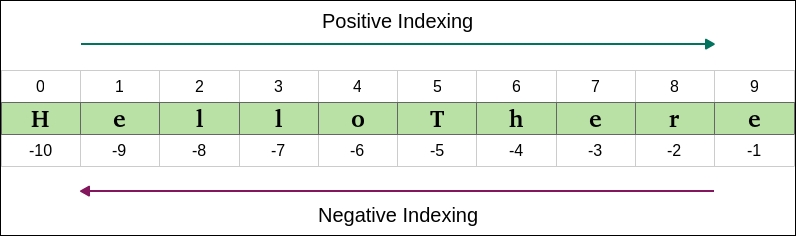

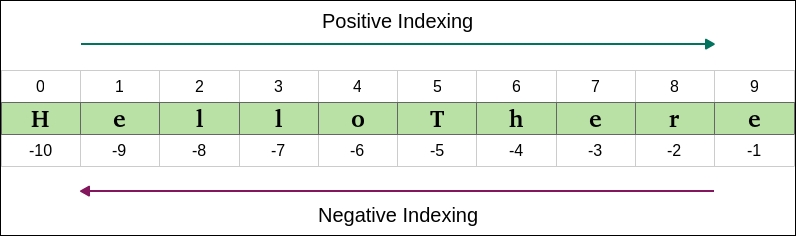

Indexing and slicing strings

When manipulating sequences, it's very common to have to access them at one precise position (indexing), or to get a subsequence out of them (slicing). When dealing with immutable sequences, both operations are read-only.

While indexing comes in one form, a zero-based access to any position within the sequence, slicing comes in different forms. When you get a slice of a sequence, you can specify the start and stop positions, and the step. They are separated with a colon (:) like this: my_sequence[start:stop:step]. All the arguments are optional; start is inclusive, stop is exclusive. It's much easier to show an example, rather than explain them further in words:

    >>> s = "The trouble is you think you have time."
    >>> s[0]  # indexing at position 0, which is the first char
    'T'
    >>> s[5]  # indexing at position 5, which is the sixth char
    'r'
    >>> s[:4]  # slicing, we specify only the stop position
    'The '
    >>> s[4:]  # slicing, we specify only the start position
    'trouble is you think you have time.'
    >>> s[2:14]  # slicing, both start and stop positions
    'e trouble is'
    >>> s[2:14:3]  # slicing, start, stop and step (every 3 chars)
    'erb '
    >>> s[:]  # quick way of making a copy
    'The trouble is you think you have time.'

Of all the lines, the last one is probably the most interesting. If you don't specify a parameter, Python will fill in the default for you. In this case, start will be the start of the string, stop will be the end of the string, and step will be the default 1. This is an easy and quick way of obtaining a copy of a sequence. With a mutable sequence, such as a list, you get a new object with the same value; strings are immutable, so CPython may simply hand you back the very same object. Can you find a way to get the reversed copy of a string using slicing? (Don't look it up, find it for yourself.)
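
A few more cases are worth trying on the same string. The sketch below, which leaves the reversing exercise for you, shows negative positions (counted from the end), the fact that slices tolerate out-of-range positions while plain indexing does not, and the built-in slice object, which is what the colon notation creates behind the scenes:

```python
s = "The trouble is you think you have time."

print(s[-1])     # '.' is the last char
print(s[-5:])    # 'time.' is the last five chars

# Slicing past the end quietly gives back whatever is available...
print(repr(s[100:]))   # ''

# ...while indexing past the end raises an exception.
try:
    s[100]
except IndexError:
    index_failed = True
else:
    index_failed = False
print(index_failed)    # True

# s[4:11] is shorthand for indexing with a slice object.
word = s[slice(4, 11)]
print(word)            # trouble
```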

Tuples

The last immutable sequence type we're going to see is the tuple. A tuple is a sequence of arbitrary Python objects. In a tuple, items are separated by commas. Tuples are used everywhere in Python, because they allow for patterns that are hard to reproduce in other languages. Sometimes tuples are used implicitly, for example, to set up multiple variables on one line, or to allow a function to return multiple objects (in many other languages, a function can usually return only one object). Even in the Python console, you can use tuples implicitly to print multiple elements with a single instruction. We'll see examples for all these cases:

    >>> t = ()  # empty tuple
    >>> type(t)
    <class 'tuple'>
    >>> one_element_tuple = (42, )  # you need the comma!
    >>> three_elements_tuple = (1, 3, 5)
    >>> a, b, c = 1, 2, 3  # tuple for multiple assignment
    >>> a, b, c  # implicit tuple to print with one instruction
    (1, 2, 3)
    >>> 3 in three_elements_tuple  # membership test
    True

Notice that the membership operator in can also be used with lists, strings, dictionaries, and in general with collection and sequence objects.
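
The session above doesn't cover the case of a function returning several objects, so here is a minimal sketch of it. The function name min_max is made up for this illustration; it is not a built-in.

```python
def min_max(values):
    """Return the smallest and largest item (hypothetical helper)."""
    return min(values), max(values)  # the comma builds a tuple implicitly


result = min_max([3, 1, 4, 1, 5])
print(type(result))  # <class 'tuple'>
print(result)  # (1, 5)

# The returned tuple can be unpacked straight into separate names
lowest, highest = min_max([3, 1, 4, 1, 5])
print(lowest, highest)  # 1 5
```

The function still returns one object only; that object just happens to be a tuple, which the caller is free to unpack.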

Note

Notice that to create a tuple with one item, we need to put a comma after the item. The reason is that without the comma, that item is just itself wrapped in parentheses, a bit like a redundant mathematical expression. Notice also that on assignment, the parentheses are optional, so my_tuple = 1, 2, 3 is the same as my_tuple = (1, 2, 3).
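
A quick way to convince yourself of the role played by the comma is to check the types directly. This is only a small sketch, and the variable names are arbitrary:

```python
not_a_tuple = (42)  # just the integer 42 wrapped in round brackets
one_item = (42,)  # the trailing comma is what makes it a tuple
also_one_item = 42,  # the round brackets are optional on assignment

print(type(not_a_tuple))  # <class 'int'>
print(type(one_item))  # <class 'tuple'>
print(one_item == also_one_item)  # True
print(len(one_item))  # 1
```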

One thing that tuple assignment allows us to do is one-line swaps, with no need for a third temporary variable. Let's first see a more traditional way of doing it:

    >>> a, b = 1, 2
    >>> c = a  # we need three lines and a temporary var c
    >>> a = b
    >>> b = c
    >>> a, b  # a and b have been swapped
    (2, 1)

And now let's see how we would do it in Python:

    >>> a, b = b, a  # this is the Pythonic way to do it
    >>> a, b
    (1, 2)

Take a look at the line that shows you the Pythonic way of swapping two values. Do you remember what I wrote in Chapter 1, Introduction and First Steps – Take a Deep Breath? A Python program is typically one-fifth to one-third the size of equivalent Java or C++ code, and features like one-line swaps contribute to this. Python is elegant, where elegance in this context also means economy.

Because they are immutable, tuples can be used as keys for dictionaries (we'll see this shortly), provided that the items they contain are immutable (more precisely, hashable) as well. The dict objects need keys to be immutable because if they could change, then the value they reference wouldn't be found any more (because the path to it depends on the key). If you are into data structures, you know how nice a feature this one is to have.

To me, tuples are Python's built-in data type that most closely represents a mathematical vector. This doesn't mean that this was the reason for which they were created, though. Tuples usually contain a heterogeneous sequence of elements, while lists, on the other hand, are homogeneous most of the time. Moreover, tuples are normally accessed via unpacking or indexing, while lists are usually iterated over.
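
To make the point about dictionary keys concrete, here is a small sketch. The grid dictionary and its labels are invented for the example; the exact wording of the error messages may vary between Python versions, so I just print whatever Python reports.

```python
# Hypothetical example: map (x, y) grid coordinates to labels
grid = {(0, 0): 'origin', (1, 0): 'east', (0, 1): 'north'}
print(grid[(1, 0)])  # east

x, y = 0, 1
print(grid[x, y])  # north (the round brackets are optional here too)

# A list is mutable, so Python refuses to use it as a key
try:
    grid[[2, 2]] = 'far away'
except TypeError as err:
    print('TypeError:', err)

# A tuple that contains a mutable item is not usable as a key either
try:
    grid[(2, [2])] = 'far away'
except TypeError as err:
    print('TypeError:', err)
```

Both failed assignments leave the dictionary untouched, so it still holds the three original keys.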

Mutable sequences differ from their immutable sisters in that they can be changed after creation. There are two mutable sequence types in Python: lists and byte arrays. I said before that the dictionary is the king of data structures in Python. I guess this makes the list its rightful queen.
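
The difference is easy to see if we try to change an item in place. This is just a sketch with throwaway names; the exact error text depends on the Python version, so it is printed rather than spelled out.

```python
numbers_tuple = (1, 2, 3)
numbers_list = [1, 2, 3]

numbers_list[0] = 100  # fine: lists can be changed in place
print(numbers_list)  # [100, 2, 3]

try:
    numbers_tuple[0] = 100  # tuples don't support item assignment
except TypeError as err:
    print('TypeError:', err)

print(numbers_tuple)  # (1, 2, 3)
```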

Lists

Python lists are mutable sequences. They are very similar to tuples, but they don't have the restrictions due to immutability. Lists are commonly used to store collections of homogeneous objects, but there is nothing preventing you from storing heterogeneous collections as well. Lists can be created in many different ways; let's see an example:

    >>> []  # empty list
    []
    >>> list()  # same as []
    []
    >>> [1, 2, 3]  # as with tuples, items are comma separated
    [1, 2, 3]
    >>> [x + 5 for x in [2, 3, 4]]  # Python is magic
    [7, 8, 9]
    >>> list((1, 3, 5, 7, 9))  # list from a tuple
    [1, 3, 5, 7, 9]
    >>> list('hello')  # list from a string
    ['h', 'e', 'l', 'l', 'o']

In the previous example, I showed you how to create a list using different techniques. I would like you to take a good look at the line that says Python is magic, which I am not expecting you to fully understand at this point (unless you cheated and you're not a novice!). That is called a list comprehension, a very powerful functional feature of Python, which we'll see in detail in Chapter 5, Saving Time and Memory. I just wanted to make your mouth water at this point.

Creating lists is good, but the real fun comes when we use them, so let's see the main methods they gift us with:

    >>> a = [1, 2, 1, 3]
    >>> a.append(13)  # we can append anything at the end
    >>> a
    [1, 2, 1, 3, 13]
    >>> a.count(1)  # how many `1` are there in the list?
    2
    >>> a.extend([5, 7])  # extend the list by another (or sequence)
    >>> a
    [1, 2, 1, 3, 13, 5, 7]
    >>> a.index(13)  # position of `13` in the list (0-based indexing)
    4
    >>> a.insert(0, 17)  # insert `17` at position 0
    >>> a
    [17, 1, 2, 1, 3, 13, 5, 7]
    >>> a.pop()  # pop (remove and return) last element
    7
    >>> a.pop(3)  # pop element at position 3
    1
    >>> a
    [17, 1, 2, 3, 13, 5]
    >>> a.remove(17)  # remove `17` from the list
    >>> a
    [1, 2, 3, 13, 5]
    >>> a.reverse()  # reverse the order of the elements in the list
    >>> a
    [5, 13, 3, 2, 1]
    >>> a.sort()  # sort the list
    >>> a
    [1, 2, 3, 5, 13]
    >>> a.clear()  # remove all elements from the list
    >>> a
    []

The preceding code gives you a roundup of the main list methods. I want to show you how powerful they are, using extend as an example. You can extend lists using any sequence type (in fact, any iterable will do):

    >>> a = list('hello')  # makes a list from a string
    >>> a
    ['h', 'e', 'l', 'l', 'o']
    >>> a.append(100)  # append 100, heterogeneous type
    >>> a
    ['h', 'e', 'l', 'l', 'o', 100]
    >>> a.extend((1, 2, 3))  # extend using tuple
    >>> a
    ['h', 'e', 'l', 'l', 'o', 100, 1, 2, 3]
    >>> a.extend('...')  # extend using string
    >>> a
    ['h', 'e', 'l', 'l', 'o', 100, 1, 2, 3, '.', '.', '.']
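
Since append and extend are easy to mix up, here is a short side-by-side sketch. It also shows extend working with iterables that are not sequences, such as a generator expression (a feature we haven't covered yet, used here only for illustration).

```python
a = [1, 2]
a.append([3, 4])  # append adds its argument as a single item
print(a)  # [1, 2, [3, 4]]

b = [1, 2]
b.extend([3, 4])  # extend adds each item of its argument
print(b)  # [1, 2, 3, 4]

# extend accepts any iterable, not just sequences
b.extend(range(5, 7))
b.extend(n * 10 for n in (7, 8))  # a generator expression
print(b)  # [1, 2, 3, 4, 5, 6, 70, 80]
```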

Now, let's see the most common operations you can perform on lists:

    >>> a = [1, 3, 5, 7]
    >>> min(a)  # minimum value in the list
    1
    >>> max(a)  # maximum value in the list
    7
    >>> sum(a)  # sum of all values in the list
    16
    >>> len(a)  # number of elements in the list
    4
    >>> b = [6, 7, 8]
    >>> a + b  # `+` with list means concatenation
    [1, 3, 5, 7, 6, 7, 8]
    >>> a * 2  # `*` has also a special meaning
    [1, 3, 5, 7, 1, 3, 5, 7]

The last two lines in the preceding code are quite interesting because they introduce us to a concept called operator overloading. In short, it means that operators such as +, -, *, %, and so on, may represent different operations according to the context they are used in. It doesn't make any sense to sum two lists, right? Therefore, the + sign is used to concatenate them. Similarly, the * sign is used to concatenate the list to itself the number of times given by the right operand.

Now, let's take a step further down the rabbit hole and see something a little more interesting. I want to show you how powerful sorting can be and how easy it is in Python to achieve results that require a great deal of effort in other languages:

    >>> from operator import itemgetter
    >>> a = [(5, 3), (1, 3), (1, 2), (2, -1), (4, 9)]
    >>> sorted(a)
    [(1, 2), (1, 3), (2, -1), (4, 9), (5, 3)]
    >>> sorted(a, key=itemgetter(0))
    [(1, 3), (1, 2), (2, -1), (4, 9), (5, 3)]
    >>> sorted(a, key=itemgetter(0, 1))
    [(1, 2), (1, 3), (2, -1), (4, 9), (5, 3)]
    >>> sorted(a, key=itemgetter(1))
    [(2, -1), (1, 2), (5, 3), (1, 3), (4, 9)]
    >>> sorted(a, key=itemgetter(1), reverse=True)
    [(4, 9), (5, 3), (1, 3), (1, 2), (2, -1)]

The preceding code deserves a little explanation. First of all, a is a list of tuples. This means each element in a is a tuple (a 2-tuple, to be picky). When we call sorted(some_list), we get a sorted version of some_list. In this case, the sorting on a 2-tuple works by sorting them on the first item in the tuple, and on the second when the first one is the same. You can see this behavior in the result of sorted(a), which yields [(1, 2), (1, 3), ...]. Python also gives us the ability to control which element(s) of the tuple the sorting is run against. Notice that when we instruct the sorted function to work on the first element of each tuple (by key=itemgetter(0)), the result is different: [(1, 3), (1, 2), ...]. The sorting is done only on the first element of each tuple (which is the one at position 0). If we want to replicate the default behavior of a simple sorted(a) call, we need to use key=itemgetter(0, 1), which tells Python to sort first on the elements at position 0 within the tuples, and then on those at position 1. Compare the results and you'll see they match.

For completeness, I included an example of sorting only on the elements at position 1, and the same but in reverse order. If you have ever seen sorting in Java, I expect you to be on your knees crying with joy at this very moment.
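
Two related details are worth a quick sketch: a lambda (a small anonymous function, which we haven't covered yet) can stand in for itemgetter, and the sort method of a list behaves differently from the sorted function. The name by_second is my own choice for this illustration.

```python
from operator import itemgetter

a = [(5, 3), (1, 3), (1, 2), (2, -1), (4, 9)]

# A lambda can play the same role as itemgetter(1)
by_second = sorted(a, key=lambda pair: pair[1])
print(by_second == sorted(a, key=itemgetter(1)))  # True

# sorted returns a new list and leaves the original alone...
print(a[0])  # (5, 3)

# ...while the sort method reorders the list in place and returns None
result = a.sort(key=itemgetter(1), reverse=True)
print(result)  # None
print(a)  # [(4, 9), (5, 3), (1, 3), (1, 2), (2, -1)]
```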

The Python sorting algorithm is very powerful, and it was written by Tim Peters (we've already seen this name, can you recall when?). It is aptly named Timsort, and it is a blend of merge sort and insertion sort that has better time performance than most other algorithms used in mainstream programming languages. Timsort is a stable sorting algorithm, which means that when multiple records have the same key, their original order is preserved. We've seen this in the result of sorted(a, key=itemgetter(0)), which yielded [(1, 3), (1, 2), ...], in which the order of those two tuples has been preserved because they have the same value at position 0.
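
Stability is what makes it possible to sort on several criteria in separate passes. The following is a minimal sketch; the (name, age) records are invented for the example.

```python
from operator import itemgetter

# Hypothetical (name, age) records, invented for this illustration
people = [('Zoe', 25), ('Adam', 30), ('Mia', 25), ('Bob', 30)]

# Pass 1: sort by the secondary key (name)
people.sort(key=itemgetter(0))
# Pass 2: sort by the primary key (age); ties keep the order from pass 1
people.sort(key=itemgetter(1))

print(people)
# [('Mia', 25), ('Zoe', 25), ('Adam', 30), ('Bob', 30)]
```

The result is the same one you would get in a single pass with key=itemgetter(1, 0); the two-pass version is shown only because it relies on stability to work.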

To conclude our overview of mutable sequence types, let's spend a couple of minutes on the bytearray type. Basically, bytearray objects are the mutable version of bytes objects. They expose most of the usual methods of mutable sequences as well as most of the methods of the bytes type. Items are integers in the range [0, 256).

Note

When it comes to intervals, I'm going to use the standard notation for open/closed ranges. A square bracket on one end means that the value is included, while a round bracket means it's excluded. The granularity is usually inferred from the type of the edge elements so, for example, the interval [3, 7] means all integers between 3 and 7, inclusive. On the other hand, (3, 7) means all integers between 3 and 7, exclusive (hence 4, 5, and 6). Items in a bytearray are integers between 0 and 256: 0 is included, 256 is not. One reason intervals are often expressed like this is to ease coding. If we break a range [a, b) into N consecutive ranges, we can easily represent the original one as a concatenation like this:

$$[a, k_1) + [k_1, k_2) + [k_2, k_3) + \dots + [k_{N-1}, b)$$

The middle points ($k_i$) being excluded on one end, and included on the other end, allow for easy concatenation and splitting when intervals are handled in the code.
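
Python's own range function and slice notation follow the same convention (start included, stop excluded), so the idea can be sketched in a few lines. The middle points k1 and k2 are arbitrary values picked for the illustration.

```python
full = list(range(0, 10))  # the integers in [0, 10)

# Hypothetical middle points, chosen only for this illustration
k1, k2 = 3, 7

# [0, 3) + [3, 7) + [7, 10): each middle point appears exactly once
pieces = list(range(0, k1)) + list(range(k1, k2)) + list(range(k2, 10))
print(pieces == full)  # True

# Slices follow the same convention: start included, stop excluded
print(full[:k1] + full[k1:k2] + full[k2:] == full)  # True
```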

Let's see a quick example with the type bytearray:

    >>> bytearray()  # empty bytearray object
    bytearray(b'')
    >>> bytearray(10)  # zero-filled instance with given length
    bytearray(b'\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00')
    >>> bytearray(range(5))  # bytearray from iterable of integers
    bytearray(b'\x00\x01\x02\x03\x04')
    >>> name = bytearray(b'Lina')  # A - bytearray from bytes
    >>> name.replace(b'L', b'l')
    bytearray(b'lina')
    >>> name.endswith(b'na')
    True
    >>> name.upper()
    bytearray(b'LINA')
    >>> name.count(b'L')
    1

As you can see in the preceding code, there are a few ways to create a bytearray object. They can be useful in many situations; for example, when receiving data through a socket, they eliminate the need to concatenate data while polling, which makes them very handy. On line #A, I created the name bytearray from the bytes literal b'Lina' to show you how the bytearray object exposes methods from both sequences and strings, which is extremely handy. If you think about it, bytearray objects can be considered mutable strings.
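
Here is a minimal sketch of what being mutable buys us. The chunks below are made up and merely stand in for data that would arrive from a socket; no real networking is involved.

```python
name = bytearray(b'Lina')
name[0] = ord('l')  # items are integers, so we assign an integer
print(name)  # bytearray(b'lina')

# Accumulate chunks in place; these chunks are invented for the sketch
buffer = bytearray()
for chunk in (b'GET / ', b'HTTP/1.1', b'\r\n'):
    buffer.extend(chunk)
print(bytes(buffer))  # b'GET / HTTP/1.1\r\n'

# Values outside [0, 256) are rejected
try:
    buffer.append(256)
except ValueError as err:
    print('ValueError:', err)
```

Notice that name[0] = ord('l') changes the object in place, whereas the replace call in the earlier session returned a new bytearray and left name untouched.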
