Exploring the world of TDD

In a nutshell, TDD is a technique that allows us to write automated tests with short feedback loops. It is an iterative process that incorporates testing into the software development process, allowing developers to use the same techniques for writing their tests as they use for writing production code.

TDD was created as an Agile working practice, as it allows teams to deliver code in an iterative process, consisting of writing functional code, verifying new code with tests, and iteratively refactoring new code, if required.

Introduction to the Agile methodology

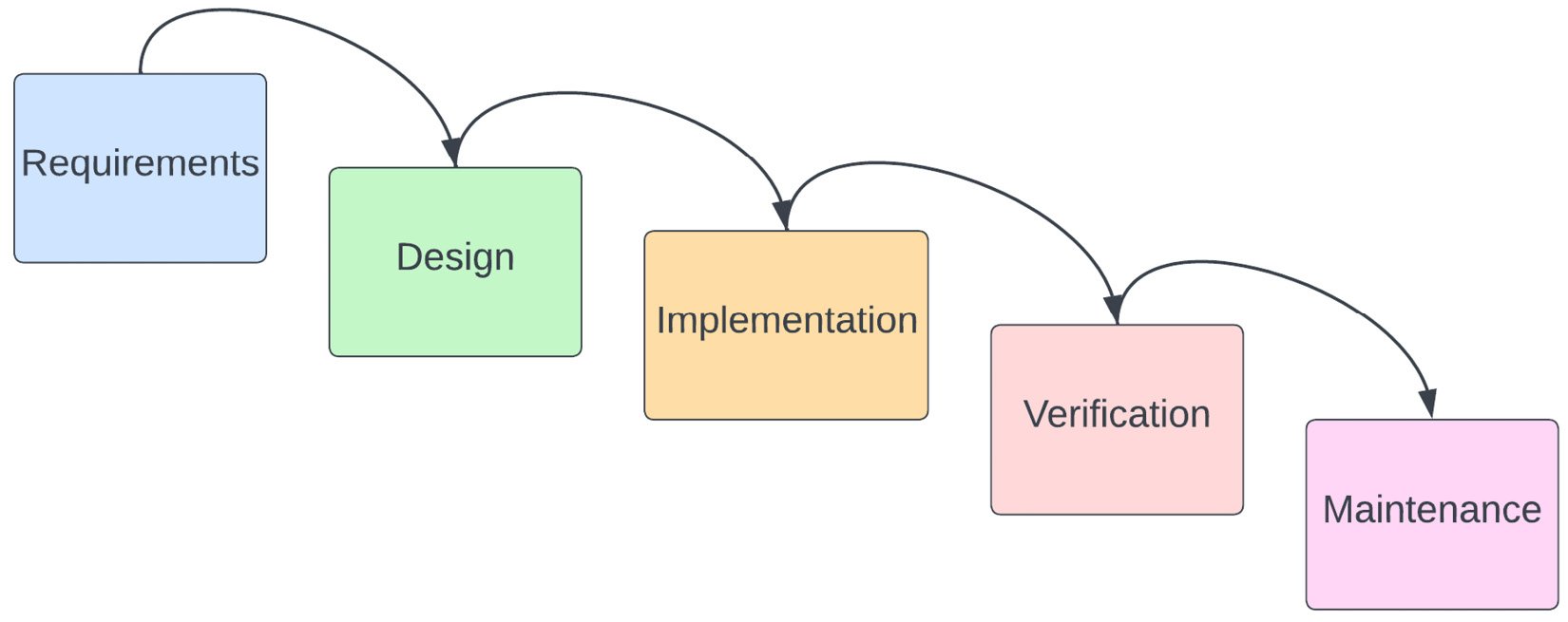

This precursor to the Agile movement was the waterfall methodology, which was the most popular project management technique. This process involves delivering software projects in stages, with work starting on each stage once the stage before it is completed, just like water flows downstream. Figure 1.1 shows the five stages of the waterfall methodology:

Figure 1.1 – The five stages of the waterfall methodology

Intuition from manufacturing and construction projects might suggest that it is natural to divide the software delivery process into sequential phases, gathering and formulating all requirements at the start of the project. However, this way of working poses three difficulties when used to deliver large software projects:

- Changing the course of the project or requirements is difficult. A working solution is only available at the end of the process, requiring verification of a large deliverable. Testing an entire project is much more difficult than testing small deliverables.

- Customers need to decide all of their requirements in detail at the beginning of the project. The waterfall model allows for minimal customer involvement, as they are only consulted in the requirements and verification phases.

- The process requires detailed documentation, which specifies both requirements and the software design approach. Crucially, the project documentation includes timelines and estimates that the clients need to approve prior to project initiation.

The waterfall model is all about planning work

Project management with the waterfall methodology allows you to plan your project in well-defined, linear phases. This approach is intuitive and suitable for projects with clearly defined goals and boundaries. In practice, however, the waterfall model lacks the flexibility and iterative approach required for delivering complex software projects.

A better way of working named Agile emerged, which could address the challenges of the waterfall methodology. TDD relies on the principles of the Agile methodology. The literature on Agile working practices is extensive, so we won’t be looking at Agile in detail, but a brief understanding of the origins of TDD will allow us to understand its approach and get into its mindset.

Agile software development is an umbrella term for multiple code delivery and project planning practices such as Scrum, Kanban, Extreme Programming (XP), and TDD.

As implied by its name, it is all about the ability to respond and adapt to change. One of the main disadvantages of the waterfall way of working was its inflexibility, and Agile was designed to address this issue.

The Agile manifesto was written and signed by 17 software engineering leaders and pioneers in 2001. It outlines the 4 core values and 12 central principles of Agile. The manifesto is available freely at agilemanifesto.org.

The four core Agile values highlight the spirit of the movement:

- Individuals and interactions over processes and tools: This means that the team involved in the delivery of the project is more important than their technical tools and processes.

- Working software over comprehensive documentation: This means that delivering working functionality to customers is the number one priority. While documentation is important, teams should always focus on consistently delivering value.

- Customer collaboration over contract negotiation: This means that customers should be involved in a feedback loop over the lifetime of the project, ensuring that the project and work continue to deliver value and satisfy their needs and requirements.

- Responding to change over following a plan: This means that teams should prioritize responding to change over following a predefined plan or roadmap. The team should be able to pivot and change direction whenever required.

Agile is all about people

The Agile methodology is not a prescriptive list of practices. It is all about teams working together to overcome uncertainty and change during the life cycle of a project. Agile teams are interdisciplinary, consisting of engineers, software testing professionals, product managers, and more. This ensures that team members with a variety of skills collaborate to deliver the software project as a whole.

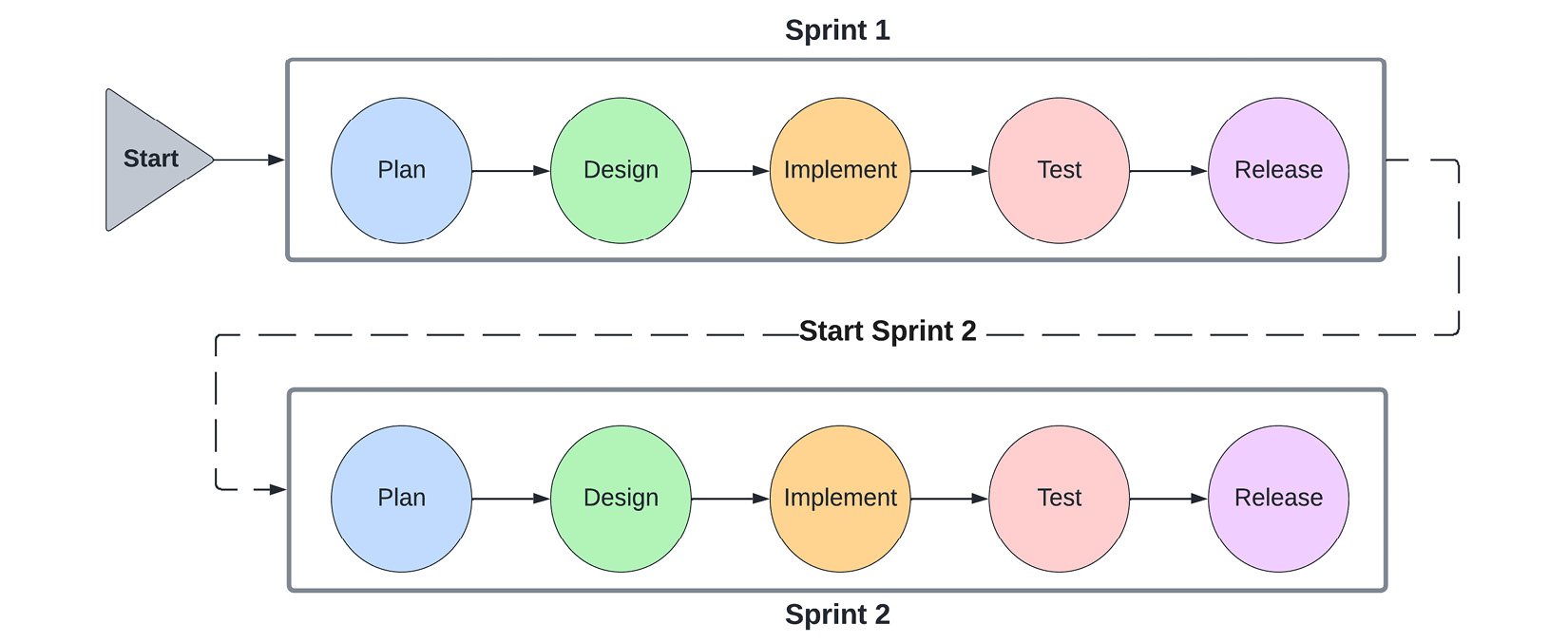

Unlike the waterfall model, the stages of the Agile software delivery methodology repeat, focusing on delivering software in small increments or iterations, as opposed to the big deliverables of waterfall. In Agile nomenclature, these iterations are called sprints.

Figure 1.2 depicts the stages of Agile project delivery:

Figure 1.2 – The stages of Agile software delivery

Let’s look at the cyclical stages of Agile software delivery:

- We begin with the Plan phase. The product owner discusses project requirements that will be delivered in the current sprint with key stakeholders. The outcome of this phase is the prioritized list of client requirements that will be implemented in this sprint.

- Once the requirements and scope of the project are settled, the Design phase begins. This phase involves both technical architecture design and UI/UX design. This phase builds on the requirements from the Plan phase.

- Next, the Implement phase begins. The designs are used as the guide from which we implement the scoped functionality. Since the sprint is short, if any discrepancies are found during implementation, then the team can easily move to earlier phases.

- As soon as a deliverable is complete, the Test phase begins. The Test phase runs almost concurrently with the Implement phase, as test specifications can be written as soon as the Design phase is completed. A deliverable cannot be considered finished until its tests have passed. Work can move back and forth between the Implement and Test phases, as the engineers fix any identified defects.

- Finally, once all testing and implementation are completed successfully, the Release phase begins. This phase completes any client-facing documentation or release notes. At the end of this phase, the sprint is considered closed. A new sprint can begin, following the same cycle.

The customer gets a new deliverable at the end of each sprint, enabling them to see whether the product still suits their requirements and inform changes for future sprints. The deliverable of each sprint is tested before it is released, ensuring that later sprints deliver new functionality without breaking existing functionality. The scope and effort of the testing performed are limited to exercising the functionality developed during the sprint.

One of the signatories of the Agile manifesto was software engineer Kent Beck. He is credited with having rediscovered and formalized the methodology of TDD.

Since then, TDD has been highly successful for many teams, becoming an industry standard because it enables them to verify functionality as it is being delivered. It combines testing with software delivery and refactoring, removing the separation between the code writing and testing processes, and shortening the feedback loop between the engineering team and the customer requirements. This shorter loop is the principle that gives flexibility to Agile.

We will focus on learning how to leverage the process and techniques of TDD in our own Go projects throughout the chapters of this book.

Types of automated tests

Automated test suites are collections of tests that use tools and frameworks to verify the behavior of software systems. They provide a repeatable way of performing the verification of system requirements. They are the norm for Agile teams, who must test their systems after each sprint and release to ensure that new functionality is shipped without disrupting existing functionality.

All automated tests define their inputs and expected outputs according to the requirements of the system under test. We will divide them into several types of tests according to three criteria:

- The amount of knowledge they have of the system

- The type of requirement they verify

- The scope of the functionality they cover

Each test we will study will be described according to these three traits.

System knowledge

As you can see in Figure 1.3, automated tests can be divided into three categories according to how much internal knowledge they have of the system they test:

Figure 1.3 – Types of tests according to system knowledge

Let’s explore the three categories of tests further:

- Black box tests are run from the perspective of the user. The internals of the system are treated as unknown by the test writer, as they would be to a user. Tests and expected outputs are formulated according to the requirement they verify. Black box tests tend not to be brittle if the internals of the system change.

- White box tests are run from the perspective of the developer. The internals of the system are fully known to the test writer, most likely a developer. These tests can be more detailed and potentially uncover hidden errors that black box testing cannot discover. White box tests are often brittle if the internals of the system change.

- Gray box tests are a mixture of black box and white box tests. The internals of the system are partially known to the test writer, as they would be to a specialist or privileged user. These tests can verify more advanced use cases and requirements than black box tests (for example, security or certain non-functional requirements) and are usually more time-consuming to write and run as well.

Requirement types

In general, we should provide tests that verify both the functionality and usability of a system.

For example, we could have all the correct functionality on a page, but if it takes 5+ seconds to load, users will abandon it. In this case, the system is functional, but it does not satisfy your customers’ needs.

We can further divide our automated tests into two categories, based on the type of requirement that they verify:

- Functional tests: These tests cover the functionality of the system under test added during the sprint, with functional tests from prior sprints ensuring that there are no regressions in functionality in later sprints. These kinds of tests are usually black box tests, as these tests should be written and run according to the functionality that a typical user has access to.

- Non-functional tests: These tests cover all the aspects of the system that are not covered by functional requirements but affect the user experience and functioning of the system, such as performance, usability, and security. These kinds of tests are usually white-box tests, as they usually need to be formulated according to implementation details.

Correctness and usability testing

Tests that verify the correctness of the system are known as functional tests, while tests that verify the usability and performance of the system are known as non-functional tests. Common non-functional tests are performance tests, load tests, and security tests.

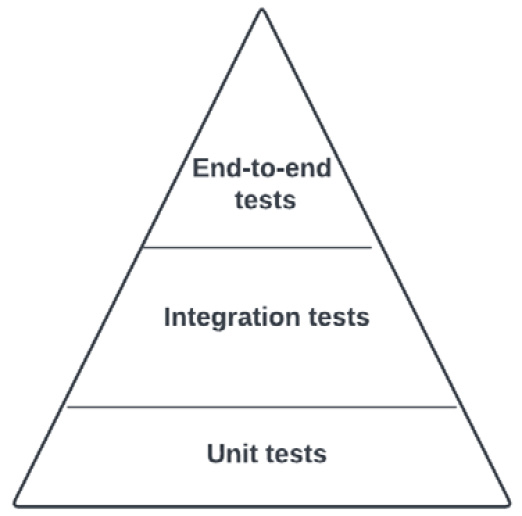

The testing pyramid

An important concept of testing in Agile is the testing pyramid. It lays out the types of automated tests that should be included in the automated testing suites of software systems. It provides guidance on the sequence and priority of each type of test to perform in order to ensure that new functionality is shipped with a proportionate amount of testing effort and without disrupting old/existing functionality.

Figure 1.4 presents the testing pyramid with its three types of tests: unit tests, integration tests, and end-to-end tests:

Figure 1.4 – The testing pyramid and its components

Each type of test can then be further described according to the three established traits of system knowledge, requirement type, and testing scope.

Unit tests

At the bottom of the testing pyramid, we have unit tests. They are presented at the bottom because they are the most numerous. They have a small testing scope, covering the functionality of individual components under a variety of conditions. Good unit tests should exercise their component in isolation from other components so that we can fully control the test environment and setup.

Since the number of unit tests increases as new features are added to the code, they need to be robust and fast to execute. Typically, test suites are run with each code change, so they need to provide feedback to engineers quickly.

Unit tests have been traditionally thought of as white-box tests since they are typically written by developers who know all the implementation details of the component. However, Go unit tests usually only test the exported/public functionality of the package. This brings them closer to gray-box tests.
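As a rough illustration of testing a component in isolation, the following sketch replaces a real dependency (the system clock) with a test double that the test fully controls. The names `Clock`, `fixedClock`, `clockAt`, and `Greeting` are hypothetical and exist only for this example. In a real project, the test function would live in a `_test.go` file and run with `go test`; it shares a file with `main` here only to keep the sketch in one listing.

```go
package main

import (
	"fmt"
	"testing"
	"time"
)

// Clock is a hypothetical abstraction over the current time, so that
// tests do not depend on the real system clock.
type Clock interface {
	Now() time.Time
}

// fixedClock is a test double that always reports the same instant.
type fixedClock struct {
	now time.Time
}

func (c fixedClock) Now() time.Time { return c.now }

// clockAt builds a fixedClock set to the given hour on an arbitrary day.
func clockAt(hour int) fixedClock {
	return fixedClock{now: time.Date(2024, time.January, 1, hour, 0, 0, 0, time.UTC)}
}

// Greeting is the unit under test: its result depends only on the Clock
// it is given.
func Greeting(clock Clock) string {
	if clock.Now().Hour() < 12 {
		return "Good morning"
	}
	return "Good afternoon"
}

// TestGreetingMorning controls the environment through the test double.
func TestGreetingMorning(t *testing.T) {
	got := Greeting(clockAt(9))
	if want := "Good morning"; got != want {
		t.Errorf("Greeting() = %q, want %q", got, want)
	}
}

// main only lets the listing run as a single file.
func main() {
	fmt.Println(Greeting(clockAt(9)))
}
```

Because the test supplies its own clock, it gives the same result at any time of day, which keeps it fast and repeatable.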

We will explore unit tests further in Chapter 2, Unit Testing Essentials.

Integration tests

In the middle of the testing pyramid, we have integration tests. They are an essential part of the pyramid, but they should not be as numerous and should not be run as often as unit tests, which are at the bottom of the pyramid.

Unit tests verify that a single piece of functionality is working correctly, while integration tests extend the scope and test the communication between multiple components. These components can be external or internal to the system – a database, an external API, or another microservice in the system. Often, integration tests run in dedicated environments, which allows us to separate production and test data as well as reduce costs.

Integration tests could be black-box tests or gray-box tests. If the tests cover external APIs and customer-facing functionality, they can be categorized as black-box tests, while more specialized security or performance tests would be considered gray-box tests.

We will explore integration tests further in Chapter 4, Building Efficient Test Suites.

End-to-end tests

At the top of the testing pyramid, we have end-to-end tests. They are the least numerous of all the tests we have seen so far. They test the entire functionality of the application (as added during each sprint), ensuring that the project deliverables are working according to requirements and can potentially be shipped at the conclusion of a given sprint.

These tests can be the most time-consuming to write, maintain, and run since they can involve a large variety of scenarios. Just like integration tests, they are also typically run in dedicated environments that mimic production environments.

There are a lot of similarities between integration tests and end-to-end tests, especially in microservice architectures where one service’s end-to-end functionality involves integration with another service’s end-to-end functionality.

We will explore end-to-end tests further in Chapter 5, Performing Integration Testing, and Chapter 8, Testing Microservice Architectures.

Now that we understand the different types of automated tests, it’s time to look at how we can leverage the Agile practice of TDD to implement them alongside our code. TDD will help us write well-tested code that delivers all the components of the testing pyramid.

The iterative approach of TDD

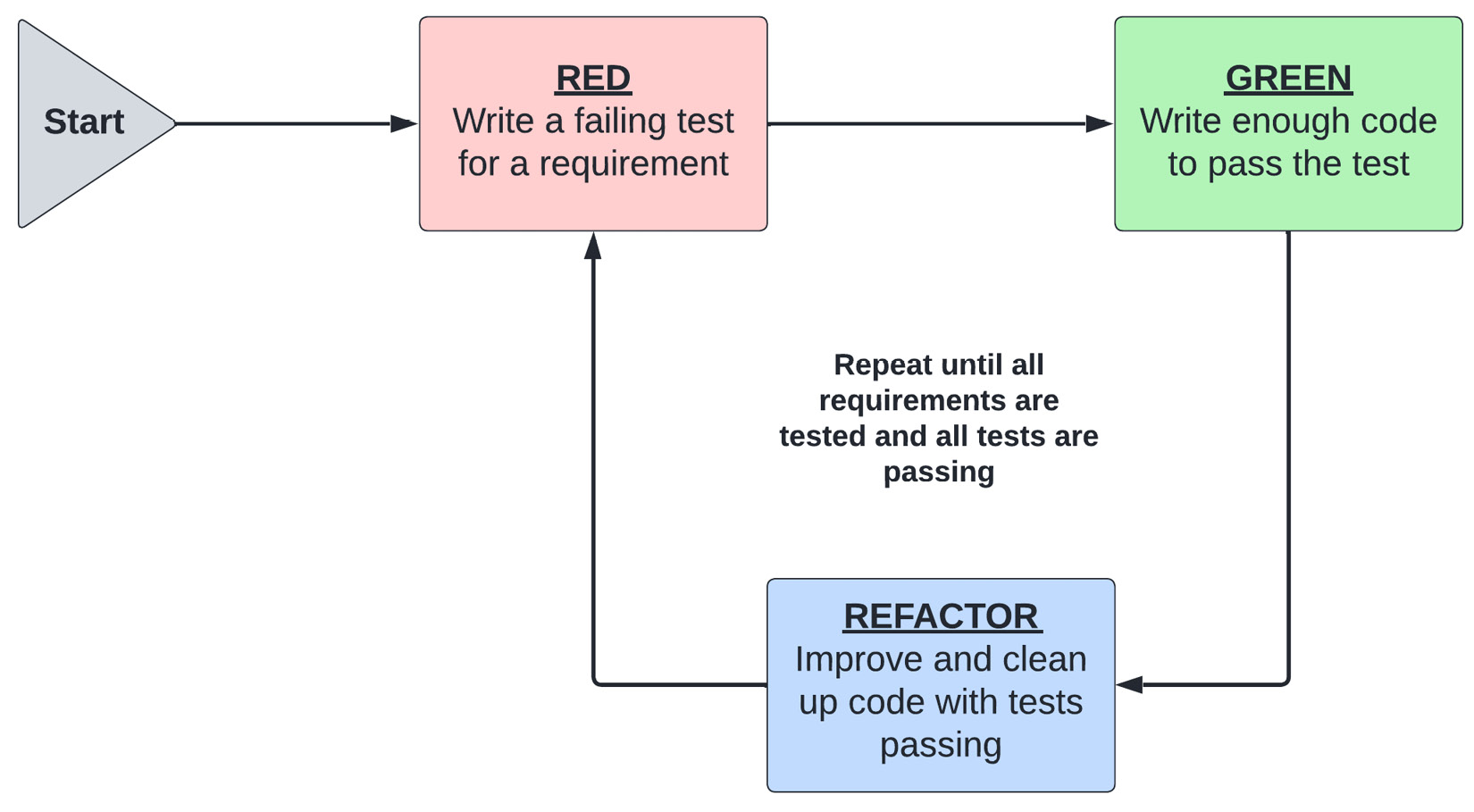

As we’ve mentioned before, TDD is an Agile practice that will be the focus of our exploration. The principle of TDD is simple: write the unit tests for a piece of functionality before implementing it.

TDD brings the testing process together with the implementation process, ensuring that every piece of functionality is tested as soon as it is written, making the software development process iterative, and giving developers quick feedback.

Figure 1.5 demonstrates the steps of the TDD process, known as the red, green, and refactor process:

Figure 1.5 – The steps of TDD

Let’s have a look at the cyclical phases of the TDD working process:

- We start at the red phase. We begin by considering what we want to test and translating this requirement into a test. Some requirements may be made up of several smaller requirements: at this point, we test only the first small requirement. This test will fail until the new functionality is implemented, giving a name to the red phase. The failing test is key because it proves that the test can detect the missing functionality, rather than passing regardless of what code we write.

- Next, we move to the green phase. We swap from test code to implementation, writing just enough code to make the failing test pass. The code does not need to be perfect or optimal, but it should be correct enough for the test to pass. It should focus on the requirement tested by the previously written failing test.

- Finally, we move to the refactor phase. This phase is all about cleaning up both the implementation and the test code, removing duplication, and optimizing our solution.

- We repeat this process until all the requirements are tested and implemented and all tests pass. The developer frequently swaps between testing and implementing code, extending functionality and tests accordingly.
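The phases above can be sketched with a deliberately small example. The `Add` function and its test are hypothetical, and the listing shows the state of the code at the end of one cycle, with comments marking what each phase contributed. In a real project, the test would live in a `_test.go` file and run with `go test`; `main` is included only so that the sketch runs as a single file.

```go
package main

import (
	"fmt"
	"testing"
)

// Red: this test is written first. Until Add exists, the package does not
// even compile, which counts as the failing (red) state.
func TestAdd(t *testing.T) {
	got := Add(2, 3)
	want := 5
	if got != want {
		t.Errorf("Add(2, 3) = %d, want %d", got, want)
	}
}

// Green: the simplest implementation that makes TestAdd pass.
//
// Refactor: there is nothing to clean up yet. As more requirements are
// added, duplication in the tests or the implementation would be removed
// at this point, with the tests confirming that behavior is unchanged.
func Add(a, b int) int {
	return a + b
}

// main only lets the listing run as a single file.
func main() {
	fmt.Println(Add(2, 3))
}
```

The next small requirement (for example, handling a new kind of input) would start a new cycle with another failing test.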

That’s all there is to doing TDD!

TDD is all about developers

TDD is a developer-centric process where unit tests are written before implementation. Developers first write a failing test. Then, they write the simplest implementation to make the test pass. Finally, once the functionality is implemented and working as expected, they can refactor the code and tests as needed. The process is repeated as many times as necessary. No piece of code or functionality is written without corresponding tests.

TDD best practices

The red, green, and refactor approach to TDD is simple, yet very powerful. Even so, a few recommendations and best practices make it easier to write components and tests that can be delivered with TDD.

Structure your tests

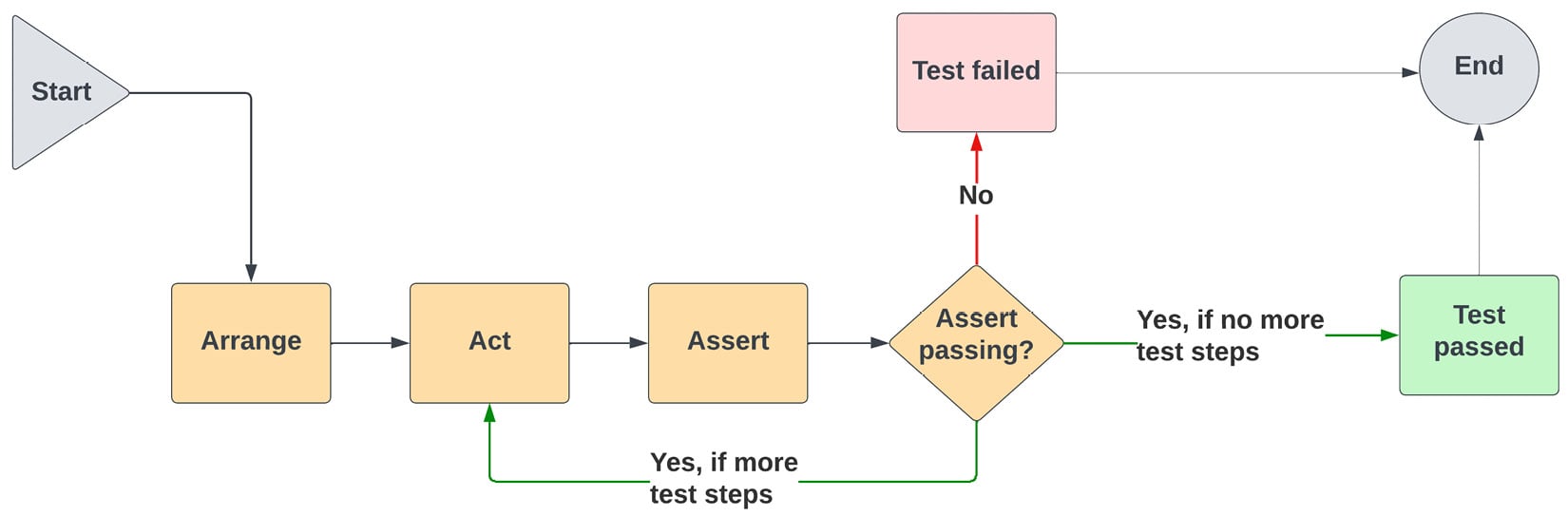

We can formulate a shared, repeatable, test structure to make tests more readable and maintainable. Figure 1.6 depicts the Arrange-Act-Assert (AAA) pattern that is often used with TDD:

Figure 1.6 – The steps of the Arrange-Act-Assert pattern

The AAA pattern describes how to structure tests in a uniform manner:

- We begin with the Arrange step, which is the setup part of the test. This is when we set up the Unit Under Test (UUT) and all of the dependencies that it requires. We also set up the inputs and the preconditions used by the test scenario in this section.

- Next, the Act step is where we perform the actions specified by the test scenario. Depending on the type of test that we are implementing, this could be as simple as invoking a function, or it could involve calling an external API or a database function. This step uses the preconditions and inputs defined in the Arrange step.

- Finally, the Assert step is where we confirm that the UUT behaves according to requirements. This step compares the output from the UUT with the expected output, as defined by the requirements.

- If the Assert step shows that the actual output from the UUT is not as expected, then the test is considered failed and the test is finished.

- If the Assert step shows that the actual output from the UUT is as expected, then we have two options: if there are no more test steps, the test is considered passed and is finished; if there are more test steps, we go back to the Act step and continue.

- The Act and Assert steps can be repeated as many times as necessary for your test scenario. However, you should avoid writing lengthy, complicated tests. This is described further in the best practices throughout this section.
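The AAA structure described above might look as follows in a Go test. The `Cart` type is a hypothetical UUT invented for this sketch, and the comments mark where each step begins. In a real project, the test would live in a `_test.go` file and run with `go test`; `main` is included only so that the listing runs as a single file.

```go
package main

import (
	"fmt"
	"testing"
)

// Cart is a hypothetical unit under test (UUT).
type Cart struct {
	prices []int // item prices in cents
}

// AddItem records the price of one item.
func (c *Cart) AddItem(priceCents int) {
	c.prices = append(c.prices, priceCents)
}

// Total returns the sum of all item prices in cents.
func (c *Cart) Total() int {
	total := 0
	for _, p := range c.prices {
		total += p
	}
	return total
}

func TestCartTotal(t *testing.T) {
	// Arrange: create the UUT and set up inputs and preconditions.
	cart := &Cart{}
	cart.AddItem(250)
	cart.AddItem(100)
	want := 350

	// Act: perform the action specified by the test scenario.
	got := cart.Total()

	// Assert: compare the actual output with the expected output.
	if got != want {
		t.Errorf("Total() = %d, want %d", got, want)
	}
}

// main only lets the listing run as a single file.
func main() {
	cart := &Cart{}
	cart.AddItem(250)
	cart.AddItem(100)
	fmt.Println(cart.Total())
}
```

Keeping the three steps visually separate makes it easier to see at a glance what a test sets up, what it exercises, and what it expects.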

Your team can leverage test helpers and frameworks to minimize setup and assertion code duplication. Using the AAA pattern will help to set the standard for how tests should be written and read, minimizing the cognitive load of new and existing team members and improving the maintainability of the code base.

Control scope

As we have seen, the scope of your test depends on the type of test you are writing. Regardless of the type of test, you should strive to keep the functionality of your components and the assertions of your tests as narrow as you can. TDD supports this, as it allows us to test and implement code at the same time.

Keeping things as simple as possible immediately brings some advantages:

- Easier debugging in the case of failures

- Easier maintenance and adjustment of tests when the Arrange and Assert steps are simple

- Faster execution time of tests, especially with the ability to run tests in parallel

Test outputs, not implementation

As we have seen from the previous definitions of tests, they are all about defining inputs and expected outputs. As developers who know implementation details, it can be tempting to add assertions that verify the inner workings of the UUT.

However, this is an anti-pattern that results in a tight coupling between the test and the implementation. Once tests are aware of implementation details, they need to be changed together with code changes. Therefore, when structuring tests, it is important to focus on testing externally visible outputs, not implementation details.
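To make the distinction concrete, here is a minimal sketch that uses a hypothetical `Stack` type. The test asserts only on what a caller can observe through `Push` and `Pop`, and a comment points out the kind of assertion that would couple the test to the implementation. In a real project, the test would live in a `_test.go` file and run with `go test`; `main` is included only so that the listing runs as a single file.

```go
package main

import (
	"fmt"
	"testing"
)

// Stack is a hypothetical UUT. The backing slice is an implementation
// detail that callers cannot see.
type Stack struct {
	items []int
}

// Push adds a value to the top of the stack.
func (s *Stack) Push(v int) {
	s.items = append(s.items, v)
}

// Pop removes and returns the most recently pushed value. The second
// result is false if the stack is empty.
func (s *Stack) Pop() (int, bool) {
	if len(s.items) == 0 {
		return 0, false
	}
	last := s.items[len(s.items)-1]
	s.items = s.items[:len(s.items)-1]
	return last, true
}

func TestStackPopReturnsLastPushed(t *testing.T) {
	s := &Stack{}
	s.Push(1)
	s.Push(2)

	got, ok := s.Pop()

	// Assert on externally visible output only.
	if !ok || got != 2 {
		t.Errorf("Pop() = %d, %t, want 2, true", got, ok)
	}
	// An assertion such as len(s.items) == 1 would tie the test to the
	// slice and break if Stack were reimplemented as a linked list.
}

// main only lets the listing run as a single file.
func main() {
	s := &Stack{}
	s.Push(1)
	s.Push(2)
	fmt.Println(s.Pop())
}
```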

Keep tests independent

Tests are typically organized in test suites, which cover a variety of scenarios and requirements. While these test suites allow developers to leverage shared functionality, tests should run independently of each other.

Tests should start from a predefined and repeatable starting state that does not change with the number of runs and order of execution. Setup and clean-up code ensures that the starting point and end state of each test are as expected.

It is, therefore, best that tests create their own UUT against which to run modifications and verifications, as opposed to sharing one with other tests. Overall, this will ensure that your test suites are robust and can be run in parallel.
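One way to follow this advice in Go is sketched below, assuming a hypothetical `Counter` type. Each test builds its own UUT instead of sharing a package-level instance, so the tests can be marked with `t.Parallel()` and run in any order. In a real project, the tests would live in a `_test.go` file and run with `go test`; `main` is included only so that the listing runs as a single file.

```go
package main

import (
	"fmt"
	"testing"
)

// Counter is a hypothetical UUT with mutable state.
type Counter struct {
	n int
}

// Increment adds one to the counter.
func (c *Counter) Increment() { c.n++ }

// Value returns the current count.
func (c *Counter) Value() int { return c.n }

// newCounter gives every test a fresh UUT in a known starting state.
func newCounter() *Counter {
	return &Counter{}
}

func TestCounterStartsAtZero(t *testing.T) {
	t.Parallel() // safe: no state is shared with other tests
	c := newCounter()
	if got := c.Value(); got != 0 {
		t.Errorf("Value() = %d, want 0", got)
	}
}

func TestCounterIncrement(t *testing.T) {
	t.Parallel() // safe: this test owns its Counter
	c := newCounter()
	c.Increment()
	if got := c.Value(); got != 1 {
		t.Errorf("Value() = %d, want 1", got)
	}
}

// main only lets the listing run as a single file.
func main() {
	c := newCounter()
	c.Increment()
	fmt.Println(c.Value())
}
```

If both tests shared a single `Counter`, the result of `TestCounterStartsAtZero` would depend on whether `TestCounterIncrement` had already run.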

Adopting TDD and its best practices allows Agile teams to deliver well-tested code that is easy to maintain and modify. This is one of many benefits of TDD, which we will continue to explore in the next section.