Programming languages are broadly classified into compiled and interpreted languages.

In the compiled world, the code is first compiled into target machine code. This process of converting the code into binary is called compilation. The software program that converts the code into target machine code is called a compiler. During compilation, the compiler runs a series of checks, passes, and validations on the code and generates an efficient, optimized binary. A few examples of compiled languages are C, C++, and Rust.

In the interpreted world, the code is read and executed at runtime, without a separate compilation step. Because any translation happens while the program is running, there is less opportunity to optimize, so the resulting code is usually not as optimized as its compiled counterpart. Interpreted languages are typically slower than compiled ones, but they often provide dynamic typing and a smaller program size.

In this book, we will focus only on compiled languages.

Compiled languages

A compiler is a translator that converts source code into machine code (or, more abstractly, converts code from one programming language to another). A compiler is complicated because it must understand the language in which the source code is written (its syntax, semantics, and context), it must also understand the target machine code (its syntax, semantics, and context), and it must create a representation that maps the source code onto the target machine code.

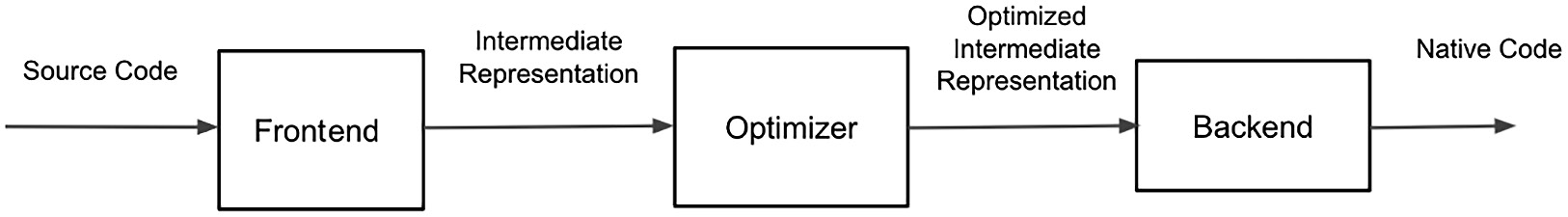

A compiler has the following components:

- Frontend – The frontend is responsible for handling the source language.

- Optimizer – The optimizer is responsible for optimizing the code.

- Backend – The backend is responsible for handling the target language.

Figure 1.1 – Components of a compiler

Frontend

The frontend focuses on handling the source language. It parses the code it receives, checking it for grammar and syntax errors along the way. The code is then converted (mapped) into an intermediate representation (IR). Think of the IR as the format in which the compiler represents and processes your code: it is the compiler's version of your code.
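To make the frontend's job concrete, here is a minimal sketch, not how any production compiler does it. It borrows Python's standard `ast` module as the parser and lowers a small arithmetic expression into a toy three-address IR. The IR format (tuples such as `("add", dest, left, right)`) and the function name `lower_to_ir` are assumptions made up for this illustration.

```python
import ast

# Toy IR: ("add" | "sub" | "mul", dest, left, right) and ("ret", value).
# Operands are integer literals or names (strings). This format is hypothetical.
OPS = {ast.Add: "add", ast.Sub: "sub", ast.Mult: "mul"}


def lower_to_ir(source):
    """Parse an arithmetic expression and lower it to the toy IR."""
    # Parsing doubles as the syntax check: malformed input raises SyntaxError.
    tree = ast.parse(source, mode="eval")
    ir = []

    def visit(node):
        if isinstance(node, ast.Constant) and type(node.value) is int:
            return node.value
        if isinstance(node, ast.Name):
            return node.id
        if isinstance(node, ast.BinOp) and type(node.op) in OPS:
            left = visit(node.left)
            right = visit(node.right)
            dest = f"t{len(ir)}"  # fresh temporary for each emitted instruction
            ir.append((OPS[type(node.op)], dest, left, right))
            return dest
        raise SyntaxError(f"unsupported construct: {type(node).__name__}")

    ir.append(("ret", visit(tree.body)))
    return ir


for instruction in lower_to_ir("a + 2 * 3"):
    print(instruction)
```

Each nested operation becomes one flat instruction that writes to a fresh temporary, which is the kind of uniform shape that later stages can analyze without knowing anything about the source language.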

Optimizer

The second component in the compiler is the optimizer. It is optional but, as the name indicates, it analyzes the IR and transforms it into a more efficient one. Some compilers have multiple IRs. The optimizer is an IR-to-IR transformer: it analyzes the IR, runs a series of passes over it, and rewrites it, with each pass improving the code a little further. The optimizations include removing redundant computations, eliminating dead code (code that cannot be reached), and various others, which will be explored in future chapters. Note that optimizers need not be language-specific. Since they act on the IR, they can be built as a generic component and reused across multiple languages.
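The idea of an optimizer as a sequence of IR-to-IR passes can be sketched in a few lines. The example below assumes a hypothetical toy IR made of tuples, `(op, dest, left, right)` and `("ret", value)`, where each destination is assigned exactly once. It shows two passes: constant folding, and a simple form of dead code elimination that drops instructions whose results are never used (a close relative of the unreachable-code case mentioned above). It is an illustration only, not the algorithm any particular compiler uses.

```python
# Toy IR (hypothetical): (op, dest, left, right) and ("ret", value).
# Operands are integer literals or names; every dest is assigned exactly once.
ARITHMETIC = {
    "add": lambda a, b: a + b,
    "sub": lambda a, b: a - b,
    "mul": lambda a, b: a * b,
}


def fold_constants(ir):
    """Evaluate operations on known integers at compile time."""
    known = {}  # temporaries whose value has been computed by this pass
    out = []
    for inst in ir:
        if inst[0] == "ret":
            out.append(("ret", known.get(inst[1], inst[1])))
            continue
        op, dest, left, right = inst
        left = known.get(left, left)
        right = known.get(right, right)
        if isinstance(left, int) and isinstance(right, int):
            known[dest] = ARITHMETIC[op](left, right)  # instruction disappears
        else:
            out.append((op, dest, left, right))
    return out


def eliminate_dead_code(ir):
    """Walk backwards from ret and keep only instructions whose result is used."""
    live = set()
    kept = []
    for inst in reversed(ir):
        if inst[0] == "ret":
            live.add(inst[1])
            kept.append(inst)
        elif inst[1] in live:
            live.update(x for x in inst[2:] if isinstance(x, str))
            kept.append(inst)
    kept.reverse()
    return kept


def optimize(ir, passes=(fold_constants, eliminate_dead_code)):
    """Run each pass in order; every pass takes IR and returns IR."""
    for run_pass in passes:
        ir = run_pass(ir)
    return ir


program = [
    ("mul", "t0", 2, 3),
    ("add", "t1", "a", "t0"),
    ("mul", "t2", "a", "a"),  # result is never used
    ("ret", "t1"),
]
print(optimize(program))
```

Because both passes read and write the same IR, they know nothing about the language that produced it, which is what allows an optimizer to be shared between frontends.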

Backend

The backend focuses on producing the target language. It receives the generated (optimized) IR and converts it into another language, such as machine code. It is also possible to chain multiple backends, each converting the code into yet another language. When the target is machine code, the output is the actual code that runs on the bare metal. To produce efficient machine code, the backend must understand the architecture on which the code will be executed.

Machine code is a set of instructions that tell the machine to store values in registers and perform computations on them. For example, on a 32-bit architecture a 64-bit number does not fit in a single register, so the generated machine code has to split it efficiently across free registers (and things like that). The backend must understand the target environment in order to select and schedule instructions well, which increases the performance of the running application.
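As a rough sketch of what a backend does, the following code turns a toy IR into assembly-like text for a made-up register machine. Everything here is an assumption for illustration: the IR tuples `(op, dest, left, right)` and `("ret", value)`, the mnemonics (`load`, `add`, `ret`), the register names, and the naive first-come register allocation. A real backend performs far more careful instruction selection, register allocation, and scheduling.

```python
def emit_assembly(ir, registers=("r0", "r1", "r2", "r3")):
    """Translate the toy IR into text for a hypothetical register machine."""
    free = list(registers)
    location = {}  # IR name -> register currently holding its value
    lines = []

    def allocate(name):
        # Naive allocation: hand out registers in order and never reuse them.
        if not free:
            raise RuntimeError("out of registers; a real backend would spill to memory")
        location[name] = free.pop(0)
        return location[name]

    def operand(value):
        if isinstance(value, int):
            return f"#{value}"  # integer literals become immediates
        if value not in location:
            # First use of an input variable: load it from memory.
            register = allocate(value)
            lines.append(f"load {register}, {value}")
        return location[value]

    for inst in ir:
        if inst[0] == "ret":
            result = operand(inst[1])
            lines.append(f"ret {result}")
        else:
            op, dest, left, right = inst
            lhs = operand(left)
            rhs = operand(right)
            lines.append(f"{op} {allocate(dest)}, {lhs}, {rhs}")
    return lines


for line in emit_assembly([("add", "t1", "a", 6), ("ret", "t1")]):
    print(line)
```

Even in this tiny version, the backend has to know facts about the target that the IR does not carry, such as how many registers exist. Shrinking the `registers` tuple makes the same IR fail to compile, which is the kind of constraint a real backend handles by spilling values to memory.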

Compiler efficiency

The faster the generated code executes, the better the performance.

The efficiency of the compiler depends on how it selects instructions, allocates registers, and schedules instruction execution on the given architecture. An instruction set is the set of operations supported by a processor, and its overall design is called an Instruction Set Architecture (ISA). The ISA is an abstract model of a computer and is often referred to as the computer architecture. Different processors implement the same ISA in different ways, and these implementations may vary in performance. The ISA is the interface between the hardware and the software.

If you are implementing a new programming language and want it to run on different architectures (or, more abstractly, different processors), you have to build a backend for each of these architectures/targets. Building a backend for every architecture is difficult, and the time, cost, and effort involved make it daunting to embark on a language creation journey.

What if we create a common IR and build a compiler that converts this IR into machine code that runs efficiently on various architectures? Let's call this compiler a low-level virtual machine. The role of your frontend in the compiler chain is then just to convert the source code into an IR that the low-level virtual machine understands. The low-level virtual machine acts as a common, reusable component that maps the IR into native code for various targets, but it only understands the common IR. This IR is called the LLVM IR, and the compiler is called LLVM.
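The payoff of a common IR can be sketched with a small dispatch table. In the hypothetical example below, one toy IR program is handed to two made-up targets, a stack machine and a register machine. The IR format, target names, and output syntax are all invented for illustration and are unrelated to LLVM's actual IR or APIs.

```python
# Toy IR (hypothetical): (op, dest, left, right) and ("ret", value).

def emit_stack(ir):
    """Backend for an imaginary stack machine."""
    lines = []
    for inst in ir:
        if inst[0] == "ret":
            lines += [f"push {inst[1]}", "ret"]
        else:
            op, dest, left, right = inst
            lines += [f"push {left}", f"push {right}", op, f"pop {dest}"]
    return lines


def emit_register(ir):
    """Backend for an imaginary three-operand register machine."""
    lines = []
    for inst in ir:
        if inst[0] == "ret":
            lines.append(f"ret {inst[1]}")
        else:
            op, dest, left, right = inst
            lines.append(f"{op} {dest}, {left}, {right}")
    return lines


# Supporting a new target means adding one entry here; no frontend changes.
TARGETS = {"stack": emit_stack, "register": emit_register}


def compile_ir(ir, target):
    """Lower the shared IR to the requested target."""
    return TARGETS[target](ir)


program = [("add", "t0", "a", 6), ("ret", "t0")]
for target in TARGETS:
    print(target, compile_ir(program, target))
```

With M source languages and N targets, this arrangement needs M frontends plus N backends rather than M × N separate compilers, because every frontend only has to produce the shared IR and every backend only has to consume it.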
