Container as a Service (CaaS) became popular with the release of Docker in 2013. It made it easy to build and deploy containerized applications on on-premise data centers or over the cloud.

Containers changed the unit of scale for DevOps and site reliability engineers. Instead of one dedicated VM per application, multiple containers can run on a single virtual machine, which allows better server utilization and reduces costs. Also, it brings developer and operation teams closer together by eliminating the "worked on my machine" joke. This transition to containers has allowed multiple companies to modernize their legacy applications and move them to cloud.

To achieve fault-tolerance, high-availability, and scalability, an orchestrations tool, such as Docker Swarm, Kubernetes, or Apache Mesos, was needed to manage containers in a cluster of nodes. As a result, CaaS was introduced to build, ship, and run containers quickly and efficiently. It also handles heavy tasks, such as cluster management, scaling, blue/green deployment, canary updates, and rollbacks.

The most popular CaaS platform in the market today is AWS as 57% of the Kubernetes workload is running on Amazon Elastic Container Service (ECS), Elastic Kubernetes Service (EKS), and AWS Fargate, followed by Docker Cloud, CloudFoundry, and Google Container Engine.

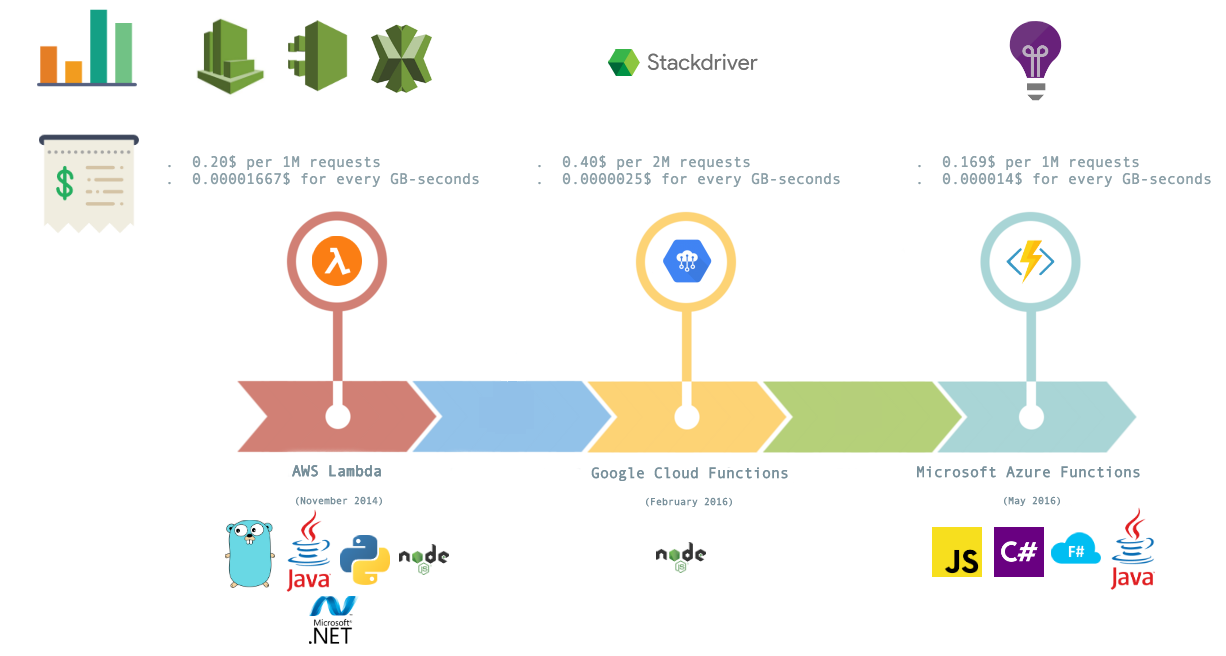

This model, CaaS, enables you to split your virtual machines further to achieve higher utilization and orchestrate containers across a cluster of machines, but the cloud user still needs to manage the life cycle of containers; as a solution to this, Function as a Service (FaaS) was introduced.

Free Chapter

Free Chapter