If we have trained a simple ensemble model that performs noticeably better than the baseline model and reaches the performance level we estimated during data preparation, we can move on to optimization. This is a point we really want to emphasize: it's strongly discouraged to begin model optimization and stacking when a simple ensemble technique fails to deliver useful results. If this is the case, it is much better to take a step back and dive deeper into data analysis and feature engineering.

Common ML optimization techniques, such as hyperparameter optimization, model stacking, and even automated machine learning, help you squeeze out the last 10% or so of performance, while the other 90% typically comes from a single ensemble model. If you decide to use any of these optimization techniques, it is advisable to run them fully automated and in parallel on a distributed cluster.

After seeing too many ML practitioners manually parameterizing, tuning, and stacking models, we want to stress an important message: training and optimizing ML models is boring. It should rarely be done manually, because it is much faster to run it automatically as an end-to-end optimization process. Most of your time and effort should go into experimentation, data preparation, and feature engineering, that is, everything that cannot easily be automated and optimized with raw compute power. When investing time to improve model performance, the best places to dig deeper are prior knowledge about the data, an understanding of the ML use case, and business insights.

Hyperparameter optimization

Once you have achieved reasonable performance using a simple single model with default parameters, you can move on to optimizing the model's hyperparameters. Because multiple parameters interact and the number of combinations grows quickly, it doesn't make much sense to waste time tuning them by hand. Instead, tuning should always be performed as an automated search, which typically finds configurations with better cross-validation performance than manual tuning.

First, you need to define the search space and sampling distribution for each tunable hyperparameter. Each parameter's range is either continuous or categorical and is sampled from a chosen distribution; for example, uniform, logarithmic (log-uniform), or normal. This is usually done by generating a parameter configuration with a hyperparameter optimization library.
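As a minimal, dependency-free sketch, the following Python code defines a search space that mixes continuous parameters (sampled uniformly, log-uniformly, and from a normal distribution) with categorical ones, and draws one configuration from it. The parameter names, ranges, and the helper functions `uniform`, `loguniform`, `normal`, and `choice` are hypothetical; real hyperparameter optimization libraries provide their own equivalents with their own signatures.

```python
import math
import random

# Hypothetical sampler helpers: each returns a function that draws one value.
def uniform(low, high):
    return lambda rng: rng.uniform(low, high)

def loguniform(low, high):
    # Sample uniformly in log space, so each order of magnitude is equally likely
    return lambda rng: math.exp(rng.uniform(math.log(low), math.log(high)))

def normal(mu, sigma):
    return lambda rng: rng.gauss(mu, sigma)

def choice(*options):
    return lambda rng: rng.choice(options)

# Hypothetical search space; names and ranges are illustrative only
SEARCH_SPACE = {
    "learning_rate": loguniform(1e-4, 1e-1),  # continuous, logarithmic
    "subsample": uniform(0.5, 1.0),           # continuous, uniform
    "momentum": normal(0.9, 0.02),            # continuous, normal
    "max_depth": choice(3, 5, 7, 9),          # categorical
    "n_estimators": choice(100, 200, 500),    # categorical
}

def sample_configuration(space, rng):
    """Draw one parameter configuration from the search space."""
    return {name: sampler(rng) for name, sampler in space.items()}

rng = random.Random(42)
print(sample_configuration(SEARCH_SPACE, rng))
```

The log-uniform sampler is the usual choice for scale-type parameters such as learning rates, because it spends as many samples between 0.0001 and 0.001 as between 0.01 and 0.1.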

Next, you need to decide how parameters are sampled from the search space. The three most common sampling and optimization techniques are the following:

- Grid sampling

- Random sampling

- Bayesian optimization

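Grid sampling evaluates every combination of values on a fixed grid, and random sampling draws configurations at random from the search space. Bayesian optimization performs a more intelligent search: it uses the results of previous trials to choose the most promising configuration to evaluate next. In practice, random and Bayesian sampling are used most often.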
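The difference between grid and random sampling can be sketched in a few lines of Python. The grid, the ranges, and the function names are hypothetical; Bayesian optimization is not shown because it needs a surrogate model of the objective and is better left to a library. With the same budget of nine trials, grid sampling only ever tries three distinct learning rates, while random sampling tries a new value in every trial.

```python
import itertools
import random

# Hypothetical grid: 3 values per parameter -> 3 x 3 = 9 configurations
GRID = {
    "max_depth": [3, 5, 7],
    "learning_rate": [0.01, 0.1, 0.3],
}

def grid_sampling(grid):
    """Yield every combination of grid values (the Cartesian product)."""
    names = list(grid)
    for values in itertools.product(*(grid[name] for name in names)):
        yield dict(zip(names, values))

def random_sampling(n_samples, seed=0):
    """Yield n_samples configurations drawn independently at random.

    The ranges match the grid's bounds, but values between grid points
    can be drawn as well.
    """
    rng = random.Random(seed)
    for _ in range(n_samples):
        yield {
            "max_depth": rng.randint(3, 7),
            "learning_rate": rng.uniform(0.01, 0.3),
        }

grid_configs = list(grid_sampling(GRID))
random_configs = list(random_sampling(n_samples=len(grid_configs)))

print(len(grid_configs), len(random_configs))  # 9 9
print(sorted({c["learning_rate"] for c in grid_configs}))  # [0.01, 0.1, 0.3]
# Number of distinct learning rates tried by random sampling
print(len({c["learning_rate"] for c in random_configs}))
```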

Note

To avoid spending unnecessary compute time on poorly performing parameter configurations, it is recommended to define early stopping criteria when using hyperparameter optimization.
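One common kind of early stopping criterion compares a running trial against its peers. The sketch below implements a simplified median stopping rule in Python: after a short delay, a run is stopped if its best metric so far is worse than the median of the other runs' running averages at the same step. It is inspired by median stopping policies such as the one HyperDrive offers, but it is an illustrative assumption, not that service's exact implementation; the run names and metric values are made up.

```python
import statistics

def should_stop(history, run_id, step, delay=2):
    """Simplified median stopping rule (higher metric = better).

    history: dict mapping run id -> list of metric values, one per reported step.
    A run is stopped at `step` if its best value so far is worse than the
    median of the other runs' running averages up to the same step.
    """
    if step < delay:  # give every run a few steps before judging it
        return False
    best_so_far = max(history[run_id][: step + 1])
    peer_averages = [
        statistics.mean(values[: step + 1])
        for other_id, values in history.items()
        if other_id != run_id and len(values) > step
    ]
    if not peer_averages:
        return False
    return best_so_far < statistics.median(peer_averages)

# Hypothetical validation accuracy reported by three runs after each epoch
history = {
    "run_a": [0.60, 0.70, 0.75, 0.78],
    "run_b": [0.62, 0.72, 0.76, 0.80],
    "run_c": [0.40, 0.42, 0.43, 0.44],
}
for run_id in history:
    print(run_id, should_stop(history, run_id, step=2))
# run_a False
# run_b False
# run_c True
```

The `delay` parameter matters in practice: judging runs on their very first steps would terminate slow starters that might still converge to good results.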

Training many combinations of different parameter sets is computationally expensive. Hence, it is strongly recommended to parallelize this task across multiple machines and to track all parameter combinations and their cross-validation performance in a central location. A highly scalable cloud computing environment, where these tasks run automatically, is particularly well suited to this. In Azure Machine Learning, you can use the HyperDrive functionality to do exactly this. We will look at this in great detail in Chapter 9, Hyperparameter tuning and Automated Machine Learning.
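The following Python sketch runs a batch of randomly sampled trials concurrently and records every configuration with its score in one central list. A thread pool on a single machine is used only to keep the example self-contained: `train_and_evaluate` is a hypothetical stand-in for real training plus cross-validation, and in a real setup the trials would run on separate cluster nodes (for example, through HyperDrive), because threads give little speedup for CPU-bound Python code.

```python
import concurrent.futures
import random

def train_and_evaluate(config):
    """Hypothetical stand-in for training a model and returning its CV score.

    This toy objective peaks at learning_rate=0.1 and max_depth=5.
    """
    return (1.0
            - (config["learning_rate"] - 0.1) ** 2
            - 0.01 * (config["max_depth"] - 5) ** 2)

def run_search(n_trials=20, max_workers=4, seed=0):
    """Evaluate randomly sampled configurations concurrently and track them all."""
    rng = random.Random(seed)
    configs = [
        {"learning_rate": rng.uniform(0.001, 0.3), "max_depth": rng.randint(2, 10)}
        for _ in range(n_trials)
    ]
    results = []  # central record of every configuration and its score
    with concurrent.futures.ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(train_and_evaluate, cfg): cfg for cfg in configs}
        for future in concurrent.futures.as_completed(futures):
            results.append({"config": futures[future], "score": future.result()})
    best = max(results, key=lambda r: r["score"])
    return results, best

results, best = run_search()
print(f"{len(results)} trials tracked, best: {best}")
```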

Model stacking

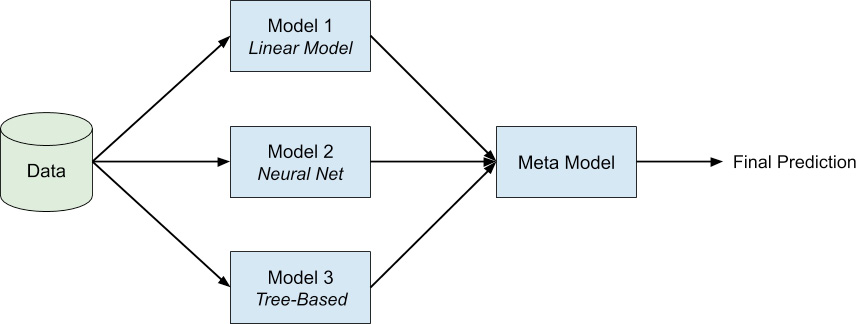

Model stacking is a very common technique used to improve prediction performance by putting a combination of multiple models into a single stacked model. Hence, the output of each model is fed into a meta-model, which itself is trained through cross-validation and hyperparameter tuning. By combining significantly different models into a single stacked model, you can always outperform a single model.

Figure 1.9 shows a stacked model consisting of different supervised models in level 1 that feed their output into another meta-model. This is a common architecture that further boosts prediction performance once all the feature engineering and model tuning options are fully exploited:

Figure 1.9: Model stacking

Model stacking adds a lot of complexity to your ML process, although it usually leads to better performance. Typically, this technique squeezes out only the last 1% or so of performance gain from your algorithm. To stack models into a meta-ensemble efficiently, it is recommended to fully automate the process; for example, with a service such as Azure Automated Machine Learning. Be aware, however, that you can easily overfit the training data or create stacked models that are orders of magnitude larger than single models.
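To make the stacking idea concrete, here is a small, dependency-free Python sketch of a stacked regressor. The two base learners (a least-squares line and a k-nearest-neighbour regressor), the five interleaved folds, the synthetic quadratic data, and the no-intercept linear meta-model are all illustrative assumptions. The key detail is that the meta-model is trained on out-of-fold predictions, so it never learns from predictions that the base models made on their own training rows; this is one common guard against the overfitting risk described above. In practice, you would use a library implementation such as scikit-learn's `StackingRegressor`, or let Azure Automated Machine Learning build the stack ensemble.

```python
import random

def fit_linear(xs, ys):
    """Base model 1: ordinary least-squares line y = a*x + b."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx if sxx else 0.0
    b = mean_y - a * mean_x
    return lambda x: a * x + b

def fit_knn(xs, ys, k=3):
    """Base model 2: k-nearest-neighbour regressor (mean of the k closest targets)."""
    train = list(zip(xs, ys))
    def predict(x):
        nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / len(nearest)
    return predict

BASE_LEARNERS = [fit_linear, fit_knn]

def out_of_fold_predictions(xs, ys, n_folds=5):
    """Level-1 features: every row is predicted by base models that never saw it."""
    oof = [[0.0] * len(BASE_LEARNERS) for _ in xs]
    for fold in range(n_folds):
        train_idx = [i for i in range(len(xs)) if i % n_folds != fold]
        tx = [xs[i] for i in train_idx]
        ty = [ys[i] for i in train_idx]
        for j, fit in enumerate(BASE_LEARNERS):
            model = fit(tx, ty)
            for i in range(fold, len(xs), n_folds):  # held-out rows of this fold
                oof[i][j] = model(xs[i])
    return oof

def fit_meta(features, ys):
    """Meta-model: least-squares weights (w1, w2) for the two base predictions,
    solved from the 2x2 normal equations (no intercept, for brevity)."""
    s11 = sum(f[0] * f[0] for f in features)
    s12 = sum(f[0] * f[1] for f in features)
    s22 = sum(f[1] * f[1] for f in features)
    t1 = sum(f[0] * y for f, y in zip(features, ys))
    t2 = sum(f[1] * y for f, y in zip(features, ys))
    det = s11 * s22 - s12 * s12
    return (t1 * s22 - t2 * s12) / det, (s11 * t2 - s12 * t1) / det

def fit_stack(xs, ys):
    """Train the meta-model on out-of-fold predictions, then refit base models on all data."""
    weights = fit_meta(out_of_fold_predictions(xs, ys), ys)
    base_models = [fit(xs, ys) for fit in BASE_LEARNERS]
    predict = lambda x: sum(w * m(x) for w, m in zip(weights, base_models))
    return predict, weights

def mse(preds, ys):
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)

# Synthetic, hypothetical data: a noisy parabola
rng = random.Random(0)
xs = [i / 10 for i in range(-30, 31)]
ys = [x * x + rng.gauss(0, 0.3) for x in xs]

stacked, weights = fit_stack(xs, ys)
print("meta-model weights:", weights)
print("stacked prediction at x=1.5:", stacked(1.5))
```

Because the least-squares meta-model could always pick the weights (1, 0) or (0, 1), its out-of-fold error is never worse than that of either base model alone; a gain on truly unseen data, however, is not guaranteed.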

Azure Automated Machine Learning

As we have shown, constructing ML models is a complex, step-by-step process that requires many different skills, such as domain knowledge (prior knowledge that gives you insight into the data), mathematical expertise, and computer science skills. This process is still subject to human error and bias, which might affect not only the model's performance and accuracy but also the insights that you want to gain from it.

Azure Automated Machine Learning can combine and automate all of these steps, reducing the time to value. Across several industries, automated machine learning makes ML and AI technology easier to apply by automating manual modeling tasks, so that data scientists can focus on more complex problems. Azure Automated Machine Learning is particularly useful for repetitive ML tasks, such as data preprocessing, feature engineering, model selection, parameter tuning, and model stacking.

We will go into much more detail and see real-world examples in Chapter 9, Hyperparameter tuning and Automated Machine Learning.
