The architecture of different neural networks

Neural networks come in various types, each with a specific architecture suited to a different kind of task. The following list contains general descriptions of some of the most common types:

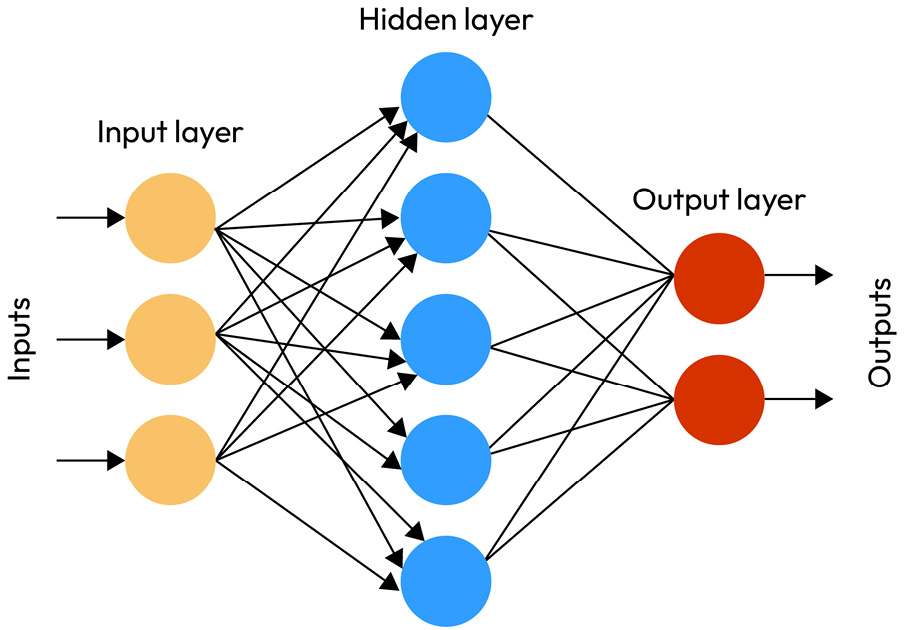

- Feedforward neural network (FNN): This is the most straightforward type of neural network. Information in this network moves in one direction only, from the input layer through any hidden layers to the output layer. There are no cycles or loops in the network; it’s a straight, “feedforward” path.

Figure 6.2 – Feedforward neural network

- Multilayer perceptron (MLP): An MLP is a type of feedforward network that has at least one hidden layer in addition to its input and output layers. The layers are fully connected, meaning each neuron in one layer is connected to every neuron in the next layer. Because their hidden layers apply nonlinear activation functions, MLPs can model complex, nonlinear patterns and are widely used for tasks such as image recognition...
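To make the one-way flow and the "fully connected" idea concrete, here is a minimal sketch of a forward pass through a small MLP, which is also a feedforward network, in plain Python. The layer sizes, the ReLU hidden activation, the sigmoid output, the random initialization, and the helper names (`dense`, `relu`, `sigmoid`, `init_params`, `mlp_forward`) are illustrative assumptions rather than anything specified in the text. The sketch covers only the forward computation, not training.

```python
import math
import random


def dense(inputs, weights, biases):
    # Fully connected layer: output neuron j computes
    # sum_i weights[j][i] * inputs[i] + biases[j], so every input feeds every output neuron.
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values):
    # Nonlinear activation applied element-wise to the hidden layer.
    return [max(0.0, v) for v in values]


def sigmoid(values):
    # Squashes each output value into the interval (0, 1).
    return [1.0 / (1.0 + math.exp(-v)) for v in values]


def init_params(n_in, n_hidden, n_out, seed=0):
    # Hypothetical initialization: uniform random weights in [-1, 1], zero biases.
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

    return {
        "W1": matrix(n_hidden, n_in), "b1": [0.0] * n_hidden,
        "W2": matrix(n_out, n_hidden), "b2": [0.0] * n_out,
    }


def mlp_forward(x, params):
    # Information flows one way only: input -> hidden -> output, with no loops back.
    hidden = relu(dense(x, params["W1"], params["b1"]))
    return sigmoid(dense(hidden, params["W2"], params["b2"]))


if __name__ == "__main__":
    params = init_params(n_in=3, n_hidden=4, n_out=2)
    print(mlp_forward([0.5, -1.0, 2.0], params))  # two values, each between 0 and 1
```

Because `mlp_forward` calls its layers strictly in sequence and never feeds an output back into an earlier layer, the computation has no cycles, which is exactly the feedforward property. Plain lists are used only to keep the sketch dependency-free; in practice a library such as NumPy or a deep learning framework would vectorize these loops as matrix multiplications.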