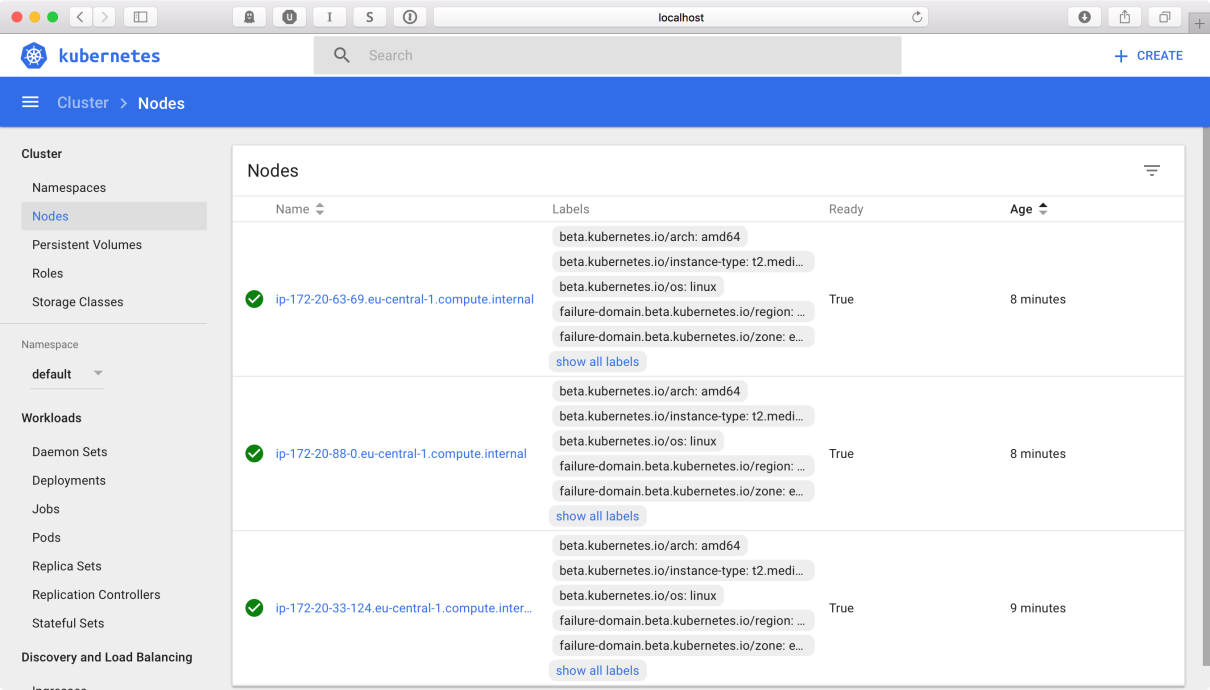

A running instance of an image is called a container. Docker launches containers using images as read-only templates. If you start an image, you have a running container of this image. Naturally, you can have many running containers of the same image. In fact, we will do this often later on, using Kubernetes.

To run a container, we use the docker run command:

docker run [OPTIONS] IMAGE [COMMAND] [ARG...]

The run command accepts many options and switches; we will get to know them later on. Some of them configure networking, for example (we will explain Docker's networking concepts in Chapter 2, Networking and Persistent Storage). Others, such as -it (the -i, interactive, and -t, terminal, switches combined), tell the Docker engine to behave differently; in this case, to make the container interactive and to attach a terminal to its output and input. For now, let's focus on the idea of the container to better understand the whole picture. We are going to use the docker run command in a short while to test our setup.
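To make the synopsis above concrete, here is a minimal Python sketch that only assembles the argument list in the order `docker run [OPTIONS] IMAGE [COMMAND] [ARG...]`. It does not start anything. The function name `build_run_command` is hypothetical, and `ubuntu` is just an example image name.

```python
from typing import List, Optional, Sequence


def build_run_command(
    image: str,
    command: Optional[str] = None,
    args: Sequence[str] = (),
    options: Sequence[str] = (),
) -> List[str]:
    """Assemble argv in the order: docker run [OPTIONS] IMAGE [COMMAND] [ARG...]."""
    argv = ["docker", "run", *options, image]
    if command is not None:
        # COMMAND and its ARGs always come after the image name.
        argv.append(command)
        argv.extend(args)
    return argv


# An interactive shell: -i keeps input open, -t attaches a terminal.
interactive = build_run_command("ubuntu", "bash", options=["-i", "-t"])
print(" ".join(interactive))  # docker run -i -t ubuntu bash
```

The point of the sketch is the ordering: everything before the image name is read as an option for Docker, everything after it is handed to the container.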

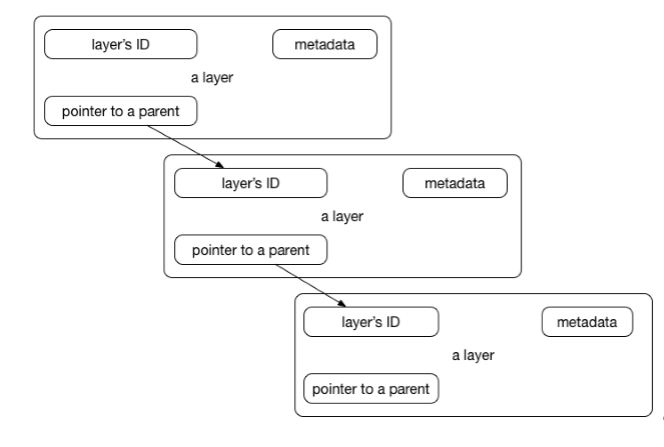

So, what happens under the hood when we run the docker run command? Docker first checks whether the image you would like to run is available on your local machine. If not, it is pulled down from the remote repository. The Docker engine then takes the image and adds a writable layer on top of the image's layer stack. Next, it initializes the container's name, ID, and resource limits, such as CPU and memory. In this phase, Docker also sets up the container's IP address by finding and attaching an available address from a pool. The last step is to execute the actual command, passed as the last parameter of the docker run command. If the -it option has been used, Docker captures the container's output and displays it in the console.

You can now do the things you would normally do when preparing an operating system to run your applications: installing packages (via apt-get, for example), pulling source code with Git, building your Java application using Maven, and so on. All of these actions modify the filesystem in the top, writable layer. If you then execute the commit command, a new image containing all of your changes is created, frozen and ready to be run later.

To stop a container, use the docker stop command:

docker stop <container-id>

A stopped container retains all of its settings and filesystem changes (in the writable top layer). All processes running in the container are stopped, and everything held in memory is lost. This is what differentiates a stopped container from a Docker image.
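The difference between what survives a stop and what does not can be pictured with a toy model. This is only an illustration in Python, not how Docker is implemented; the class `ToyContainer` and its fields are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ToyContainer:
    """Hypothetical model: filesystem changes persist, memory does not."""

    writable_layer: Dict[str, str] = field(default_factory=dict)
    memory: Dict[str, str] = field(default_factory=dict)
    running: bool = False

    def start(self) -> None:
        self.running = True

    def stop(self) -> None:
        # Processes end, so whatever they held in memory is gone...
        self.memory.clear()
        # ...while the writable layer is left untouched.
        self.running = False


c = ToyContainer()
c.start()
c.writable_layer["/etc/app.conf"] = "port=8080"  # a change written to disk
c.memory["session"] = "abc123"                   # state held by a process
c.stop()

print(c.writable_layer)  # {'/etc/app.conf': 'port=8080'}
print(c.memory)          # {}
```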

To list all containers you have on your system, either running or stopped, execute the docker ps command with the -a switch (without it, only running containers are listed):

docker ps -a

As a result, the Docker client will print a table containing container IDs (a unique identifier you can use to refer to the container in other commands), creation date, the command used to start a container, status, exposed ports, and a name, either assigned by you or the funny name Docker has picked for you. To remove a container, you can just use the docker rm command. If you want to remove several of them at once, you can combine the list of container IDs (given by the docker ps command with the -q switch) with a filter, here selecting only the containers that have exited:

docker rm $(docker ps -a -q -f status=exited)
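The same two-step cleanup can be sketched in Python by calling the Docker client through `subprocess`. This is a minimal sketch that assumes the `docker` executable is on the PATH; the helper names `run_docker` and `remove_exited` are hypothetical. Unlike the one-liner, it skips `docker rm` when no container matches, because `docker rm` requires at least one argument.

```python
import subprocess
from typing import Callable, List


def run_docker(argv: List[str]) -> str:
    """Run a docker CLI command and return its standard output."""
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return result.stdout


def remove_exited(run: Callable[[List[str]], str] = run_docker) -> List[str]:
    """Remove every exited container; return the IDs passed to docker rm."""
    # Step 1: the same listing as `docker ps -a -q -f status=exited`,
    # which prints one container ID per line.
    ids = run(["docker", "ps", "-a", "-q", "-f", "status=exited"]).split()
    # Step 2: only call `docker rm` when there is something to remove.
    if ids:
        run(["docker", "rm", *ids])
    return ids
```

Calling `remove_exited()` with no arguments talks to the real Docker client. Passing a stub function as `run` lets you see which commands would be issued without touching any container.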

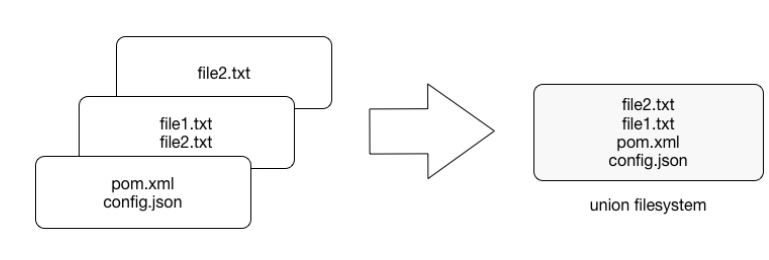

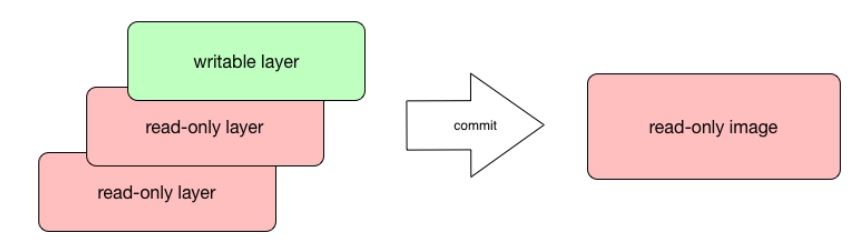

We have said that a Docker image is always read-only and immutable. If we had no way to change an image, it would not be very useful. So how is image modification possible, other than, of course, by altering a Dockerfile and doing a rebuild? When a container is started, the writable layer on top of the layer stack is at our disposal. We can make changes to a running container, such as adding or modifying files, installing a software package, configuring the operating system, and so on. Our changes are only possible in the top layer: if you modify a file in the running container, the file is copied from the underlying (parent) read-only layer into the top, writable layer, and the change is applied to that copy. The union filesystem then hides the underlying file behind the modified copy. The original file is not modified; it still exists safely in the underlying, read-only layer. By issuing the docker commit command, you create a new read-only image from a running container (and all its changes in the writable layer):

docker commit <container-id> <image-name>

The docker commit command saves the changes you have made to the container in the writable layer. To avoid data corruption or inconsistency, Docker pauses the container while it is being committed. The result of the docker commit command is a brand new, read-only image, which you can create new containers from.

In response to a successful commit, Docker will output the full ID of a newly generated image. If you remove the container without issuing a commit first and then relaunch the same image again, Docker will start a fresh container without any of the changes made in the previously running container. In either case, with or without a commit, your changes to the filesystem will never affect the base image. Creating images by altering the top writable layer in the container is useful when debugging and experimenting, but it's usually better to use a Dockerfile to manage your images in a documented and maintainable way.
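The behavior described above (writes land in the top layer, the base image never changes, and a commit freezes the top layer into a new image) can be illustrated with a small Python model. It is a toy sketch of the semantics only, not of Docker's storage drivers; the names `ToyImage` and `LayeredContainer` are hypothetical.

```python
from types import MappingProxyType
from typing import Dict, Mapping, Tuple

Layer = Mapping[str, str]  # path -> file content


class ToyImage:
    """An immutable stack of read-only layers, bottom layer first."""

    def __init__(self, layers: Tuple[Layer, ...]):
        # Copy each layer and wrap it in a read-only view.
        self.layers = tuple(MappingProxyType(dict(layer)) for layer in layers)


class LayeredContainer:
    """The image's layers plus one writable layer on top."""

    def __init__(self, image: ToyImage):
        self.image = image
        self.top: Dict[str, str] = {}

    def read(self, path: str) -> str:
        # A file in the top layer hides any file with the same path below it.
        if path in self.top:
            return self.top[path]
        for layer in reversed(self.image.layers):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path: str, content: str) -> None:
        # Changes only ever land in the writable layer.
        self.top[path] = content

    def commit(self) -> ToyImage:
        # Freeze the writable layer as the newest read-only layer of a new image.
        return ToyImage(self.image.layers + (self.top,))


base = ToyImage(({"/etc/motd": "hello"},))
c1 = LayeredContainer(base)
c1.write("/etc/motd", "changed")
derived = c1.commit()

print(c1.read("/etc/motd"))                         # changed
print(LayeredContainer(base).read("/etc/motd"))     # hello
print(LayeredContainer(derived).read("/etc/motd"))  # changed
```

A fresh container created from `base` still sees the original file, while a container created from `derived` sees the committed change, which mirrors the two cases described in the text.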

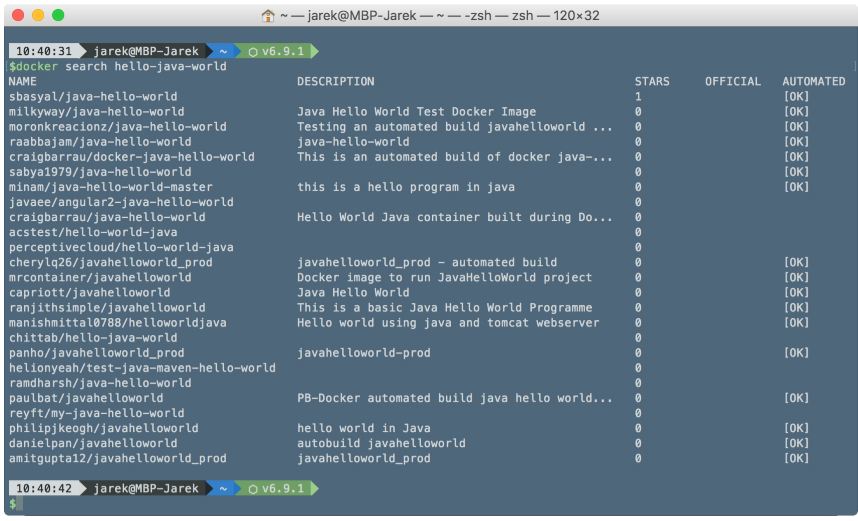

We have now learned about the build (Dockerfile and the image) and runtime (container) pieces of our containerization world. We are still missing the last element, the distribution component. The distribution component of Docker consists of the Docker registry, index, and repository. Let's focus on them now to have a complete picture.
