To log into an existing database repository, follow these instructions:

Launch Spoon.

If the repository dialog window doesn't show up, select Tools | Repository | Connect... from the main menu. The repository dialog window appears.

In the list, select the repository you want to log into.

Type your username and password. If you didn't create a user, use the default

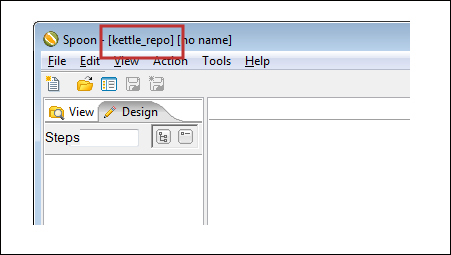

admin/admin, and click on OK.You are logged into the repository. You will see the name of the repository in the upper-left corner of Spoon:

In the preceding section, you opened Spoon and logged into a database repository.

If you want to work with the repository storage system, you have to log in to the repository before starting to work. In order to do that, you have to choose the repository and provide a repository username and password.

The repository dialog that allows you to log into the repository can be opened from the main Spoon menu. If you intend to log into the repository often, select Tools | Options... and check the general option Show repository dialog at startup?, which causes the repository dialog to show up every time you launch Spoon.

It is possible to log into the repository automatically. Suppose you have a repository named MY_REPO, and you use the default user. By adding the following lines to the kettle.properties file, the next time you launch Spoon you will log into the repository automatically:

KETTLE_REPOSITORY=MY_REPO
KETTLE_USER=admin
KETTLE_PASSWORD=admin

Tip

For details on the kettle.properties file, refer to the Kettle variables section in Chapter 3, Manipulating Real-world Data.

As a final note, take into account that with this setup the login information, including the password, is exposed in the kettle.properties file; for that reason, autologin is not recommended.
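The autologin settings amount to three key/value pairs. As a minimal sketch, assuming kettle.properties is a plain `key=value` text file, the following Python helper adds or updates those pairs in the file's text. The function name `with_autologin` is hypothetical and not part of PDI, and because the password ends up in clear text, this is only reasonable for throwaway environments.

```python
def with_autologin(properties_text, repository, user, password):
    """Return properties_text with the three autologin keys set.

    Hypothetical helper, not part of PDI: existing values for these keys
    are replaced, and every other line is kept untouched.
    """
    wanted = {
        "KETTLE_REPOSITORY": repository,
        "KETTLE_USER": user,
        "KETTLE_PASSWORD": password,  # stored in clear text
    }
    kept = []
    for line in properties_text.splitlines():
        key = line.split("=", 1)[0].strip()
        if key not in wanted:
            kept.append(line)
    # Append the autologin keys after the lines that were kept.
    kept.extend(f"{key}={value}" for key, value in wanted.items())
    return "\n".join(kept) + "\n"


if __name__ == "__main__":
    print(with_autologin("SOME_VAR=1\n", "MY_REPO", "admin", "admin"), end="")
```

Working on the text rather than on the file itself keeps the sketch independent of where your kettle.properties file is located.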

In a repository, the jobs and transformations are organized in folders. A folder in a repository fulfills the same purpose as a folder on your drive: it allows you to keep your work organized. Once you create a folder, you can save both transformations and jobs in it.

While connected to a repository, you can design, preview, and run jobs and transformations just as you do with files. However, there are some differences when it comes to opening, creating, or saving your work. So let's summarize how you do those tasks when logged into a repository:

Besides jobs and transformations, there are some additional PDI elements that you can define:

| Element | Description |
|---|---|
| Connections | This element defines connections to relational databases. These are covered in Chapter 8, Working with Databases. |
| Security | This element provides security to the users. They are needed to log into the repository. There are two predefined users: |

You can also define some elements not covered in this book, but worth mentioning:

| Element | Description |
|---|---|
| Slaves | Slave servers are servers installed on remote machines to execute jobs and transformations remotely. |
| Partitions | Partitioning is a mechanism by which you can send individual rows to different copies of the same step, for example, based on a field value. |
| Clusters | A cluster is a group of slave servers that collectively execute a job or a transformation. |
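To make the partitioning idea more concrete, here is a small Python sketch of routing rows to step copies based on a field value. It is an illustration only, not how PDI implements partitioning: the function names, the CRC-based hashing, and the sample rows are all assumptions made for the example.

```python
import zlib


def copy_for(value, copies):
    """Pick a step copy (0..copies-1) from a field value, deterministically."""
    return zlib.crc32(str(value).encode("utf-8")) % copies


def partition(rows, field, copies):
    """Group rows by the copy that would receive them."""
    buckets = [[] for _ in range(copies)]
    for row in rows:
        buckets[copy_for(row[field], copies)].append(row)
    return buckets


if __name__ == "__main__":
    # Made-up rows; rows with the same country always land in the same copy.
    rows = [{"country": c} for c in ["AR", "BR", "AR", "CL", "BR"]]
    for number, bucket in enumerate(partition(rows, "country", 2)):
        print(number, bucket)
```

The point of the sketch is that all rows sharing a field value reach the same copy, which is what lets each copy work on its own subset of the data.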

All the elements can also be created, modified, and deleted from the repository explorer. Once you create any of these elements, they are automatically shared by all repository users.

You shouldn't have any difficulty in designing jobs and transformations under a database repository-based method. The way you work is exactly the same as the way you learned through all the chapters while working under the file-based method. The main change, besides the way you open and save your work, is the way you refer to other jobs and transformations. Here you have a list of the main situations:

You may regularly back up your database repository in the same way as you would do with any database. You do it by using the utilities provided by the RDBMS, for example, mysqldump in MySQL. However, PDI offers you a method for creating a backup in an XML file.
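Before turning to the XML export, note that the RDBMS route can be scripted. The sketch below, in Python, builds a mysqldump command line for a repository stored in MySQL. The database name `pdi_repo`, the user, and the output file name are hypothetical, and the password is expected to come from MySQL's own mechanisms (for example, an option file) rather than from the command line.

```python
import subprocess


def mysqldump_command(database, user, output_file, host="localhost"):
    """Build the argument list for dumping one MySQL database to a file."""
    return [
        "mysqldump",
        f"--host={host}",
        f"--user={user}",
        "--single-transaction",  # consistent snapshot for InnoDB tables
        f"--result-file={output_file}",
        database,
    ]


def back_up(database, user, output_file):
    """Run mysqldump; raises CalledProcessError if the dump fails."""
    subprocess.run(mysqldump_command(database, user, output_file), check=True)


if __name__ == "__main__":
    # Hypothetical names; replace them with your own repository database and user.
    print(" ".join(mysqldump_command("pdi_repo", "pdi_user", "pdi_repo_backup.sql")))
```

Building the command as a list keeps it easy to inspect before actually running it with `back_up`.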

You create a backup from the Tools | Repository | Export Repository... option. You will be asked for the name and location of the XML file that will contain the backup data. To back up a single folder, open the Repository explorer and right-click on the name of the folder.

You restore a backup from the Tools | Repository | Import Repository... option. You will be asked for the name and location of the XML file that contains the backup.
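After an export, it can be worth checking that the backup file is at least well-formed XML before relying on it. The following Python sketch does only that: it makes no assumption about the structure of the exported file, and the function name is made up for the example.

```python
import xml.etree.ElementTree as ET


def root_tag_if_well_formed(source):
    """Return the root element's tag, or None if the XML is not well-formed.

    `source` may be a file name or an open file object.
    """
    try:
        return ET.parse(source).getroot().tag
    except ET.ParseError:
        return None
```

A `None` result means the file could not be parsed and should not be trusted as a backup; any other result only tells you the file is parseable, not that it is complete.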

Both in the export and the import operations, Kettle offers you the possibility to apply some rules to make sure that the transformations and jobs adhere to the given standards.

As an example, suppose that you want to make sure that there are no disabled hops in your jobs. One of the rules that Kettle offers does this verification; the name of the rule is 'Job has no disabled hops'. The list of available rules is quite limited but still useful for meeting some basic requirements.
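To illustrate what such a rule verifies, here is a rough Python sketch that looks for disabled hops in a job's XML. It is not Kettle's implementation of the rule. It assumes that each hop appears as a `hop` element with `from`, `to`, and `enabled` children, where `enabled` is `N` for a disabled hop, and the sample XML is made up for the example.

```python
import xml.etree.ElementTree as ET


def disabled_hops(job_xml):
    """Return (from, to) pairs for hops whose <enabled> value is N.

    Assumes hops are <hop> elements with <from>, <to>, and <enabled> children.
    """
    root = ET.fromstring(job_xml)
    return [
        (hop.findtext("from"), hop.findtext("to"))
        for hop in root.iter("hop")
        if (hop.findtext("enabled") or "").strip().upper() == "N"
    ]


# Made-up job definition with one enabled and one disabled hop.
SAMPLE = """
<job>
  <hops>
    <hop><from>START</from><to>Load</to><enabled>Y</enabled></hop>
    <hop><from>Load</from><to>Mail</to><enabled>N</enabled></hop>
  </hops>
</job>
"""

if __name__ == "__main__":
    print(disabled_hops(SAMPLE))  # [('Load', 'Mail')]
```

A job would pass the check when the returned list is empty.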