Artificial intelligence, machine learning, and deep learning

In this section, we learn what exactly ML is and how to differentiate between learning and non-learning AI. Before that, we'll briefly introduce AI, ML, and DL.

Artificial intelligence (AI)

There are different definitions of AI. However, one of the best is John McCarthy’s definition. McCarthy coined the term artificial intelligence in one of his proposals for the 1956 Dartmouth Conference. He helped shape the field through major contributions such as the Lisp programming language, utility computing, and timesharing. According to the father of AI in What is Artificial Intelligence? (https://www-formal.stanford.edu/jmc/whatisai.pdf):

“It is the science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI does not have to confine itself to methods that are biologically observable.”

AI is about making computers, programs, or machines mimic human intelligence. As humans, we perceive the world, which is a very complex task, and we reason, generalize, plan, and interact with our surroundings. Although it is fascinating that we master these tasks within just a few years of our childhood, the most interesting aspect of our intelligence is the ability to improve the learning process and optimize performance through experience!

Unfortunately, we have barely scratched the surface of understanding our own brains, intelligence, and associated functions such as vision and reasoning. Thus, the quest to create “intelligent” machines started only recently relative to the span of civilization and written history. One of the most flourishing directions of AI has been learning-based AI.

AI can be seen as an umbrella that covers two types of intelligence: learning and non-learning AI. It is important to distinguish between AI that improves with experience and AI that does not!

For example, let’s say you want to use AI to help a physician identify a certain disease more accurately, given a set of symptoms. You can create a simple recommendation system based on some generic cases by asking domain experts (senior physicians). The pseudocode for such a system is shown in the following code block:

    // Example of non-learning AI (My AI Doctor!)
    Patient.age           // get the patient's age
    Patient.temperature   // get the patient's temperature
    Patient.night_sweats  // get whether the patient has night sweats
    Patient.cough         // get whether the patient has a cough

    // AI program starts
    if Patient.age > 70:
        if Patient.temperature > 39 and Patient.cough:
            print("Recommend Disease A")
            return
    elif Patient.age < 10:
        if Patient.temperature > 37 and not Patient.cough:
            if Patient.night_sweats:
                print("Recommend Disease B")
                return
    else:
        print("I cannot resolve this case!")
        return

This program mimics how a physician may reason in a similar scenario. Using simple if-else statements and a few lines of code, we can bring “intelligence” to our program.

Important note

This is an example of non-learning-based AI. As you may expect, the program will not evolve with experience. In other words, the logic will not improve with more patients, though the program still represents a clear form of AI.
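
To make the AI doctor concrete, the following is a minimal runnable Python sketch of the same rules. The `Patient` dataclass, the `recommend` function name, and the Celsius unit are assumptions made for illustration. Unlike the pseudocode, this sketch also returns the "cannot resolve" message when an age rule matches but the symptoms do not, which is one possible way to handle those cases:

```python
from dataclasses import dataclass


@dataclass
class Patient:
    age: int
    temperature: float  # body temperature, assumed to be in degrees Celsius
    night_sweats: bool
    cough: bool


def recommend(patient: Patient) -> str:
    """Apply fixed, hand-written rules and return a recommendation."""
    if patient.age > 70:
        if patient.temperature > 39 and patient.cough:
            return "Recommend Disease A"
    elif patient.age < 10:
        if patient.temperature > 37 and not patient.cough and patient.night_sweats:
            return "Recommend Disease B"
    # No rule matched (assumption: treat every unmatched case as unresolved).
    return "I cannot resolve this case!"


print(recommend(Patient(age=75, temperature=39.5, night_sweats=False, cough=True)))
```

No matter how many patients pass through `recommend`, its rules stay exactly as written, which is what makes it non-learning AI.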

In this section, we learned about AI and explored how to distinguish between learning and non-learning-based AI. In the next section, we will look at ML.

Machine learning (ML)

ML is a subset of AI. The key idea of ML is to enable computer programs to learn from experience. The aim is to allow programs to learn without humans having to dictate the rules. In the example of the AI doctor we saw in the previous section, the main issue is creating the rules. This process is extremely difficult, time-consuming, and error-prone. For the program to work properly, you would need to ask experienced senior physicians to express the logic they usually use to handle similar patients. In other scenarios, such as object recognition and object tracking, we do not know exactly what the rules are or what mechanisms are involved in the process.

ML offers a solution: it learns the rules that govern the process by exploring training data collected specifically for the task (see Figure 1.1):

Figure 1.1 – ML learns implicit rules from data
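
As a rough illustration of learning a rule from data rather than writing it by hand, the following sketch searches for the temperature threshold that best separates labeled examples. The function name `learn_threshold` and the training data are made up for this example, and a single threshold is far simpler than what practical ML models learn:

```python
def learn_threshold(temperatures, labels):
    """Find the threshold t for the rule `temperature > t` that gives the
    highest accuracy on the training data. Returns (threshold, accuracy)."""
    values = sorted(set(temperatures))
    # Candidate thresholds: midpoints between consecutive distinct values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best_threshold, best_accuracy = None, -1.0
    for t in candidates:
        correct = sum((temp > t) == label for temp, label in zip(temperatures, labels))
        accuracy = correct / len(labels)
        if accuracy > best_accuracy:
            best_threshold, best_accuracy = t, accuracy
    return best_threshold, best_accuracy


# Hypothetical training data: body temperature and whether the disease was present.
temperatures = [36.5, 36.8, 37.0, 37.4, 38.6, 39.0, 39.4, 40.1]
labels = [False, False, False, False, True, True, True, True]

threshold, accuracy = learn_threshold(temperatures, labels)
print(threshold, accuracy)
```

Here, the rule is not dictated by an expert. It comes from the examples, so adding or changing training data can change the learned threshold.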

ML has three major types: supervised, unsupervised, and reinforcement learning. The main difference between them comes from the nature of the training data used and the learning process itself. Which type to use usually depends on the problem and the available training data.

Deep learning (DL)

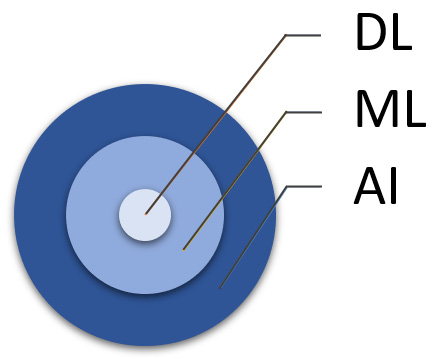

DL is a subset of ML, and it can be seen as the heart of ML (see Figure 1.2). Most of the amazing applications of ML are possible because of DL. DL learns and discovers complex patterns and structures in the training data that are usually hard to capture using other ML approaches, such as decision trees. DL learns by using artificial neural networks (ANNs) composed of multiple layers, often many of them (on the order of 10 or more). ANNs are inspired by the human brain; hence the neural in the name.

An ANN has three types of layers: input, output, and hidden. The input layer receives the input, while the output layer gives the prediction of the ANN. The hidden layers are responsible for discovering the hidden patterns in the training data. Generally, each layer (from the input to the output layers) learns a more abstract representation of the data, given the output of the previous layer. The more hidden layers your ANN has, the more complex and non-linear the ANN will be. Thus, ANNs will have more freedom to better approximate the relationship between the input and output or to learn your training data. For example, AlexNet is composed of 8 layers, VGGNet is composed of 16 to 19 layers, and ResNet-50 is composed of 50 layers.

Figure 1.2 – How DL, ML, and AI are related
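
The layer structure described above can be sketched in a few lines of plain Python. This is only an illustrative forward pass under stated assumptions: the layer sizes, the random untrained weights, the ReLU and sigmoid activations, and all function names are choices made for this example, not a description of any particular network:

```python
import math
import random


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(weights * inputs + biases)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def init_layer(n_in, n_out, rng):
    """Random weights and zero biases (untrained, for illustration only)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(x, layers):
    """Pass x through the hidden layers (ReLU), then the output layer (sigmoid)."""
    *hidden, output = layers
    for weights, biases in hidden:
        x = dense(x, weights, biases, relu)
    weights, biases = output
    return dense(x, weights, biases, sigmoid)


rng = random.Random(0)
sizes = [4, 8, 8, 1]  # 4 inputs, two hidden layers of 8 units each, 1 output
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

# Hypothetical input features, assumed to be scaled to the range [0, 1].
prediction = forward([0.7, 0.4, 1.0, 0.0], layers)
n_params = sum(len(w) * len(w[0]) + len(b) for w, b in layers)
print(prediction, n_params)
```

Each hidden layer transforms the output of the previous one, and every extra layer adds more weights and biases that training would need to adjust.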

The main issue with DL is that it requires a large-scale training dataset to converge because we usually have a tremendous number of parameters (weights) to tweak to minimize the loss. In ML, loss is a way to penalize wrong predictions. At the same time, it indicates how well the model is learning the training data. Collecting and annotating such large datasets is extremely hard and expensive.
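
To illustrate how a loss penalizes wrong predictions, the following sketch computes binary cross-entropy, one common loss for yes/no predictions, on two sets of made-up predictions. The choice of this loss, the function name, and the numbers are assumptions for illustration only:

```python
import math


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Average negative log-likelihood of the true labels under the predictions."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


labels = [1, 0, 1, 0]
good_predictions = [0.9, 0.1, 0.8, 0.2]  # mostly agree with the labels
bad_predictions = [0.2, 0.9, 0.3, 0.7]   # mostly disagree with the labels

good_loss = binary_cross_entropy(labels, good_predictions)
bad_loss = binary_cross_entropy(labels, bad_predictions)
print(good_loss, bad_loss)
```

The predictions that disagree with the labels receive a much larger loss, and training amounts to adjusting the weights so that this number goes down.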

Nowadays, using synthetic data as an alternative or complement to real data is a trending topic in both research and industry. Many companies, such as Google (Google’s Waymo utilizes synthetic data to train autonomous cars) and Microsoft (which uses synthetic data to handle privacy issues with sensitive data), have recently started investing in synthetic data to train next-generation ML models.